- Researchers & Academics: Automate and deepen research on specific topics, gathering comprehensive summaries with citations.

- Content Creators & Writers: Generate detailed background information and well-sourced content drafts for articles, blogs, or reports.

- Developers & AI Enthusiasts: Experiment with and build on a sophisticated, fully local AI agent for web research that needs no external LLM APIs.

- Students: Conduct thorough research for assignments, essays, or projects, ensuring information is well-summarized and cited.

- Data Analysts: Automate the initial information gathering phase for various analytical tasks.

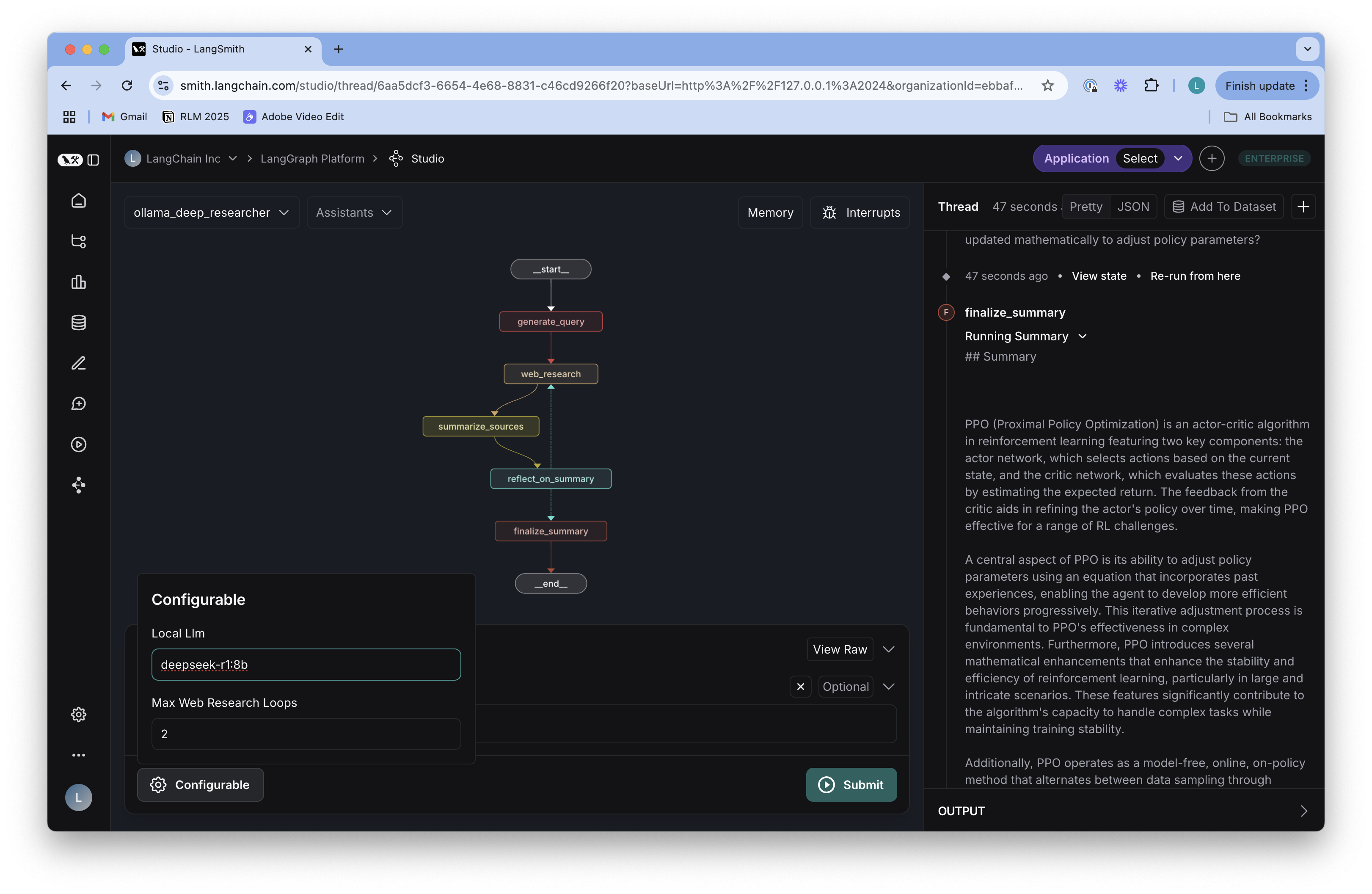

Key Features

- Local LLM Operation: Runs all LLM processing on local models (Ollama or LM Studio), which keeps prompts and intermediate reasoning on your machine and avoids external API costs. Web search itself still requires an internet connection.

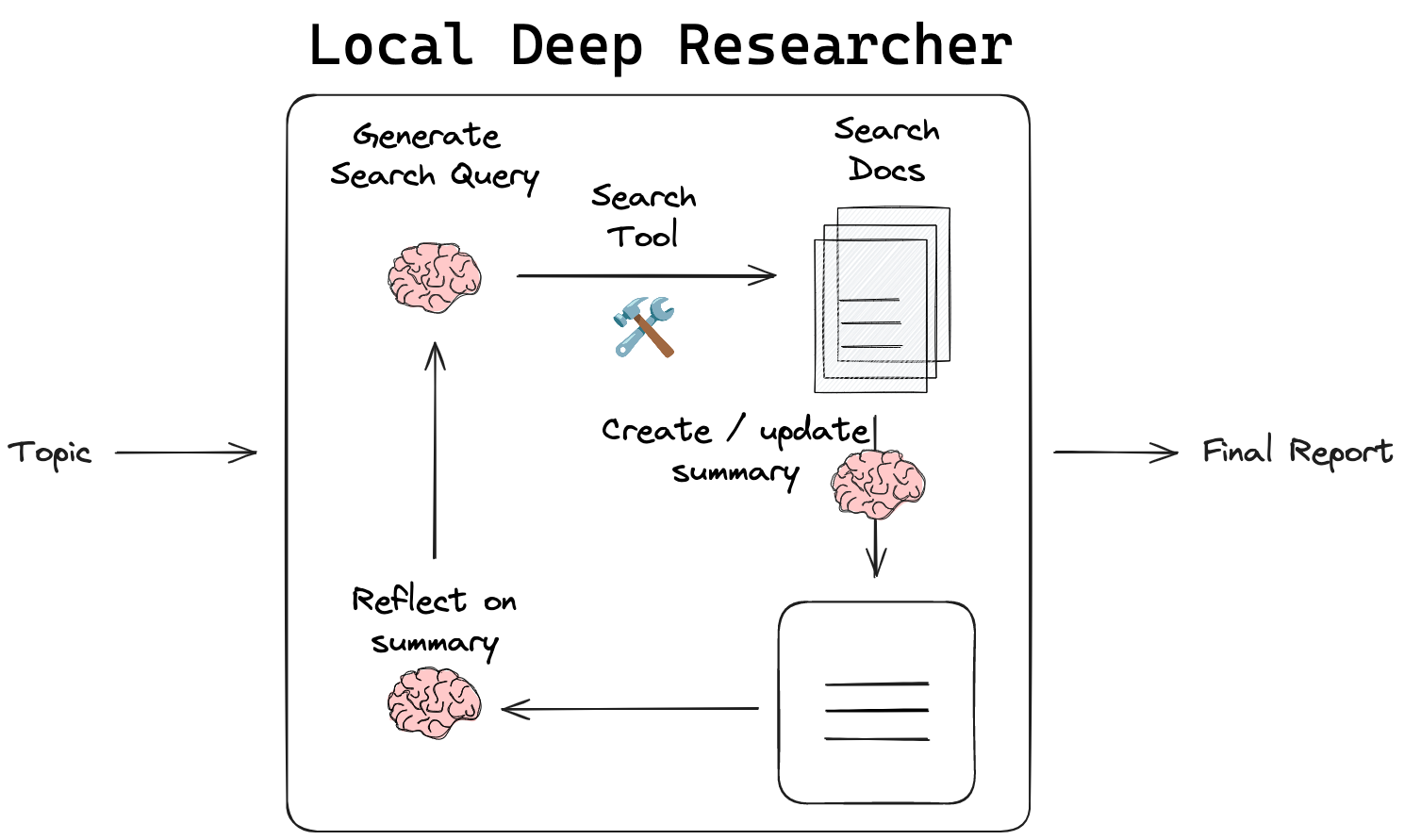

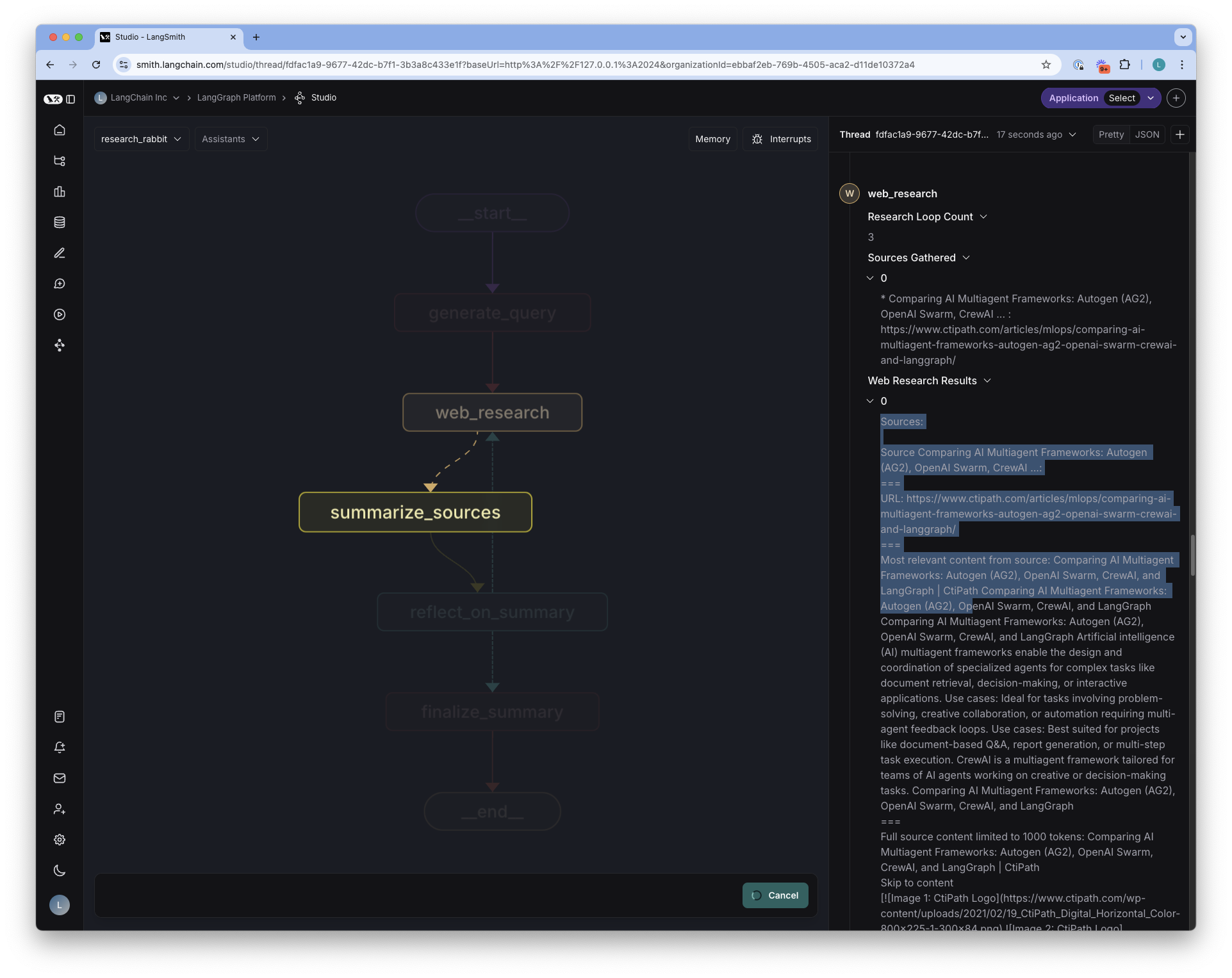

- Autonomous & Iterative Research: Runs a self-correcting loop that summarizes what it has found, identifies knowledge gaps, and generates new queries to fill them, refining its understanding of the topic with each iteration.

- Comprehensive Output: Generates a detailed markdown report that cites every source, so readers can verify the information.

- Graph-Based State Management: Tracks research progress and gathered sources in a graph state, which supports complex, multi-step reasoning.

- Customizable Research Depth: Users set the number of research iterations, which controls how deep and broad the research goes.

Pros

- Strong Data Privacy: All LLM processing stays local, which suits sensitive research topics (search queries still go to the external search tool).

- Cost-Effective: Eliminates external LLM API costs by using local models.

- Transparent Sourcing: Provides citations for all information in the final report.

- Deep & Iterative Research: Mimics human research by refining queries and filling knowledge gaps over multiple steps.

- Good Fit for Local LLM Users: Well suited to users already running Ollama or LM Studio.

Cons

- Requires Local LLM Setup: Not a plug-and-play solution; needs local LLM installation and configuration.

- Computational Demands: Running LLMs locally can be resource-intensive, requiring capable hardware.

- Search Tool Dependency: Still relies on an external search engine or tool to fetch web content.

- Learning Curve: Users need some familiarity with LangChain concepts and local LLM setup.

- No Dedicated UI (Implied): Appears to be primarily a programmatic tool that requires some coding to use.
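The iterative research cycle described above (generate a query, search, fold the results into a summary, reflect on knowledge gaps, repeat for a configurable number of iterations, then write a cited markdown report) can be sketched in plain Python. This is a minimal sketch, not the tool's actual code: `llm` and `search` are hypothetical callables standing in for a local Ollama or LM Studio model and the external search tool, `ResearchState` is a simplified stand-in for the graph state, and `max_loops` plays the role of the configurable iteration count.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical interfaces (not the tool's real API):
#   llm(prompt) -> text                              stands in for a local Ollama / LM Studio model
#   search(query) -> [(title, url, snippet), ...]    stands in for the web search tool
LLM = Callable[[str], str]
Search = Callable[[str], List[Tuple[str, str, str]]]


@dataclass
class ResearchState:
    """Simplified stand-in for the graph state: everything the loop carries forward."""
    topic: str
    query: str = ""
    summary: str = ""
    sources: List[Tuple[str, str]] = field(default_factory=list)  # (title, url)
    loops_done: int = 0


def run_research(topic: str, llm: LLM, search: Search, max_loops: int = 3) -> str:
    state = ResearchState(topic=topic)
    state.query = llm(f"Write one web search query about: {topic}")

    while state.loops_done < max_loops:
        results = search(state.query)
        # Record each source once so the final report can cite it.
        for title, url, _ in results:
            if (title, url) not in state.sources:
                state.sources.append((title, url))
        snippets = "\n".join(snippet for _, _, snippet in results)
        # Fold the new results into the running summary.
        state.summary = llm(
            f"Current summary:\n{state.summary}\n\nNew results:\n{snippets}\n\n"
            f"Rewrite the summary of '{topic}' to include the new results."
        )
        state.loops_done += 1
        # Reflect: name a knowledge gap and turn it into the next query
        # (skipped after the last iteration, when no further search will run).
        if state.loops_done < max_loops:
            state.query = llm(
                f"Summary so far:\n{state.summary}\n\n"
                "Name one knowledge gap and write a search query to fill it."
            )

    # Final markdown report with a numbered source list.
    citations = "\n".join(f"{i}. [{t}]({u})" for i, (t, u) in enumerate(state.sources, 1))
    return f"## Summary\n\n{state.summary}\n\n### Sources\n\n{citations}\n"
```

Raising `max_loops` buys more follow-up searches at the cost of more local inference time, which is the depth-versus-hardware trade-off noted in the pros and cons.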

Similar AI Tools

tavily

Tavily is a search engine optimized for large language models (LLMs) and AI agents. Its goal is to provide real-time, accurate, and unbiased information so that AI applications can retrieve and process data efficiently. Unlike traditional search APIs, Tavily returns relevant content snippets and structured data tailored to AI workflows such as Retrieval-Augmented Generation (RAG), with the aim of reducing AI hallucinations and enabling better decision-making.

jina

Jina AI is a Berlin-based software company that provides a "search foundation" platform, offering various AI-powered tools designed to help developers build the next generation of search applications for unstructured data. Its mission is to enable businesses to create reliable and high-quality Generative AI (GenAI) and multimodal search applications by combining Embeddings, Rerankers, and Small Language Models (SLMs). Jina AI's tools are designed to provide real-time, accurate, and unbiased information, optimized for LLMs and AI agents.

Perplexity AI

Perplexity AI is an AI-powered answer engine and search assistant launched in December 2022. It combines real-time web search with large language models (such as GPT-4.1, Claude 4, and Sonar), delivering direct answers with in-text citations and multi-turn conversational context.

Mistral Ministral ..

Ministral refers to Mistral AI’s “Les Ministraux” series (Ministral 3B and Ministral 8B), launched in October 2024. These are highly efficient, open-weight LLMs optimized for on-device and edge computing, with a 128K-token context window. They offer strong reasoning, knowledge, multilingual support, and function-calling capabilities, outperforming previous models in the sub-10B parameter class.

Qwen Chat

Qwen Chat is Alibaba Cloud’s conversational AI assistant built on the Qwen series (e.g., Qwen‑7B‑Chat, Qwen1.5‑7B‑Chat, Qwen‑VL, Qwen‑Audio, and Qwen2.5‑Omni). It supports text, vision, audio, and video understanding, plus image and document processing, web search integration, and image generation—all through a unified chat interface.

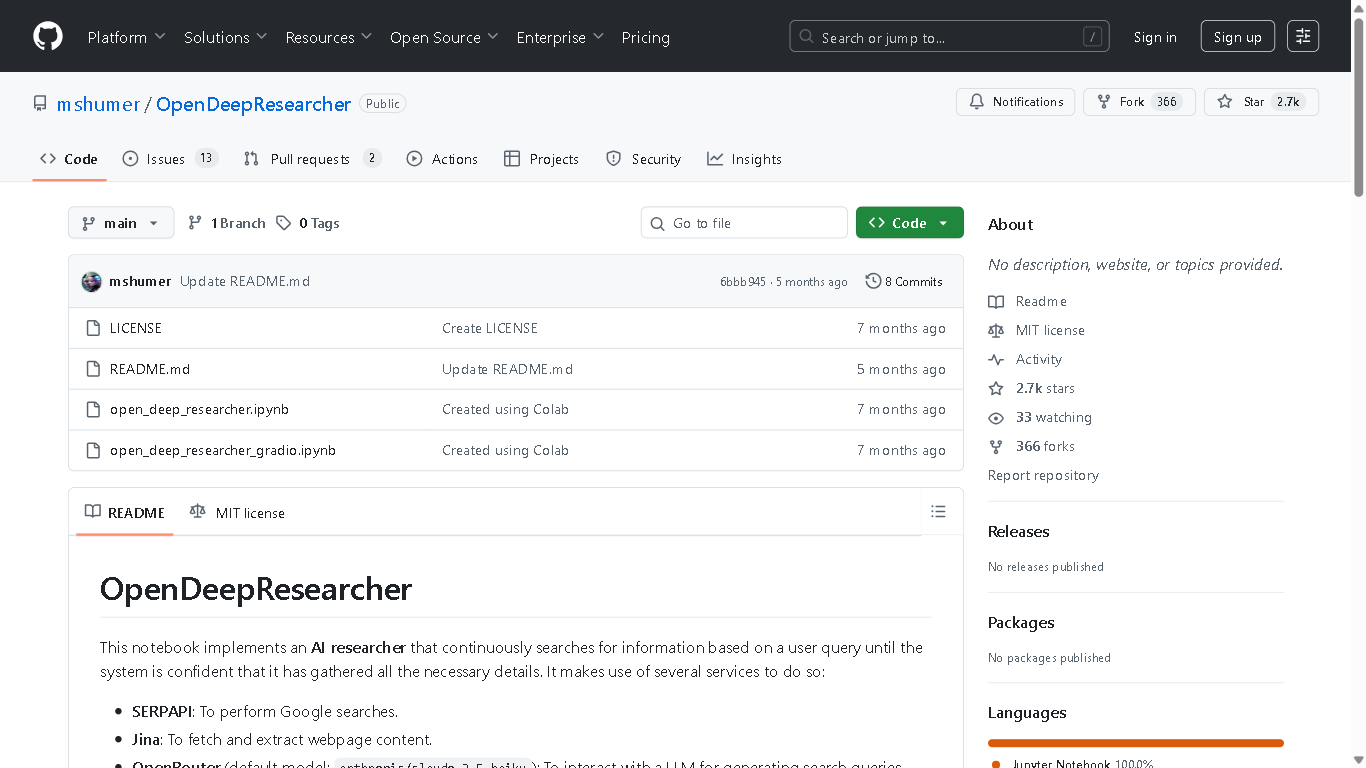

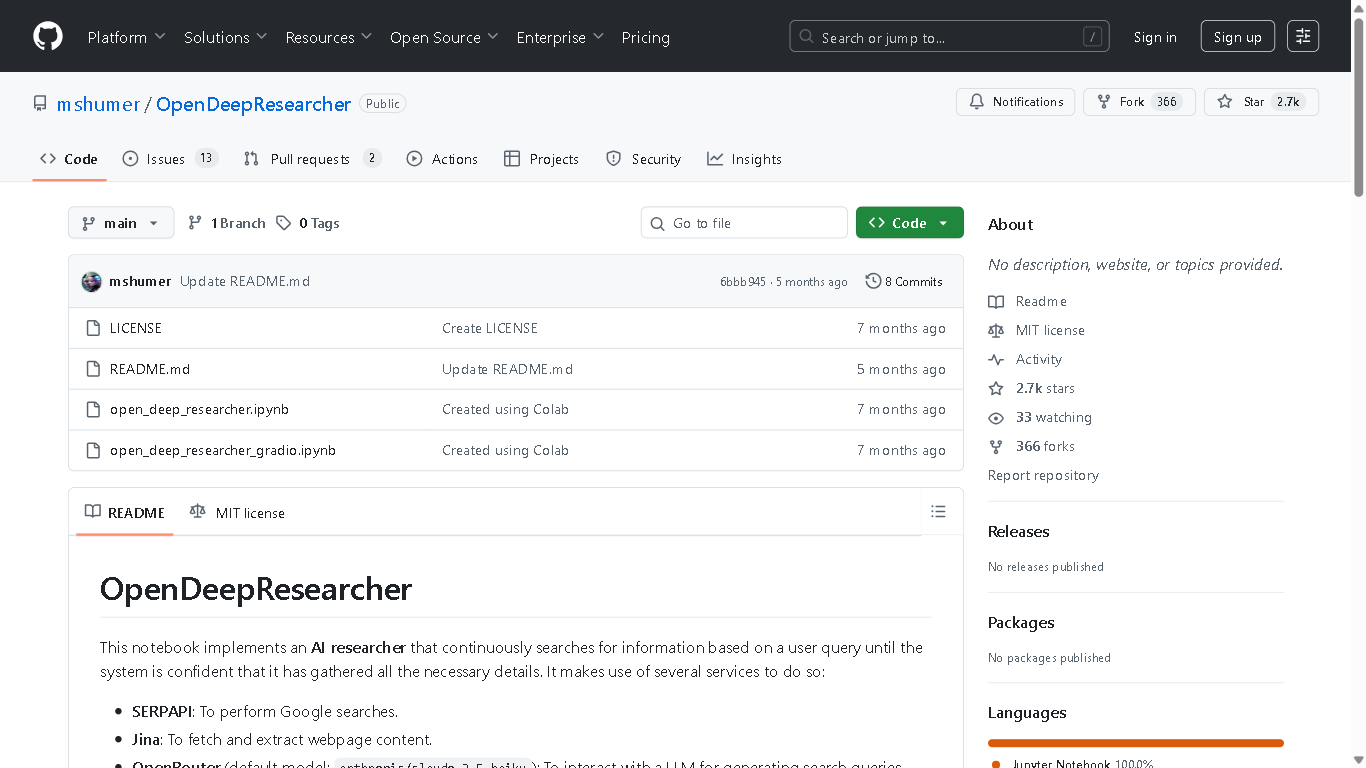

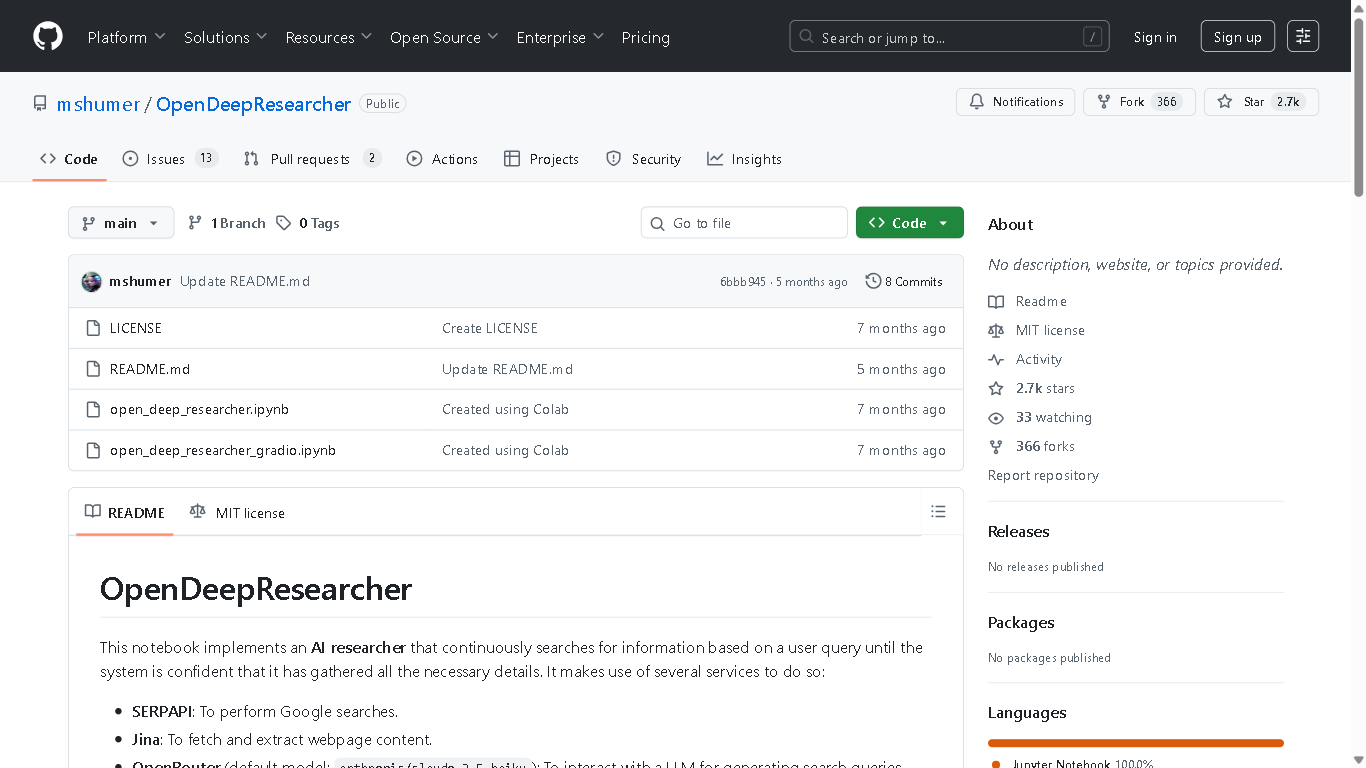

Open Deep Research..

OpenDeepResearcher is an open-source Python library designed to simplify and streamline the process of conducting deep research using large language models (LLMs). It provides a user-friendly interface for researchers to efficiently explore vast datasets, generate insightful summaries, and perform complex analyses, all powered by the capabilities of LLMs.

WebDev Arena

WebDev Arena is part of LMArena, an open, crowdsourced platform for evaluating large language models (LLMs) based on human preferences. Rather than relying purely on automated benchmarks, LMArena shows users paired responses from different models and asks them to vote for the better one. These votes feed live leaderboards that reveal which models perform best in real-world use. Key features include prompt-to-leaderboard comparison, transparent evaluation methods, style control (which adjusts rankings for formatting effects such as response length and markdown use), and auditable feedback data. The platform is particularly valuable for researchers, developers, and AI labs that want to see how their models compare when judged by real people rather than by metrics alone.
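Pairwise votes can be turned into a ranked leaderboard in several ways. The sketch below uses a simple online Elo update, one classic approach; it is an illustration only, not LMArena's own method, which has been described as relying on statistical models such as Bradley-Terry. The vote tuple format and the `k` and `base` values are assumptions chosen for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Assumed vote format: (model_a, model_b, winner) where winner is "a", "b", or "tie".
Vote = Tuple[str, str, str]


def elo_leaderboard(
    votes: Iterable[Vote], k: float = 32.0, base: float = 1000.0
) -> List[Tuple[str, float]]:
    ratings: Dict[str, float] = defaultdict(lambda: base)
    for a, b, winner in votes:
        ra, rb = ratings[a], ratings[b]
        # Expected score of model A under the Elo logistic model.
        expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / 400.0))
        score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
        # Zero-sum update: whatever A gains, B loses.
        ratings[a] = ra + k * (score_a - expected_a)
        ratings[b] = rb - k * (score_a - expected_a)
    # Highest rating first, as on a leaderboard.
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)
```

With a single vote in which `m1` beats `m2`, both start at 1000, the expected score is 0.5, and the ratings move to 1016 and 984. An online update like this depends on vote order, which is one reason batch models such as Bradley-Terry are often preferred for public leaderboards.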

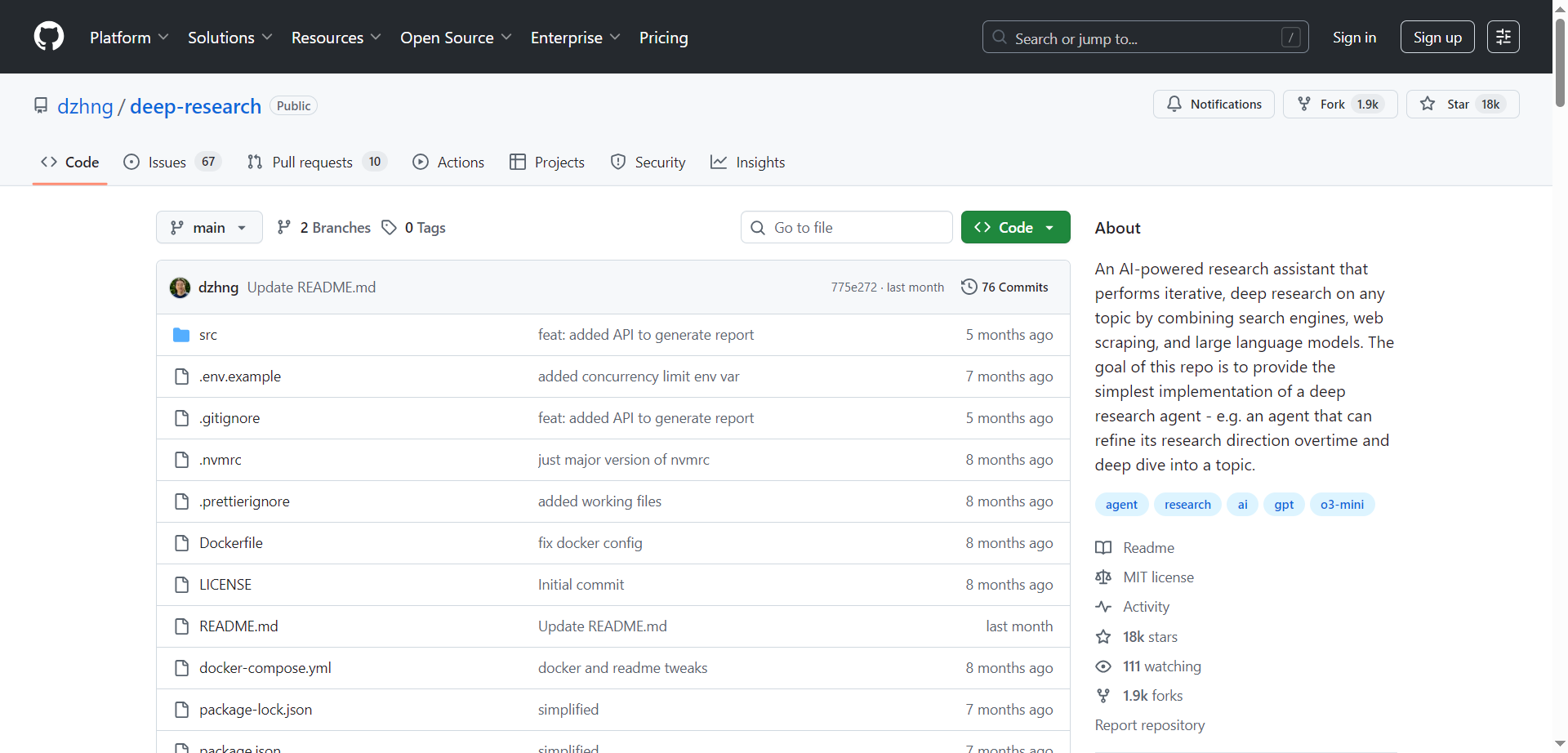

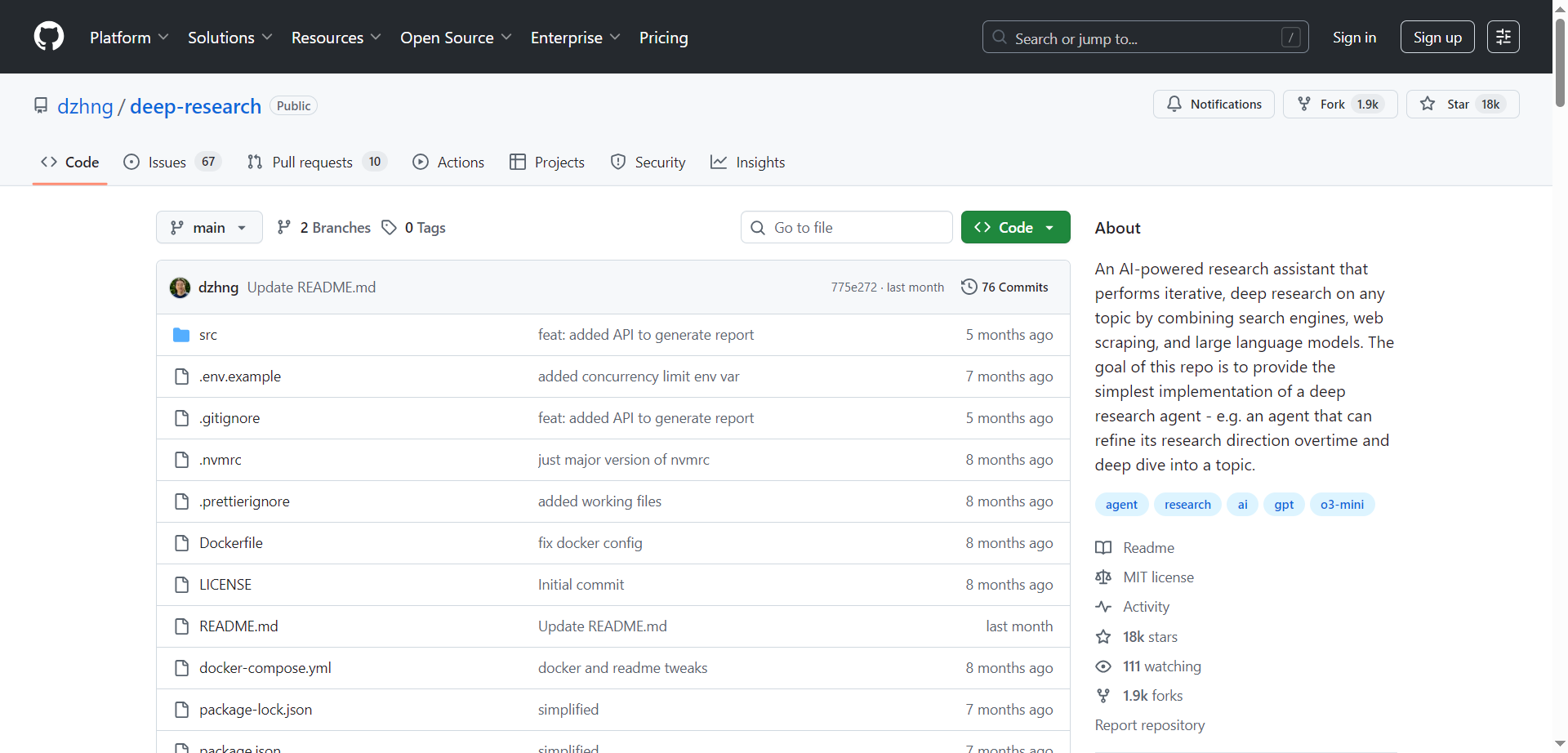

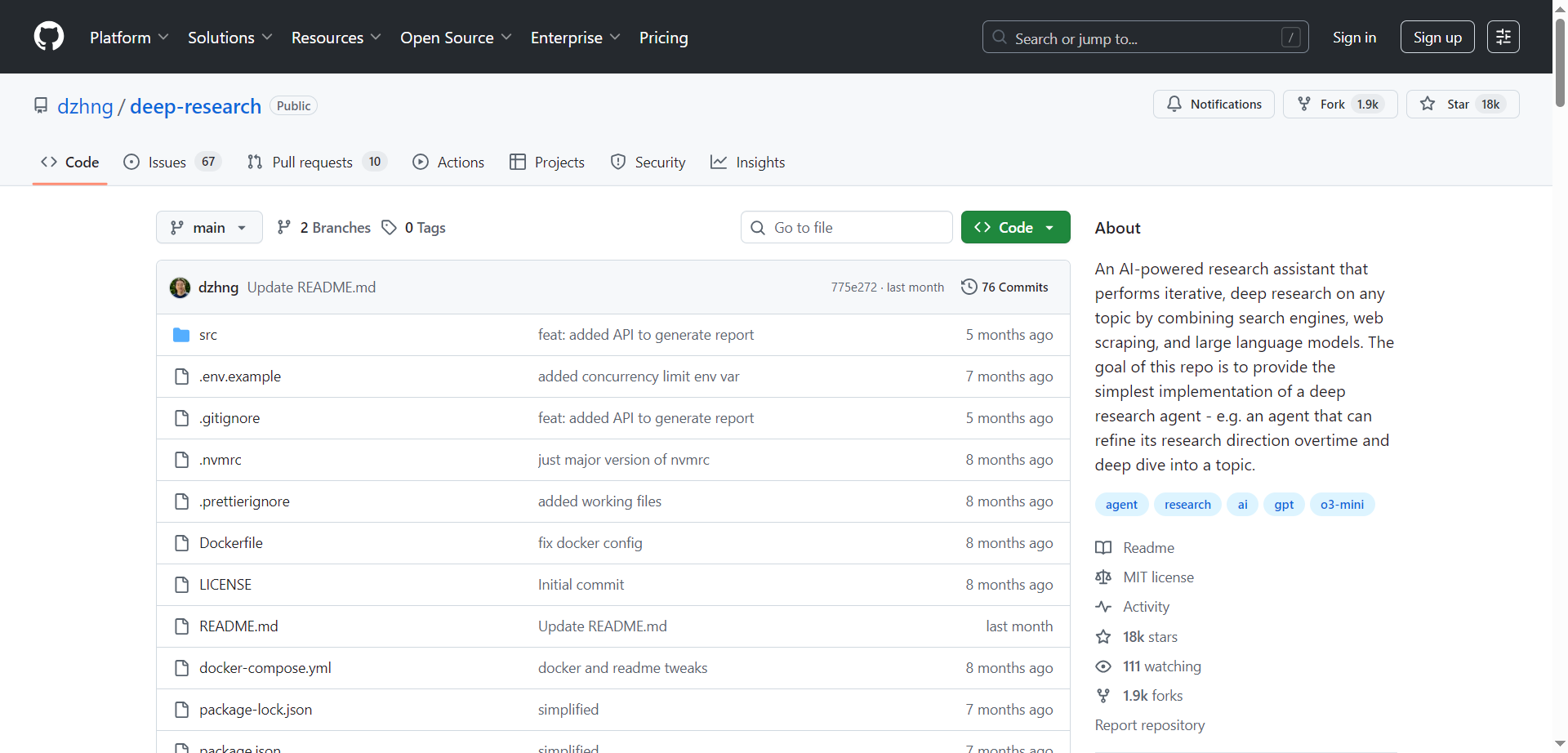

Deep Research

Deep Research is an AI-powered research assistant designed to perform iterative and in-depth exploration of any topic. It combines search engines, web scraping, and large language models to refine its research direction over multiple iterations. The system generates targeted search queries, processes results, and dives deeper based on new findings, producing comprehensive markdown reports with detailed insights and sources. Its goal is to facilitate deep understanding and knowledge discovery while keeping the implementation simple—under 500 lines of code—making it accessible for customization and building upon. Deep Research aims to streamline complex research processes through automation and intelligent analysis.
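The "dive deeper based on new findings" step can be pictured as a recursive search tree with a breadth (queries per level) and a depth (levels of follow-up). The sketch below illustrates that idea in plain Python under those assumptions; it is not the project's code. `make_queries` and `search` are hypothetical callables standing in for the LLM and the search and scraping layer, and halving the breadth at each level is a design choice to keep the tree small.

```python
from typing import Callable, List, Optional

# Hypothetical interfaces standing in for the LLM and the search/scrape layer:
#   make_queries(context, n) -> up to n new search queries
#   search(query) -> list of findings (e.g., extracted facts or snippets)
MakeQueries = Callable[[str, int], List[str]]
Search = Callable[[str], List[str]]


def deep_research(
    query: str,
    make_queries: MakeQueries,
    search: Search,
    breadth: int = 3,
    depth: int = 2,
    learnings: Optional[List[str]] = None,
) -> List[str]:
    """Collect unique findings by exploring `breadth` queries per level, `depth` levels deep."""
    learnings = [] if learnings is None else learnings
    if depth == 0:
        return learnings
    for q in make_queries(query, breadth)[:breadth]:
        findings = search(q)
        for finding in findings:
            if finding not in learnings:
                learnings.append(finding)
        # Go one level deeper on this branch, using what it just found as context,
        # with roughly half as many queries so the tree stays small.
        follow_up_context = f"{q} -> " + "; ".join(findings)
        deep_research(
            follow_up_context, make_queries, search,
            breadth=(breadth + 1) // 2, depth=depth - 1, learnings=learnings,
        )
    return learnings
```

With `breadth=3` and `depth=2` this performs 3 searches at the top level and 2 under each of them, 9 in total. The count grows quickly with depth, so keeping both parameters small matters when every step calls an LLM.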

ChatBetter

ChatBetter is an AI platform designed to unify access to all major large language models (LLMs) within a single chat interface. Built for productivity and accuracy, ChatBetter leverages automatic model selection to route every query to the most capable AI—eliminating guesswork about which model to use. Users can directly compare responses from OpenAI, Anthropic, Google, Meta, DeepSeek, Perplexity, Mistral, xAI, and Cohere models side by side, or merge answers for comprehensive insights. The system is crafted for teams and individuals alike, enabling complex research, planning, and writing tasks to be accomplished efficiently in one place.

Haystack by Deepse..

Haystack is an open-source framework developed by deepset for building production-ready search and question-answering systems powered by language models. It enables developers to connect LLMs with structured and unstructured data sources, perform retrieval-augmented generation, and create semantic search pipelines. Haystack provides flexibility to integrate various retrievers, readers, and document stores like Elasticsearch, FAISS, and Pinecone. It’s widely used for enterprise document Q&A, chatbots, and knowledge management systems, helping teams deploy scalable, high-performance AI-powered search.
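The core retrieval-augmented generation step that a Haystack pipeline wires together (retrieve the most relevant documents, then place them in the LLM prompt) can be sketched without any framework. This is not Haystack's API: the bag-of-words cosine scoring is a deliberately simple stand-in for the embedding or keyword retrievers and document stores Haystack integrates, and `retrieve` and `build_prompt` are hypothetical helper names.

```python
import math
import re
from collections import Counter
from typing import List


def _vectorize(text: str) -> Counter:
    # Lowercased word counts as a crude document representation.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k documents most similar to the query."""
    q = _vectorize(query)
    ranked = sorted(docs, key=lambda d: _cosine(q, _vectorize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, docs: List[str], top_k: int = 2) -> str:
    # Number the retrieved passages so the model can cite them.
    context = "\n".join(f"[{i}] {d}" for i, d in enumerate(retrieve(query, docs, top_k), 1))
    return (
        "Answer using only the context below and cite sources by number.\n\n"
        f"{context}\n\nQuestion: {query}"
    )
```

The resulting prompt would then be sent to whichever LLM the pipeline uses; swapping `_cosine` for embedding similarity is the usual upgrade when exact word overlap is too brittle.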

Fetch.ai

Fetch.ai is a decentralized AI platform built to power the emerging agentic economy by enabling autonomous AI agents to interact, transact, and collaborate across digital and real-world environments. The platform combines blockchain technology with advanced machine learning to create a network where millions of AI agents operate independently yet connect seamlessly to solve complex tasks such as supply chain automation, personalized services, and data sharing. Fetch.ai offers a complete technology stack, including personal AI assistants, developer tools, and business automation solutions, designed for real-world impact. Its open ecosystem supports flexibility, privacy, and interoperability, empowering users and enterprises to build, discover, and transact through intelligent, autonomous AI agents.

Typing Mind

TypingMind is a powerful frontend for large language models, giving users a clean, customizable interface to interact with AI more efficiently. It enhances the user experience by offering advanced features such as conversation organization, prompt management, model switching, and private local usage options. TypingMind provides a more flexible and user-friendly environment than standard AI chat interfaces, allowing users to optimize workflows, manage sessions, and personalize interactions. It is built for individuals and teams who want full control over how they use LLMs without relying on default chat UIs.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai