- AI Developers & Engineers: The primary audience, utilizing Jina AI's APIs and SDKs to build custom neural search, RAG (Retrieval-Augmented Generation), and AI agent systems.

- Businesses Building AI Solutions: Companies looking to integrate advanced search capabilities into their products, improve data retrieval for LLMs, or create more intelligent applications.

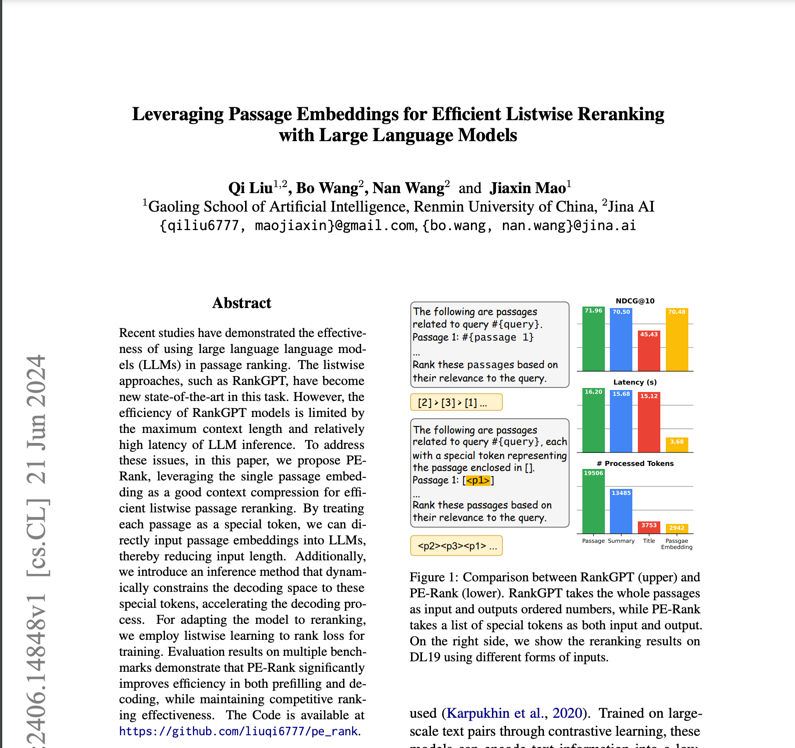

- Researchers & Data Scientists: Explore and implement state-of-the-art embedding models, rerankers, and classification techniques for multimodal data.

- Content Creators & Media Organizations: Transform vast multimedia assets into searchable knowledge, streamline internal research, and enrich user experiences.

- E-commerce & Retail Leaders: Deliver precise product recommendations and in-depth search experiences for global product catalogs.

- Financial Firms & Consultancies: Gain real-time insights from large-scale data cleaning and domain-specific model training.

- Search Foundation for AI: Jina AI positions itself as a "search foundation" optimized for LLMs and AI agents, specifically built to handle unstructured data (text, images, audio, video).

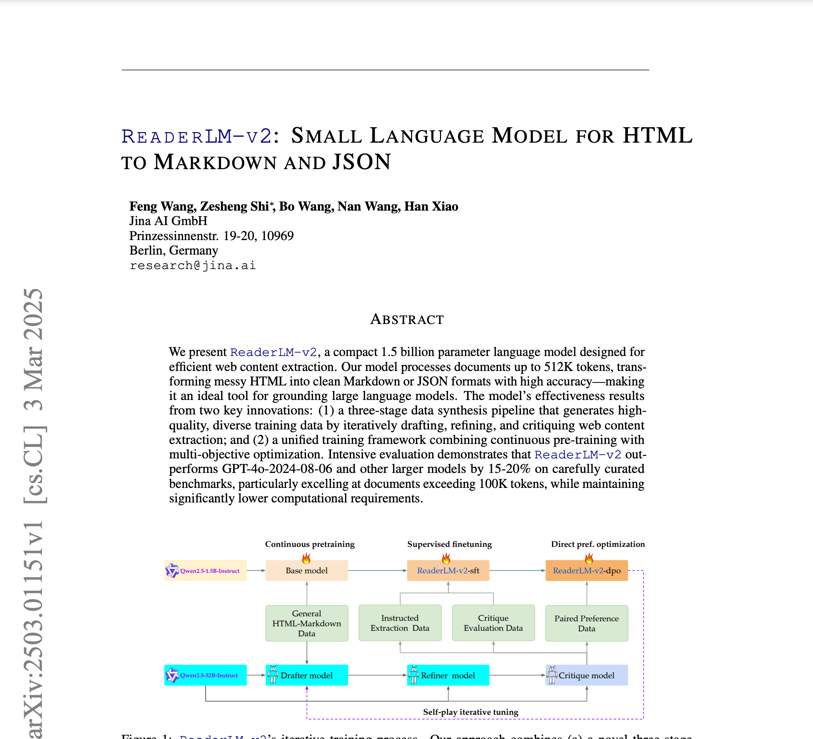

- Comprehensive Suite of Search Tools: Offers a modular ecosystem of highly specialized tools like Reader (URL to Markdown), Embeddings (multimodal, multilingual), Reranker (relevance maximization), DeepSearch (iterative reasoning search), Classifier, and Segmenter.

- Designed for RAG (Retrieval-Augmented Generation): Its components are specifically engineered to improve the context and relevance of information fed into LLMs, directly addressing the challenge of AI hallucinations.

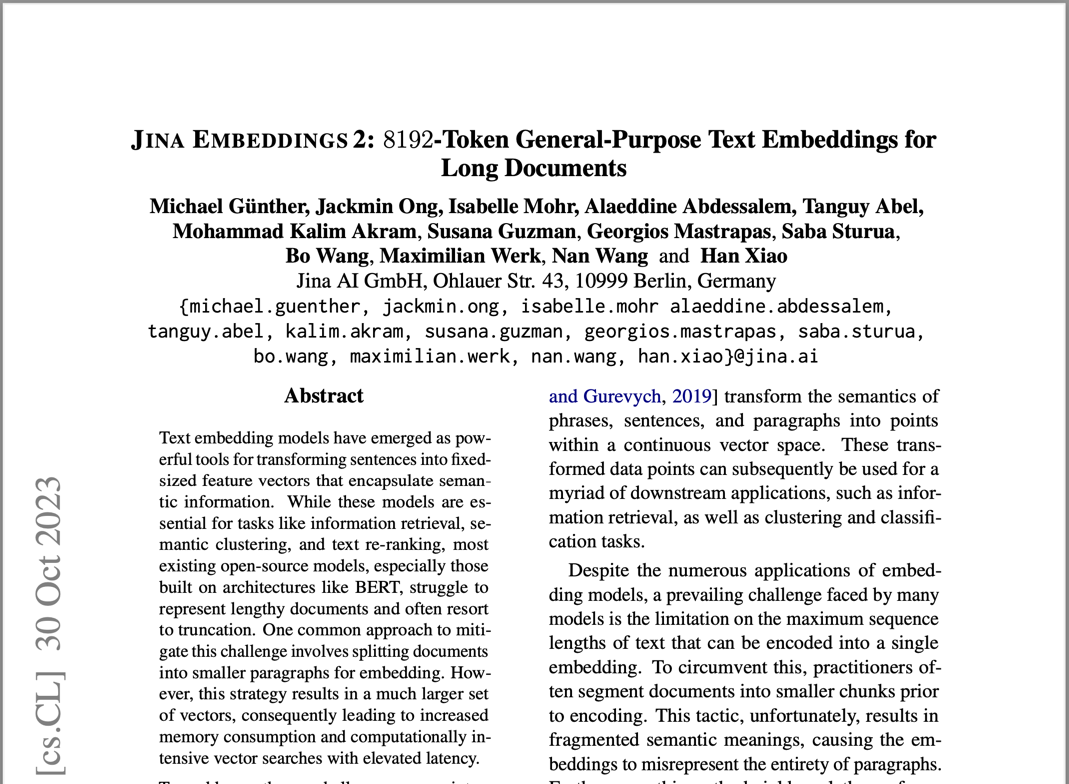

- Multimodal & Multilingual Capabilities: Jina Embeddings v3, for instance, is a multilingual text embedding model, while image-text search is covered by separate multimodal models such as Jina CLIP.

- Open-Source Roots & Enterprise Readiness: While offering hosted APIs, Jina AI also has a strong open-source presence (e.g., on GitHub).

- Holistic Approach to AI Search: Instead of just one feature, Jina AI offers a complete toolkit for building sophisticated search and RAG systems.

- Directly Addresses LLM Limitations: Its focus on grounding LLMs with accurate and relevant real-time data is critical for advanced AI applications.

- High-Quality Models: Jina AI describes its embeddings and rerankers as "world-class" and publishes detailed model performance data (e.g., on the efficiency of Jina Embeddings v3), which suggests strong underlying AI capabilities.

- Developer-Centric: Provides APIs, SDKs, and clear documentation, empowering developers to build complex AI solutions.

- Flexible Deployment: Options for hosted APIs and on-premise deployment cater to various business needs and security requirements.

- Multimodality: The ability to handle text, images, and potentially other data types for search and retrieval is a significant advantage.

- Complexity for Beginners: While powerful, the platform is geared towards AI developers and might have a steeper learning curve for those new to neural search or RAG concepts.

- Cost for High Usage: While a free tier exists, extensive commercial use will involve API costs based on requests and token usage.

- Not a Direct End-User Product: It's an infrastructure layer for building AI applications, meaning end-users won't directly interact with "Jina AI" but rather with applications powered by it.

- Abstract Concepts: Terms like "Embeddings," "Reranker," and "DeepSearch" require a certain level of technical understanding before their utility becomes clear.
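
To make two of these terms more concrete, here is a minimal sketch of the retrieve-then-rerank pattern that embeddings and rerankers are typically used for. It does not call Jina AI's API: the bag-of-words `embed` function and the word-overlap `rerank` function are toy stand-ins for real neural models, and every name and document in it is hypothetical.

```python
import math
from collections import Counter

def embed(text, vocab):
    """Toy 'embedding': one word count per vocabulary entry (stand-in for a neural model)."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocab]

def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, docs, vocab, k):
    """Stage 1: score every document by embedding similarity and keep the top k."""
    q_vec = embed(query, vocab)
    scored = [(cosine(q_vec, embed(doc, vocab)), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def rerank(query, candidates):
    """Stage 2: reorder the shortlist by the fraction of query words each document contains.
    A real reranker would score each (query, document) pair with a trained model instead."""
    q_words = set(query.lower().split())

    def overlap(doc):
        return len(q_words & set(doc.lower().split())) / len(q_words)

    return sorted(candidates, key=overlap, reverse=True)

# Hypothetical mini-corpus, for illustration only.
docs = [
    "a reader converts a url to markdown",
    "embeddings map text to vectors",
    "a reranker reorders search results by relevance",
    "bananas are yellow",
]
vocab = sorted({word for doc in docs for word in doc.split()})
query = "reranker search relevance"

top = rerank(query, retrieve(query, docs, vocab, k=2))
print(top[0])
```

The split into two stages reflects a common design choice: a cheap similarity search narrows a large corpus to a shortlist, and a more expensive scorer then orders only that shortlist before it is passed to an LLM.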

Similar AI Tools

Tavily

Tavily is a specialized search engine meticulously optimized for Large Language Models (LLMs) and AI agents. Its primary goal is to provide real-time, accurate, and unbiased information, significantly enhancing the ability of AI applications to retrieve and process data efficiently. Unlike traditional search APIs, Tavily focuses on delivering highly relevant content snippets and structured data that are specifically tailored for AI workflows like Retrieval-Augmented Generation (RAG), aiming to reduce AI hallucinations and enable better decision-making.

LangChain AI

LangChain AI Local Deep Researcher is an autonomous, fully local web research assistant designed to conduct in-depth research on user-provided topics. It leverages local Large Language Models (LLMs) hosted by Ollama or LM Studio to iteratively generate search queries, summarize findings from web sources, and refine its understanding by identifying and addressing knowledge gaps. The final output is a comprehensive markdown report with citations to all sources.

Perplexity AI

Perplexity AI is a powerful AI‑powered answer engine and search assistant launched in December 2022. It combines real‑time web search with large language models (like GPT‑4.1, Claude 4, Sonar), delivering direct answers with in‑text citations and multi‑turn conversational context.

LM Arena

LMArena is a platform designed to allow users to contribute to the development of AI through collective feedback. Users interact with and provide feedback on various Large Language Models (LLMs) by voting on their responses, thereby helping to shape and improve AI capabilities. The platform fosters a global community and features a leaderboard to showcase user contributions.

Ducky

Ducky is a fully managed AI-powered retrieval platform built to simplify semantic search and Retrieval-Augmented Generation (RAG) for developers. It abstracts away the complexity of indexing, chunking, embedding, reranking, and vector databases—letting you focus on building AI applications rather than managing infrastructure. With a developer-first approach, Ducky offers a simple SDK in Python and TypeScript, fast indexing, low-latency retrieval, and high relevance via multi-step pipelines. It's especially tuned for use in LLM agents, ensuring context-aware, hallucination-resistant responses. Designed for ease and speed, you can go from zero to search-enabled app in minutes. The platform includes a generous free tier and clear, tiered pricing as you scale. Whether you’re building customer support tools, knowledge agents, or AI-enhanced search features, Ducky handles the infrastructure so developers can build smarter.

Dotlane

Dotlane is an all-in-one AI assistant platform that brings together multiple leading AI models under a single, user-friendly interface. Instead of subscribing to or switching between different providers, users can access models from OpenAI, Anthropic, Grok, Mistral, Deepseek, and others in one place. It offers a wide range of features including advanced chat, file understanding and summarization, real-time search, and image generation. Dotlane’s mission is to make powerful AI accessible, fair, and transparent for individuals and teams alike.

WebDev Arena

LMArena is an open, crowdsourced platform for evaluating large language models (LLMs) based on human preferences. Rather than relying purely on automated benchmarks, it presents paired responses from different models to users, who vote for which is better. These votes build live leaderboards, revealing which models perform best in real-use scenarios. Key features include prompt-to-leaderboard comparison, transparent evaluation methods, style control for how responses are formatted, and auditability of feedback data. The platform is particularly valuable for researchers, developers, and AI labs that want to understand how their models compare when judged by real people, not just metrics.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

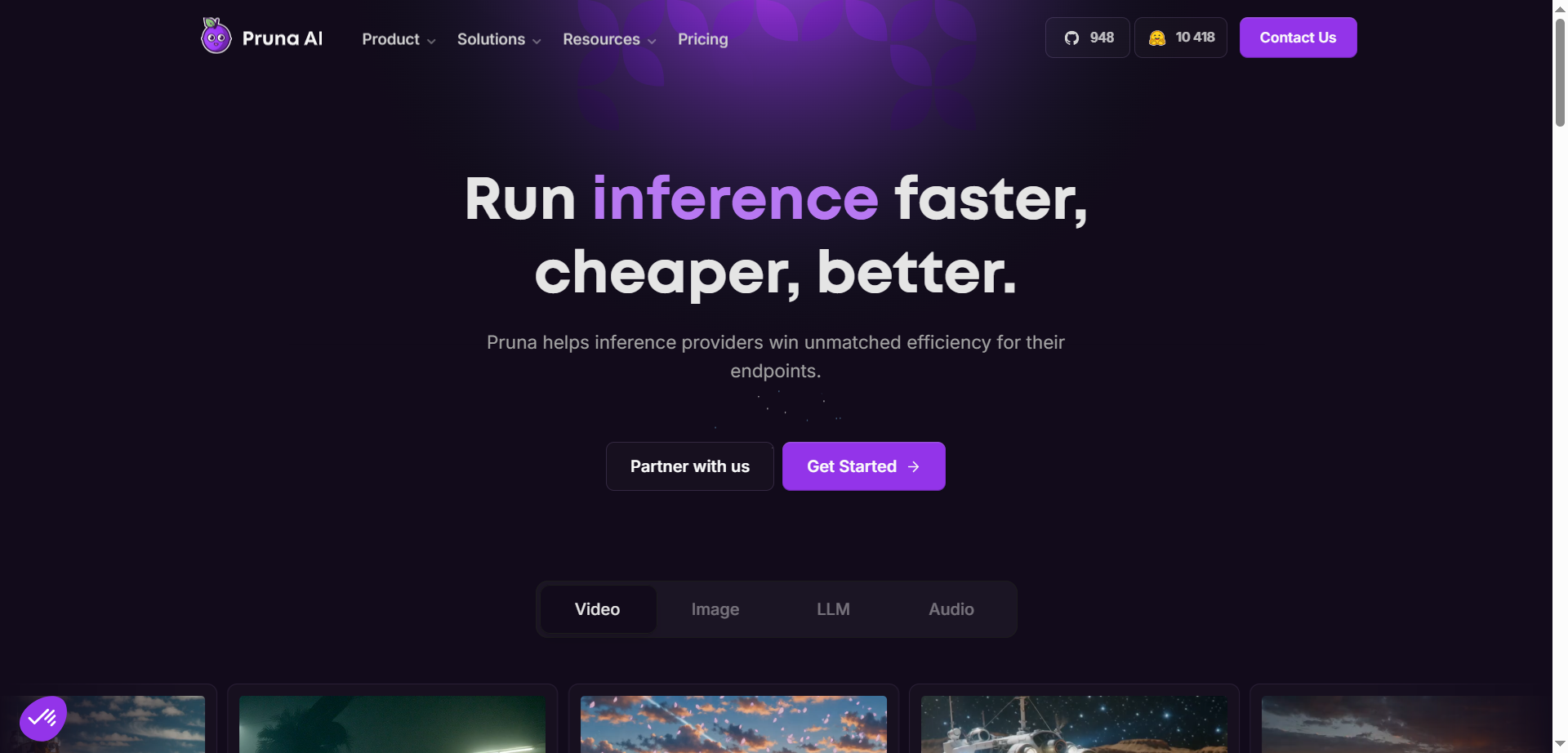

Pruna AI

Pruna.ai is an AI optimization engine designed to make machine learning models faster, smaller, cheaper, and greener with minimal overhead. It leverages advanced compression algorithms like pruning, quantization, distillation, caching, and compilation to reduce model size and accelerate inference times. The platform supports various AI models including large language models, vision transformers, and speech recognition models, making it ideal for real-time applications such as autonomous systems and recommendation engines. Pruna.ai aims to lower computational costs, decrease energy consumption, and improve deployment scalability across cloud and on-premise environments while ensuring minimal loss of model quality.

Haystack by Deepse..

Haystack is an open-source framework developed by deepset for building production-ready search and question-answering systems powered by language models. It enables developers to connect LLMs with structured and unstructured data sources, perform retrieval-augmented generation, and create semantic search pipelines. Haystack provides flexibility to integrate various retrievers, readers, and document stores like Elasticsearch, FAISS, and Pinecone. It’s widely used for enterprise document Q&A, chatbots, and knowledge management systems, helping teams deploy scalable, high-performance AI-powered search.

Mobisoft Infotech

MI Team AI is a robust multi-LLM platform designed for enterprises seeking secure, scalable, and cost-effective AI access. It consolidates multiple AI models such as ChatGPT, Claude, Gemini, and various open-source large language models into a single platform, enabling users to switch seamlessly without juggling different tools. The platform supports deployment on private cloud or on-premises infrastructure to ensure complete data privacy and compliance. MI Team AI provides a unified workspace with role-based access controls, single sign-on (SSO), and comprehensive chat logs for transparency and auditability. It offers fixed licensing fees allowing unlimited team access under the company’s brand, making it ideal for organizations needing full control over AI usage.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai