- AI Enthusiasts: Individuals passionate about artificial intelligence who want to directly contribute to its advancement.

- AI Researchers: Academics and researchers who can use the collective feedback data to gain insights into AI performance and user preferences.

- Developers of LLMs: Organizations and individuals creating AI models who can receive valuable feedback to iterate and improve their creations.

- Anyone Interested in AI: Users curious about how different AI models perform and wishing to compare them side-by-side.

How to Use LMArena?

- Engage with AI Models: Interact with various AI models by submitting prompts or questions.

- Provide Feedback: Compare the responses from different AI models and cast your vote for the preferred response.

- Contribute to AI Training: Your votes and interactions provide valuable data that helps train and improve AI models.

- Track Your Contribution: Participate in the global community and see your contributions reflected on the leaderboard.

- Crowdsourced AI Improvement: LMArena leverages collective user feedback on a massive scale (over 3.5 million votes) to directly influence AI development.

- Direct Impact on AI: Users have a tangible way to shape the future capabilities of AI by voting on model responses.

- Comparative AI Testing: The platform allows users to compare different AI models side-by-side, offering a unique perspective on their strengths and weaknesses.

- Gamified Contribution: The inclusion of a leaderboard adds a gamified element, encouraging ongoing participation from its community.

- Direct Impact on AI: Users can directly contribute to improving AI models, which is a powerful way to engage with the technology.

- Model Comparison: The platform provides an excellent opportunity to test and compare different AI models in real-world scenarios.

- Community Engagement: The global community and leaderboard foster a sense of participation and healthy competition.

- Data Privacy Concerns: User conversations and certain personal information will be disclosed to AI providers and may be shared publicly for research purposes. Users are explicitly warned not to submit sensitive information.

- AI Inaccuracy Warning: The platform states that inputs are processed by third-party AI models and that responses may be inaccurate. This is a standard disclaimer, but still worth noting.

Paid

custom

Similar AI Tools

LangChain AI

LangChain AI Local Deep Researcher is an autonomous, fully local web research assistant designed to conduct in-depth research on user-provided topics. It leverages local Large Language Models (LLMs) hosted by Ollama or LM Studio to iteratively generate search queries, summarize findings from web sources, and refine its understanding by identifying and addressing knowledge gaps. The final output is a comprehensive markdown report with citations to all sources.

Meta Llama 4

Meta Llama 4 is the latest generation of Meta's large language model series. It uses a mixture-of-experts (MoE) architecture, which keeps inference efficient relative to total model size. Llama 4 is natively multimodal, supporting text and image inputs, and comes in three variants: Scout (17B active parameters, 10M-token context), Maverick (17B active parameters, 1M-token context), and Behemoth (288B active parameters, about 2T total parameters; still in development). It is designed for long-context reasoning and multilingual understanding, with open-weight availability subject to license restrictions.

Mistral Saba

Mistral Saba is a 24 billion‑parameter regional language model launched by Mistral AI on February 17, 2025. Designed for native fluency in Arabic and South Asian languages (like Tamil, Malayalam, and Urdu), it delivers culturally-aware responses on single‑GPU systems—faster and more precise than much larger general models.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI o1, a series of AI models. These models are designed for complex reasoning and problem-solving in areas such as science, coding, and math. They take a "think more before responding" approach: trying different strategies and recognizing their own mistakes.

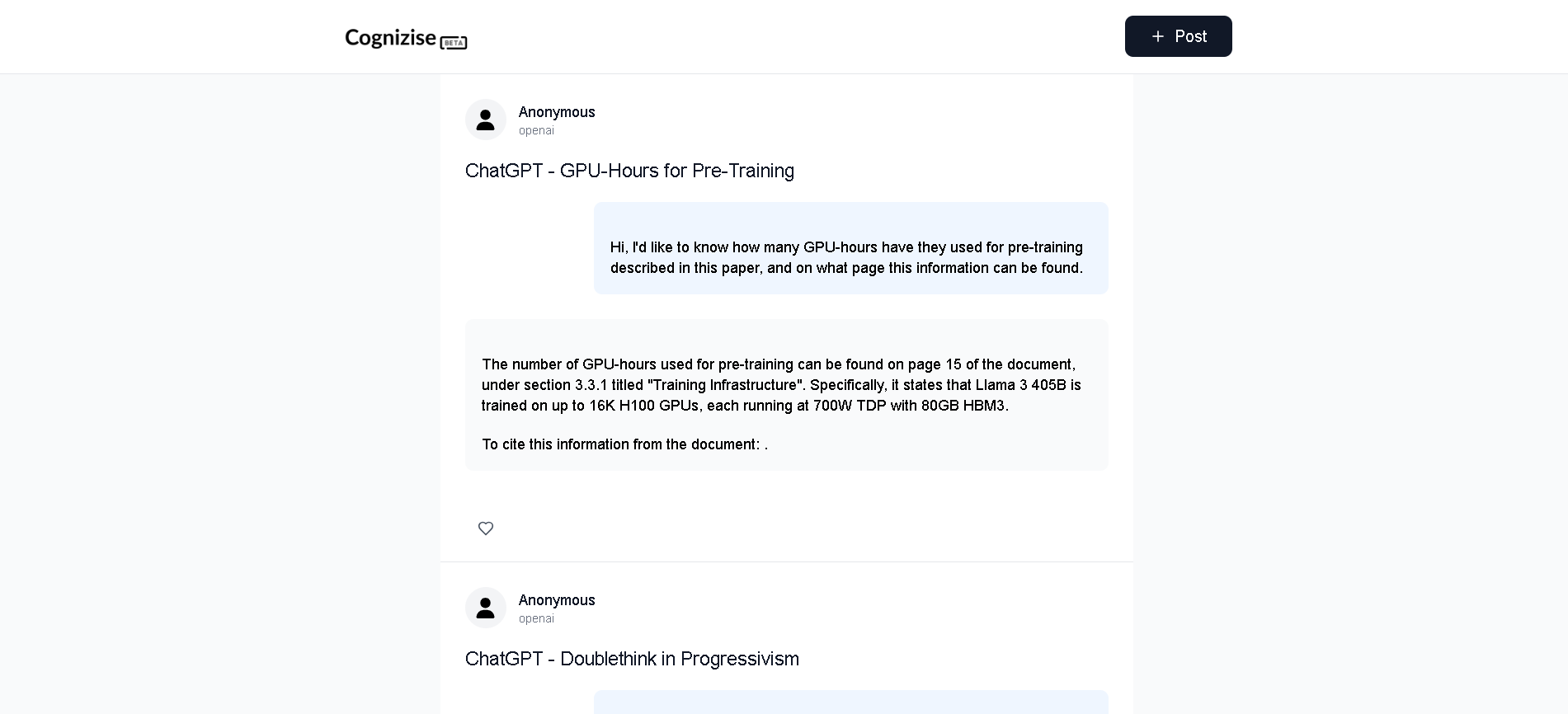

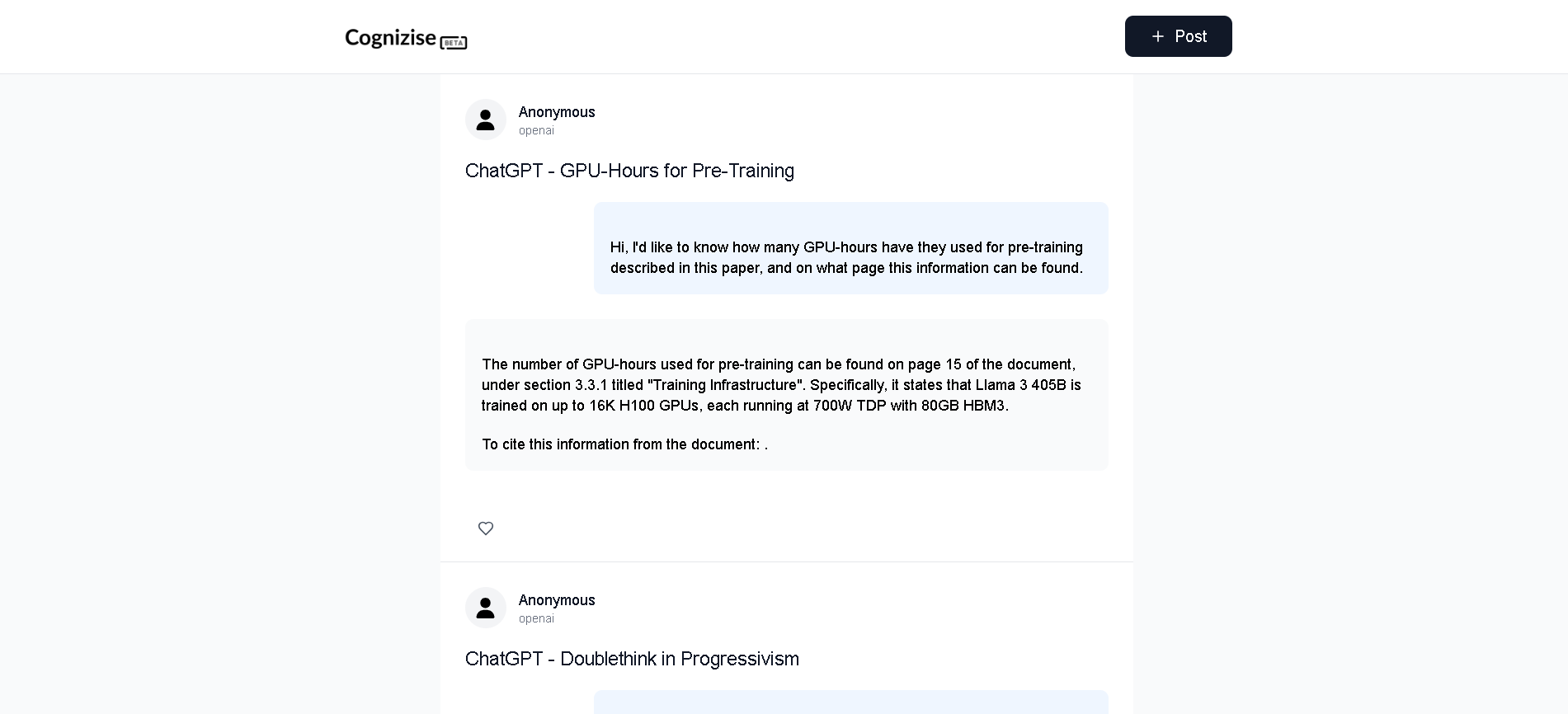

Cognizise

Cognizise.com is an AI-powered platform that allows users to ask questions and receive AI-generated answers on a wide variety of topics. It serves as a comprehensive resource for exploring AI-generated content, ranging from technical queries to practical advice and discussions on current events.

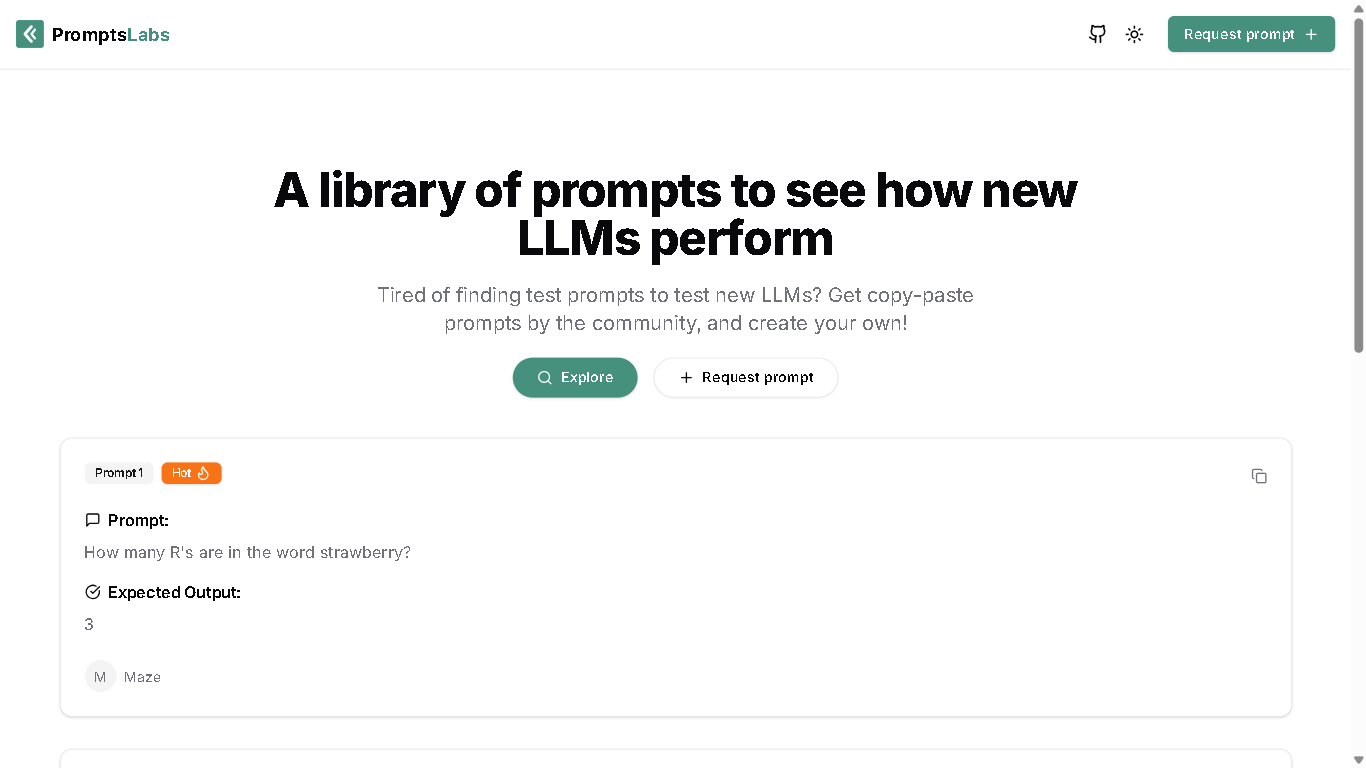

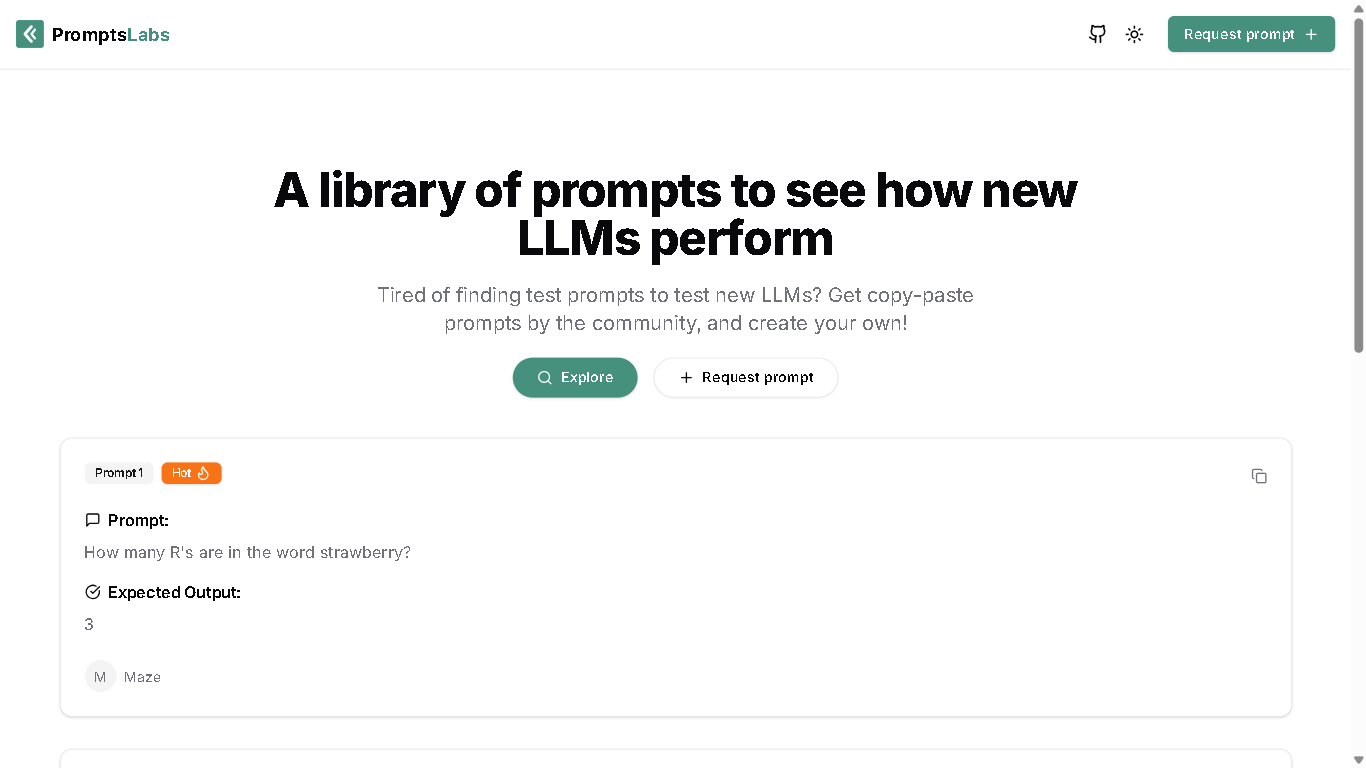

PromptsLabs

PromptsLabs is an open-source library of curated prompts designed to test and evaluate the performance of large language models (LLMs). It allows users to explore, contribute, and request prompts to better understand LLM capabilities.

ChatBetter

ChatBetter is an AI platform designed to unify access to all major large language models (LLMs) within a single chat interface. Built for productivity and accuracy, ChatBetter leverages automatic model selection to route every query to the most capable AI—eliminating guesswork about which model to use. Users can directly compare responses from OpenAI, Anthropic, Google, Meta, DeepSeek, Perplexity, Mistral, xAI, and Cohere models side by side, or merge answers for comprehensive insights. The system is crafted for teams and individuals alike, enabling complex research, planning, and writing tasks to be accomplished efficiently in one place.

Ask Any Model

AskAnyModel is a unified AI interface that allows users to interact with multiple leading AI models — such as GPT, Claude, Gemini, and Mistral — from a single platform. It eliminates the need for multiple subscriptions and interfaces by bringing top AI models into one streamlined environment. Users can compare responses, analyze outputs, and select the best AI model for specific tasks like content creation, coding, data analysis, or research. AskAnyModel empowers individuals and teams to harness AI diversity efficiently, offering advanced tools for prompt testing, model benchmarking, and workflow integration.

Mobisoft Infotech

MI Team AI is a robust multi-LLM platform designed for enterprises seeking secure, scalable, and cost-effective AI access. It consolidates multiple AI models such as ChatGPT, Claude, Gemini, and various open-source large language models into a single platform, enabling users to switch seamlessly without juggling different tools. The platform supports deployment on private cloud or on-premises infrastructure to ensure complete data privacy and compliance. MI Team AI provides a unified workspace with role-based access controls, single sign-on (SSO), and comprehensive chat logs for transparency and auditability. It offers fixed licensing fees allowing unlimited team access under the company’s brand, making it ideal for organizations needing full control over AI usage.

Prompts AI

Prompts.ai is an enterprise-grade AI platform designed to streamline, optimize, and govern generative AI workflows and prompt engineering across organizations. It centralizes access to over 35 large language models (LLMs) and AI tools, allowing teams to automate repetitive workflows, reduce costs, and boost productivity by up to 10 times. The platform emphasizes data security and compliance with standards such as SOC 2 Type II, HIPAA, and GDPR. It supports enterprises in building custom AI workflows, ensuring full visibility, auditability, and governance of AI interactions. Additionally, Prompts.ai fosters collaboration by providing a shared library of expert-built prompts and workflows, enabling businesses to scale AI adoption efficiently and securely.

Awan LLM

Awan LLM is a cost-effective, unlimited-token inference API platform for large language models, designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription that lets users send and receive unlimited tokens, up to each model's context limit. It supports unrestricted use of its models without censorship or constraints. The platform runs on privately owned data centers and GPUs, which allows it to offer efficient and scalable AI services. Awan LLM supports numerous use cases, including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

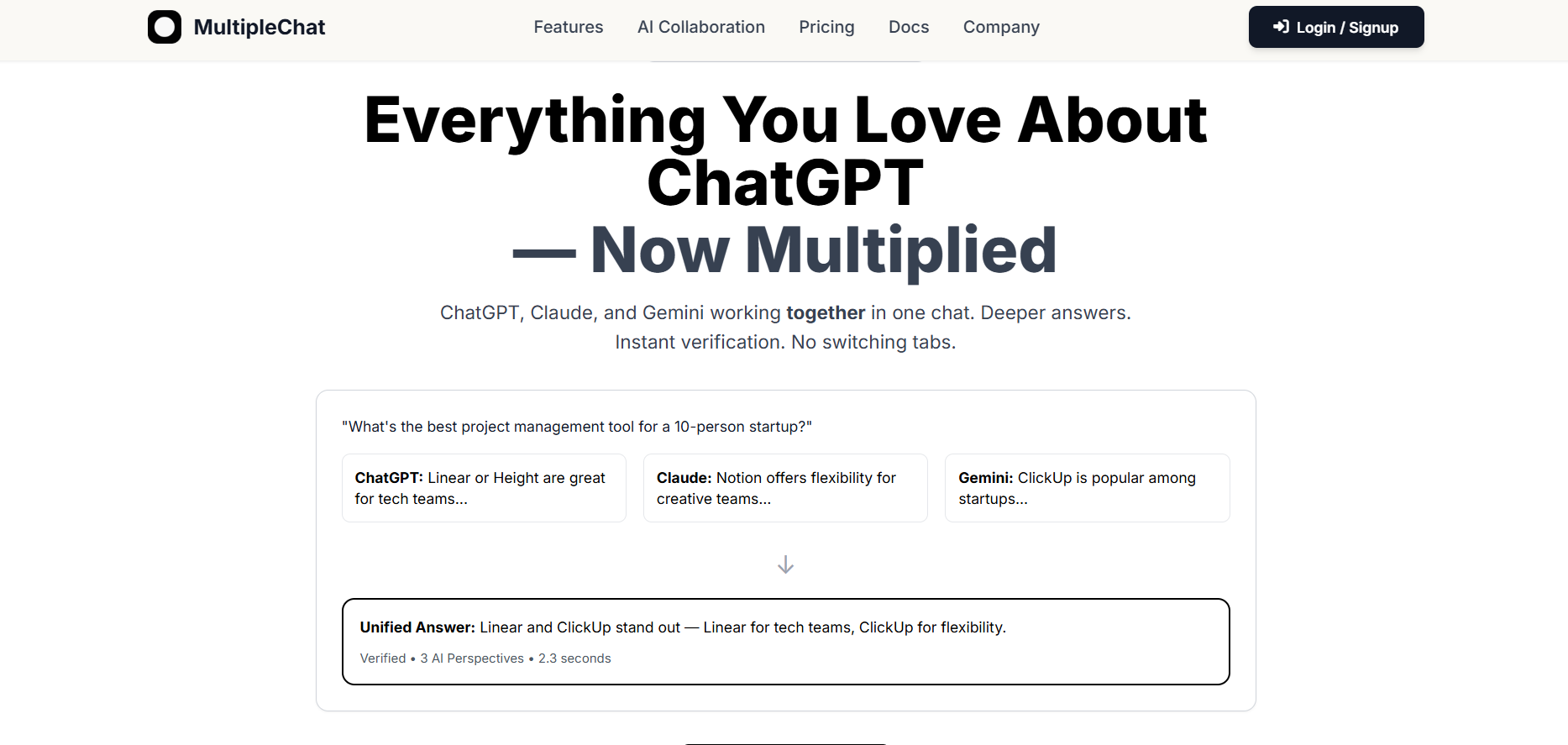

Multiple Chat

Multiple is a multi-model AI chat platform that allows users to interact with several leading AI models within a single interface. By combining responses from different AI systems, it enables deeper exploration, comparison, and verification of answers without switching tabs. The platform is designed for users who rely heavily on conversational AI and want improved accuracy, broader perspectives, and more confident outputs.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai