- Web Developers: Both new and seasoned developers can use it to rapidly prototype and generate the boilerplate code for full-stack web applications.

- Beginners & "No-Code" Users: Individuals with no coding experience can create functional micro-apps by simply describing their idea in plain language.

- AI Enthusiasts: Anyone interested in exploring the capabilities of AI in software development, particularly the speed of Groq's language processing units.

How to Use It?

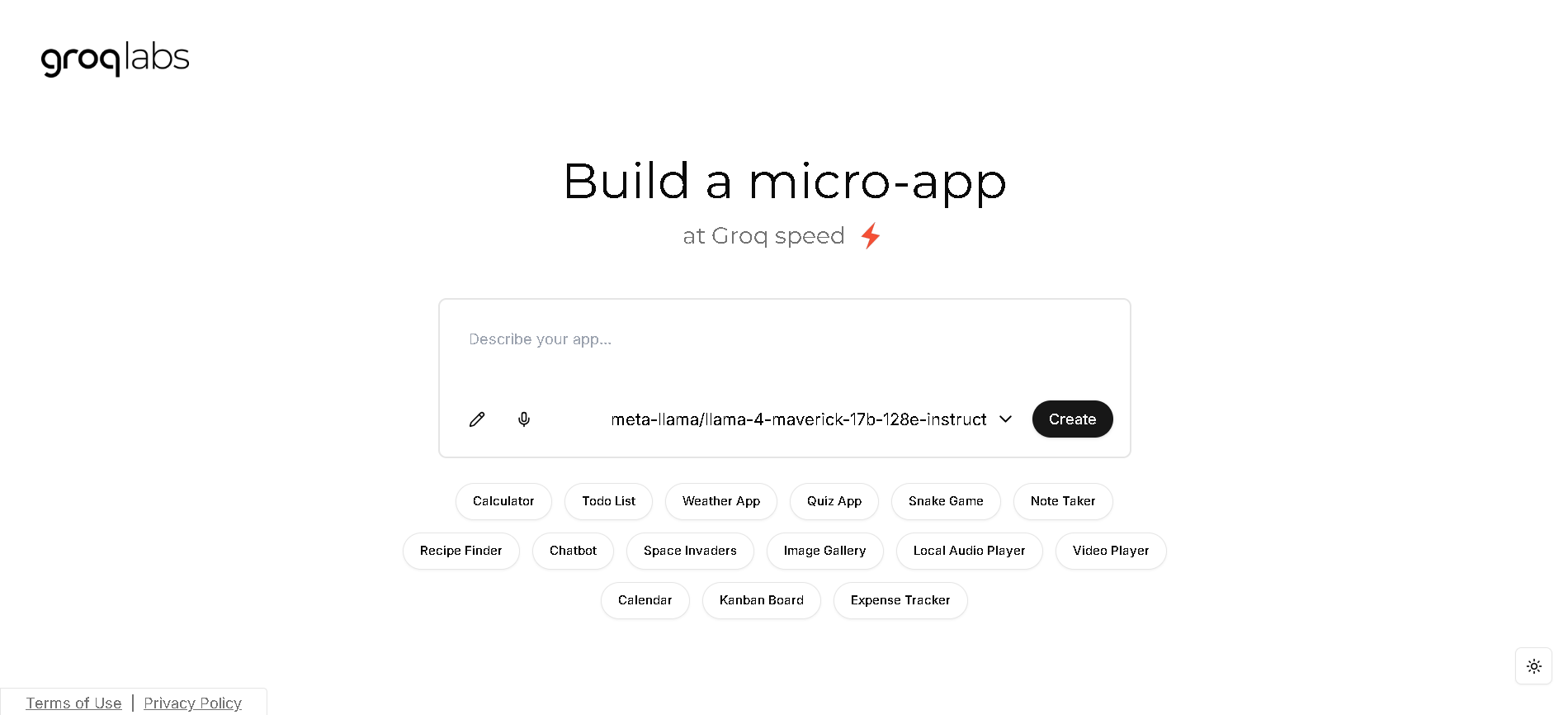

- Provide a Natural Language Prompt: Describe the application or component you want to create using a simple text prompt.

- Generate the App: The AI instantly generates the application's code and a real-time preview based on your input.

- Iterate and Refine: Use the interactive feedback system to provide more prompts and refine the app's features or fix any issues.

- Export and Share: You can easily share and export the generated application code. For local use, you can clone the open-source repository and run it with your own Groq API key.

- Blazing Speed: Powered by Groq's LPU technology, the platform is renowned for its speed, capable of generating code and full applications in hundreds of milliseconds.

- Open-Source Nature: The project is fully open-source and available on GitHub, providing complete transparency and allowing developers to customize the tool or self-host it.

- Real-Time Feedback Loop: The interactive feedback system allows for continuous improvement and version tracking, enabling users to build and refine their projects collaboratively with the AI.

- Content Safety: It integrates LlamaGuard to check for content safety, ensuring the generated output is appropriate and secure.

- Exceptional Speed: The primary advantage is the speed of code generation, which can significantly accelerate the development workflow.

- Accessibility: The tool makes application development accessible to a wider audience, including those with no coding background.

- Community-Driven: Its open-source nature fosters a community of developers who can contribute to its improvement and development.

- Hardware Requirement for Local Use: Running the tool locally requires an API key and a powerful setup, which might be a barrier for some users.

- Limited to Code Generation: The platform is focused on generating code and may not provide the full suite of features found in more comprehensive AI development environments.

- Beta Status: The tool is a prototype to showcase the capabilities of Groq and may not be a fully polished, public-facing product with dedicated customer support.

paid

custom

Proud of the love you're getting? Show off your AI Toolbook reviews—then invite more fans to share the love and build your credibility.

Add an AI Toolbook badge to your site—an easy way to drive followers, showcase updates, and collect reviews. It's like a mini 24/7 billboard for your AI.

Reviews

Rating Distribution

Average score

Popular Mention

FAQs

Similar AI Tools

Spark Ai

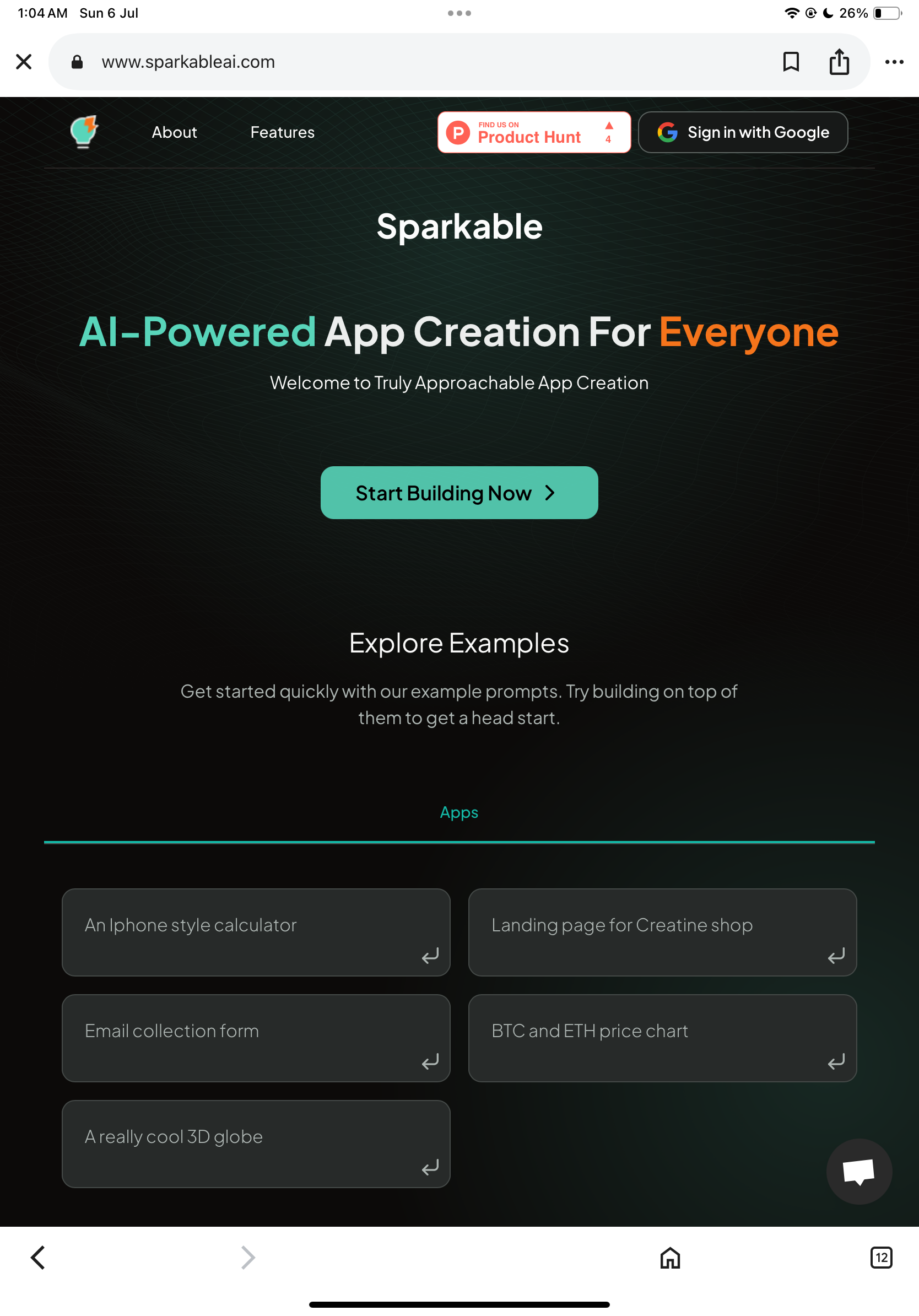

Sparkable AI is a no-code, AI-powered platform enabling users to build and fully functional web apps using plain English prompts or image uploads—no coding required. It democratizes application development, letting creators, entrepreneurs, and teams prototype and deploy tools like calculators, landing pages, data dashboards, and interactive visuals—all within minutes.

Spark Ai

Sparkable AI is a no-code, AI-powered platform enabling users to build and fully functional web apps using plain English prompts or image uploads—no coding required. It democratizes application development, letting creators, entrepreneurs, and teams prototype and deploy tools like calculators, landing pages, data dashboards, and interactive visuals—all within minutes.

Spark Ai

Sparkable AI is a no-code, AI-powered platform enabling users to build and fully functional web apps using plain English prompts or image uploads—no coding required. It democratizes application development, letting creators, entrepreneurs, and teams prototype and deploy tools like calculators, landing pages, data dashboards, and interactive visuals—all within minutes.

Boundary AI

BoundaryML.com introduces BAML, an expressive language specifically designed for structured text generation with Large Language Models (LLMs). Its primary purpose is to simplify and enhance the process of obtaining structured data (like JSON) from LLMs, moving beyond the challenges of traditional methods by providing robust parsing, error correction, and reliable function-calling capabilities.

Boundary AI

BoundaryML.com introduces BAML, an expressive language specifically designed for structured text generation with Large Language Models (LLMs). Its primary purpose is to simplify and enhance the process of obtaining structured data (like JSON) from LLMs, moving beyond the challenges of traditional methods by providing robust parsing, error correction, and reliable function-calling capabilities.

Boundary AI

BoundaryML.com introduces BAML, an expressive language specifically designed for structured text generation with Large Language Models (LLMs). Its primary purpose is to simplify and enhance the process of obtaining structured data (like JSON) from LLMs, moving beyond the challenges of traditional methods by providing robust parsing, error correction, and reliable function-calling capabilities.

All-in-One AI

All-in-One AI is a Japanese platform that provides over 200 pre-configured AI tools in a single application. Its primary purpose is to simplify AI content generation by eliminating the need for users to write complex prompts. The platform, developed by Brightiers Inc., allows users to easily create high-quality text and images for a variety of purposes, from marketing copy to social media posts.

All-in-One AI

All-in-One AI is a Japanese platform that provides over 200 pre-configured AI tools in a single application. Its primary purpose is to simplify AI content generation by eliminating the need for users to write complex prompts. The platform, developed by Brightiers Inc., allows users to easily create high-quality text and images for a variety of purposes, from marketing copy to social media posts.

All-in-One AI

All-in-One AI is a Japanese platform that provides over 200 pre-configured AI tools in a single application. Its primary purpose is to simplify AI content generation by eliminating the need for users to write complex prompts. The platform, developed by Brightiers Inc., allows users to easily create high-quality text and images for a variety of purposes, from marketing copy to social media posts.

Inweave

Inweave is an AI tool designed to help startups and scaleups automate their workflows. It allows users to create, deploy, and manage tailored AI assistants for a variety of tasks and business processes. By offering flexible model selection and robust API support, Inweave enables businesses to seamlessly integrate AI into their existing applications, boosting productivity and efficiency.

Inweave

Inweave is an AI tool designed to help startups and scaleups automate their workflows. It allows users to create, deploy, and manage tailored AI assistants for a variety of tasks and business processes. By offering flexible model selection and robust API support, Inweave enables businesses to seamlessly integrate AI into their existing applications, boosting productivity and efficiency.

Inweave

Inweave is an AI tool designed to help startups and scaleups automate their workflows. It allows users to create, deploy, and manage tailored AI assistants for a variety of tasks and business processes. By offering flexible model selection and robust API support, Inweave enables businesses to seamlessly integrate AI into their existing applications, boosting productivity and efficiency.

LM Studio

LM Studio is a local AI toolkit that empowers users to discover, download, and run Large Language Models (LLMs) directly on their personal computers. It provides a user-friendly interface to chat with models, set up a local LLM server for applications, and ensures complete data privacy as all processes occur locally on your machine.

LM Studio

LM Studio is a local AI toolkit that empowers users to discover, download, and run Large Language Models (LLMs) directly on their personal computers. It provides a user-friendly interface to chat with models, set up a local LLM server for applications, and ensures complete data privacy as all processes occur locally on your machine.

LM Studio

LM Studio is a local AI toolkit that empowers users to discover, download, and run Large Language Models (LLMs) directly on their personal computers. It provides a user-friendly interface to chat with models, set up a local LLM server for applications, and ensures complete data privacy as all processes occur locally on your machine.

Grok 4

Grok 4 is the latest and most intelligent AI model developed by xAI, designed for expert-level reasoning and real-time knowledge integration. It combines large-scale reinforcement learning with native tool use, including code interpretation, web browsing, and advanced search capabilities, to provide highly accurate and up-to-date responses. Grok 4 excels across diverse domains such as math, coding, science, and complex reasoning, supporting multimodal inputs like text and vision. With its massive 256,000-token context window and advanced toolset, Grok 4 is built to push the boundaries of AI intelligence and practical utility for both developers and enterprises.

Grok 4

Grok 4 is the latest and most intelligent AI model developed by xAI, designed for expert-level reasoning and real-time knowledge integration. It combines large-scale reinforcement learning with native tool use, including code interpretation, web browsing, and advanced search capabilities, to provide highly accurate and up-to-date responses. Grok 4 excels across diverse domains such as math, coding, science, and complex reasoning, supporting multimodal inputs like text and vision. With its massive 256,000-token context window and advanced toolset, Grok 4 is built to push the boundaries of AI intelligence and practical utility for both developers and enterprises.

Grok 4

Grok 4 is the latest and most intelligent AI model developed by xAI, designed for expert-level reasoning and real-time knowledge integration. It combines large-scale reinforcement learning with native tool use, including code interpretation, web browsing, and advanced search capabilities, to provide highly accurate and up-to-date responses. Grok 4 excels across diverse domains such as math, coding, science, and complex reasoning, supporting multimodal inputs like text and vision. With its massive 256,000-token context window and advanced toolset, Grok 4 is built to push the boundaries of AI intelligence and practical utility for both developers and enterprises.

Zinq AI

ZINQ AI is an AI-powered form builder designed to make data collection feel more natural and engaging. Instead of traditional static fields, ZINQ uses conversational, one-question-at-a-time experiences that feel more human. You can generate polished, brand-aligned forms in seconds by simply describing what you need—such as “build a customer satisfaction survey.” The platform features a visual editor for fine-tuning tone, design, and form logic, and supports versatile deployment methods such as embedded pop-ups, custom URLs, and even scheduled forms. With real-time analytics, partial response tracking, and a free plan to get started, ZINQ balances speed, aesthetics, and insights for product, marketing, HR, and research teams.

Zinq AI

ZINQ AI is an AI-powered form builder designed to make data collection feel more natural and engaging. Instead of traditional static fields, ZINQ uses conversational, one-question-at-a-time experiences that feel more human. You can generate polished, brand-aligned forms in seconds by simply describing what you need—such as “build a customer satisfaction survey.” The platform features a visual editor for fine-tuning tone, design, and form logic, and supports versatile deployment methods such as embedded pop-ups, custom URLs, and even scheduled forms. With real-time analytics, partial response tracking, and a free plan to get started, ZINQ balances speed, aesthetics, and insights for product, marketing, HR, and research teams.

Zinq AI

ZINQ AI is an AI-powered form builder designed to make data collection feel more natural and engaging. Instead of traditional static fields, ZINQ uses conversational, one-question-at-a-time experiences that feel more human. You can generate polished, brand-aligned forms in seconds by simply describing what you need—such as “build a customer satisfaction survey.” The platform features a visual editor for fine-tuning tone, design, and form logic, and supports versatile deployment methods such as embedded pop-ups, custom URLs, and even scheduled forms. With real-time analytics, partial response tracking, and a free plan to get started, ZINQ balances speed, aesthetics, and insights for product, marketing, HR, and research teams.

inception

Inception Labs is an AI research company that develops Mercury, the world's first commercial diffusion-based large language models. Unlike traditional autoregressive LLMs that generate tokens sequentially, Mercury models use diffusion architecture to generate text through parallel refinement passes. This breakthrough approach enables ultra-fast inference speeds of over 1,000 tokens per second while maintaining frontier-level quality. The platform offers Mercury for general-purpose tasks and Mercury Coder for development workflows, both featuring streaming capabilities, tool use, structured output, and 128K context windows. These models serve as drop-in replacements for traditional LLMs through OpenAI-compatible APIs and are available across major cloud providers including AWS Bedrock, Azure Foundry, and various AI platforms for enterprise deployment.

inception

Inception Labs is an AI research company that develops Mercury, the world's first commercial diffusion-based large language models. Unlike traditional autoregressive LLMs that generate tokens sequentially, Mercury models use diffusion architecture to generate text through parallel refinement passes. This breakthrough approach enables ultra-fast inference speeds of over 1,000 tokens per second while maintaining frontier-level quality. The platform offers Mercury for general-purpose tasks and Mercury Coder for development workflows, both featuring streaming capabilities, tool use, structured output, and 128K context windows. These models serve as drop-in replacements for traditional LLMs through OpenAI-compatible APIs and are available across major cloud providers including AWS Bedrock, Azure Foundry, and various AI platforms for enterprise deployment.

inception

Inception Labs is an AI research company that develops Mercury, the world's first commercial diffusion-based large language models. Unlike traditional autoregressive LLMs that generate tokens sequentially, Mercury models use diffusion architecture to generate text through parallel refinement passes. This breakthrough approach enables ultra-fast inference speeds of over 1,000 tokens per second while maintaining frontier-level quality. The platform offers Mercury for general-purpose tasks and Mercury Coder for development workflows, both featuring streaming capabilities, tool use, structured output, and 128K context windows. These models serve as drop-in replacements for traditional LLMs through OpenAI-compatible APIs and are available across major cloud providers including AWS Bedrock, Azure Foundry, and various AI platforms for enterprise deployment.

Langchain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Langchain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Langchain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Awan LLM

Awan LLM is a cost-effective, unlimited token large language model inference API platform designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription model that enables users to send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLM models without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

Awan LLM

Awan LLM is a cost-effective, unlimited token large language model inference API platform designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription model that enables users to send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLM models without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

Awan LLM

Awan LLM is a cost-effective, unlimited token large language model inference API platform designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription model that enables users to send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLM models without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

Text to API

Text to API is an LLM-powered API engine that lets you build and deploy AI-driven APIs in seconds using natural language instead of boilerplate backend code. You describe the data or functionality you want, and the engine handles schema creation, integration with providers like Firecrawl and OpenAI, and endpoint deployment behind the scenes. It’s designed to turn messy web data into clean, structured APIs, so developers can focus on product logic rather than wiring infrastructure. With a workflow built around dynamic schema generation, intelligent web scraping, and real-time updates, it’s ideal for quickly prototyping or shipping production-ready AI features.

Text to API

Text to API is an LLM-powered API engine that lets you build and deploy AI-driven APIs in seconds using natural language instead of boilerplate backend code. You describe the data or functionality you want, and the engine handles schema creation, integration with providers like Firecrawl and OpenAI, and endpoint deployment behind the scenes. It’s designed to turn messy web data into clean, structured APIs, so developers can focus on product logic rather than wiring infrastructure. With a workflow built around dynamic schema generation, intelligent web scraping, and real-time updates, it’s ideal for quickly prototyping or shipping production-ready AI features.

Text to API

Text to API is an LLM-powered API engine that lets you build and deploy AI-driven APIs in seconds using natural language instead of boilerplate backend code. You describe the data or functionality you want, and the engine handles schema creation, integration with providers like Firecrawl and OpenAI, and endpoint deployment behind the scenes. It’s designed to turn messy web data into clean, structured APIs, so developers can focus on product logic rather than wiring infrastructure. With a workflow built around dynamic schema generation, intelligent web scraping, and real-time updates, it’s ideal for quickly prototyping or shipping production-ready AI features.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai