- Software Engineers & Developers: Accelerate coding workflows with ultra-fast code generation and apply-edit capabilities.

- Enterprise Teams & Businesses: Deploy high-speed AI for customer support, automation, and real-time applications.

- AI Application Builders: Integrate fast inference into voice agents, chatbots, and interactive systems.

- Cloud Platform Users: Access Mercury models through AWS Bedrock, Azure, and other major providers.

- Cost-Conscious Organizations: Reduce AI infrastructure costs with efficient diffusion-based processing.

How to Use Inception Labs?

- Choose Your Access Method: Select from direct API, major cloud providers, or third-party platforms like OpenRouter.

- Select Your Model: Pick Mercury for general tasks or Mercury Coder for development and coding workflows.

- Integrate via API: Use OpenAI-compatible endpoints as drop-in replacements for existing LLM integrations.

- Scale and Deploy: Leverage enterprise features like fine-tuning, private deployments, and forward-deployed engineering support.
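The API follows the OpenAI chat-completions format. A call is therefore a JSON `POST` to `<base URL>/chat/completions` with a bearer token, and an existing OpenAI-style client usually needs only a different base URL, key, and model name. The sketch below builds such a request with the Python standard library. The base URL `https://api.inceptionlabs.ai/v1` and the model ID `mercury-coder` are assumptions for illustration, so check Inception's documentation for the current endpoint and model names.

```python
import json
import urllib.request

# Assumed values for illustration only; confirm them in Inception's docs.
BASE_URL = "https://api.inceptionlabs.ai/v1"
MODEL = "mercury-coder"


def build_chat_request(api_key: str, prompt: str,
                       base_url: str = BASE_URL, model: str = MODEL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the reply text (needs network access and a valid key)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # OpenAI-compatible responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

For example, `send_chat_request(build_chat_request(os.environ["INCEPTION_API_KEY"], "Reverse a string in Python"))` would return the model's reply. Here `INCEPTION_API_KEY` is a hypothetical environment variable name. Because request construction is kept separate from sending, the payload is easy to inspect or test offline.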

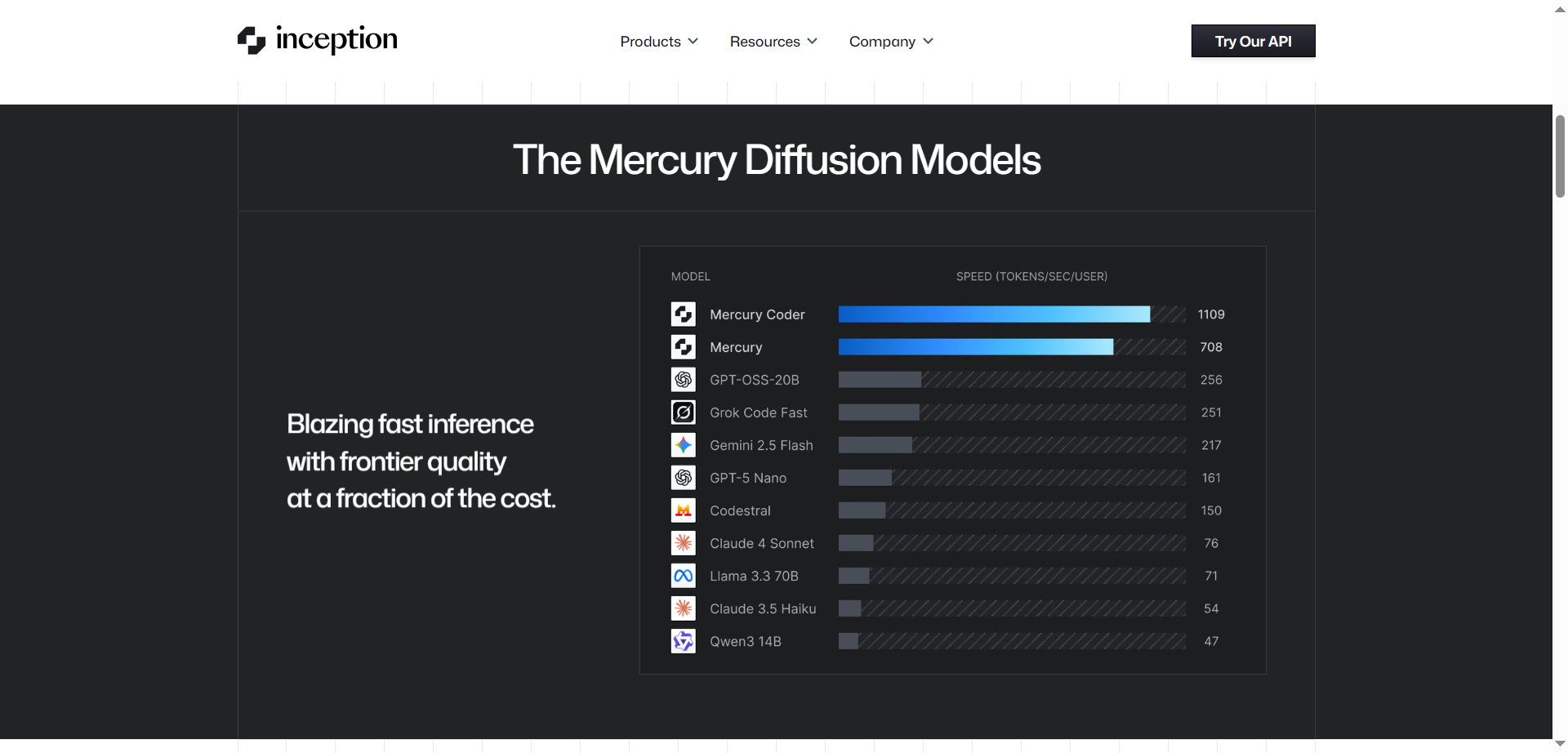

- Diffusion Architecture: Presented as the first commercial-scale diffusion large language models (dLLMs), which refine the whole output in parallel over several steps instead of predicting one token at a time.

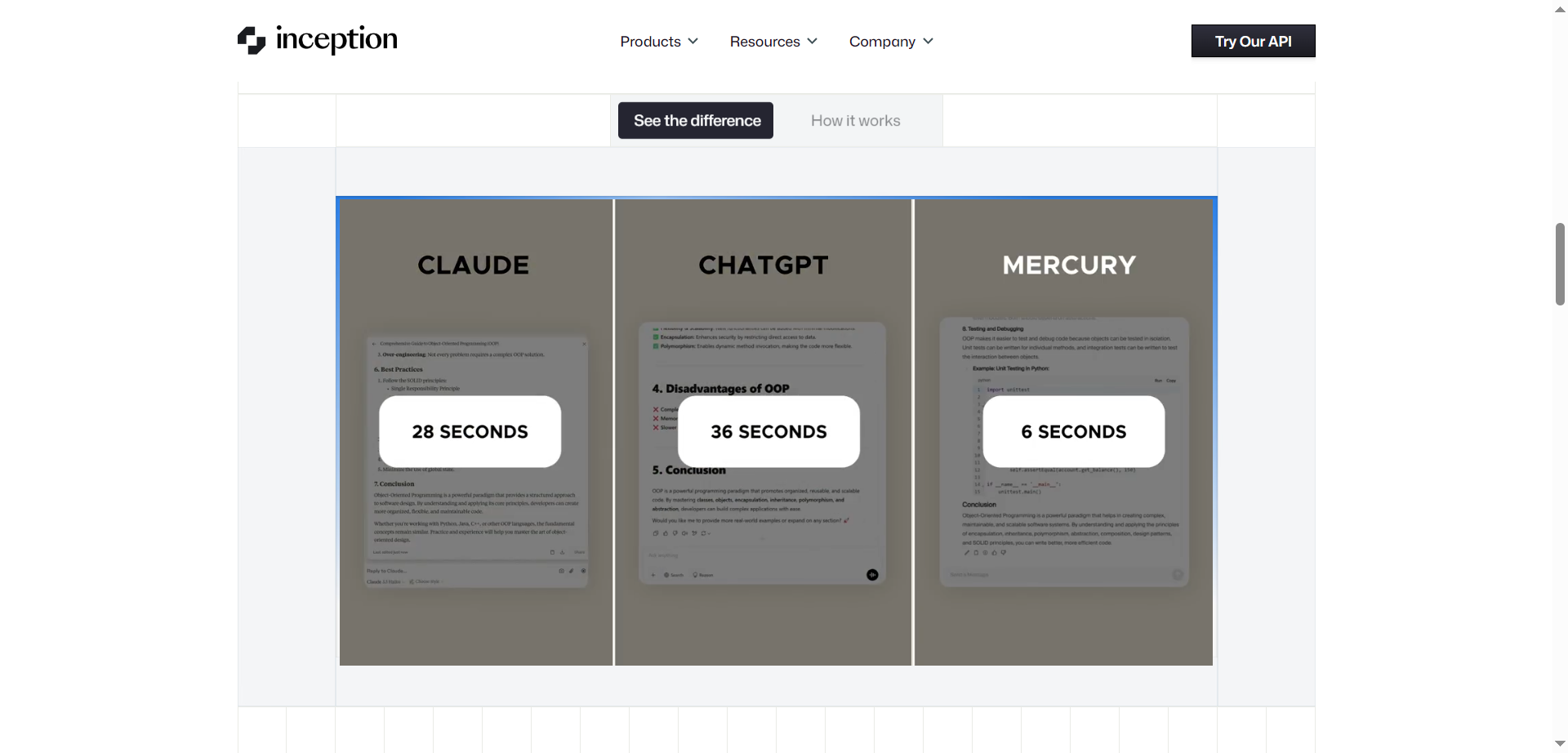

- Ultra-Fast Inference: Generate over 1,000 tokens per second, up to 10x faster than traditional LLMs.

- Cost Efficiency: Lower compute costs from diffusion-based processing, with API pricing of $0.25 per million input tokens and $1 per million output tokens.

- Enterprise Ready: Available on AWS Bedrock, Azure Foundry, and major cloud platforms with enterprise support.

- OpenAI Compatible: Works as a drop-in replacement for existing OpenAI-style integrations, typically requiring only a new base URL, API key, and model name.
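A toy decoder can illustrate the difference between sequential token prediction and parallel refinement. The sketch below is a simplified masked-diffusion-style loop and not Inception's published algorithm. Each step re-predicts all still-masked positions at once and commits the `tokens_per_step` most confident ones, while the autoregressive loop commits exactly one token per step. `make_toy_denoiser` is a hypothetical stand-in for a trained network: it already knows the target and assigns fixed confidences. A real dLLM would condition its predictions on the prompt and on the partially filled sequence.

```python
from typing import Callable, List, Optional, Tuple

MASK = None  # placeholder for a position whose token is not decided yet

# A denoiser maps the current partially masked sequence to a
# (proposed token, confidence) pair for every position.
Denoiser = Callable[[List[Optional[str]]], List[Tuple[str, float]]]


def make_toy_denoiser(target: List[str]) -> Denoiser:
    """Hypothetical stand-in for a trained model: always proposes the target
    token, with a fixed, position-dependent confidence."""
    def denoise(seq: List[Optional[str]]) -> List[Tuple[str, float]]:
        return [(tok, ((i * 7) % 11) / 10) for i, tok in enumerate(target)]
    return denoise


def autoregressive_decode(denoiser: Denoiser, length: int) -> Tuple[List[Optional[str]], int]:
    """Left-to-right decoding: one model call commits one token."""
    seq: List[Optional[str]] = [MASK] * length
    steps = 0
    for i in range(length):
        proposals = denoiser(seq)
        seq[i] = proposals[i][0]
        steps += 1
    return seq, steps


def parallel_refine_decode(denoiser: Denoiser, length: int,
                           tokens_per_step: int) -> Tuple[List[Optional[str]], int]:
    """Diffusion-style decoding: one model call re-predicts every masked
    position in parallel, then the most confident ones are committed."""
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    seq: List[Optional[str]] = [MASK] * length
    steps = 0
    while any(t is MASK for t in seq):
        proposals = denoiser(seq)
        masked = [i for i, t in enumerate(seq) if t is MASK]
        # Unmask the highest-confidence positions first.
        masked.sort(key=lambda i: proposals[i][1], reverse=True)
        for i in masked[:tokens_per_step]:
            seq[i] = proposals[i][0]
        steps += 1
    return seq, steps


target = "def add ( a , b ) : return a + b".split()
denoiser = make_toy_denoiser(target)
print(autoregressive_decode(denoiser, len(target))[1])
print(parallel_refine_decode(denoiser, len(target), tokens_per_step=4)[1])
```

With this 12-token target, the autoregressive loop needs 12 model calls and the parallel loop with `tokens_per_step=4` needs 3. In a real model each step is a full forward pass, so actual speedups depend on both the number of steps and the cost of each step.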

Pros

- The diffusion approach delivers large speed gains over sequential, token-by-token generation.

- Aims to maintain frontier-level output quality while substantially reducing latency and cost.

- OpenAI API compatibility makes migration straightforward for existing applications.

- Enterprise-grade deployment options across major cloud platforms.

Cons

- Newer diffusion technology may have limited real-world testing compared to established models.

- Documentation and community resources are still developing for this emerging approach.

- Performance gains may vary depending on specific use cases and deployment configurations.

- Enterprise pricing and custom deployment costs are not publicly transparent.
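A back-of-the-envelope estimate makes both the speed claim and the caveat that gains vary concrete. The sketch below assumes a steady decode rate and treats everything else (network round trips, time to first token) as a single fixed overhead. The 1,000 tokens/s figure comes from the feature list. The 100 tokens/s baseline is a hypothetical value chosen to match "up to 10x faster".

```python
def generation_seconds(output_tokens: int, tokens_per_second: float,
                       overhead_seconds: float = 0.0) -> float:
    """Rough wall-clock estimate: fixed overhead plus decode time at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return overhead_seconds + output_tokens / tokens_per_second


MERCURY_TPS = 1000.0   # "over 1,000 tokens per second" from the feature list
BASELINE_TPS = 100.0   # hypothetical baseline consistent with "up to 10x faster"

for n in (200, 1000, 4000):
    fast = generation_seconds(n, MERCURY_TPS)
    slow = generation_seconds(n, BASELINE_TPS)
    print(f"{n:>5} tokens: {fast:5.2f} s vs {slow:6.2f} s ({slow / fast:.0f}x)")
```

Adding a fixed overhead shows why end-to-end gains can fall well short of the raw throughput ratio. With a hypothetical `overhead_seconds=0.3`, a 200-token reply takes 0.5 s versus 2.3 s, a speedup of about 4.6x rather than 10x.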

Custom

Pricing for custom and enterprise plans is not published.
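For pay-as-you-go API usage, the per-token rates quoted in the feature list ($0.25 per million input tokens, $1 per million output tokens) reduce cost estimates to simple arithmetic. The sketch below assumes those rates still apply and ignores any custom or enterprise terms. The traffic figures in the example are hypothetical.

```python
from decimal import Decimal

# Per-million-token rates quoted in the feature list; confirm current rates before budgeting.
INPUT_USD_PER_M = Decimal("0.25")
OUTPUT_USD_PER_M = Decimal("1.00")
MILLION = Decimal(1_000_000)


def request_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Cost of a single request at pay-per-token rates."""
    return (input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M) / MILLION


def monthly_cost_usd(requests_per_day: int, input_tokens: int,
                     output_tokens: int, days: int = 30) -> Decimal:
    """Scale a per-request cost to a month of steady traffic."""
    return request_cost_usd(input_tokens, output_tokens) * requests_per_day * days
```

For example, a request with 2,000 input tokens and 500 output tokens costs $0.0005 + $0.0005 = $0.001, so 10,000 such requests per day come to about $300 over 30 days. The code uses `Decimal` so that currency arithmetic does not pick up floating-point rounding.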

Similar AI Tools

GenFuse AI

GenFuse AI is a powerful no-code AI automation platform that enables users to build, manage, and deploy intelligent AI agents without writing a single line of code. Designed for professionals, teams, and businesses of all sizes, GenFuse AI automates complex workflows by combining text, images, and logic into seamless, goal-oriented agents. Whether you are creating task bots, automating internal processes, or building customer-facing assistants, GenFuse AI makes advanced AI accessible to everyone.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI o1, a series of AI models designed for complex reasoning and problem-solving in areas such as science, coding, and math. The models take a "think more before responding" approach, trying different strategies and recognizing their own mistakes.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI 01, a new series of AI models. These models are specifically designed for complex reasoning and problem-solving in areas such as science, coding, and math, by employing a "think more before responding" approach, trying different strategies, and recognizing mistakes.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI 01, a new series of AI models. These models are specifically designed for complex reasoning and problem-solving in areas such as science, coding, and math, by employing a "think more before responding" approach, trying different strategies, and recognizing mistakes.

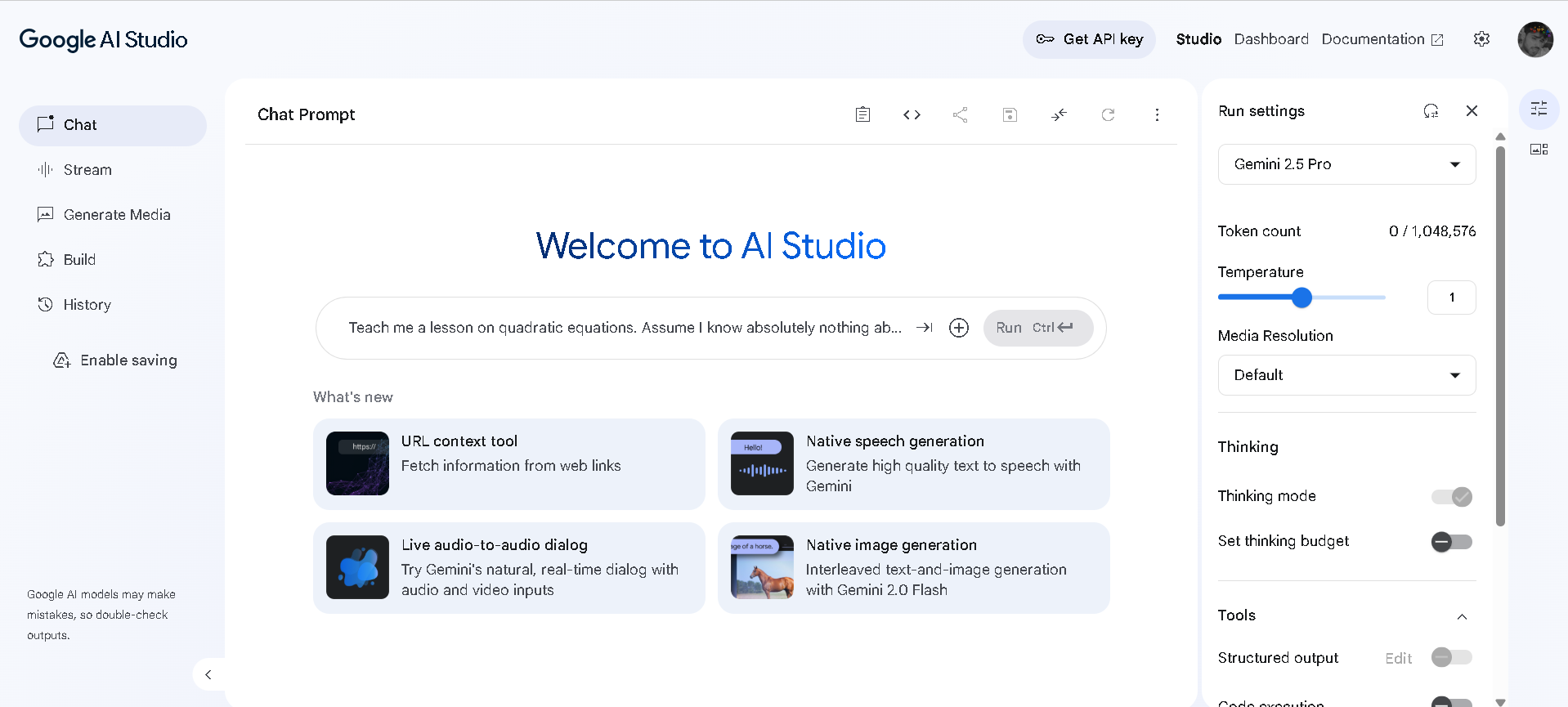

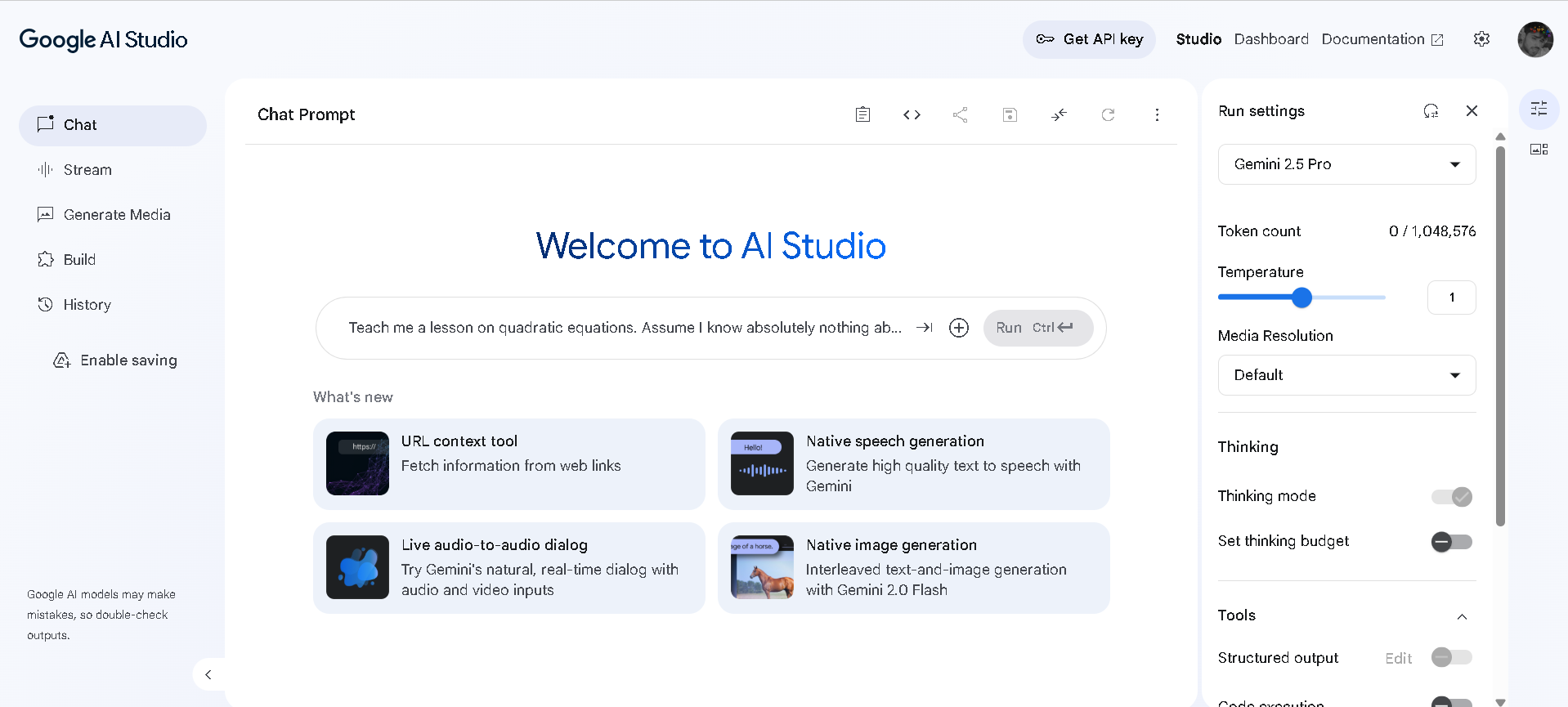

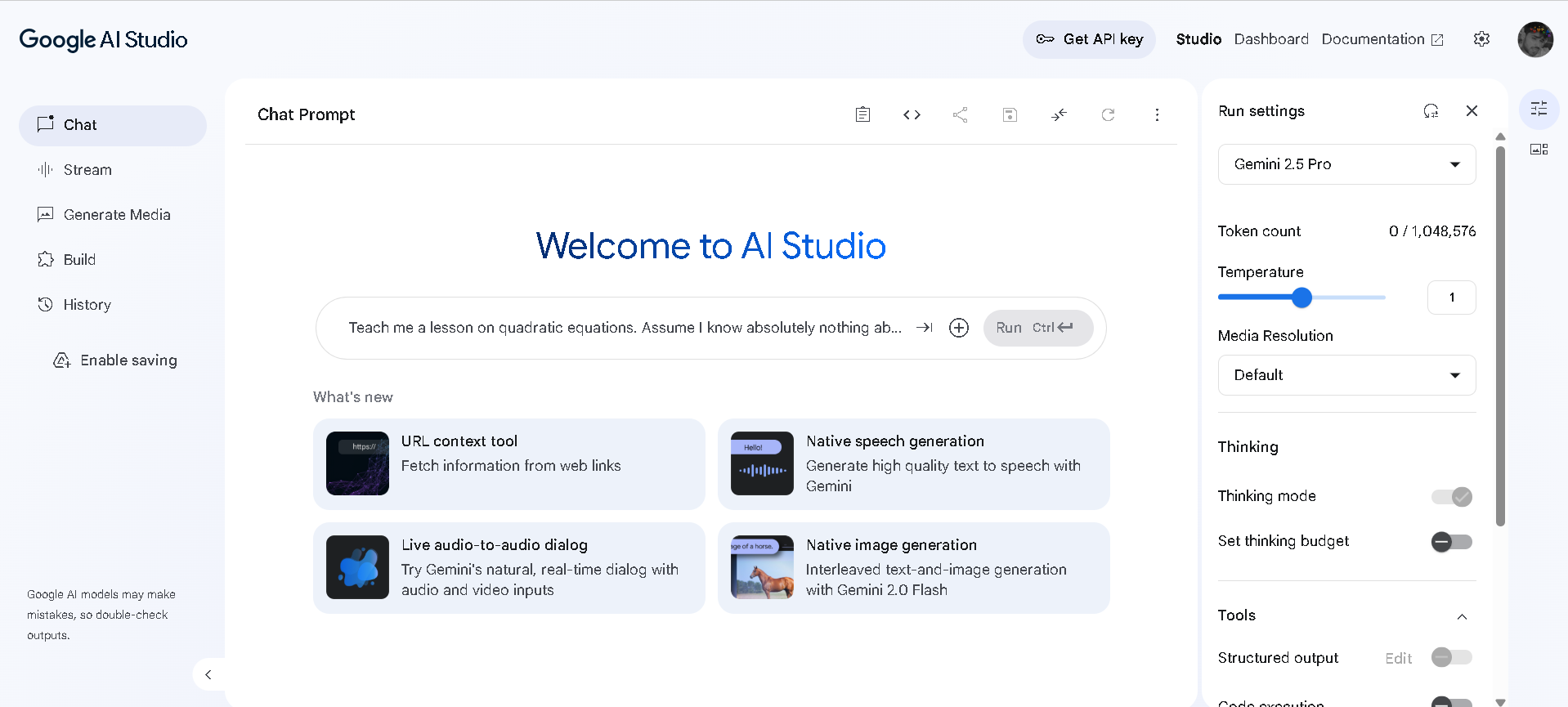

Google AI Studio

Google AI Studio is a web-based development environment that allows users to explore, prototype, and build applications using Google's cutting-edge generative AI models, such as Gemini. It provides a comprehensive set of tools for interacting with AI through chat prompts, generating various media types, and fine-tuning model behaviors for specific use cases.

Aisera

Aisera is an AI-driven platform designed to transform enterprise service experiences through the integration of generative AI and advanced automation. It leverages Large Language Models (LLMs) and domain-specific AI capabilities to deliver proactive, personalized, and predictive solutions across various business functions such as IT, customer service, HR, and more.

SalesSage AI

SalesSage AI is an AI-powered sales enablement platform designed specifically for technical founders, startup teams, and growing businesses. It streamlines the entire sales process by providing actionable insights, dynamic role-playing scenarios, and real-time feedback to help sales teams close deals faster and more efficiently. The platform covers every stage of sales: prospecting, preparation, practice, and performance evaluation. With AI-driven analytics, SalesSage helps users identify the best leads, craft personalized messaging, and improve the overall effectiveness of their sales strategy. For startups and technical founders who may lack formal sales experience, SalesSage offers guided assistance, automated suggestions, and training modules that reduce learning curves and accelerate results.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Prompts AI

Prompts.ai is an enterprise-grade AI platform designed to streamline, optimize, and govern generative AI workflows and prompt engineering across organizations. It centralizes access to over 35 large language models (LLMs) and AI tools, allowing teams to automate repetitive workflows, reduce costs, and boost productivity by up to 10 times. The platform emphasizes data security and compliance with standards such as SOC 2 Type II, HIPAA, and GDPR. It supports enterprises in building custom AI workflows, ensuring full visibility, auditability, and governance of AI interactions. Additionally, Prompts.ai fosters collaboration by providing a shared library of expert-built prompts and workflows, enabling businesses to scale AI adoption efficiently and securely.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

H2Loop

H2LooP AI is an enterprise-focused AI platform designed specifically for system software teams working in industries like automotive, electronics, IoT, telecom, avionics, and semiconductors. It integrates seamlessly with existing development toolsets without disrupting workflows. The platform offers fully on-premise deployment and is trained on a company’s proprietary system code, logs, and specifications, ensuring complete data privacy and security. H2LooP AI facilitates co-building, fast prototyping, and research-backed innovation tailored to complex system software development environments.

Mem0

Mem0.ai is a universal, self-improving memory layer for LLM applications that gives AI agents persistent recall across conversations. It intelligently compresses chat history into optimized representations, cutting token usage by up to 80% while preserving essential context for personalized experiences. Used by 50k+ developers and companies like Sunflower Sober and OpenNote, Mem0 enables infinite recall in healthcare, education, sales, and more, reducing costs and boosting response quality by 26% over native solutions. With one-line installation, framework compatibility, and enterprise-grade security including SOC 2 and HIPAA compliance, it deploys anywhere from Kubernetes to air-gapped servers for production-ready personalization.

GPTConsole

GPTConsole.ai is a developer-focused platform for creating, sharing, and monetizing autonomous AI agents that handle complex tasks beyond simple responses. It simplifies agent development through SDK, API, and CLI tools, plus data infrastructure that manages event chaining, lifecycle, and memory, letting developers focus on objectives. Key agents include Pixie for building full web apps, dashboards, and landing pages from prompts; Chip for codebase analysis, Jira ticket generation, and PR reviews; and Doodle for animated visuals. Users install the CLI via npm, generate production-ready code iteratively, and integrate with tools like GitHub or Jira. Trusted by 5000+ developers, it accelerates web/mobile app creation and automation.

FastRouter.ai

FastRouter.ai serves as a unified API gateway for large language models, simplifying access to over 100 LLMs from providers like OpenAI, Anthropic, Google, Meta, and more through a single OpenAI-compatible endpoint. Developers and enterprises route requests intelligently based on speed, cost, reliability, and performance, with automatic failover, real-time analytics, and no transaction fees. Key features include multimodal support for text, images, video, embeddings, web search integration, custom model lists, project governance with budgets and permissions, a model playground for comparisons, and seamless IDE integrations like Cursor and Cline. It handles high-scale workloads with superior latency and uptime.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai