- Developers: Individuals and teams looking to integrate Google's AI models into their applications and services.

- AI Enthusiasts & Researchers: Anyone interested in experimenting with advanced AI capabilities, understanding model behaviors, and exploring new prompts.

- Content Creators: Users who need to generate text, speech, images, or engage in real-time AI-driven dialogues for creative projects.

- Businesses: Organizations exploring how to leverage generative AI for various tasks, from content generation to intelligent automation.

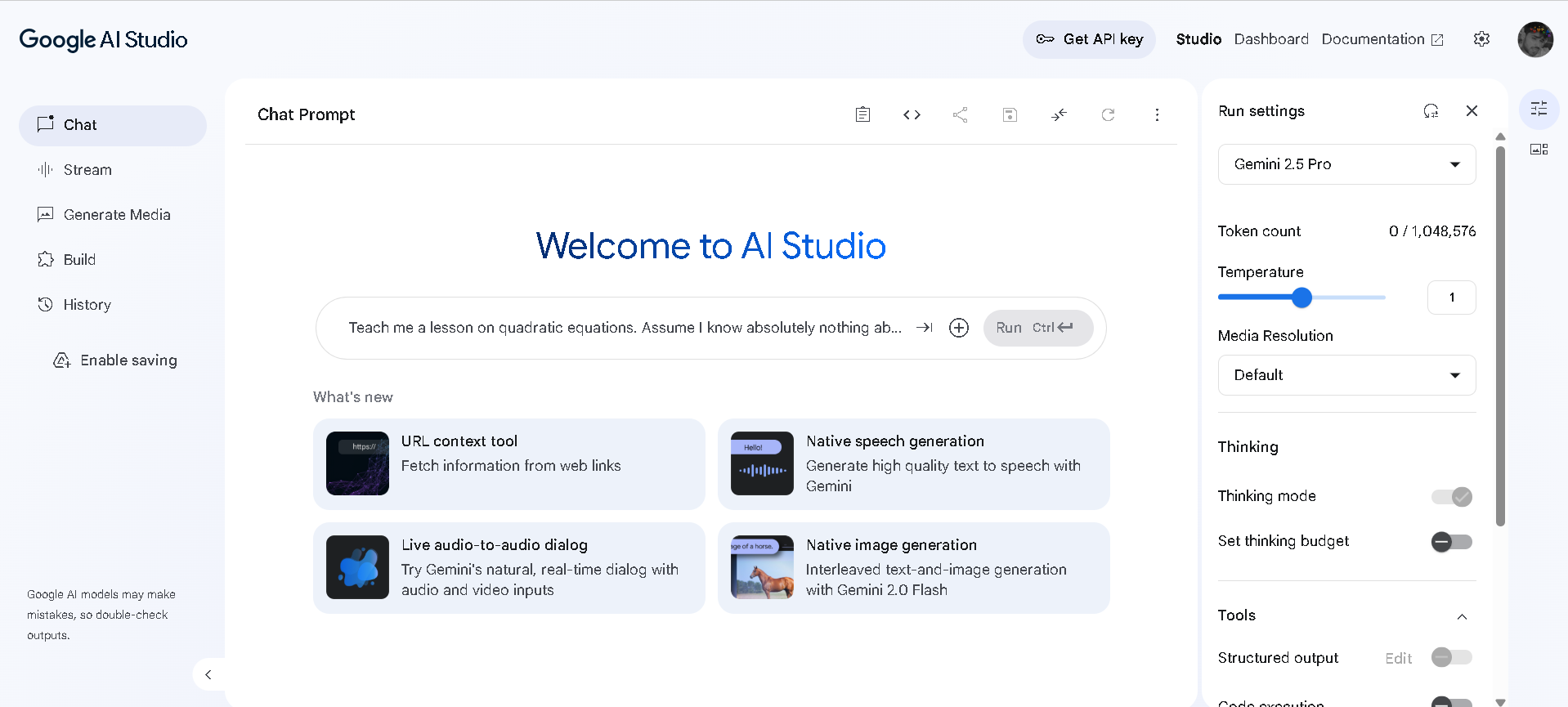

How to Use Google AI Studio?

- Choose a Model: Select a Google AI model, such as Gemini 2.5 Pro, from the available options.

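- Configure Run Settings: Adjust parameters such as 'Temperature' (how varied or creative the output is), output length (the maximum number of tokens in a response), and 'Media resolution' (the level of detail used when processing images or video included in your prompt).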

- Input Prompts: Use the "Chat Prompt" interface to type in your queries, instructions, or data to interact with the AI.

- Generate & Iterate: Run the prompt to receive AI-generated responses, text, or media, then refine your prompts or settings to achieve desired outputs.

- Utilize Integrated Tools: Employ built-in features like "URL context tool" to fetch web info, "Native speech generation" for text-to-speech, or "Native image generation" for text-to-image creation.
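
The same run settings can also be used from code once a prototype moves into an application. The sketch below only builds a request for the Gemini API's REST `generateContent` endpoint, mirroring the Studio controls above (model, temperature, output length, media resolution and the URL context tool). It does not send the request. The endpoint path, the `x-goog-api-key` header and the JSON field names follow Google's public Gemini API documentation as we understand it, so check the current API reference before relying on them. `build_request` and the `GEMINI_API_KEY` environment variable are illustrative names of our own.

```python
import json
import os
import urllib.request
from typing import Optional

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_request(prompt: str,
                  model: str = "gemini-2.5-pro",
                  temperature: float = 1.0,
                  max_output_tokens: int = 1024,
                  media_resolution: Optional[str] = None,
                  use_url_context: bool = False) -> urllib.request.Request:
    """Build, but do not send, a generateContent request mirroring AI Studio's run settings."""
    config = {
        "temperature": temperature,            # higher values give more varied output
        "maxOutputTokens": max_output_tokens,  # upper bound on response length in tokens
    }
    if media_resolution is not None:
        # e.g. "MEDIA_RESOLUTION_LOW"; affects images/video supplied in the prompt
        config["mediaResolution"] = media_resolution
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": config,
    }
    if use_url_context:
        # URL context tool: lets the model fetch pages linked in the prompt
        body["tools"] = [{"url_context": {}}]
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # GEMINI_API_KEY is an illustrative environment-variable name
            "x-goog-api-key": os.environ.get("GEMINI_API_KEY", ""),
        },
        method="POST",
    )


req = build_request("Summarize https://example.com in three bullet points.",
                    temperature=0.4, use_url_context=True)
print(req.full_url)
print(json.loads(req.data)["generationConfig"])
# Sending it would be: urllib.request.urlopen(req), with a valid API key set.
```

Keeping request construction separate from sending makes it easy to log or inspect exactly which settings an application uses, and to compare them with what worked in the Studio.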

Key Features

- Integrated Tooling: It offers a suite of integrated AI capabilities, including URL context fetching, native speech generation, live audio-to-audio dialog, and native image generation, all within one environment.

- Fine-Grained Control: Users have detailed control over model parameters such as temperature, output length, and media resolution, allowing for precise output tuning.

- Direct Access to Google's AI: Provides direct access to powerful Google AI models like Gemini, enabling users to leverage state-of-the-art generative capabilities.

- Prototyping Environment: It serves as an excellent sandbox for rapid prototyping and experimentation with AI before deploying models in a larger application.
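
Temperature is easiest to understand on a toy example. In the textbook formulation used by many LLM samplers, the model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities: low values concentrate probability on the top token, high values spread it out. This is offered as an illustration of the general idea, not as Gemini's documented sampler, and the logits below are made up. A temperature of 0 is usually treated as greedy decoding (always pick the top token), which this sketch does not model.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities after dividing by temperature (must be > 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                          # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


toy_logits = [2.0, 1.0, 0.5]                    # made-up scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(toy_logits, t)
    print(t, [round(p, 3) for p in probs])
```

At 0.2 almost all of the probability lands on the first token, so outputs become repetitive and predictable; at 2.0 the three tokens are much closer, which is where the "creativity" (and the risk of drifting off-topic) comes from.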

Pros

- Comprehensive Feature Set: The studio offers a wide array of tools for various AI tasks, from text to audio and image generation.

- Parameter Control: The ability to adjust model settings like temperature provides significant control over the AI's output.

- User-Friendly Interface: The clean and intuitive interface makes it accessible for both beginners and experienced AI practitioners.

- Direct Access to Google's Latest AI: Users can work directly with powerful models like Gemini 2.5 Pro.

Cons

- Potential Cost: The studio itself is free for basic experimentation, but more extensive API usage typically incurs costs, and those costs are not clearly shown within the interface.

- Requires Login: Access to the studio requires a Google account login, which might be a barrier for some.
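
Because API usage is typically billed per token, with separate rates for input and output tokens, a rough budget is simple arithmetic. The prices below are placeholders, not Google's actual rates (check the official Gemini API pricing page for current figures), and the sketch ignores free-tier quotas, caching discounts and any tiered pricing for long prompts. `TokenPrices` and `estimate_cost` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass
class TokenPrices:
    """Hypothetical prices in USD per one million tokens."""
    input_per_million: float
    output_per_million: float


def estimate_cost(requests: int, input_tokens: int, output_tokens: int,
                  prices: TokenPrices) -> float:
    """Estimated USD cost for `requests` calls with the given average token counts per call."""
    total_in = requests * input_tokens
    total_out = requests * output_tokens
    return (total_in * prices.input_per_million
            + total_out * prices.output_per_million) / 1_000_000


placeholder = TokenPrices(input_per_million=1.0, output_per_million=10.0)
# 10,000 requests with roughly 2,000 prompt tokens and 500 response tokens each
print(f"${estimate_cost(10_000, 2_000, 500, placeholder):.2f}")  # → $70.00
```

With these placeholder rates, the 5 million output tokens cost more than the 20 million input tokens, which is why capping output length is often the first lever for controlling spend.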

Pricing: Paid (custom pricing)

Similar AI Tools

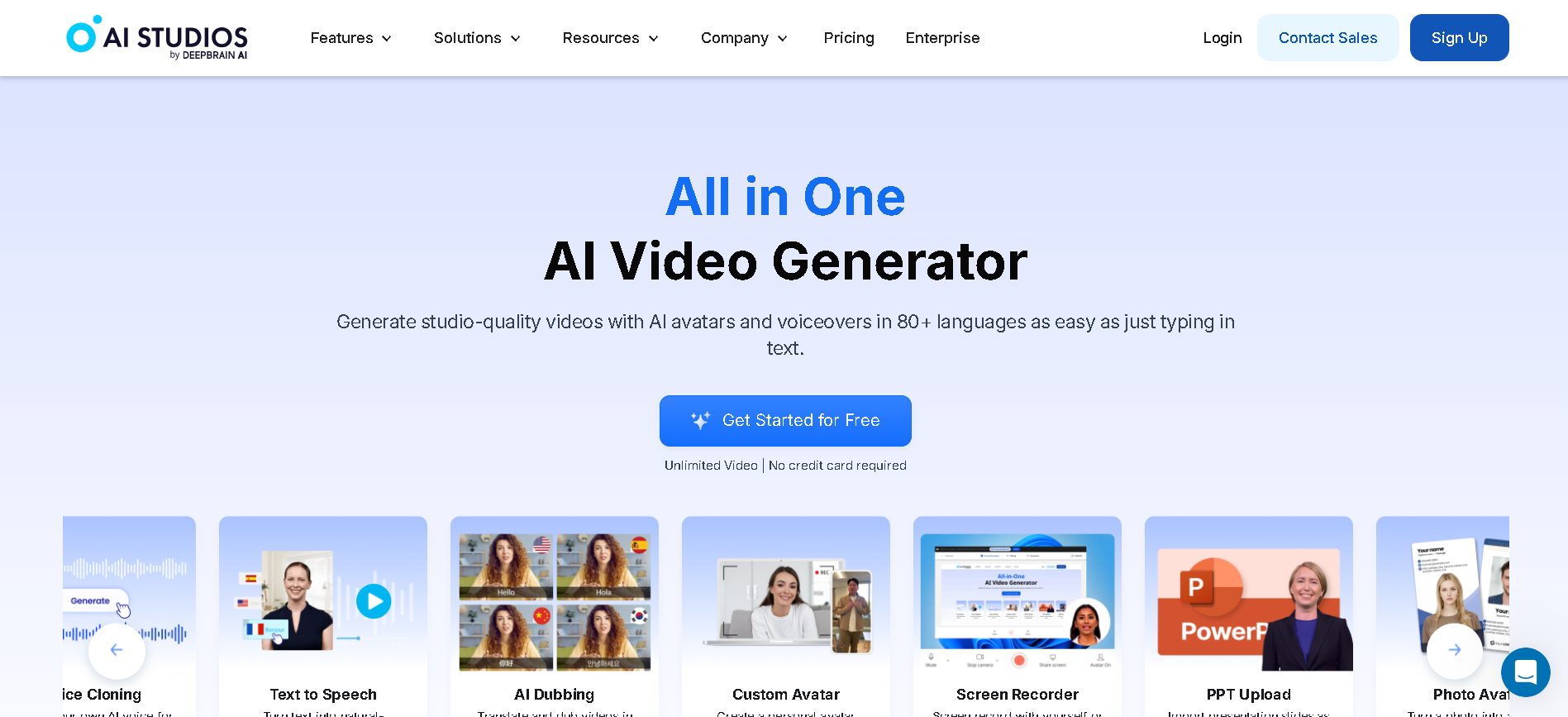

AI Studios

AI Studios is an AI-powered video creation platform that enables users to generate professional-quality videos using AI avatars. It eliminates the need for filming and complex editing, making video production fast and accessible.

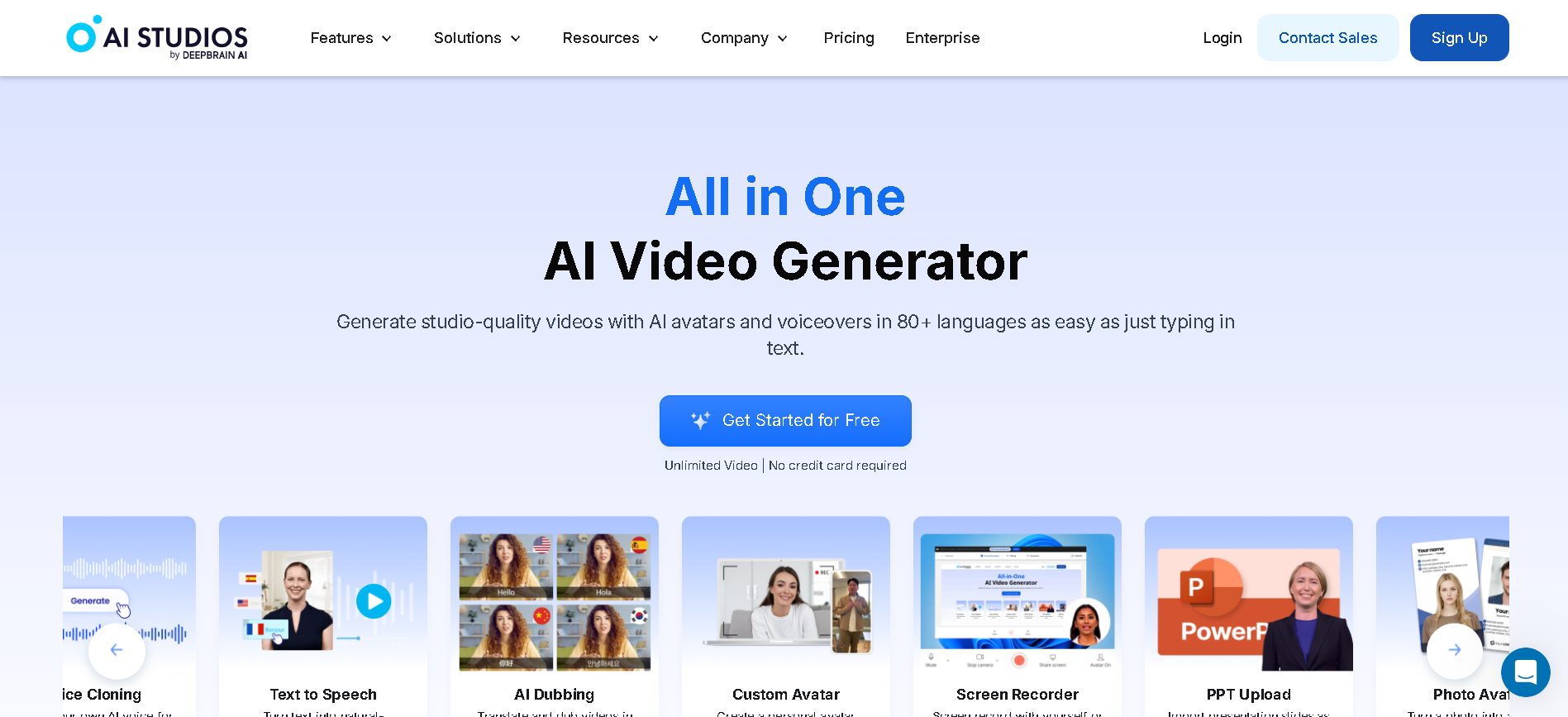

AI Studios

AI Studios is an AI-powered video creation platform that enables users to generate professional-quality videos using AI avatars. It eliminates the need for filming and complex editing, making video production fast and accessible.

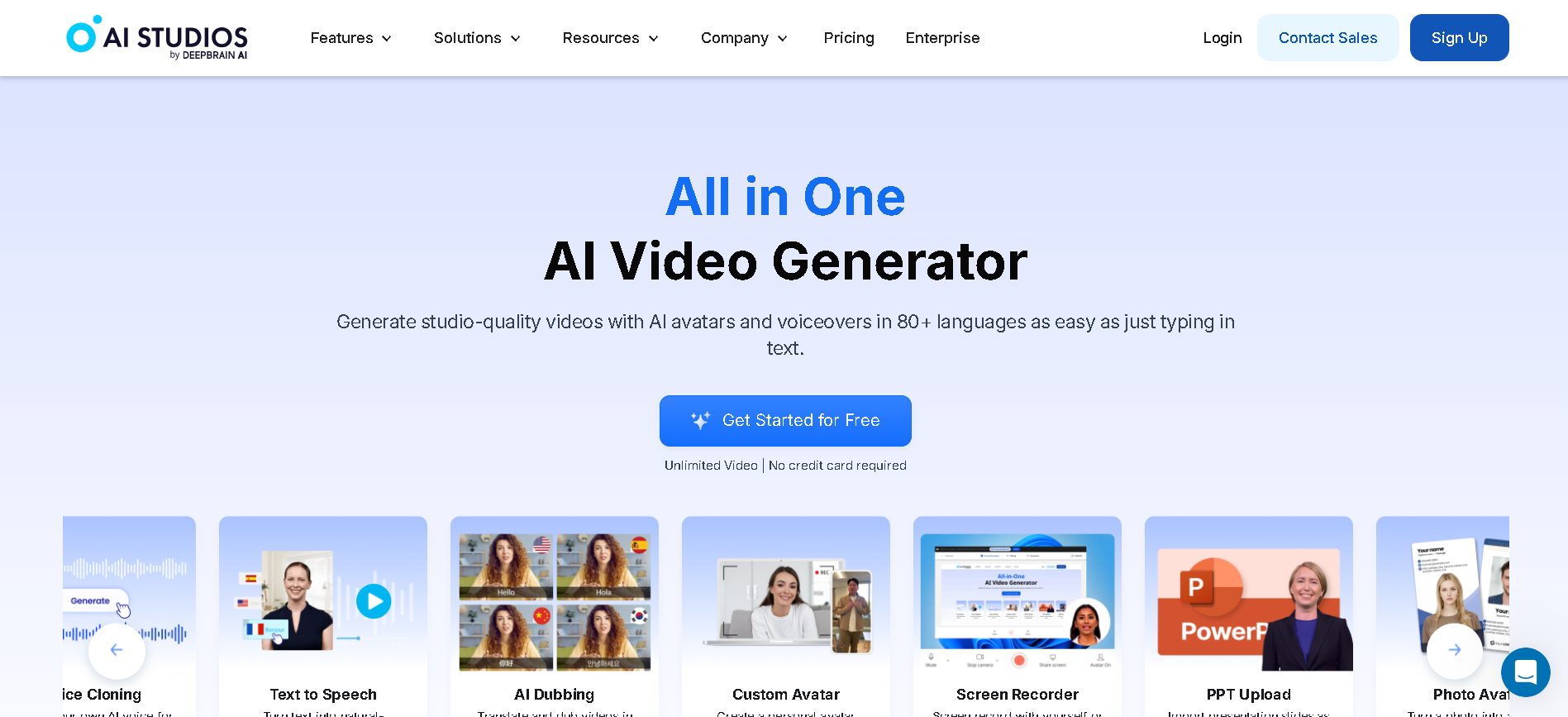

AI Studios

AI Studios is an AI-powered video creation platform that enables users to generate professional-quality videos using AI avatars. It eliminates the need for filming and complex editing, making video production fast and accessible.

Sim Studio

Sim.AI is a cloud-native platform designed to streamline the development and deployment of AI agents. It offers a user-friendly, open-source environment that allows developers to create, connect, and automate workflows effortlessly. With seamless integrations and no-code setup, Sim.AI empowers teams to enhance productivity and innovation.

Abacus.AI

ChatLLM Teams by Abacus.AI is an all‑in‑one AI assistant that unifies access to top LLMs, image and video generators, and powerful agentic tools in a single workspace. It includes DeepAgent for complex, multi‑step tasks, code execution with an editor, document/chat with files, web search, TTS, and slide/doc generation. Users can build custom chatbots, set up AI workflows, generate images and videos from multiple models, and organize work with projects across desktop and mobile apps. The platform is OpenAI‑style in usability but adds operator features for running tasks on a computer, plus DeepAgent Desktop and AppLLM for building and hosting small apps.

JuicyAI

Juicy AI is an innovative platform that provides a suite of AI assistants, known as "Juicers," designed to help users with a variety of tasks including writing, speaking, coding, image creation, and more. Each AI assistant is specialized for a specific function, allowing users to mix and match to create their ideal AI team. Juicy AI enables individuals and businesses to enhance productivity, streamline workflows, and tackle creative or technical challenges efficiently.

Google Vids

Google Vids is an AI-powered video creation and editing app within the Google Workspace ecosystem, designed to let teams generate polished, story-driven videos without needing a full-blown video-editing tool. It uses smart prompts and content from your files (Docs, Slides, Drive) to jump-start a video draft, then lets you customize media, voice-over, transitions and style effortlessly. Built for speed and collaboration, you can invite teammates to comment, iterate, and publish—all in the same shared workspace. Whether it’s a training clip, internal update, or marketing snippet, Google Vids cuts through the complexity and puts video creation in everyone’s reach.

My Clever AI

MyCleverAI is an AI-powered website design and content generation platform that enables users to generate full page layouts, HTML/CSS files, web elements or email templates from text prompts or drawings. It supports design generation, adjustment, and code export—making it suitable for small businesses, designers and freelancers who need fast, custom web assets without starting from scratch.

Team GPT

Team-GPT is a collaborative AI toolkit designed specifically for marketing teams and agencies to streamline content creation and campaign management. It combines access to multiple powerful AI models like ChatGPT, Claude, Gemini, and Perplexity on a single platform, eliminating the need for managing multiple subscriptions. Team-GPT enables marketing teams to build custom AI assistants trained on company or client-specific knowledge to ensure brand voice consistency and messaging accuracy. The platform supports seamless integration with popular content repositories like Google Drive and Notion, automates marketing workflows, generates professional marketing visuals, and organizes projects by client or use case for smoother team collaboration and scalable marketing operations.

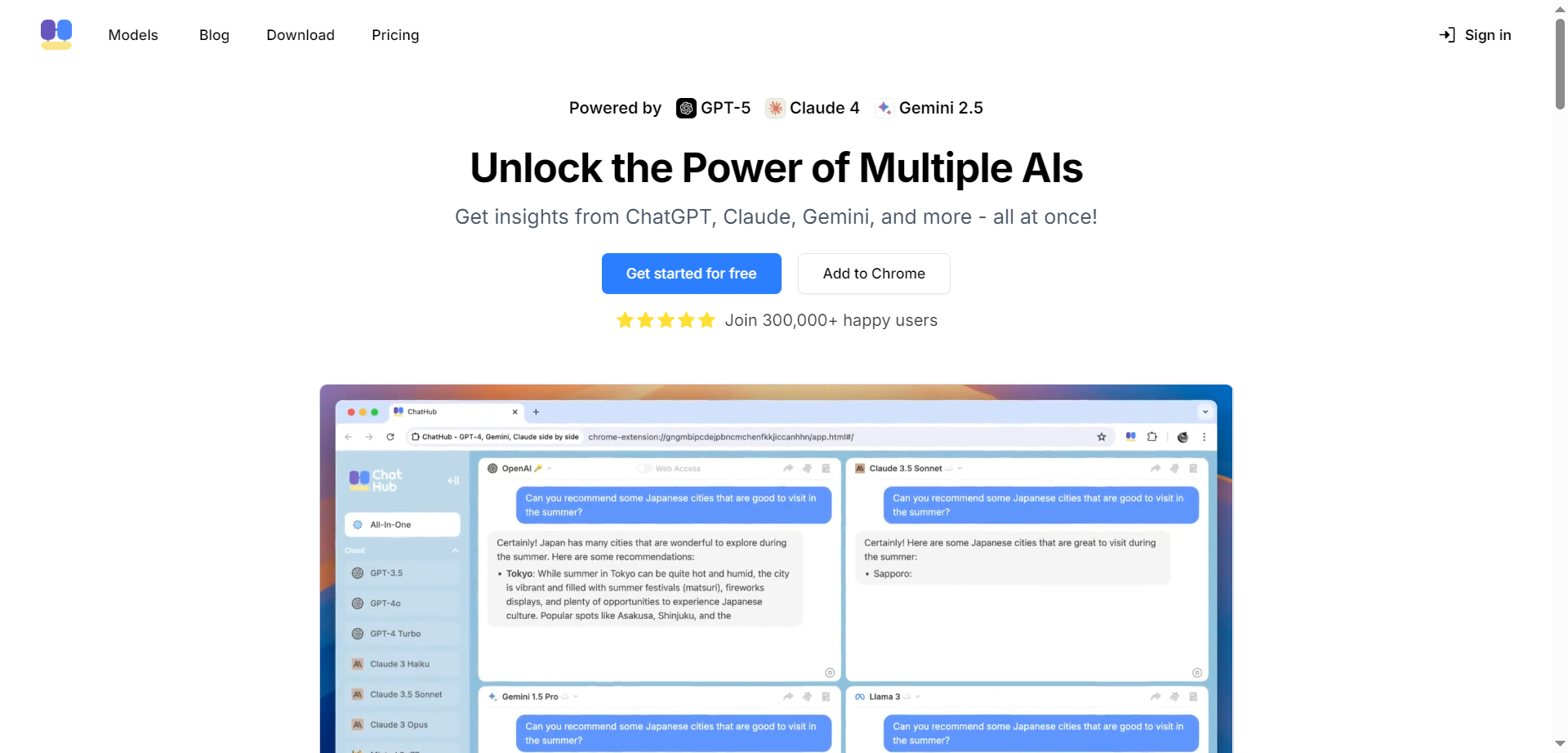

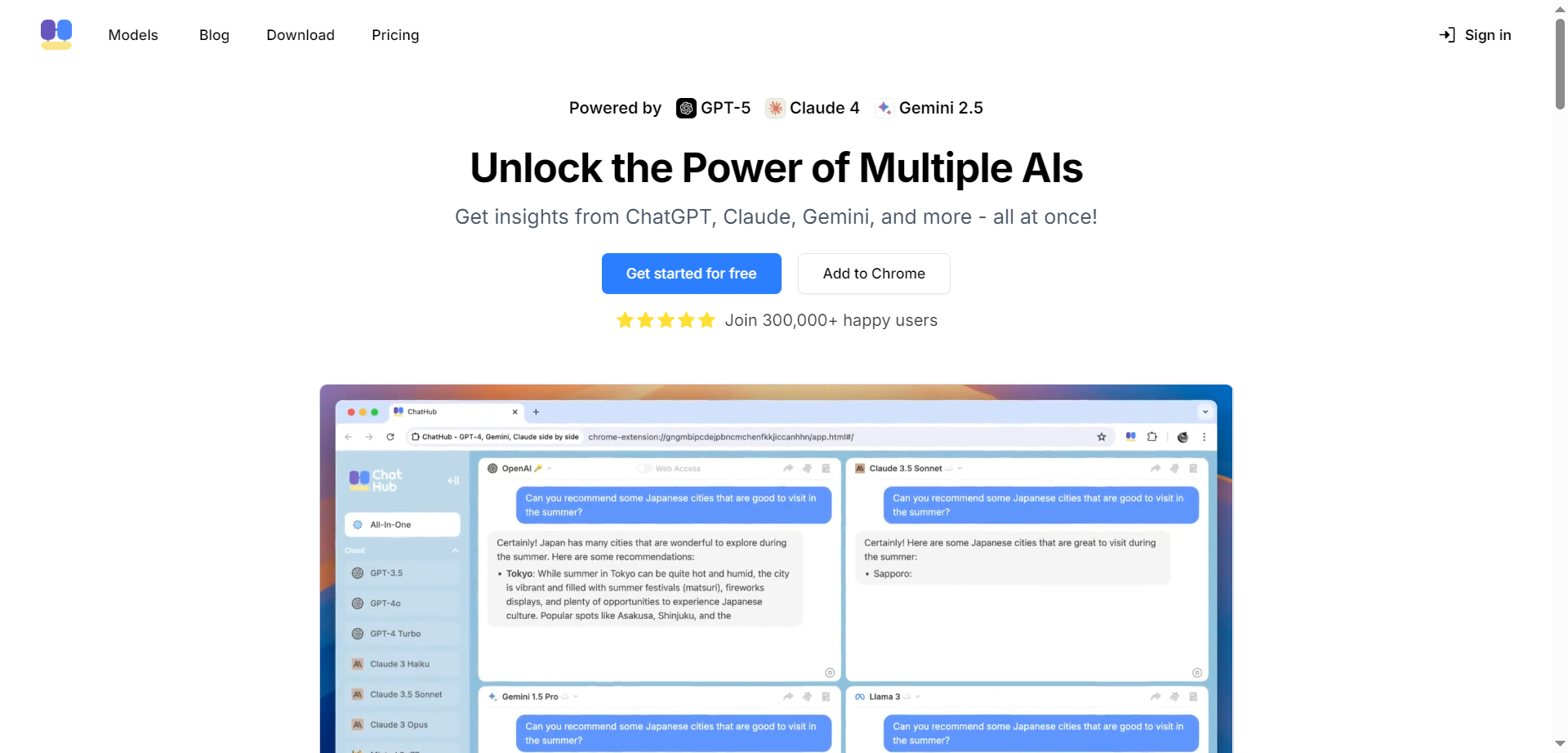

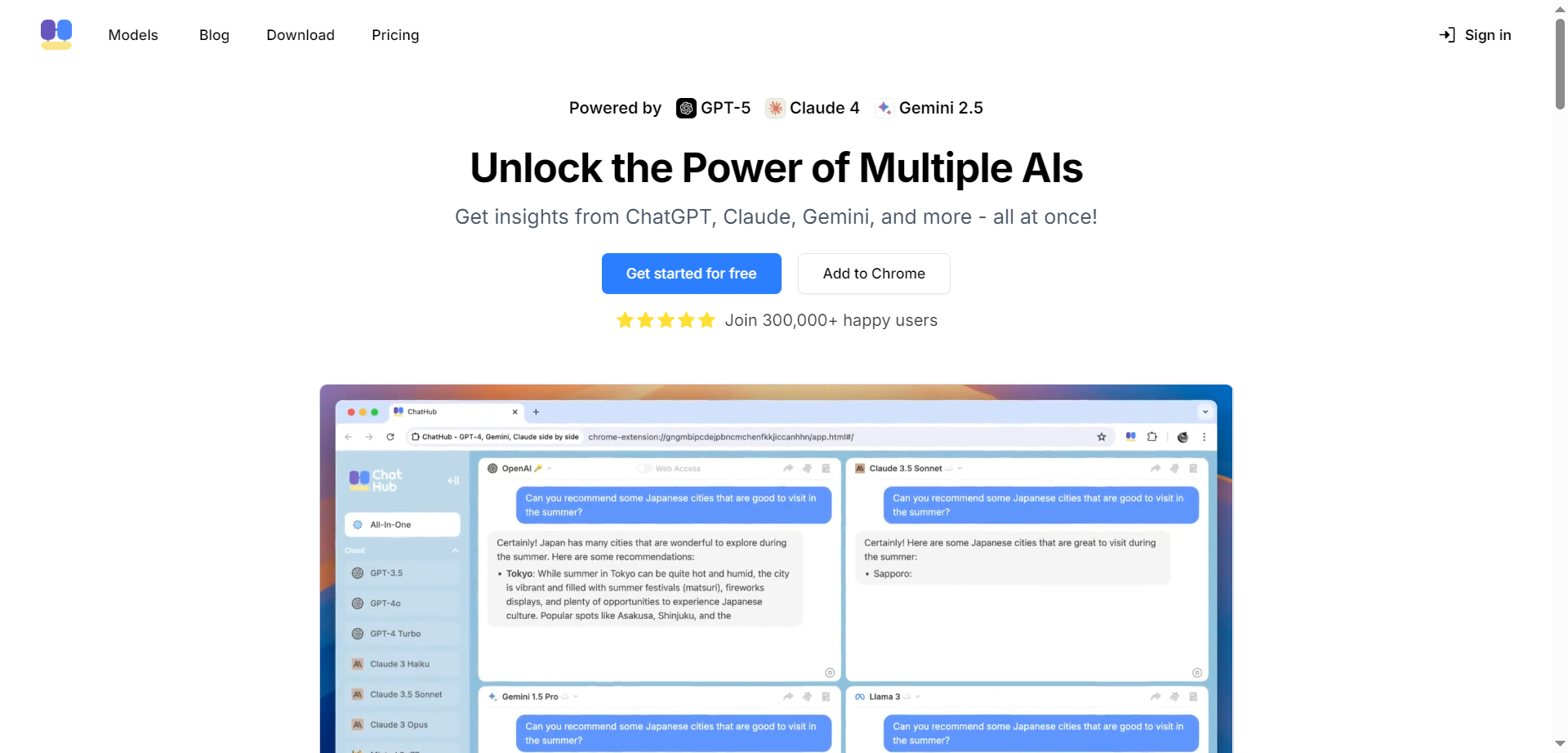

ChatHub

ChatHub is a platform that allows users to simultaneously interact with multiple AI chatbots, providing diverse AI-generated responses for enhanced insights and confidence. It supports top AI models like GPT-5, Claude 4, Gemini 2.5, Llama 3.3, and over 20 others, all accessible under one subscription. Users can input one question and receive multiple perspectives from these models, helping to cross-verify information and minimize hallucinations. ChatHub also includes AI-powered image generation using models such as DALL-E 3 and Stable Diffusion, file upload and analysis for documents and images, and features like AI-powered web search, code preview, prompt libraries, and productivity tools.

Ask AI

Ask AI (iAsk) is a free AI answer engine that lets you ask questions in natural language and receive instant, accurate, and factual answers, positioning itself as an alternative to traditional search engines and tools like ChatGPT. It focuses on helping users move quickly from question to solution with clear, concise responses, and can also summarize long web pages into easy-to-read bullet points, create images from simple text prompts, and check grammar with one click. With over 500 million searches processed and more than 1.4 million searches made daily, iAsk is built to accelerate research, improve learning, and save up to 80% of the time typically spent hunting for information. Its Pro tier adds advanced capabilities powered by benchmark-leading models.

AIToolly

AIToolly is a comprehensive directory showcasing the best AI tools and applications across diverse categories like image generation, video editing, music creation, chatbots, gaming, and productivity solutions. Users can discover, compare, and explore curated listings of cutting-edge AI software with detailed descriptions, ratings, user stats, and direct links to try tools instantly. Featuring latest additions like Nano Banana for image editing, JSON to Video for structured cinematic clips, OCMaker AI for anime characters, and RoomDesignAI for interior visuals, the platform simplifies finding perfect AI solutions through searchable categories, weekly updates, and sponsored highlights for quick decision-making.

AICoursify

AICoursify is a powerful AI-driven platform that enables educators, entrepreneurs, and content creators to develop complete online courses in minutes without any prior experience. By simply entering a course topic or title, users can leverage advanced artificial intelligence to generate structured course outlines, detailed lesson plans, and engaging quizzes automatically. The platform offers white-label functionality, allowing creators to fully own and sell their courses without any attribution to the tool itself. With customizable templates, keyword planning tools, and built-in marketing assistance, AICoursify removes the technical barriers of course creation, making professional e-learning accessible to everyone.

GPT Proto

GPT Proto provides developers with a unified API to access top AI models for text, image, video, and audio generation, advertising reliable uptime, fast responses, and low prices without the need to manage multiple keys or platforms. It aggregates leading models such as the GPT-5 series, Claude Opus/Sonnet/Haiku, Gemini variants, Grok, and specialized tools for creators, with discounts such as 40% off market rates on many of them. From solo projects to enterprise scale, it claims that 95% of requests receive a response within 20 seconds and half within 6 seconds, backed by redundant infrastructure and failover. Setup is quick: sign up, add credits, generate a single API key, and integrate it into apps, agents, or workflows for prototyping or production.
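
Latency claims of the form "95% of requests within 20 seconds, half within 6 seconds" describe the 95th percentile (p95) and the median (p50) of response times. You can check such claims against your own traffic by timing calls and computing percentiles. The sketch below uses the simple nearest-rank method; the sample timings are made up and are not measurements of GPT Proto, and `percentile` and `meets_claim` are hypothetical helpers.

```python
import math
from typing import List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_claim(latencies_s: List[float], p50_limit: float = 6.0,
                p95_limit: float = 20.0) -> bool:
    """True if the measured p50 and p95 are within the advertised limits (in seconds)."""
    return (percentile(latencies_s, 50) <= p50_limit
            and percentile(latencies_s, 95) <= p95_limit)


# Hypothetical response times in seconds from 20 test requests
sample = [2.1, 3.4, 4.0, 4.8, 5.2, 5.5, 5.9, 6.0, 6.3, 7.1,
          7.8, 8.5, 9.0, 10.2, 11.7, 12.4, 14.0, 16.8, 19.5, 27.3]
print(percentile(sample, 50), percentile(sample, 95), meets_claim(sample))
```

In this made-up sample the p95 stays under 20 seconds but the median does not stay under 6 seconds, so it would not meet both limits. Twenty requests is also too few for a stable p95; collect many more before drawing conclusions.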

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai