- Startups & Scaleups: The platform is built specifically for growing companies that need to automate tasks to scale their operations.

- Engineers & Developers: The first-class API support makes it an ideal tool for developers who want to build custom integrations and embed AI functionalities into their own applications.

- Business Owners: Leaders who want to streamline workflows and improve productivity across their organization.

How to Use It?

- Create an AI Assistant: Users can deploy custom AI assistants tailored to handle specific tasks, such as managing customer inquiries or project management.

- Choose a Model: The platform offers the flexibility to select from a wide range of AI providers and models, allowing users to match AI capabilities with their task requirements.

- Integrate & Automate: The AI assistants can be used via a chat interface or integrated into existing applications and workflows through Inweave's API.

- Flexible Model Selection: Unlike many AI tools, Inweave gives users the power to choose the best AI model for each specific task, providing a higher degree of customization and control.

- Strong API Support: Its first-class API allows for seamless integration into a company's existing technology stack, enabling deep automation of business processes.

- Built for Scaling: The platform is designed to help businesses grow by automating repetitive tasks, allowing teams to focus on more complex, strategic work.

- Security Focused: Inweave takes a proactive approach to security and data safety, giving users peace of mind.

- Customization: The ability to choose from a wide range of AI models makes it a versatile tool that can be adapted to many different needs.

- Automation: The strong API and easy integration features are ideal for businesses looking to automate their workflows and save time.

- Scalability: It is designed to grow with a business, providing a solution that can handle increasing demands as a company expands.

- Limited Information on Pricing: While the site mentions "transparent pricing" with "no minimums," a lack of detailed pricing on the homepage can make it difficult for businesses to budget.

- Potential Learning Curve: While it offers ease of integration, setting up and managing multiple AI assistants and their APIs may require some technical knowledge.
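The "Choose a Model" and "Integrate & Automate" points above can be sketched as a small routing step that picks a model per task and builds a request body. This is only an illustration: the model names, the `AssistantRequest` class, and the payload fields are hypothetical and are not taken from Inweave's API documentation, which should be consulted for the real request format.

```python
import json
from dataclasses import dataclass

# Hypothetical task-to-model routing table; the model names are placeholders,
# not a list of models Inweave actually offers.
MODEL_FOR_TASK = {
    "customer_inquiry": "fast-chat-model",
    "project_summary": "long-context-model",
}
DEFAULT_MODEL = "general-model"


@dataclass
class AssistantRequest:
    assistant_id: str
    task: str
    message: str

    def to_payload(self) -> dict:
        """Build a JSON-serializable body for a hypothetical chat endpoint."""
        return {
            "assistant": self.assistant_id,
            # Fall back to a general model when the task has no specific entry.
            "model": MODEL_FOR_TASK.get(self.task, DEFAULT_MODEL),
            "messages": [{"role": "user", "content": self.message}],
        }


request = AssistantRequest("support-bot", "customer_inquiry", "Where is my order?")
body = json.dumps(request.to_payload())
```

Keeping the routing table separate from the request builder means a team can change which model handles a task without touching the integration code.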

Pricing: Paid (custom)

Similar AI Tools

Build by NVIDIA

Build by NVIDIA is a developer-focused platform showcasing blueprints and microservices for building AI-powered applications using NVIDIA’s NIM (NVIDIA Inference Microservices) ecosystem. It offers plug-and-play workflows such as enterprise research agents, RAG pipelines, video summarization assistants, and AI-powered virtual assistants, all optimized for scalability, latency, and multimodal capabilities.

Build by Nvidia

Build by NVIDIA is a developer-focused platform showcasing blueprints and microservices for building AI-powered applications using NVIDIA’s NIM (NeMo Inference Microservices) ecosystem. It offers plug-and-play workflows like enterprise research agents, RAG pipelines, video summarization assistants, and AI-powered virtual assistants—all optimized for scalability, latency, and multimodal capabilities.

Build by Nvidia

Build by NVIDIA is a developer-focused platform showcasing blueprints and microservices for building AI-powered applications using NVIDIA’s NIM (NeMo Inference Microservices) ecosystem. It offers plug-and-play workflows like enterprise research agents, RAG pipelines, video summarization assistants, and AI-powered virtual assistants—all optimized for scalability, latency, and multimodal capabilities.

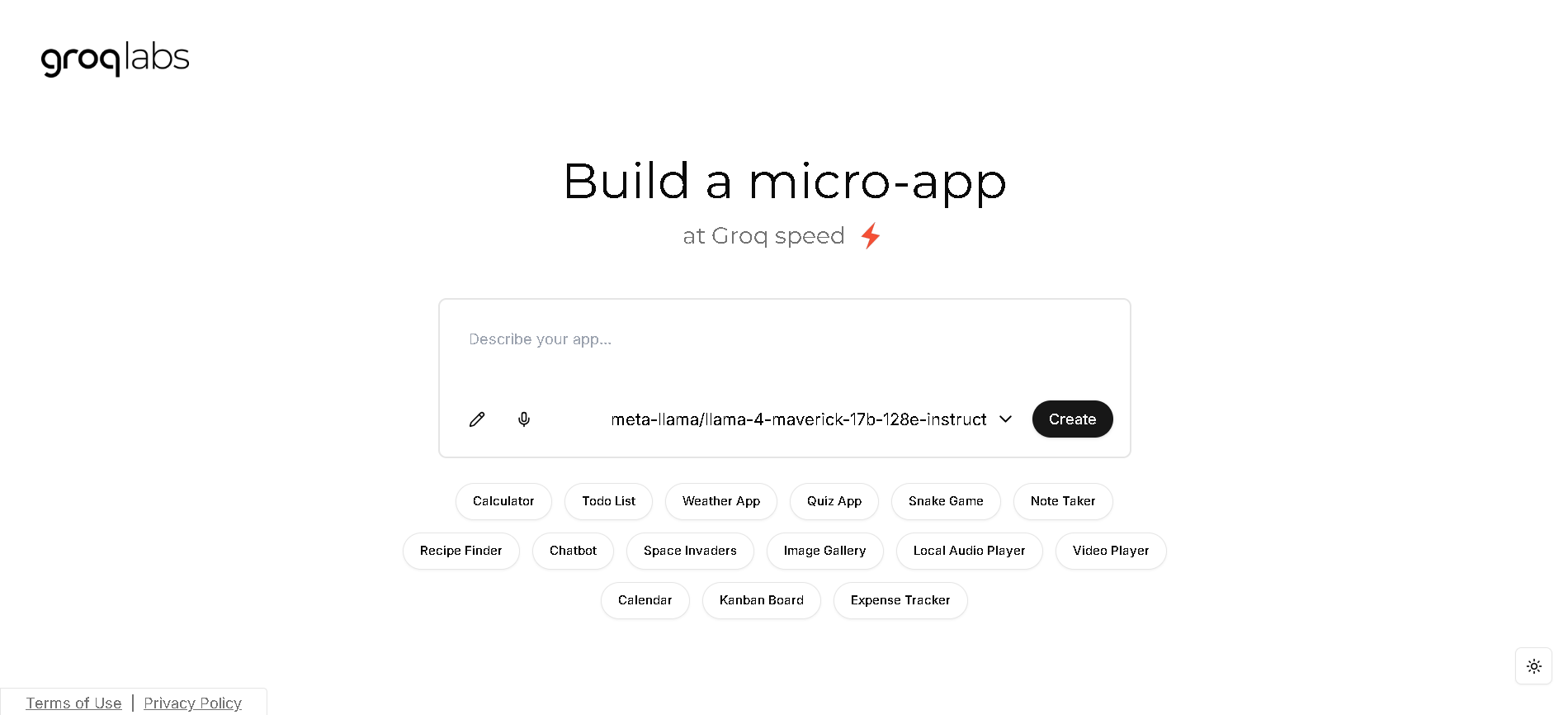

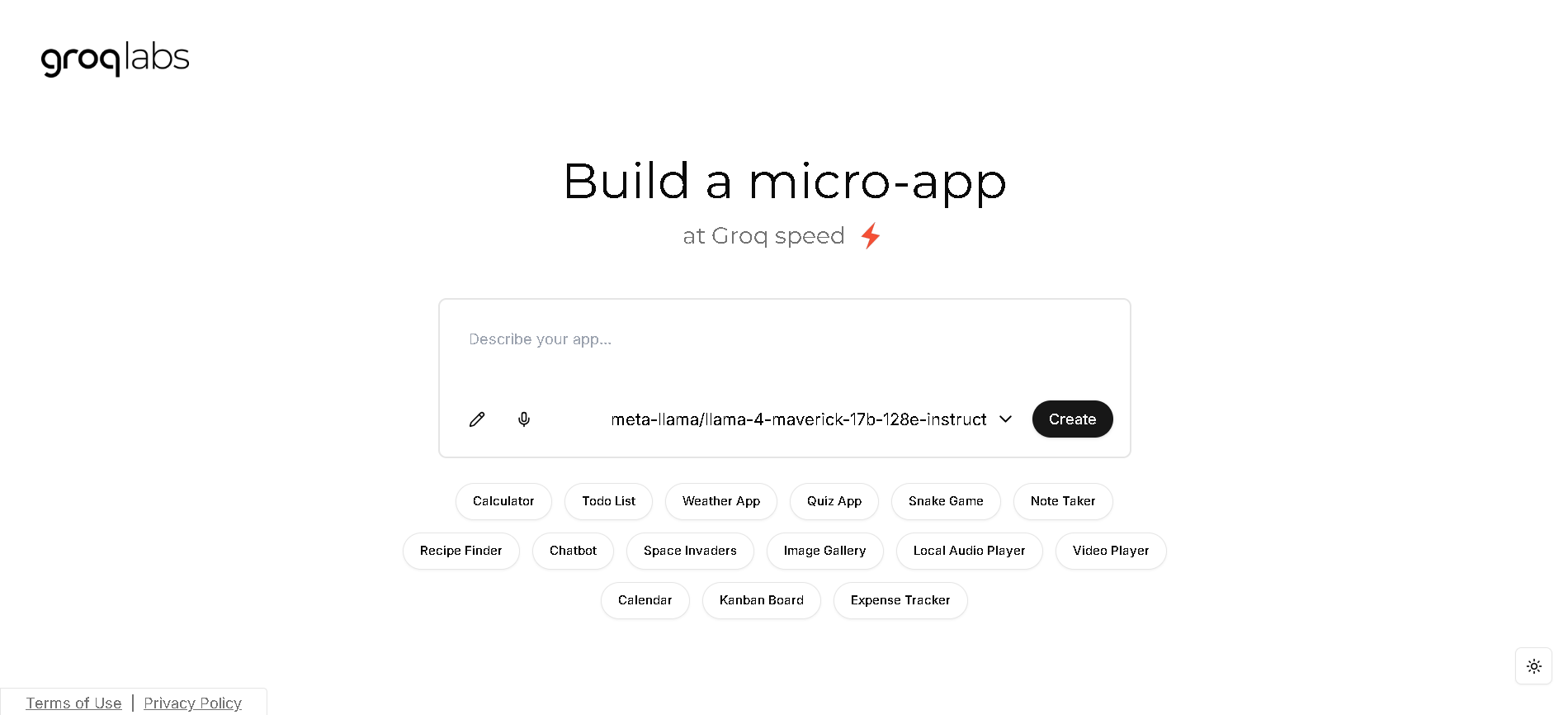

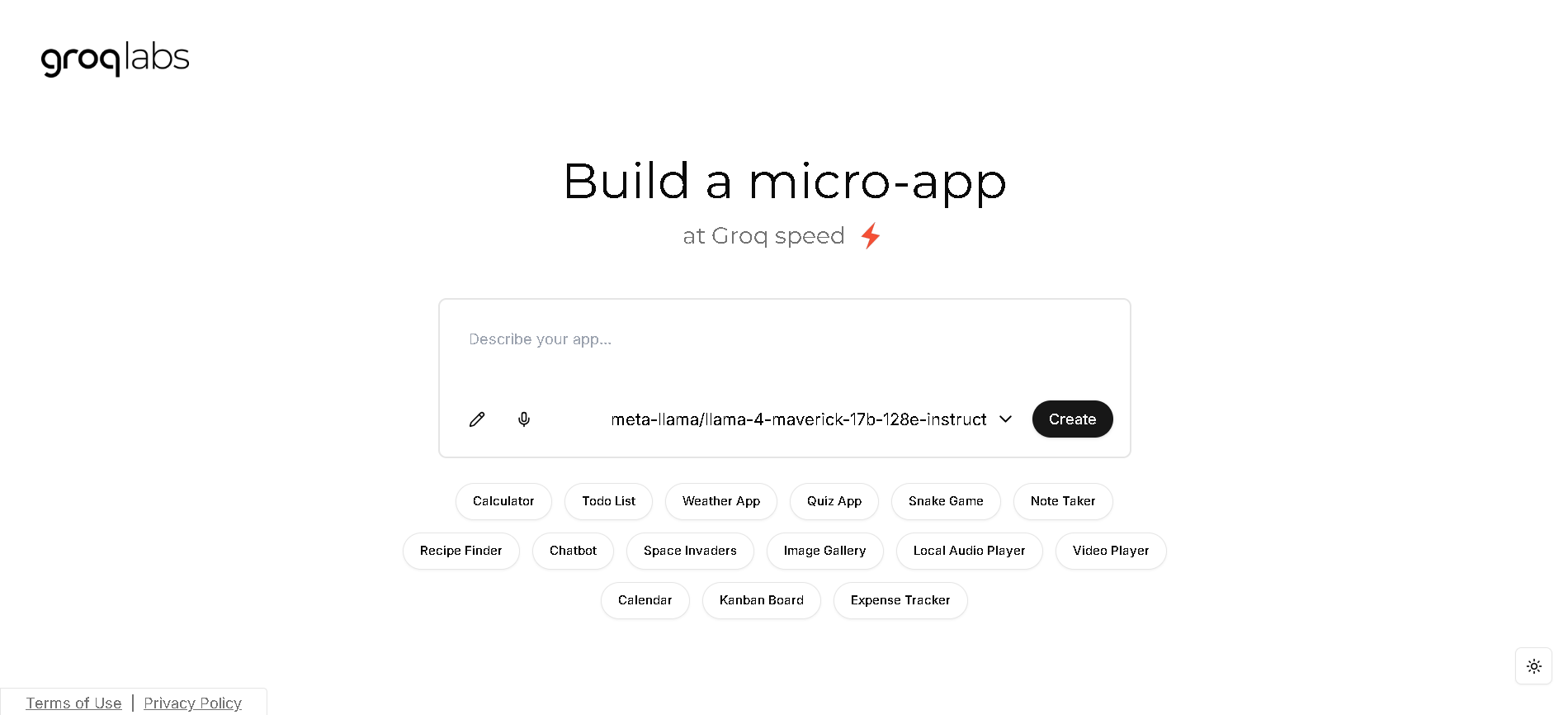

Groq AppGen

Groq AppGen is a web-based tool that uses AI to generate and modify web applications in real time. Powered by Groq's LLM API and the Llama 3.3 70B model, it allows users to create full-stack applications and components from simple natural-language queries. The platform's primary purpose is to accelerate development by generating code in milliseconds, providing an open-source solution for both developers and "no-code" users.

Groq APP Gen

Groq AppGen is an innovative, web-based tool that uses AI to generate and modify web applications in real-time. Powered by Groq's LLM API and the Llama 3.3 70B model, it allows users to create full-stack applications and components using simple, natural language queries. The platform's primary purpose is to dramatically accelerate the development process by generating code in milliseconds, providing an open-source solution for both developers and "no-code" users.

Groq APP Gen

Groq AppGen is an innovative, web-based tool that uses AI to generate and modify web applications in real-time. Powered by Groq's LLM API and the Llama 3.3 70B model, it allows users to create full-stack applications and components using simple, natural language queries. The platform's primary purpose is to dramatically accelerate the development process by generating code in milliseconds, providing an open-source solution for both developers and "no-code" users.

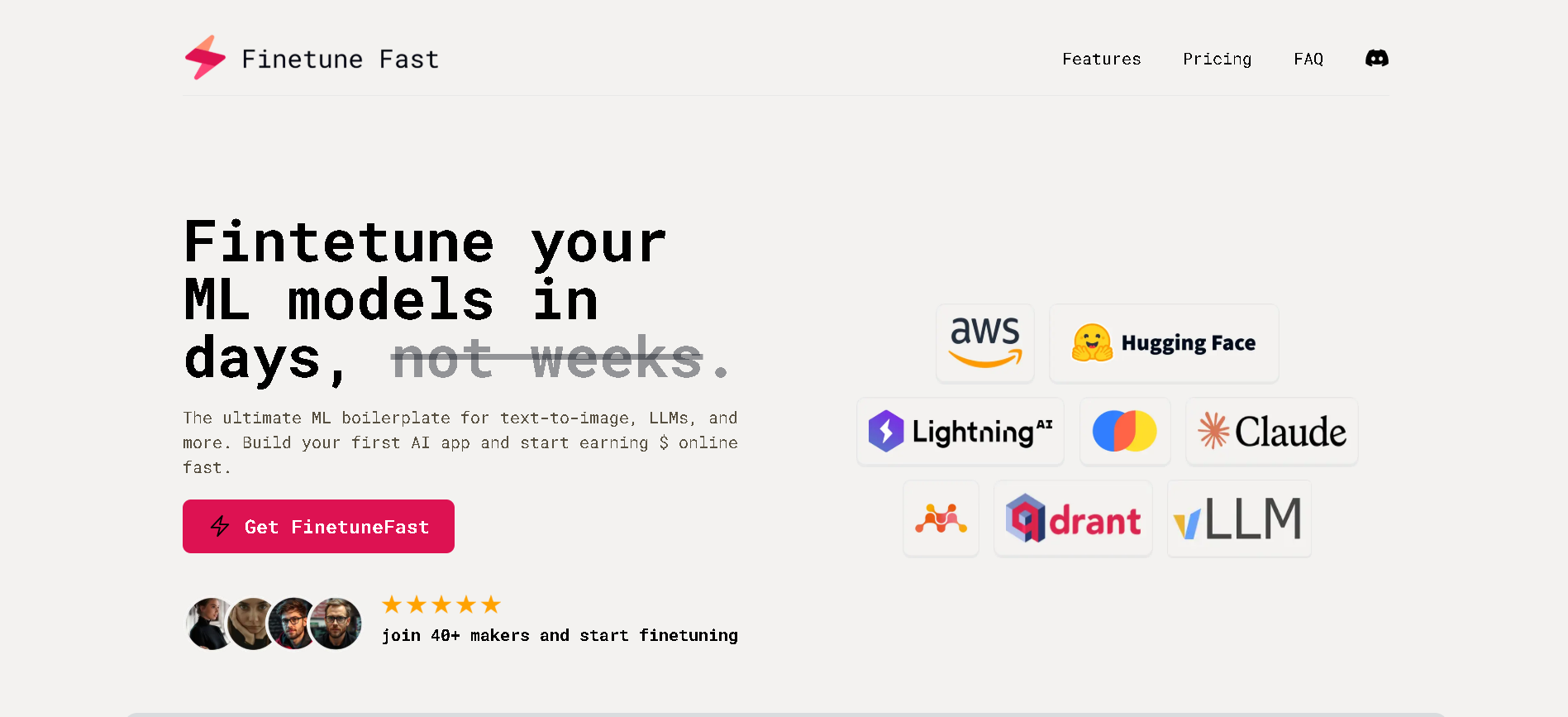

FinetuneFast

FinetuneFast.com is a platform designed to reduce the time and complexity of launching AI models, cutting the time needed to fine-tune and deploy machine learning models from weeks to days. It provides a comprehensive ML boilerplate and a suite of tools for various AI applications, including text-to-image models and Large Language Models (LLMs). The platform aims to accelerate the development, production, and monetization of AI applications by offering pre-configured training scripts, efficient data loading, optimized infrastructure, and one-click deployment solutions.

Stakly

Stakly.dev is an AI-powered full-stack app builder that lets users design, code, and deploy web applications without writing boilerplate by hand. You describe the app idea in plain language, set up data models, pages, and UI components through an intuitive interface, and Stakly generates production-ready code (a React front end with a Supabase or equivalent backend) and handles deployment to platforms like Vercel or Netlify. It offers a monthly free token allotment so you can experiment, supports live previews so you can see your app as you build, integrates with GitHub for code versioning, and is capable enough to build dashboards, SaaS tools, admin panels, and e-commerce sites. While it does not replace a full engineering team for deeply custom or extremely large-scale systems, Stakly lowers the technical barrier significantly: non-technical founders, product managers, solo makers, and small agencies can use it to create usable, polished apps in minutes instead of weeks.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.
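To make the retrieval-augmented generation (RAG) idea concrete, here is a minimal, library-free sketch of the pattern: pick the most relevant document for a question, then combine it with the question into one prompt. It deliberately does not use LangChain's own API; the function names, the word-overlap scoring, and the sample documents are all assumptions chosen for illustration, and a real system would typically use embeddings and an actual model call.

```python
def score(query: str, document: str) -> int:
    """Count how many distinct query words also appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    return len(query_words & doc_words)


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents with the highest word overlap with the query."""
    return sorted(documents, key=lambda d: score(query, d), reverse=True)[:k]


def build_prompt(query: str, documents: list[str]) -> str:
    """Combine retrieved context and the question into a single prompt string."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base used only for this example.
docs = [
    "Refunds are processed within five business days.",
    "Support is available on weekdays from nine to five.",
]
prompt = build_prompt("When are refunds processed?", docs)
```

The resulting `prompt` string is what would be sent to a language model, so the model answers from the retrieved context rather than from its training data alone.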

Ask Any Model

AskAnyModel is a unified AI interface that allows users to interact with multiple leading AI models — such as GPT, Claude, Gemini, and Mistral — from a single platform. It eliminates the need for multiple subscriptions and interfaces by bringing top AI models into one streamlined environment. Users can compare responses, analyze outputs, and select the best AI model for specific tasks like content creation, coding, data analysis, or research. AskAnyModel empowers individuals and teams to harness AI diversity efficiently, offering advanced tools for prompt testing, model benchmarking, and workflow integration.

Mobisoft Infotech

MI Team AI is a robust multi-LLM platform designed for enterprises seeking secure, scalable, and cost-effective AI access. It consolidates multiple AI models such as ChatGPT, Claude, Gemini, and various open-source large language models into a single platform, enabling users to switch seamlessly without juggling different tools. The platform supports deployment on private cloud or on-premises infrastructure to ensure complete data privacy and compliance. MI Team AI provides a unified workspace with role-based access controls, single sign-on (SSO), and comprehensive chat logs for transparency and auditability. It offers fixed licensing fees allowing unlimited team access under the company’s brand, making it ideal for organizations needing full control over AI usage.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Awan LLM

Awan LLM is a cost-effective, unlimited token large language model inference API platform designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription model that enables users to send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLM models without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

Convolut

Convolut is a smart platform called ConAir that acts as a central hub for managing, organizing, and streaming context snippets to Large Language Models and AI agents. It lets users store knowledge bases, retrieve info quickly, and export data to supercharge AI interactions with relevant context. Perfect for developers and teams, it handles everything from basic storage to advanced organization, making AI responses more accurate and nuanced by feeding in the right details every time. Teams love how it streamlines workflows, cuts down on manual data hunting, and boosts AI performance across projects.

Langdock

Langdock is an enterprise-ready AI platform that allows organizations to deploy AI securely across all employees while giving developers the tools to build and customize advanced AI workflows. It centralizes AI access, policy controls, and workflow automation into one system, making corporate AI adoption organized and compliant. Langdock supports custom workflows, integrations, and internal tools, ensuring that teams across departments can use AI productively while maintaining governance and security. It is designed for full organizational rollout—from individual employees to technical developer teams.

Synorex

Synorex One is a private, secure AI workspace that provides access to multiple leading large language models within a single enterprise platform. Designed specifically to go beyond basic chatbot functionality, Synorex One offers a robust, flexible environment that allows organizations to leverage AI at scale. Teams can work with different models, manage data safely, build internal workflows, and generate business insights securely. The platform emphasizes privacy, enterprise readiness, and multi-model flexibility to support complex professional needs.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai