- Startups & Product Teams: Teams creating new AI-based SaaS products, assistants, or integrations for end-users.

- Enterprise Engineering Teams: Organisations connecting private data to LLMs for internal chatbots and automation workflows.

- Educators & Researchers: Academics experimenting with prompt engineering, multi-step reasoning, and model orchestration.

- Automation Specialists: Teams automating customer support, document summarisation, and content generation workflows.

How to Use It?

- Install the Framework: Set up LangChain in Python or JavaScript depending on your tech stack.

- Connect a Language Model: Link any LLM such as GPT or Claude to the LangChain environment.

- Integrate Data Sources: Connect your structured databases, APIs, or document repositories using retrievers or vector stores.

- Build Chains & Agents: Use LangChain components to create logical steps for data flow, reasoning, and multi-agent communication.

- Add Memory & Context: Configure memory modules to let the model retain conversational or contextual information.

- Deploy & Scale: Integrate with your backend or serve via APIs to make your AI system production-ready.
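
The steps above can be sketched without any dependencies. The following is a minimal, library-free Python illustration of the flow (retrieve context, build a prompt, call a model, remember the turn). It does not use LangChain's actual API, and every name in it (`KeywordRetriever`, `ConversationMemory`, `fake_llm`, `answer`) is hypothetical. In a real project each piece would presumably be swapped for the corresponding LangChain component and a real model client.

```python
from dataclasses import dataclass, field


@dataclass
class KeywordRetriever:
    """Toy retriever: ranks documents by word overlap with the query."""
    documents: list[str]

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        query_words = set(query.lower().split())
        ranked = sorted(
            self.documents,
            key=lambda doc: len(query_words & set(doc.lower().split())),
            reverse=True,
        )
        return ranked[:k]


@dataclass
class ConversationMemory:
    """Toy memory: keeps prior (question, answer) turns in order."""
    turns: list[tuple[str, str]] = field(default_factory=list)

    def as_text(self) -> str:
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it only reports the prompt size."""
    return f"(stub reply to a {len(prompt)}-character prompt)"


def answer(question, retriever, memory, llm=fake_llm):
    """Retrieve context, assemble a prompt, call the model, store the turn."""
    context = "\n".join(retriever.retrieve(question))
    prompt = (
        f"History:\n{memory.as_text()}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
    reply = llm(prompt)
    memory.turns.append((question, reply))
    return reply
```

Passing the model in as a plain callable keeps the sketch testable offline; a real deployment would replace `fake_llm` with a call to a hosted or local model.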

Key Features

- Highly Modular Design: Each component (chains, retrievers, agents, prompts) can be used independently or combined flexibly.

- Supports Multi-Modal AI: Enables integration of text, structured data, APIs, and even audio or image models.

- Enterprise-Grade Integration: Can be deployed securely with private data, internal APIs, and fine-tuned models.

- Community & Ecosystem: Supported by a large open-source community with frequent updates and integrations.

- Foundation for RAG Systems: LangChain is widely used as a foundation for retrieval-augmented generation (RAG) systems.
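
To make the modular-design point concrete, here is a small sketch of pipe-style composition in plain Python. LangChain's own expression language offers a similar `|` syntax for chaining components, but the `Step` class below is a hypothetical stand-in written for illustration, not LangChain's implementation, and `stub_model` is a placeholder for a real LLM call.

```python
from typing import Any, Callable


class Step:
    """Hypothetical wrapper that makes plain functions composable with `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # The combined step feeds this step's output into the next one.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


build_prompt = Step(lambda topic: f"Write one sentence about {topic}.")
stub_model = Step(lambda prompt: prompt.upper())  # placeholder for an LLM call
strip_output = Step(lambda text: text.strip())

# Each step works on its own, or as part of a longer pipeline.
pipeline = build_prompt | stub_model | strip_output
print(pipeline.invoke("vector stores"))
# prints "WRITE ONE SENTENCE ABOUT VECTOR STORES."
```

Because every step exposes the same `invoke` method, any one of them can be tested or replaced without touching the others.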

Pros

- Extremely flexible for developers and enterprises.

- Great documentation and active open-source ecosystem.

- Supports integration with numerous data types and APIs.

- Ideal for custom AI solutions and agent-based automation.

- Modular, extensible, and production-ready.

Cons

- Requires coding experience; not ideal for non-technical users.

- Setup complexity increases with large-scale applications.

- Heavy dependency management for advanced integrations.

- Performance can vary based on model configuration and data retrieval setup.

Pricing

- Developer: Free
- Plus: $39.00
- Enterprise: Custom

FAQs

Yes — it is a developer-focused framework for building AI applications.

Yes — you can integrate databases, PDFs, and APIs securely into your workflow.

No — it supports document retrieval, analytics, workflow automation, and more.

Yes — LangChain is model-agnostic and supports all major LLM providers.

Yes — it supports production-level deployment and scaling for enterprise needs.
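
The model-agnostic answer above rests on a simple idea: application code talks to a common interface rather than to one vendor's client. The sketch below shows that idea in plain Python with `typing.Protocol`. It is an illustration under stated assumptions, not LangChain's API; `ChatModel`, `EchoModel`, `ReverseModel`, and `summarise` are hypothetical names, and the two model classes are toy stand-ins for real provider clients.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything with a `complete` method can act as a model here."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy provider A: returns the prompt with a prefix."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ReverseModel:
    """Toy provider B: returns the prompt reversed."""

    def complete(self, prompt: str) -> str:
        return prompt[::-1]


def summarise(text: str, model: ChatModel) -> str:
    # The application code never names a specific provider.
    return model.complete(f"Summarise: {text}")


print(summarise("abc", EchoModel()))     # prints "echo: Summarise: abc"
print(summarise("abc", ReverseModel()))  # prints "cba :esirammuS"
```

Swapping providers then becomes a one-argument change at the call site.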

Similar AI Tools

Unsloth AI

Unsloth.AI is an open-source platform designed to accelerate and simplify the fine-tuning of large language models (LLMs). Using hand-derived mathematics, custom GPU kernels, and efficient optimization techniques, Unsloth reports training speeds up to 30x faster than traditional methods without compromising model accuracy. It supports a wide range of popular models, including Llama, Mistral, Gemma, and BERT, and runs on a variety of GPUs, from the entry-level Tesla T4 to the high-end H100, as well as AMD and Intel GPUs. This lets developers, researchers, and AI enthusiasts fine-tune models efficiently even with limited computational resources. With its focus on performance, scalability, and flexibility, Unsloth.AI is suitable for both academic research and commercial applications.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

Soket AI

Soket AI is an Indian deep-tech startup building sovereign, multilingual foundational AI models and real-time voice/speech APIs designed for Indic languages and global scale. By focusing on language diversity, cultural context and ethical AI, Soket AI aims to develop models that recognise and respond across many languages, while delivering enterprise-grade capabilities for sectors such as defence, healthcare, education and governance.

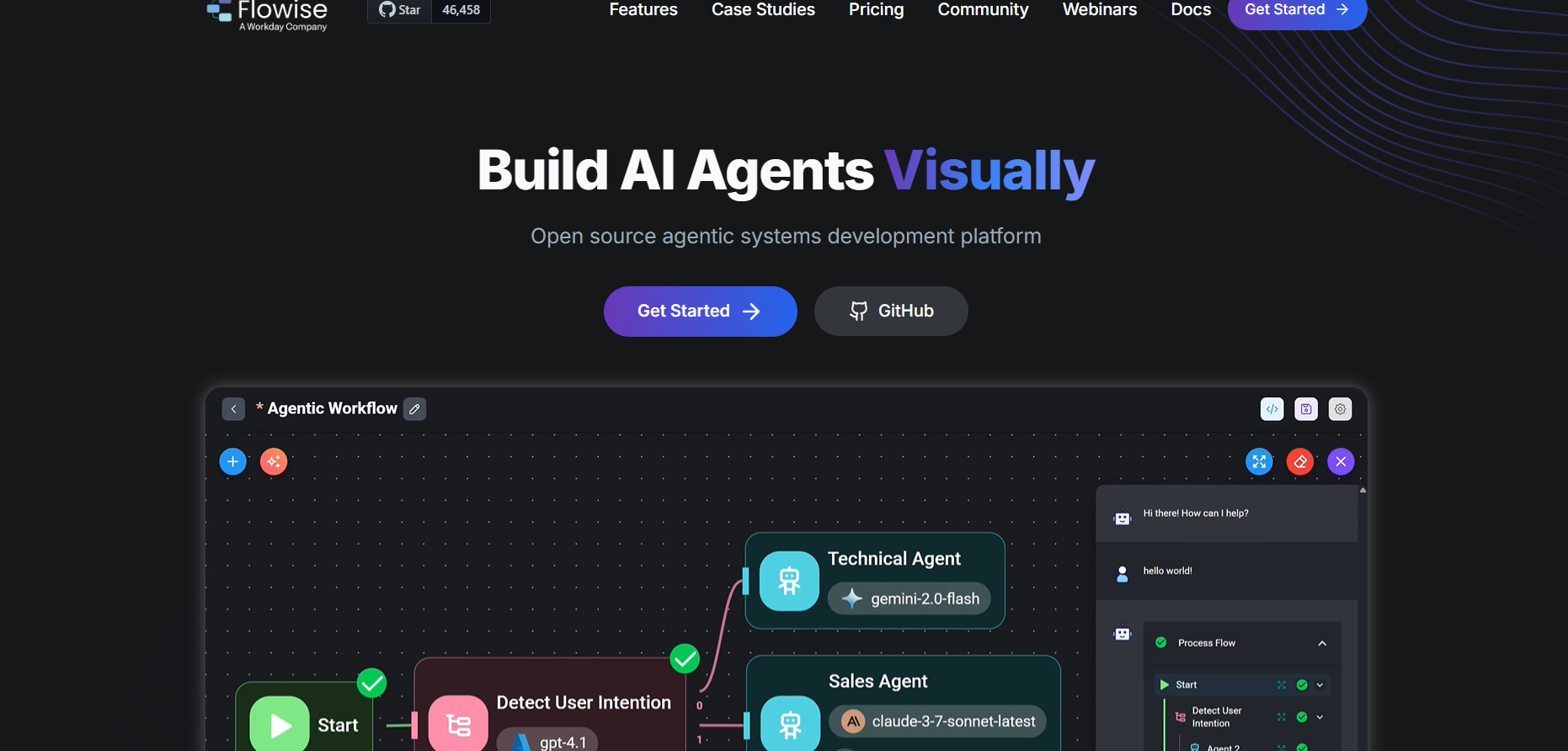

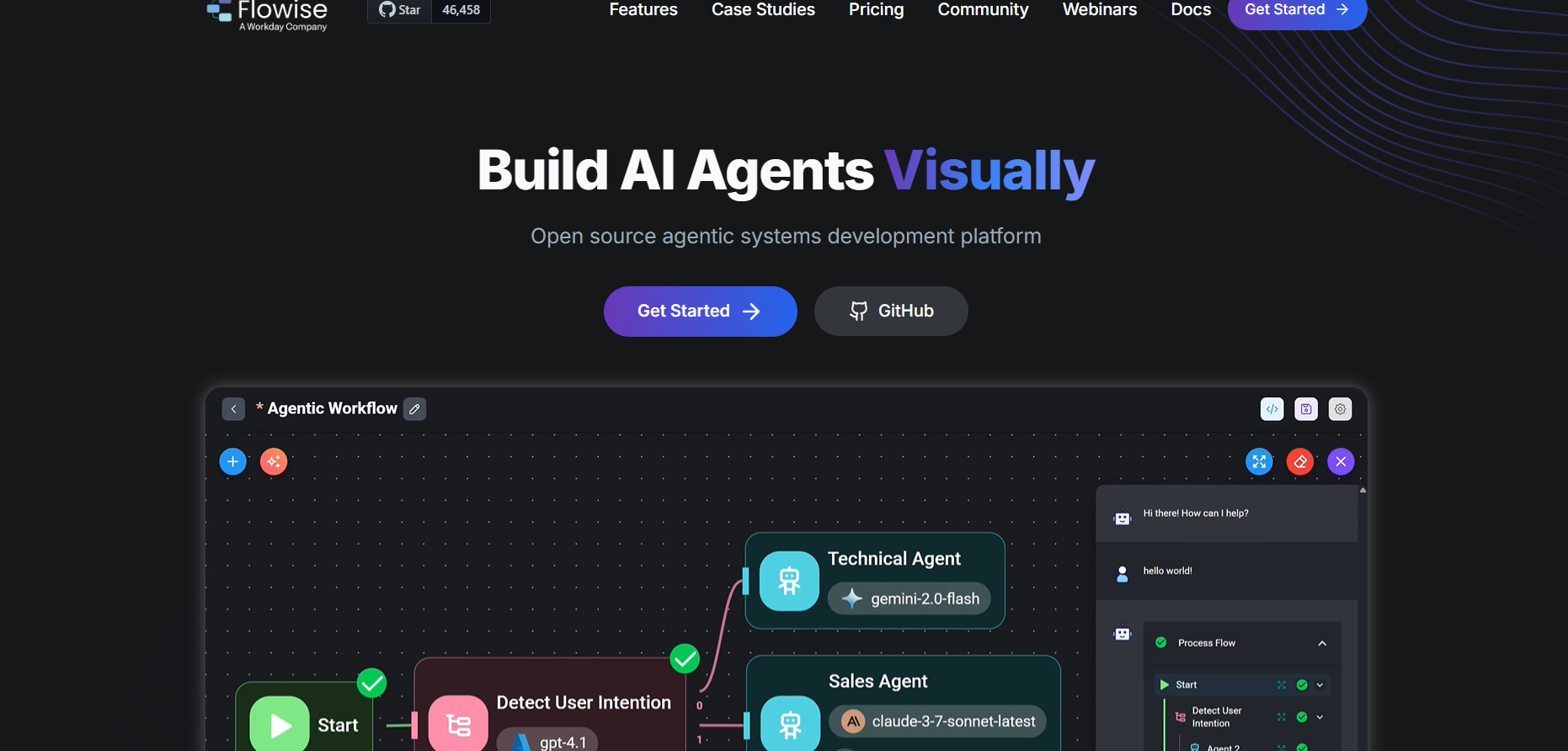

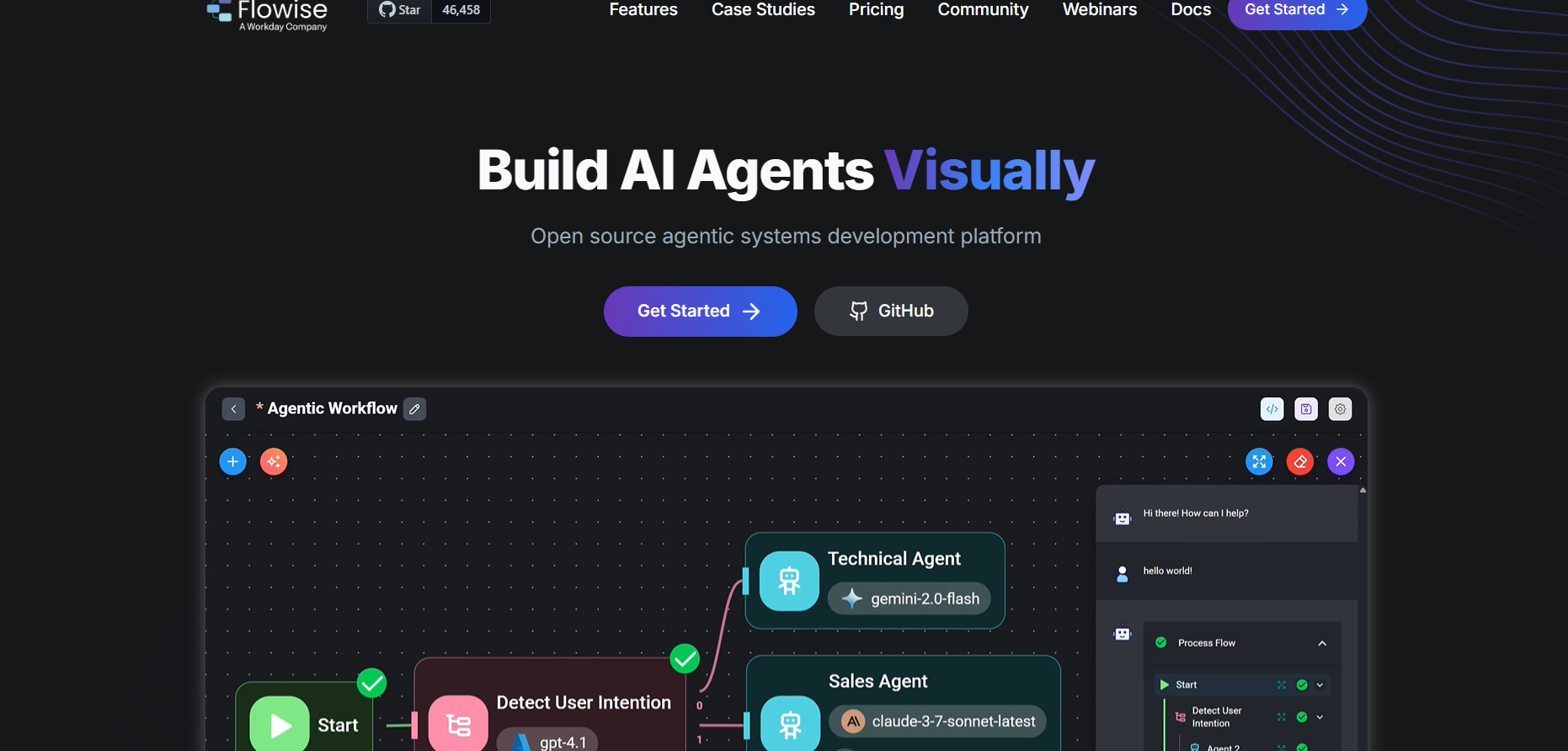

Flowise AI

Flowise AI is an open-source, visual tool that allows users to build, deploy, and manage AI workflows and chatbots powered by large language models without needing to code. It provides a drag-and-drop interface where users can visually connect LangChain components, APIs, data sources, and models to create complex AI systems easily. With Flowise AI, developers, analysts, and businesses can build chatbots, RAG pipelines, or automation systems through an intuitive UI rather than scripting everything manually. Its no-code design accelerates prototyping and deployment, enabling faster experimentation with LLM-powered workflows.

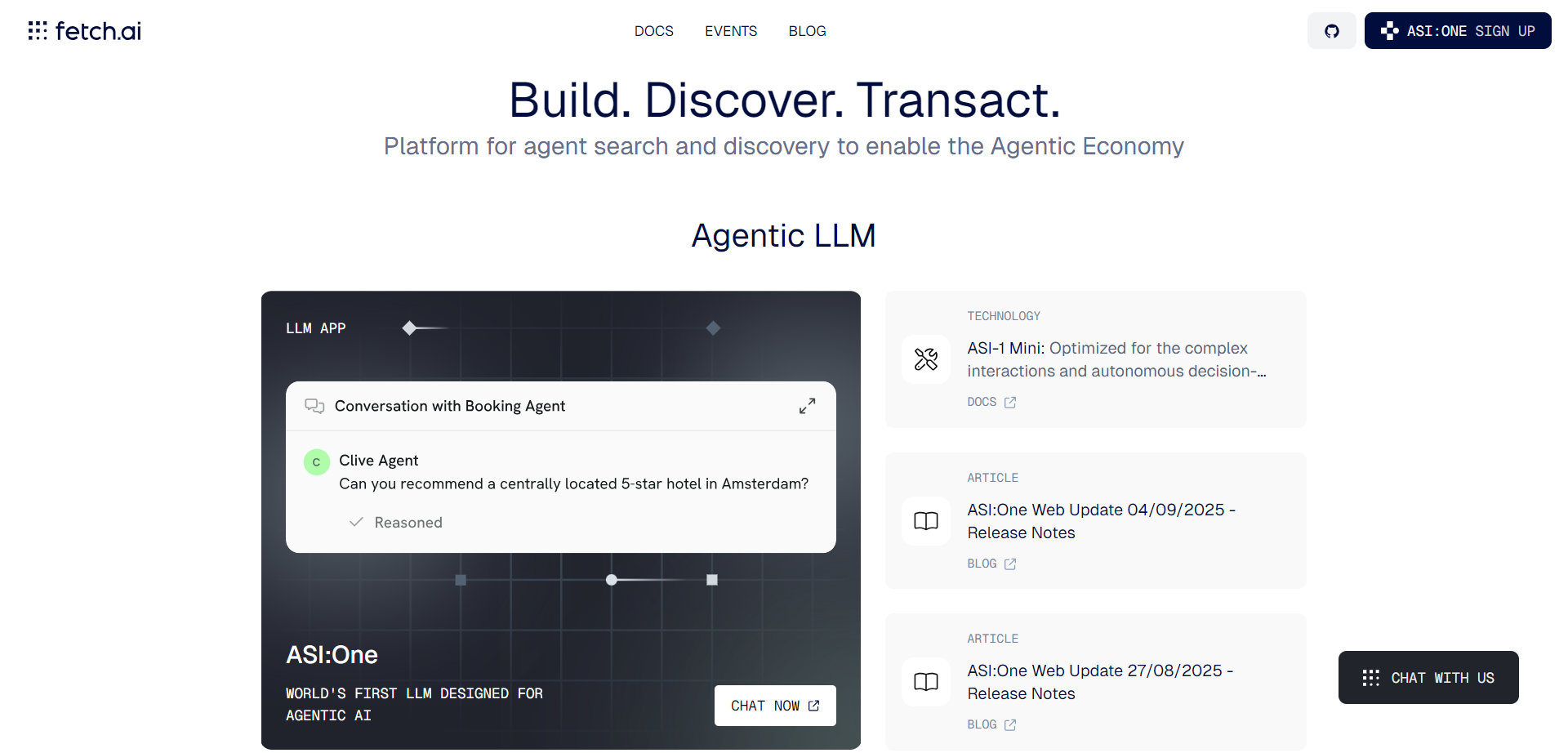

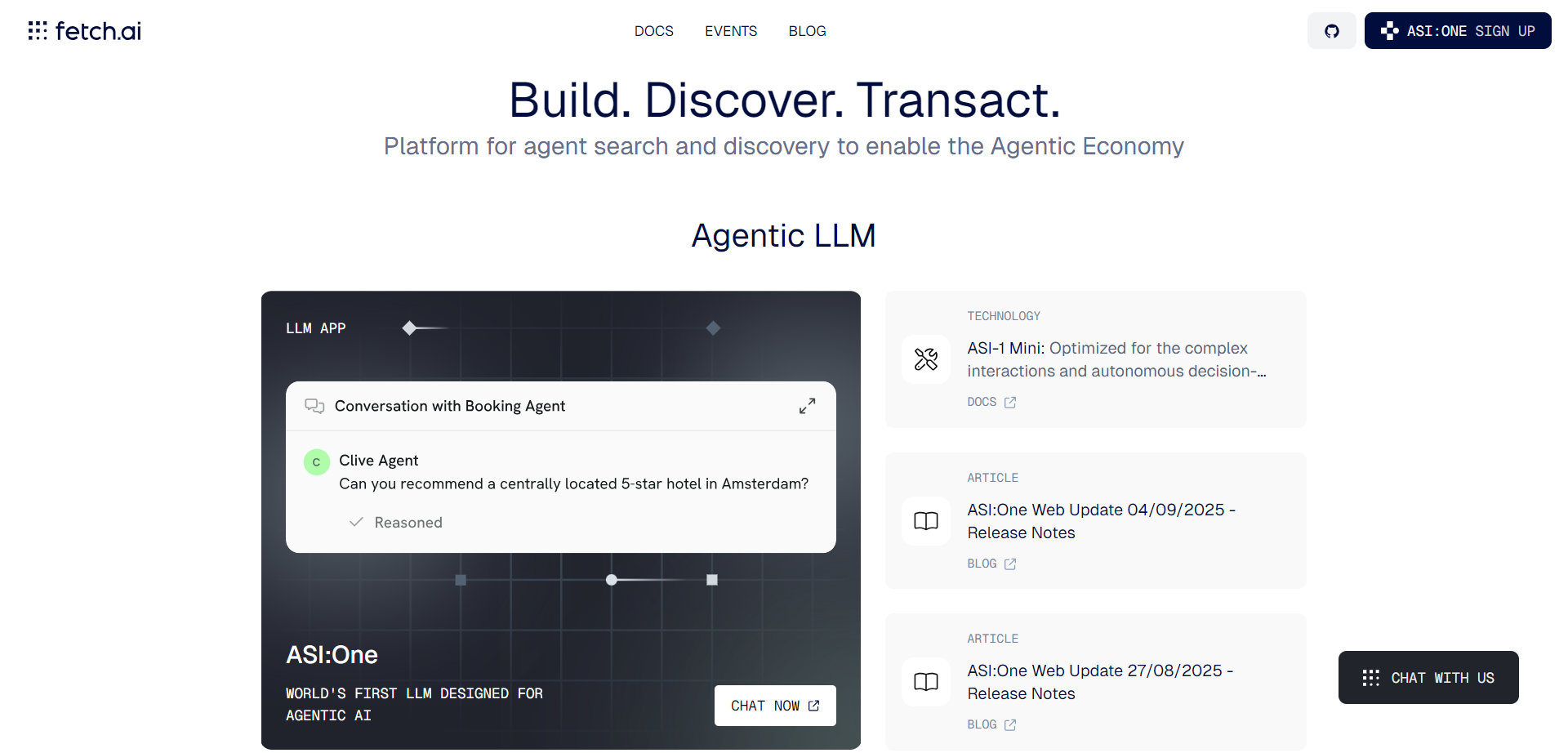

Fetch.ai

Fetch.ai is a decentralized AI platform built to power the emerging agentic economy by enabling autonomous AI agents to interact, transact, and collaborate across digital and real-world environments. The platform combines blockchain technology with advanced machine learning to create a network where millions of AI agents operate independently yet connect seamlessly to solve complex tasks such as supply chain automation, personalized services, and data sharing. Fetch.ai offers a complete technology stack, including personal AI assistants, developer tools, and business automation solutions, designed for real-world impact. Its open ecosystem supports flexibility, privacy, and interoperability, empowering users and enterprises to build, discover, and transact through intelligent, autonomous AI agents.

Build My Agents

BuildMyAgents AI is a no-code platform that allows users to create, train, and deploy AI agents for tasks like customer support, data handling, or automation. It simplifies complex AI development by providing a visual builder and pre-configured logic templates that anyone can customize without coding. Users can integrate APIs, connect data sources, and configure multi-agent workflows that collaborate intelligently. Whether for startups or enterprise solutions, BuildMyAgents AI empowers teams to automate operations and deploy AI systems quickly with full transparency and control.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Awan LLM

Awan LLM is a cost-effective, unlimited-token inference API platform for large language models, designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription that lets users send and receive unlimited tokens, up to the model's context limit. It supports unrestricted use of LLMs without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases, including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications, without users having to worry about token limits or costs.

LM Studio

LM Studio is a local large language model (LLM) platform that lets users download and run AI language models such as Llama, MPT, and Gemma directly on their own computers. It supports Mac, Windows, and Linux. LM Studio focuses on privacy and control by letting users work with AI models locally rather than through cloud-based services, so data stays on the user's device. It offers an easy-to-install interface with step-by-step setup guidance, giving developers, researchers, and AI enthusiasts access to advanced AI capabilities without an internet connection once the models have been downloaded.

potpie.ai

Potpie.ai is an innovative AI agent platform that enables developers to create intelligent agents for their codebase in just minutes, transforming software development through automated understanding and interaction. Designed specifically for codebase analysis, it allows users to deploy AI agents that comprehend entire repositories, perform tasks like code reviews, bug detection, refactoring suggestions, and feature implementation autonomously. The platform emphasizes speed and simplicity, requiring minimal setup to integrate with existing projects on GitHub or local environments. Key capabilities include natural language queries for code exploration, multi-agent collaboration for complex workflows, and seamless integration with popular IDEs like VS Code. By leveraging advanced LLMs fine-tuned for code, Potpie.ai boosts productivity for solo developers and teams alike, making AI-assisted coding accessible without deep expertise.

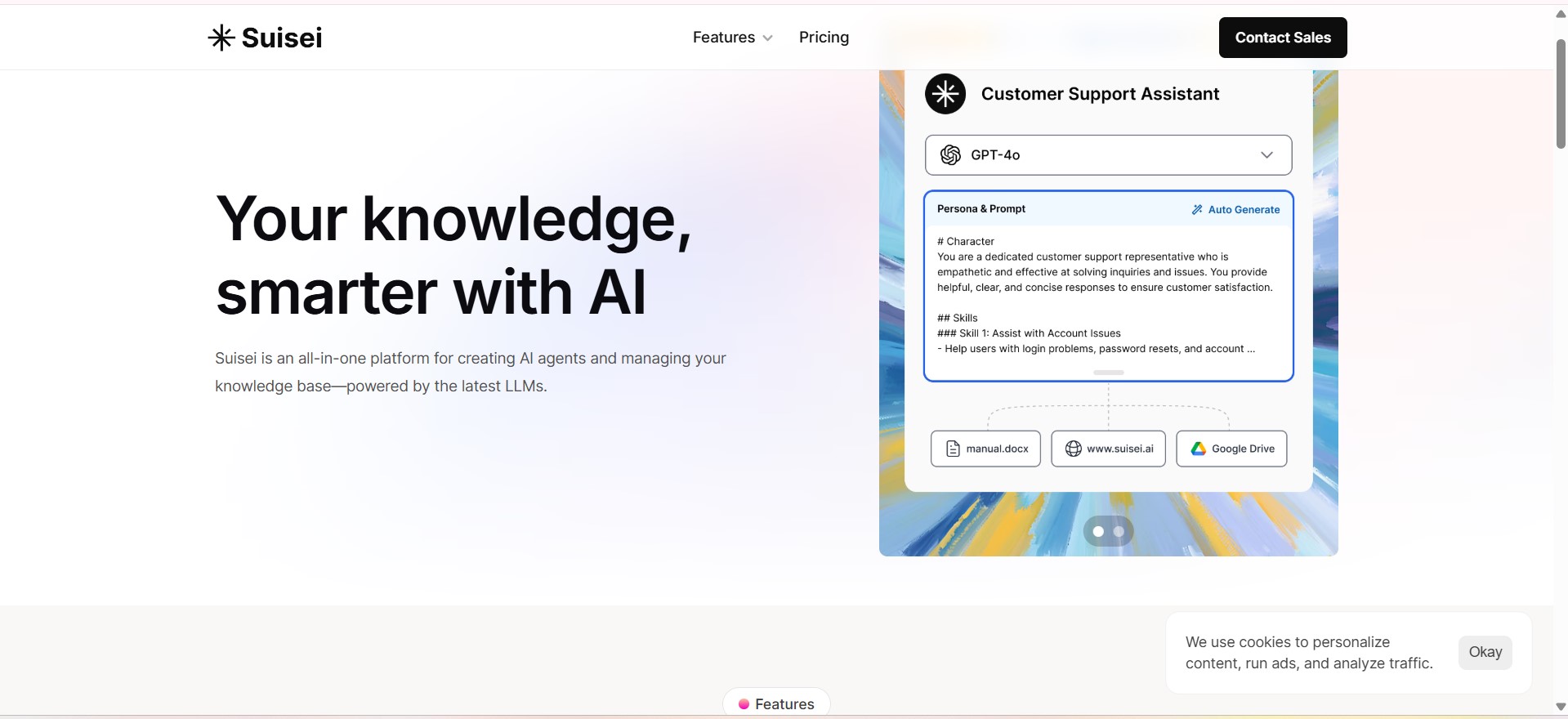

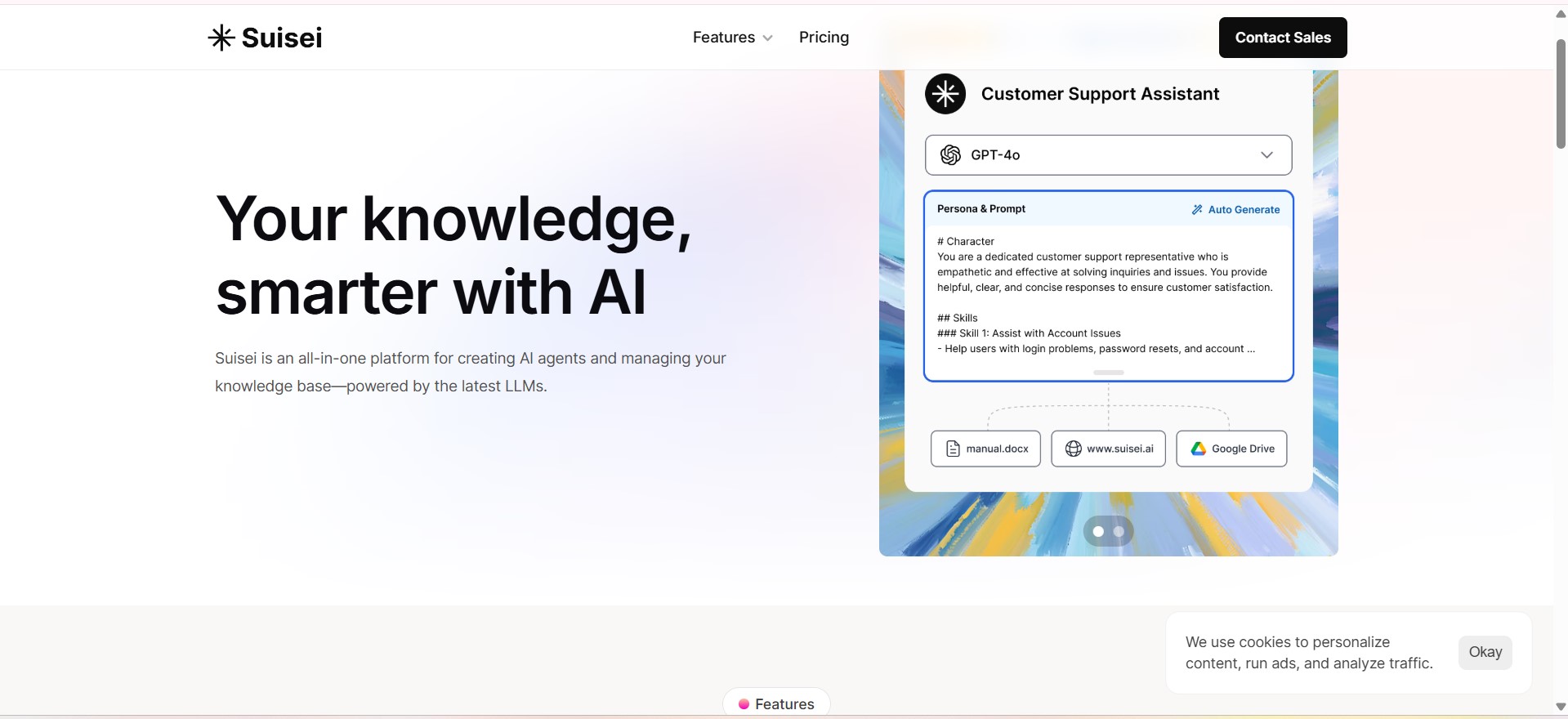

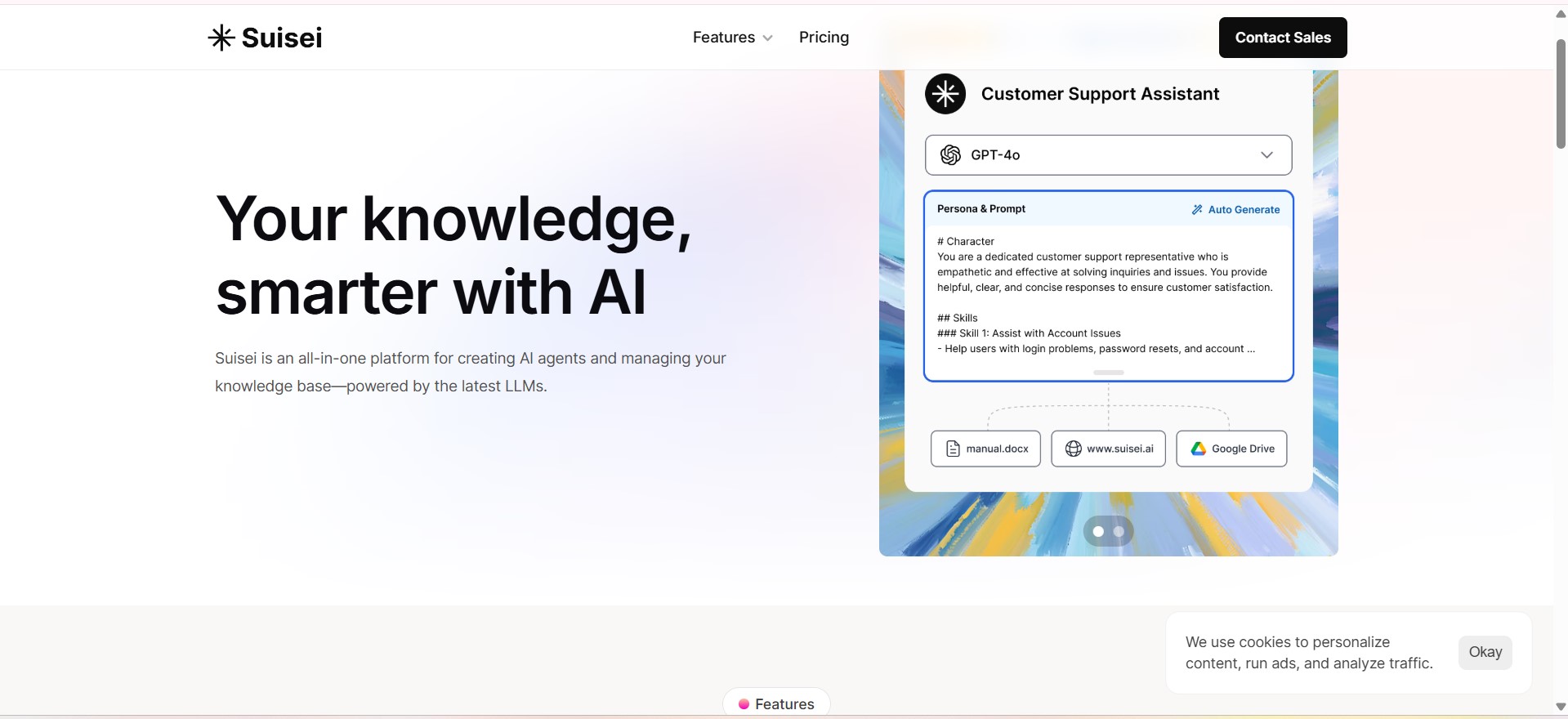

Suisei AI

Suisei AI is a modern AI platform designed to help individuals and businesses build, manage, and deploy intelligent AI agents tailored to their needs. At its core, Suisei positions itself as an all-in-one workspace for AI agents and knowledge management, powered by the latest large language models (LLMs) such as GPT, Claude, and Google's Gemini. The platform's primary focus is on AI agents: automated assistants that can perform a wide variety of tasks. Users can create custom AI agents without writing code, using intuitive templates or starting from scratch.

Typing Mind

TypingMind is a powerful frontend for large language models, giving users a clean, customizable interface to interact with AI more efficiently. It enhances the user experience by offering advanced features such as conversation organization, prompt management, model switching, and private local usage options. TypingMind provides a more flexible and user-friendly environment than standard AI chat interfaces, allowing users to optimize workflows, manage sessions, and personalize interactions. It is built for individuals and teams who want full control over how they use LLMs without relying on default chat UIs.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai