- Enterprises in India and globally: Needing multilingual AI and speech technologies across languages and cultural contexts.

- Government & public sector: With needs for sovereign AI, local language support, and scalable infrastructure.

- Healthcare, Education & Agriculture sectors: Where multilingual access and voice interaction matter.

- Developers of voice-first and language-centric applications: Who need APIs for real-time speech and multilingual modelling.

- Research & AI labs: Working on inclusive AI, low-resource languages and ethical model development.

How to Use It?

- Access APIs & Models: Use Soket’s real-time speech API or foundational model endpoints in your application.

- Choose Language Support: Select from supported Indic languages, English and others being added.

- Integrate Into Systems: Embed speech, translation or conversational capabilities into products.

- Fine-Tune and Localise: Work with Soket to customise for your domain, locality, or language variant.

- Deploy & Monitor: Deploy on-premises or in the cloud, track usage, and optimise for performance and compliance.

- Multilingual Indigenous Models: Building a 120-billion-parameter foundational model optimised for India's language diversity.

- Real-Time Speech & Voice APIs: Enable voice interaction across languages for enterprise use-cases.

- Ethical and Inclusive AI Focus: Aligned to cultural context, low-resource languages and national AI ambitions.

- Sovereign AI Vision: Aiming for independent AI infrastructure and models not dependent on foreign technology.

Pros

- Strong focus on multilingual and inclusive AI capabilities, especially for Indic languages.

- Ambitious scope (foundation model plus speech API) aimed at enterprise use.

- Supports voice and real-time interaction rather than just text.

- Alignment with national AI initiatives and research ecosystem.

- Flexibility for enterprise use-cases across language and domain boundaries.

Cons

- Many models are still under development; full commercial maturity may take time.

- Customisation for enterprise may require deep domain work and engineering.

- Emerging startup: possibly fewer resources compared to global incumbents.

- Access conditions, pricing, and SLA details may not yet be widely established.

- Performance and ecosystem support in large-scale, real-world deployments may still be evolving.

Custom

Custom Pricing.

FAQs

No — while Indian languages are a core focus, the architecture is designed for multilingual global deployment.

Yes — they offer real-time voice/speech APIs for integration into applications.

Some models, such as Pragna-1B, are open-source or planned to be; others may carry commercial licensing.

Yes — the company positions itself for sectors like defence, healthcare, education and governance.

Yes — their roadmap indicates expanding language support and cultural contexts.

Similar AI Tools

Vapi AI

Vapi.ai is an advanced developer-focused platform that enables the creation of AI-driven voice and conversational applications. It provides APIs and tools to build intelligent voice agents, handle real-time conversations, and integrate speech recognition, text-to-speech, and natural language processing into apps and services effortlessly.

Abacus.AI

ChatLLM Teams by Abacus.AI is an all‑in‑one AI assistant that unifies access to top LLMs, image and video generators, and powerful agentic tools in a single workspace. It includes DeepAgent for complex, multi‑step tasks, code execution with an editor, document/chat with files, web search, TTS, and slide/doc generation. Users can build custom chatbots, set up AI workflows, generate images and videos from multiple models, and organize work with projects across desktop and mobile apps. The platform is OpenAI‑style in usability but adds operator features for running tasks on a computer, plus DeepAgent Desktop and AppLLM for building and hosting small apps.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

Shodh AI

Shodh AI is a deep-tech startup focused on building India’s next generation of scientific and domain-aware AI models. It aims to bridge advanced AI research with real applications, working on projects spanning material science models, foundational AI, and educational tools. The company has been selected under IndiaAI and backs its mission with substantial compute resources, including access to millions of GPU hours. (Based on job profiles and company announcements.) It also runs a statistics software product aimed at students, researchers, and professionals, offering tools for data analysis, hypothesis testing, and visualization in an intuitive interface.

Gnani AI

Gnani.ai is an enterprise-grade voice-first generative AI platform designed to automate and elevate customer and user interactions via voice, chat, SMS and WhatsApp channels. Founded in 2016 and headquartered in Bengaluru, India, the company emphasizes deep-tech capabilities: multilingual understanding (40+ languages and dialects), real-time voice processing, and domain-specific models trained on large proprietary datasets spanning Indian accents and vernaculars. The platform is used extensively in sectors such as banking, financial services, insurance (BFSI), telecommunications, automotive, and consumer durables, where it supports tasks like automated contact centre interactions, voice biometrics, conversational agents, and analytics dashboards.

Hume

Hume AI is a company focused on creating emotionally intelligent voice AI and speech systems. It advances voice interfaces by not only converting text to speech but also enabling voices that convey emotion, adapt to the user’s tone, interruptions and context, and integrate conversationally with underlying language models. The technology is built on affective-computing research and aims to give voice agents more human-like responsiveness and emotional awareness. Its clients span customer service, healthcare and consumer applications that require nuanced voice interaction beyond a typical voice bot. Hume AI emphasises real-time voice, emotional intelligence, and human-centric voice experiences.

AI Awaaz

Ai Awaaz is a text-to-speech (TTS) and voice-generation platform developed in India and marketed as India’s first emotion-based TTS AI engine. It enables users to convert text into natural-sounding voiceovers in 20+ Indian languages and 140+ voices, with selectable emotions (e.g., cheerful, sad, whispering) and export formats suitable for videos, podcasts, audiobooks and e-learning modules. The platform emphasises speed and scalability, claiming that a voiceover can be created in just minutes, compared to traditional voice-actor turnaround times. It is positioned for marketers, educators, content creators and agencies needing multi-language voice production with minimal friction.

Langchain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

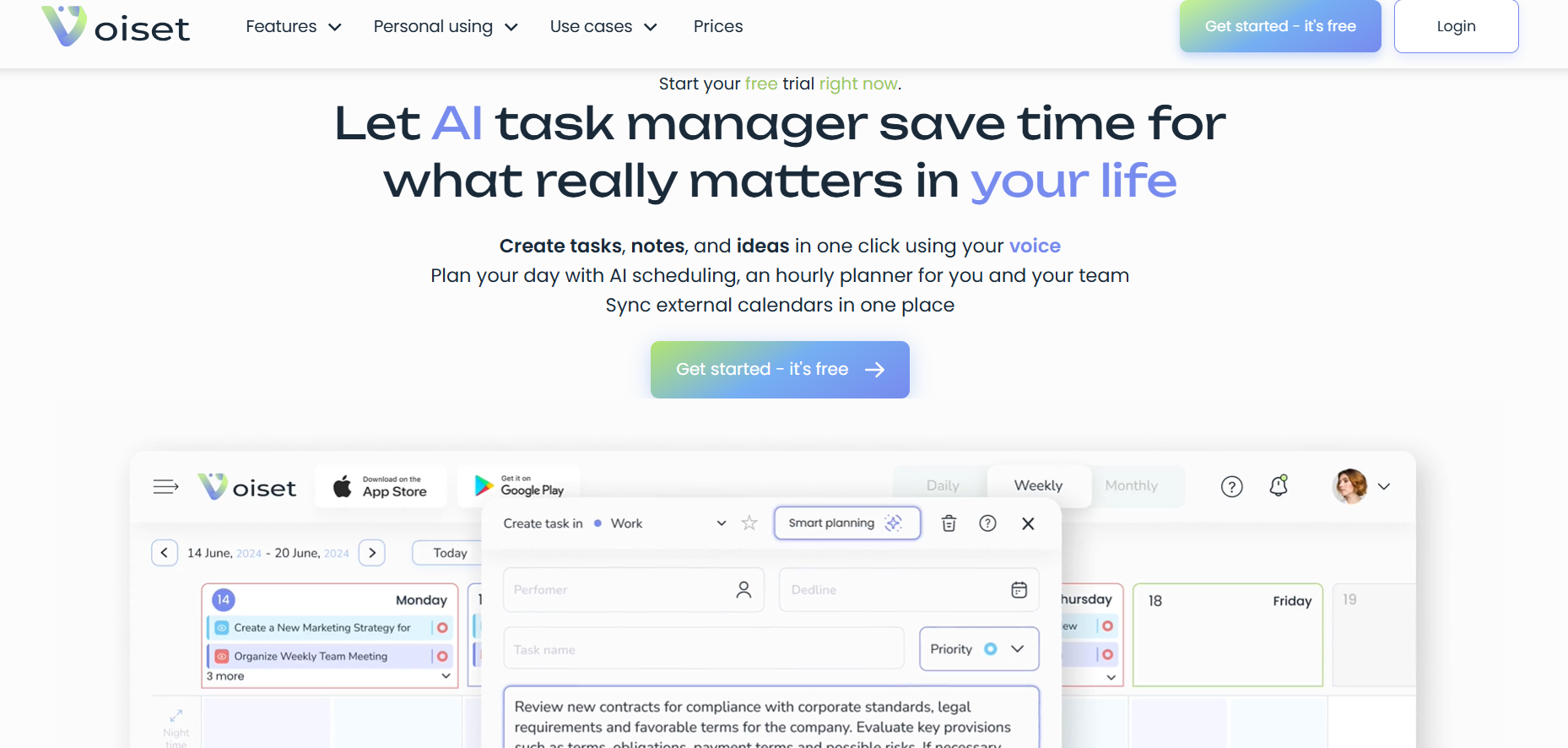

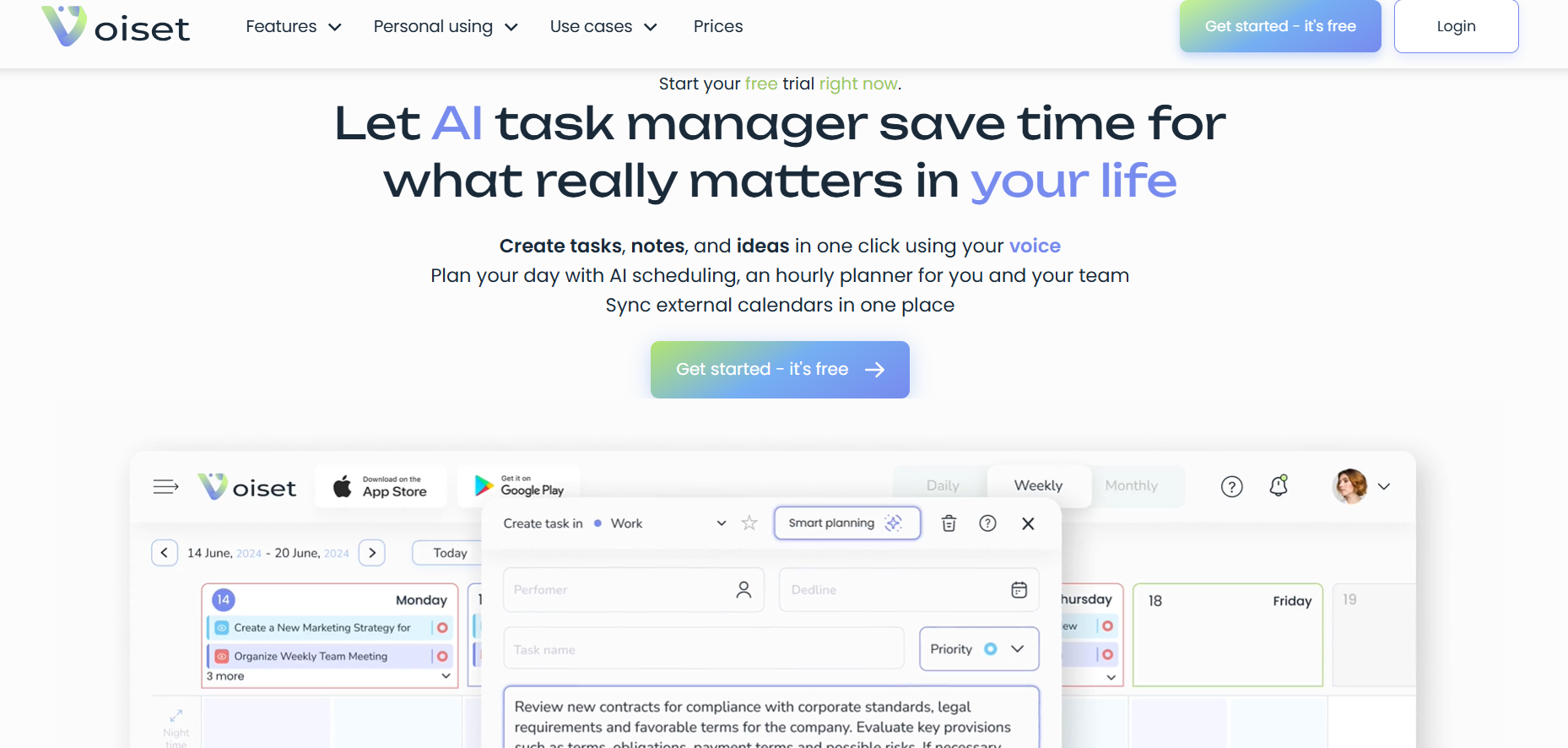

Voiset

Voiset is an AI-driven voice automation and conversational intelligence platform designed to enhance business communication, sales, and customer service. It allows teams to build intelligent voice agents that handle inbound and outbound calls, transcribe conversations, and provide real-time analytics. With advanced natural language processing, Voiset enables companies to automate communication tasks, reduce call handling time, and maintain personalized customer interactions. It integrates seamlessly with CRM tools and supports multiple languages, making it ideal for global teams and enterprises looking to scale voice operations.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Awan LLM

Awan LLM is a cost-effective, unlimited token large language model inference API platform designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription model that enables users to send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLM models without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

LM Studio

LM Studio is a local large language model (LLM) platform that enables users to download and run powerful AI language models such as LLaMA, MPT, and Gemma directly on their own computers. The platform supports Mac, Windows, and Linux, providing flexibility to users across different devices. LM Studio focuses on privacy and control by allowing users to work with AI models locally without relying on cloud-based services, ensuring data stays on the user’s device. It offers an easy-to-install interface with step-by-step guidance for setup, giving developers, researchers, and AI enthusiasts access to advanced AI capabilities without requiring an internet connection.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai