- AI Developers & Engineers: Access over 45 major AI models through a single API and get real-time analytics on performance and usage.

- DevOps & IT Teams: Deploy AI applications with confidence using flexible options like private cloud, on-premise, or global public cloud regions.

- Security & Compliance Teams: Ensure AI applications adhere to security policies and data regulations with features like content filtering, PII redaction, and audit logging.

- Business Leaders: Optimize AI spend, set budgets, and gain a clear view of AI usage across the organization to control costs.

How to Use UsageGuard?

- Integrate a Single API: Replace your direct LLM API calls with a single, unified endpoint provided by UsageGuard.

- Set Policies & Controls: Configure custom security policies, usage limits, and cost controls for different projects, teams, or environments.

- Develop & Deploy: Build intelligent applications and autonomous agents on the platform, leveraging its tools for document processing and stateful session management.

- Monitor & Optimize: Use the real-time analytics dashboard to monitor performance, track usage, and gain insights to optimize your AI operations and costs.
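As a rough illustration of the "single API" step above, the sketch below builds requests for a unified gateway endpoint. The URL, header names, payload shape, and model identifiers are all hypothetical placeholders, not UsageGuard's documented API; consult the vendor's own reference for the real values.

```python
import json
import urllib.request

# Hypothetical values: NOT taken from UsageGuard's documentation.
GATEWAY_URL = "https://gateway.example.com/v1/chat"
API_KEY = "YOUR_GATEWAY_KEY"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the assumed gateway endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching providers changes only the model name; the call site stays the same.
req_a = build_chat_request("provider-a/model-x", "Summarize this ticket.")
req_b = build_chat_request("provider-b/model-y", "Summarize this ticket.")
```

The point of the pattern is that application code depends on one endpoint and one request shape, so a model swap becomes a configuration change rather than a code change.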

- Model Agnostic Unified API: The platform offers a single API to access over 45 major AI models, including those from OpenAI, Anthropic, and Meta. This allows developers to easily switch between models without changing their code.

- Robust Security & Governance: UsageGuard acts as a vital security layer, protecting against prompt injection attacks, filtering malicious content, and redacting sensitive data (PII) before it reaches the LLM.

- Cost Control and Optimization: It provides advanced tools to track token usage, set budgets, and reduce costs through features like automatic prompt compression and caching.

- Flexible Deployment Options: Unlike many competitors, UsageGuard offers a variety of deployment options, including on-premise, private cloud, or air-gapped environments, giving enterprises complete control over their infrastructure and data.

- Comprehensive Observability: It provides full visibility into AI systems with real-time monitoring, logging, and tracing.

- Consolidated Solution: It integrates development, security, observability, and cost management into one platform, simplifying the AI stack.
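To make the governance and cost-control ideas above more concrete, here is a minimal sketch of what a gateway layer might do before forwarding a prompt: redact obvious PII and check a token budget. The patterns, class, and function names are illustrative assumptions, not UsageGuard's implementation, and production PII detection needs far broader coverage than two regular expressions.

```python
import re

# Illustrative patterns only; these are assumptions, not a complete PII detector.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style SSNs with placeholder tags."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return US_SSN_RE.sub("[SSN]", text)


class TokenBudget:
    """Hypothetical per-project token budget tracker."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.used = 0

    def charge(self, tokens: int) -> bool:
        """Record usage and return True, or return False if it would exceed the limit."""
        if self.used + tokens > self.limit:
            return False
        self.used += tokens
        return True


budget = TokenBudget(limit=100)
safe_prompt = redact_pii("Contact jane@example.com, SSN 123-45-6789")
allowed = budget.charge(60)   # fits within the limit
blocked = budget.charge(50)   # would exceed the limit, so it is rejected
```

Running redaction and budget checks in one place, ahead of the model call, is what lets policies apply uniformly regardless of which model ultimately serves the request.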

- Enterprise-Ready Features: The platform's focus on security, compliance, and flexible deployment makes it ideal for enterprise customers.

- Cost Savings: Its ability to optimize token usage and track spend can lead to significant cost reductions.

- Privacy-First Approach: The company emphasizes data isolation, end-to-end encryption, and adherence to minimal data retention practices.

- New Company: Founded in 2024, it is a relatively new player in the market, which may raise questions about its long-term stability and maturity.

- Unfunded: The company is unfunded, which could be a risk factor for potential clients compared to its well-funded competitors.

- Lack of Public Reviews: There are currently few user reviews on major platforms, making it difficult to gauge real-world performance and customer satisfaction.

Pricing: Paid (custom)

Similar AI Tools

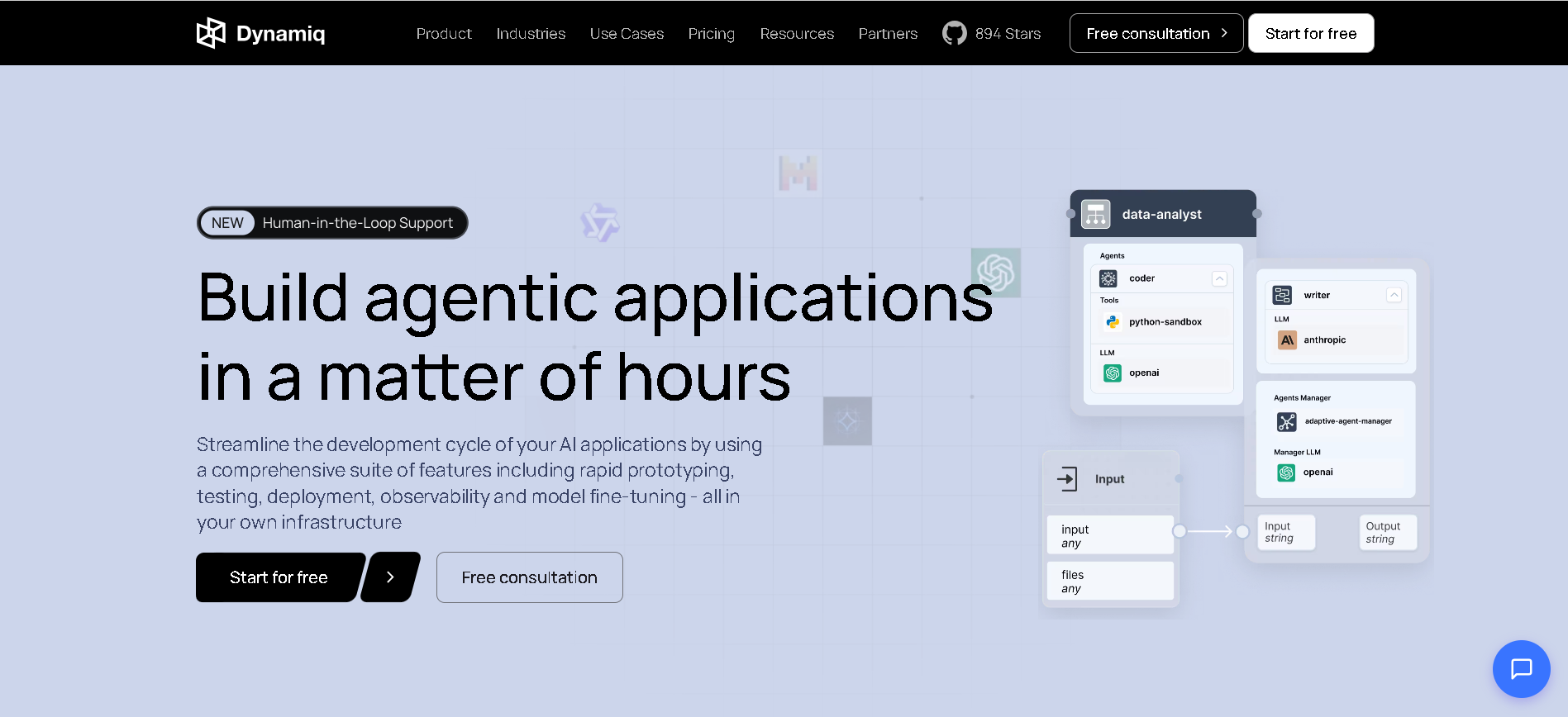

Dynamiq

Dynamiq is an enterprise-grade GenAI operating platform that enables organizations to build, deploy, and manage AI agents and workflows—on-premises, in the cloud, or hybrid. It offers capabilities such as low-code agent and workflow builders, RAG-powered knowledge, model fine-tuning, guardrails, observability, multi-agent orchestration, and seamless integration with open-source or third-party LLMs.

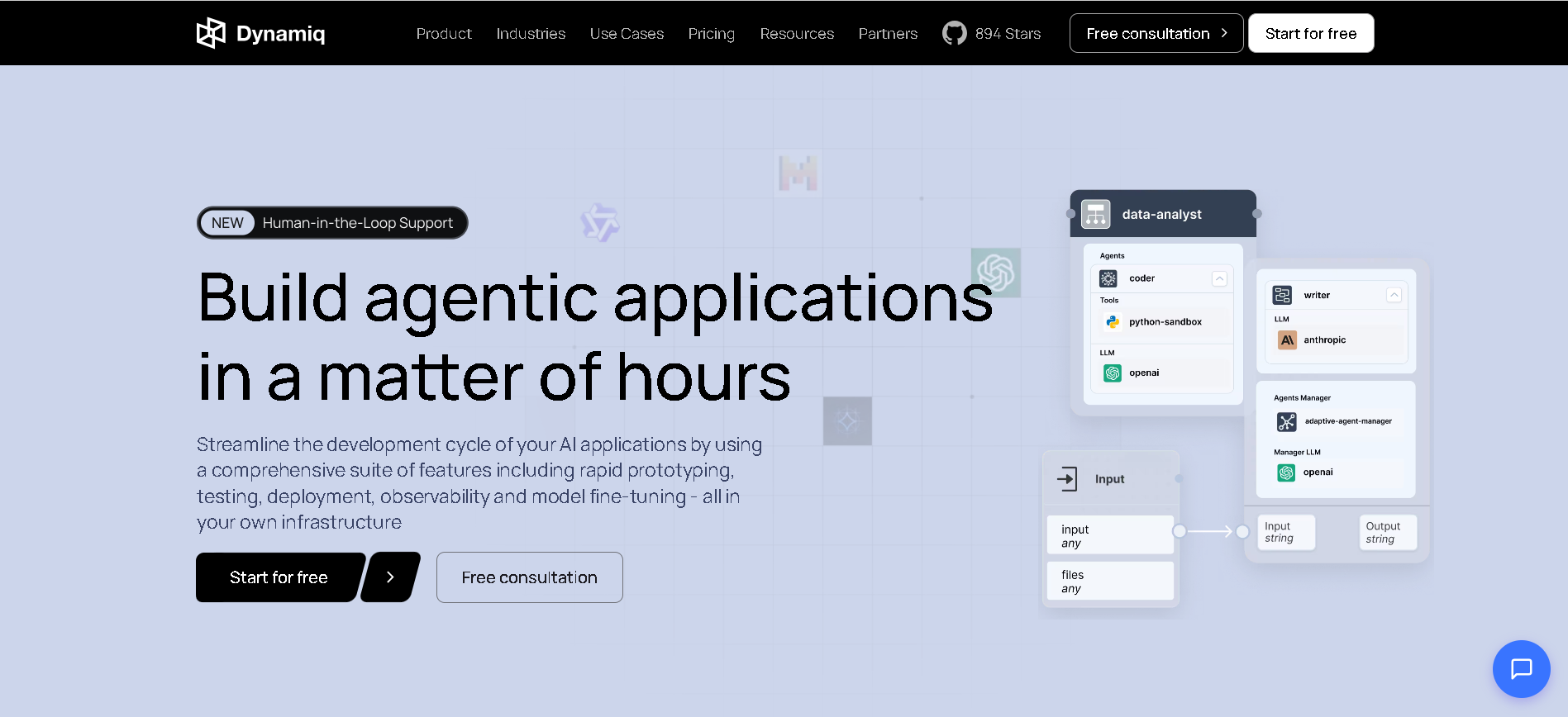

Dynamiq

Dynamiq is an enterprise-grade GenAI operating platform that enables organizations to build, deploy, and manage AI agents and workflows—on-premises, in the cloud, or hybrid. It offers capabilities such as low-code agent and workflow builders, RAG-powered knowledge, model fine-tuning, guardrails, observability, multi-agent orchestration, and seamless integration with open-source or third-party LLMs.

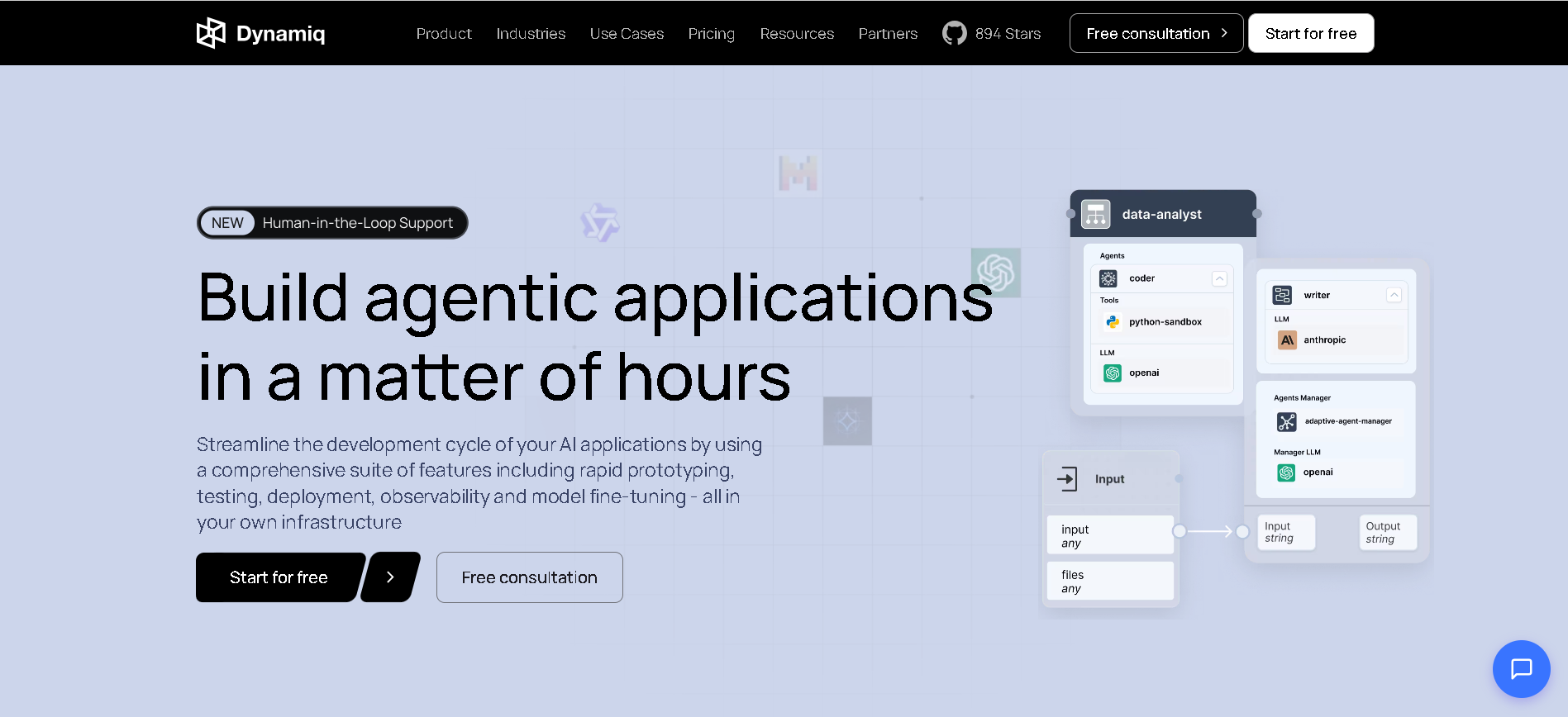

Dynamiq

Dynamiq is an enterprise-grade GenAI operating platform that enables organizations to build, deploy, and manage AI agents and workflows—on-premises, in the cloud, or hybrid. It offers capabilities such as low-code agent and workflow builders, RAG-powered knowledge, model fine-tuning, guardrails, observability, multi-agent orchestration, and seamless integration with open-source or third-party LLMs.

Build by NVIDIA

Build by NVIDIA is a developer-focused platform showcasing blueprints and microservices for building AI-powered applications using NVIDIA’s NIM (NVIDIA Inference Microservices) ecosystem. It offers plug-and-play workflows like enterprise research agents, RAG pipelines, video summarization assistants, and AI-powered virtual assistants, all optimized for scalability, latency, and multimodal capabilities.

Batteries Included

Batteries Included is a self-hosted AI platform designed to provide the necessary infrastructure for building and deploying AI applications. Its primary purpose is to simplify the deployment of large language models (LLMs), vector databases, and Jupyter notebooks, offering enterprise-grade tools similar to those used by hyperscalers, but within a user's self-hosted environment.

Inweave

Inweave is an AI tool designed to help startups and scaleups automate their workflows. It allows users to create, deploy, and manage tailored AI assistants for a variety of tasks and business processes. By offering flexible model selection and robust API support, Inweave enables businesses to seamlessly integrate AI into their existing applications, boosting productivity and efficiency.

Groq AppGen

Groq AppGen is an innovative, web-based tool that uses AI to generate and modify web applications in real-time. Powered by Groq's LLM API and the Llama 3.3 70B model, it allows users to create full-stack applications and components using simple, natural language queries. The platform's primary purpose is to dramatically accelerate the development process by generating code in milliseconds, providing an open-source solution for both developers and "no-code" users.

SiliconFlow

SiliconFlow is an AI infrastructure platform built for developers and enterprises who want to deploy, run, and fine-tune large language models (LLMs) and multimodal models efficiently. It offers a unified stack for inference, model hosting, and acceleration so that you don’t have to manage all the infrastructure yourself. The platform supports many open-source and commercial models and offers high throughput, low latency, autoscaling, and flexible deployment (serverless, reserved GPUs, private cloud). It also emphasizes cost-effectiveness, data security, and tooling such as OpenAI-compatible APIs, fine-tuning, and monitoring.

Aisera

Aisera is an AI-driven platform designed to transform enterprise service experiences through the integration of generative AI and advanced automation. It leverages Large Language Models (LLMs) and domain-specific AI capabilities to deliver proactive, personalized, and predictive solutions across various business functions such as IT, customer service, HR, and more.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

Mobisoft Infotech

MI Team AI is a robust multi-LLM platform designed for enterprises seeking secure, scalable, and cost-effective AI access. It consolidates multiple AI models such as ChatGPT, Claude, Gemini, and various open-source large language models into a single platform, enabling users to switch seamlessly without juggling different tools. The platform supports deployment on private cloud or on-premises infrastructure to ensure complete data privacy and compliance. MI Team AI provides a unified workspace with role-based access controls, single sign-on (SSO), and comprehensive chat logs for transparency and auditability. It offers fixed licensing fees allowing unlimited team access under the company’s brand, making it ideal for organizations needing full control over AI usage.

Emby AI

Emby.ai is a secure EU-hosted AI platform and API service that lets developers and businesses access powerful open-source large language models (LLMs) like Llama, DeepSeek, and others with predictable pricing, transparent billing, and strong privacy protections in compliance with GDPR. It provides a way to build AI-powered applications by offering an OpenAI-compatible API and scalable token plans, all hosted in Amsterdam.

Open Agent Registr..

OpenAgentRegistry.ai is a unified platform designed to connect agents, enterprise AI systems, and data providers in one ecosystem. It serves organizations that build, deploy, manage, or integrate AI agents at scale. The platform supports agent development, resource management, data access, and enterprise-level orchestration within a single environment. By providing a central hub for agent operations, OpenAgentRegistry.ai simplifies complex AI deployments across teams, workflows, and infrastructure layers, ensuring interoperability and streamlined management.

Synorex

Synorex One is a private, secure AI workspace that provides access to multiple leading large language models within a single enterprise platform. Designed specifically to go beyond basic chatbot functionality, Synorex One offers a robust, flexible environment that allows organizations to leverage AI at scale. Teams can work with different models, manage data safely, build internal workflows, and generate business insights securely. The platform emphasizes privacy, enterprise readiness, and multi-model flexibility to support complex professional needs.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai