- Developers & Engineers: Integrate EU-hosted AI models into apps, tools, or workflows with standard API calls.

- Startups & Tech Teams: Build AI features without unpredictable usage fees and without third parties training on your data.

- AI Enthusiasts: Experiment with multiple open-source models and customize usage within transparent pricing tiers.

- Product Managers: Add chat, completion, and AI assistants to products with reliable EU data residency.

- Enterprise IT & Compliance Teams: Ensure data protection with GDPR-focused infrastructure and no logging of prompts or completions.

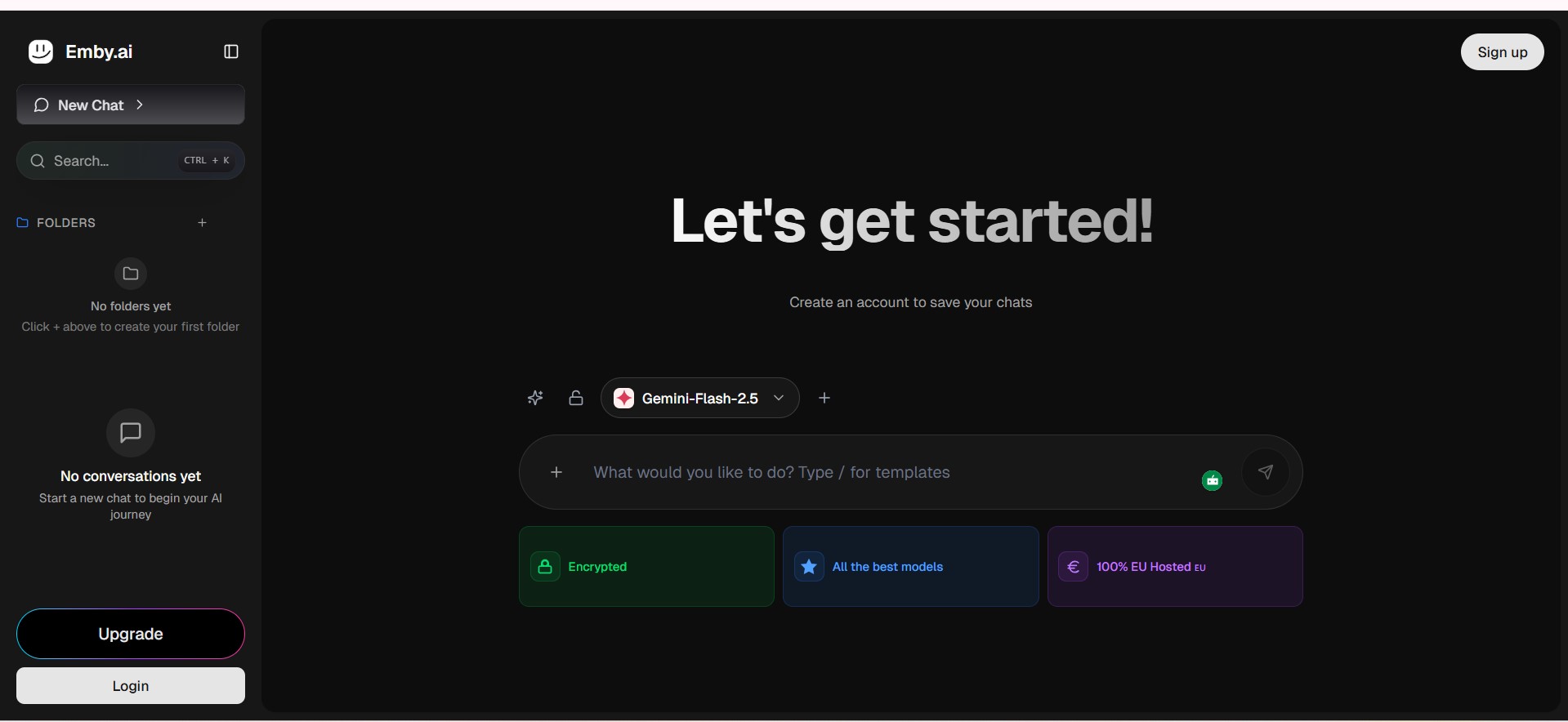

How to Use Emby.ai?

- Sign Up & Get an API Key: Register on the platform and get an API key without needing a credit card.

- Choose a Model & Plan: Pick from open-source models (such as Llama 3.1, Llama 3.3, and DeepSeek) and select a subscription that fits your usage.

- Make API Requests: Use standard HTTP clients (curl, Python, etc.) to call the API for chat, completions, or other AI tasks.

- Scale as Needed: Increase token capacity and daily limits by upgrading plans for larger workloads.

- Monitor & Manage: Track usage and optimize requests based on transparent token pricing.
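
The "Make API Requests" step can be sketched in Python as follows. This is a minimal sketch, not the provider's documented client: it assumes the API follows the OpenAI chat-completions request and response shape, and the base URL and model name are hypothetical placeholders to replace with the values from the official documentation.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the base URL from the provider's docs.
BASE_URL = "https://api.example.invalid/v1"


def build_chat_request(api_key, model, user_message, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # assumed model identifier; check the provider's model list
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_reply(response_body):
    """Return the assistant text from an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]
```

With a real key and base URL, a call would look like `extract_reply(send(build_chat_request(api_key, "example-model", "Hello")))`. Keeping request construction separate from sending makes the payload easy to inspect before any tokens are spent.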

Features

- EU-Hosted AI: Offers data residency and GDPR compliance by hosting all services in the EU (Amsterdam).

- Open-Source Model Options: Use a variety of LLMs like Llama and DeepSeek without being locked into a single proprietary model.

- Transparent Pricing: Predictable token billing with no surprise charges and clear cost structures per model.

- OpenAI-Compatible API: Easily integrates into existing tools and workflows with familiar API formats.

- Scalable Plans: Choose plans with different daily token limits to match development or production needs.
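
Daily token limits and per-token pricing can be tracked on the client side. The sketch below assumes responses carry the OpenAI-style `usage` object with a `total_tokens` field; the `DailyTokenBudget` class, the limit, and the price per million tokens are hypothetical illustrations, not the provider's actual plan values.

```python
from dataclasses import dataclass


@dataclass
class DailyTokenBudget:
    """Track token usage against a plan's daily limit (hypothetical helper)."""

    daily_limit: int
    used: int = 0

    @property
    def remaining(self):
        # Never report a negative remainder once the limit is exceeded.
        return max(self.daily_limit - self.used, 0)

    def record(self, response_body):
        """Add the tokens reported in an OpenAI-style response; return what is left."""
        usage = response_body.get("usage", {})
        self.used += usage.get("total_tokens", 0)
        return self.remaining

    def can_afford(self, estimated_tokens):
        """Check whether a request of the estimated size fits in today's budget."""
        return estimated_tokens <= self.remaining


def estimate_cost(tokens, price_per_million):
    """Estimate cost for a token count given an assumed price per million tokens."""
    return tokens * price_per_million / 1_000_000
```

Calling `record` after each response keeps a running total, and `can_afford` can gate large requests before they are sent. Resetting `used` at the start of each day is left to the caller.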

Pros

- EU GDPR Compliance & Privacy: Strong focus on hosting and data protection for European users.

- Multiple Model Support: Flexibility to pick open-source LLMs for different use cases.

- Clear, Predictable Pricing: Token-based costs with no hidden fees.

Cons

- Developer-Focused: May require coding skills to integrate and use effectively.

- Limited Brand Awareness: Less widely known than major AI API providers, which may affect community support.

- Subscription Cost: Annual plans can be expensive for high token volumes compared to some alternatives.

Pricing: Custom.

Similar AI Tools

All-in-One AI

All-in-One AI is a Japanese platform that provides over 200 pre-configured AI tools in a single application. Its primary purpose is to simplify AI content generation by eliminating the need for users to write complex prompts. The platform, developed by Brightiers Inc., allows users to easily create high-quality text and images for a variety of purposes, from marketing copy to social media posts.

SiliconFlow

SiliconFlow is an AI infrastructure platform built for developers and enterprises who want to deploy, run, and fine-tune large language models (LLMs) and multimodal models efficiently. It offers a unified stack for inference, model hosting, and acceleration so that you don't have to manage the infrastructure yourself. The platform supports many open-source and commercial models and offers high throughput, low latency, autoscaling, and flexible deployment (serverless, reserved GPUs, private cloud). It also emphasizes cost-effectiveness and data security, with tooling that includes OpenAI-compatible APIs, fine-tuning, and monitoring.

Linked API

LinkedAPI.io is a powerful platform providing access to LinkedIn's API, enabling businesses and developers to automate various LinkedIn tasks, extract valuable data, and integrate LinkedIn functionality into their applications. It simplifies the process of interacting with LinkedIn's data, removing the complexities of direct API integration.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Nexos AI

Nexos.ai is a unified AI orchestration platform designed to centralize, secure, and streamline the management of multiple large language models (LLMs) and AI services for businesses and enterprises. The platform provides a single workspace where teams and organizations can connect, manage, and run more than 200 AI models (including those from OpenAI, Google, Anthropic, and Meta) through a single interface and API. Nexos.ai includes enterprise-grade features for security, compliance, smart routing, and cost optimization. It offers model output comparison, collaborative project spaces, observability tools for monitoring, and guardrails for responsible AI usage. With an AI Gateway and Workspace, tech leaders can govern AI usage, minimize fragmentation, enable rapid experimentation, and scale AI adoption across teams efficiently.

Mobisoft Infotech

MI Team AI is a robust multi-LLM platform designed for enterprises seeking secure, scalable, and cost-effective AI access. It consolidates multiple AI models such as ChatGPT, Claude, Gemini, and various open-source large language models into a single platform, enabling users to switch seamlessly without juggling different tools. The platform supports deployment on private cloud or on-premises infrastructure to ensure complete data privacy and compliance. MI Team AI provides a unified workspace with role-based access controls, single sign-on (SSO), and comprehensive chat logs for transparency and auditability. It offers fixed licensing fees allowing unlimited team access under the company’s brand, making it ideal for organizations needing full control over AI usage.

Supernovas AI LLM

Supernovas AI is an all-in-one AI chat workspace designed to empower teams with seamless access to the best AI models and data integration. It supports all major AI providers including OpenAI, Anthropic, Google Gemini, Azure OpenAI, and more, allowing users to prompt any AI model through a single subscription and platform. Supernovas AI enables building intelligent AI assistants that can access private data, databases, and APIs via Model Context Protocol (MCP). It offers advanced prompting tools, custom prompt templates, and integrated AI image generation and editing. The platform supports analyzing a wide range of document types such as PDFs, spreadsheets, legal documents, and images to generate rich responses including text and visuals, boosting productivity across teams worldwide.

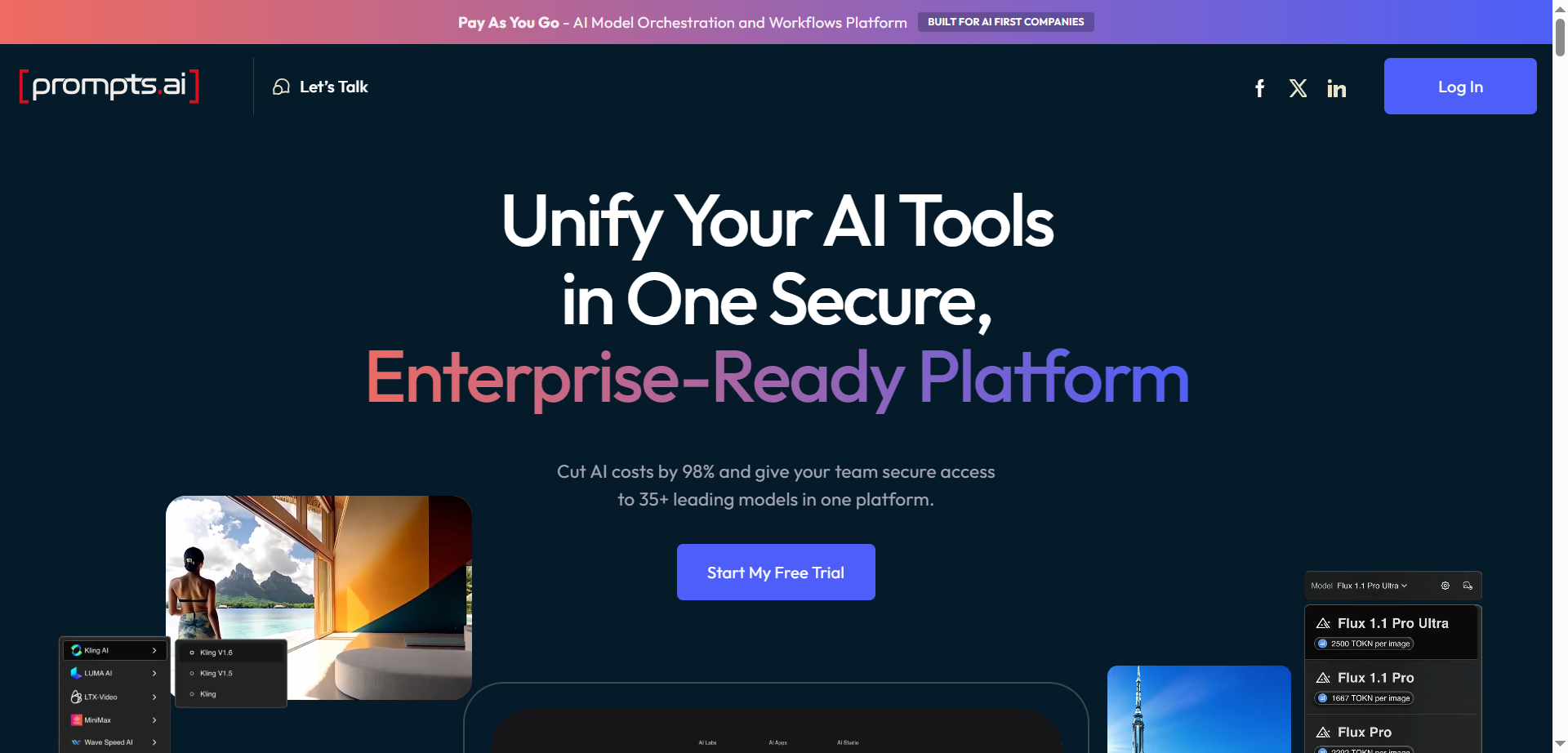

Prompts AI

Prompts.ai is an enterprise-grade AI platform designed to streamline, optimize, and govern generative AI workflows and prompt engineering across organizations. It centralizes access to over 35 large language models (LLMs) and AI tools, allowing teams to automate repetitive workflows, reduce costs, and boost productivity by up to 10 times. The platform emphasizes data security and compliance with standards such as SOC 2 Type II, HIPAA, and GDPR. It supports enterprises in building custom AI workflows, ensuring full visibility, auditability, and governance of AI interactions. Additionally, Prompts.ai fosters collaboration by providing a shared library of expert-built prompts and workflows, enabling businesses to scale AI adoption efficiently and securely.

Manuflux

Manuflux Private AI Workspace is a secure, self-hosted AI platform designed to integrate seamlessly with your internal databases, documents, and files, enabling teams to ask natural language questions and receive instant insights, summaries, and visual reports. Unlike cloud-based AI solutions, this workspace runs entirely on your private infrastructure, ensuring full data control, privacy, and compliance with strict governance standards across industries including manufacturing, healthcare, finance, legal, and retail. It combines large language models (LLMs) with your proprietary data, offering adaptive AI-powered decision-making while maintaining security and eliminating risks of data leakage. The platform supports multi-source data integration and is scalable for organizations of all sizes.

Awan LLM

Awan LLM is a cost-effective LLM inference API platform with unlimited tokens, designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription that lets users send and receive unlimited tokens, up to each model's context limit. It supports unrestricted use of LLMs without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases, including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications, without worrying about token limits or costs.

LM Studio

LM Studio is a local large language model (LLM) platform that enables users to download and run powerful AI language models like Llama, MPT, and Gemma directly on their own computers. It supports Mac, Windows, and Linux, providing flexibility to users across different devices. LM Studio focuses on privacy and control by allowing users to work with AI models locally without relying on cloud-based services, ensuring data stays on the user's device. It offers an easy-to-install interface with step-by-step guidance for setup, giving developers, researchers, and AI enthusiasts access to advanced AI capabilities without requiring an internet connection once the models are downloaded.

Snowflake - Arctic

Snowflake's Arctic is a family of open-source large language models optimized for enterprise workloads, featuring a dense-MoE hybrid architecture that delivers top-tier performance in SQL generation, code tasks, and instruction following at a fraction of comparable model development costs. Released under the Apache 2.0 license with fully ungated access to weights, code, open data recipes, and research insights, it excels in complex business scenarios while remaining capable across general tasks. Enterprises can fine-tune or deploy it using popular tools and techniques such as LoRA, TRT-LLM, and vLLM, with detailed training and inference cookbooks available. The models lead benchmarks for enterprise intelligence, balancing expert efficiency for fast inference and broad applicability without restrictive gates or proprietary limits.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai.