- Developers & Engineers: Build image-capable assistants for vision tasks—object detection, chart interpretation, OCR, and multimodal chat.

- Analysts & Researchers: Automate visual data extraction, document Q&A, and diagram analysis.

- Educators & Students: Use images to ask and solve math or science problems interactively.

- Content Creators & Designers: Generate and analyze visuals using prompt-based image creation and style evaluation.

- Enterprises & Automation Teams: Deploy multifunctional pipelines combining vision understanding and generation via API.

How to Use Grok 2 Vision?

- Choose the Right Model: Use `grok-2-vision-latest`, `grok-2-vision`, or `grok-2-vision-1212` via xAI’s enterprise API or platforms like LangDB.

- Submit Image + Text Prompts: Send images as base64 or URLs alongside text, within a 32K-token context.

- Generate & Analyze Outputs: Perform object recognition, interpret charts, generate designs, or request captions and style critiques.

- Create New Images: Use FLUX.1 to generate photorealistic or stylized outputs in-app or via API.

- Monitor Usage & Cost: Priced at around $2 per million input tokens and $10 per million output tokens, aimed at high-value visual workflows.
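The steps above come down to a single HTTP request. The sketch below uses only the Python standard library to pair a base64-encoded image with a text prompt. It is a minimal example, not xAI's official client. It assumes three things to check against xAI's current API reference: xAI's OpenAI-compatible endpoint at `https://api.x.ai/v1/chat/completions`, Bearer-token auth read from a hypothetical `XAI_API_KEY` environment variable, and the `image_url` content-part format.

```python
import base64
import json
import os
import urllib.request

# Assumption: xAI serves an OpenAI-compatible chat completions endpoint here.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"


def build_vision_request(prompt: str, image_bytes: bytes,
                         model: str = "grok-2-vision-1212",
                         mime_type: str = "image/png") -> dict:
    """Pair one base64-encoded image with a text prompt in a chat payload."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # A public https:// image URL could replace the data URL.
                    {"type": "image_url", "image_url": {"url": data_url}},
                    {"type": "text", "text": prompt},
                ],
            }
        ],
    }


def send_request(payload: dict) -> dict:
    """POST the payload; XAI_API_KEY is a hypothetical env var holding the key."""
    request = urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['XAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def describe_image(path: str, prompt: str) -> str:
    """Read a local image, send it with the prompt, and return the reply text."""
    with open(path, "rb") as f:
        payload = build_vision_request(prompt, f.read())
    reply = send_request(payload)
    return reply["choices"][0]["message"]["content"]
```

A call such as `describe_image("chart.png", "Summarize the trend in this chart.")` should return the model's answer. Here `chart.png` is a placeholder file. Passing a public image URL instead of a data URL keeps the request body small, and `mime_type` should match the actual file format.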

Key Features

- Strong Vision Understanding: Achieves state-of-the-art results on MathVista and DocVQA, surpassing GPT-4 Turbo on those benchmarks.

- Integrated Image Generation: Offers FLUX.1-powered photorealistic outputs with fewer restrictions than mainstream tools.

- Multimodal Context: Accepts text and images together in a single request, within a 32K-token context window.

- Single API, Dual Function: Handles both image understanding and image generation through the same API, which suits image-centric apps.

- Research & Automation Ready: Enables structured visual reasoning and design workflows on par with advanced multimodal systems.

Pros

- Excellent at visual math and document QA tasks

- Integrated photorealistic image generation via FLUX.1

- Unified text+image input pipeline

- Fast, multimodal reasoning in a single API

- Reasonably priced for visual workflows

Cons

- Context limited to 32K tokens, so less suited to long documents

- FLUX.1 is permissive and can generate controversial or misleading images

- Premium-tier pricing may be steep for high-volume image use
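One way to work around the 32K-token limit on long documents is to split pages into batches that each fit in one request. This sketch assumes the per-page token counts are already known, for example from a tokenizer. It reads "32K" as 32,768 tokens and reserves 2,048 tokens for the reply. Both numbers are illustrative assumptions rather than documented values, and `batch_pages` is a hypothetical helper.

```python
from typing import List

CONTEXT_LIMIT = 32_768  # assumption: "32K" read as 32,768 tokens


def batch_pages(page_tokens: List[int], prompt_tokens: int,
                reserved_output: int = 2_048,
                limit: int = CONTEXT_LIMIT) -> List[List[int]]:
    """Group page indices into batches whose token total fits one request.

    Every batch leaves room for the fixed prompt and the reserved output.
    """
    budget = limit - prompt_tokens - reserved_output
    if budget <= 0:
        raise ValueError("prompt and reserved output already exceed the limit")
    batches: List[List[int]] = []
    current: List[int] = []
    used = 0
    for index, tokens in enumerate(page_tokens):
        if tokens > budget:
            raise ValueError(f"page {index} alone needs {tokens} tokens")
        if used + tokens > budget:
            # Close the current batch and start a new one with this page.
            batches.append(current)
            current, used = [], 0
        current.append(index)
        used += tokens
    if current:
        batches.append(current)
    return batches
```

Each batch becomes one request, and the per-batch answers are then merged in a final step. This greedy packing keeps pages in their original order, which matters for documents whose later pages refer back to earlier ones.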

Free Tier

$0.00

Limited access to DeepSearch

Limited access to DeeperSearch

SuperGrok

$30/month

More Aurora Images - 100 Images / 2h

Even Better Memory - 128K Context Window

Extended access to Thinking - 30 Queries / 2h

Extended access to DeepSearch - 30 Queries / 2h

Extended access to DeeperSearch - 10 Queries / 2h
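The SuperGrok limits are stated per two-hour period. The listing does not say whether that period is rolling or fixed. If it is a rolling window (an assumption), a client could track its remaining quota with a small sliding-window counter like the hypothetical `RollingQuota` class below. This is not xAI's implementation.

```python
from collections import deque
from typing import Deque


class RollingQuota:
    """Track usage against a limit over a sliding time window (in seconds)."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window = window_seconds
        self.events: Deque[float] = deque()  # timestamps of recorded uses

    def _evict(self, now: float) -> None:
        # Drop uses that have aged out of the window.
        while self.events and now - self.events[0] >= self.window:
            self.events.popleft()

    def try_consume(self, now: float) -> bool:
        """Record one use at time `now` if quota remains; report success."""
        self._evict(now)
        if len(self.events) < self.limit:
            self.events.append(now)
            return True
        return False

    def remaining(self, now: float) -> int:
        """Uses still available at time `now`."""
        self._evict(now)
        return self.limit - len(self.events)
```

For images, `RollingQuota(limit=100, window_seconds=2 * 60 * 60)` driven by `time.monotonic()` would mirror the "100 Images / 2h" figure.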

API

$2/$10 per 1M tokens

Text and Image Input - $2/M

Output - $10/M
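With these rates, the cost of a request is (input tokens × $2 + output tokens × $10) / 1,000,000. A minimal sketch follows. How many tokens an image consumes depends on its size and is not specified here, so the input token count is assumed to be known in advance.

```python
# Listed Grok 2 Vision API rates, in USD per 1M tokens.
INPUT_RATE_PER_M = 2.00
OUTPUT_RATE_PER_M = 10.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Approximate USD cost of one request at the listed per-token rates."""
    return (input_tokens * INPUT_RATE_PER_M
            + output_tokens * OUTPUT_RATE_PER_M) / 1_000_000


def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                 days: int = 30) -> float:
    """Project a month of identical requests; real traffic will vary."""
    return requests_per_day * days * estimate_cost(input_tokens, output_tokens)
```

For example, a request with 10,000 input tokens and 1,000 output tokens costs about $0.03. At 1,000 such requests a day for 30 days, the total is about $900, which shows how quickly high-volume image use adds up.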

Similar AI Tools

OpenAI GPT 4o Real..

GPT-4o Realtime Preview is OpenAI’s latest and most advanced multimodal AI model—designed for lightning-fast, real-time interaction across text, vision, and audio. The "o" stands for "omni," reflecting its groundbreaking ability to understand and generate across multiple input and output types. With human-like responsiveness, low latency, and top-tier intelligence, GPT-4o Realtime Preview offers a glimpse into the future of natural AI interfaces. Whether you're building voice assistants, dynamic UIs, or smart multi-input applications, GPT-4o is the new gold standard in real-time AI performance.

OpenAI GPT Image 1

GPT-Image-1 is OpenAI's natively multimodal image generation model, available through the API. It accepts text and image inputs and produces images, so developers and businesses can create new visuals from natural-language prompts or edit existing images. Whether you're building AI agents, design tools, or image-driven workflows, GPT-Image-1 brings OpenAI's image generation capabilities into your applications.

Meta Llama 4

Meta Llama 4 is the latest generation of Meta’s large language model series. It uses a mixture-of-experts (MoE) architecture, in which only a subset of experts (and therefore of parameters) is active for each token, making it both efficient and powerful. Llama 4 is natively multimodal, supporting text and image inputs, and offers three key variants: Scout (17B active parameters, 10M-token context), Maverick (17B active, 1M-token context), and Behemoth (288B active, 2T total parameters; still in development). Designed for long-context reasoning, multilingual understanding, and open-weight availability (with license restrictions), Llama 4 performs strongly across benchmarks and a wide range of tasks.
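To make "17B active parameters" concrete: in a mixture-of-experts layer, a router scores every expert for each token but runs only the top-k of them, so most expert weights are idle for any given token. The sketch below is a generic pure-Python illustration of top-k routing, with softmax gates over the selected experts. It is not Meta's Llama 4 code, and the toy experts, router weights, and `k` are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector],
                experts: List[Callable[[Vector], Vector]], k: int = 1) -> Vector:
    """Route one token through only its top-k experts and mix their outputs."""
    # Router score per expert: dot product of that expert's router row with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only the selected experts are ever evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out
```

With `k=1`, each token touches one expert's weights, so per-token compute tracks the active parameter count rather than the total. This is the trade-off behind the "efficient and powerful" claim.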

Meta Llama 3

Meta Llama 3 is Meta’s third-generation open-weight large language model family, released in April 2024 in 8B and 70B sizes and expanded in July 2024 with the 3.1 update, which added a 405B model and gave all three sizes a 128K‑token context window. Llama 3 excels at reasoning, code generation, multilingual text, and instruction-following, and the 3.2 series introduced multimodal vision (image understanding) capabilities. Safety tools such as Llama Guard 3, Code Shield, and CyberSecEval 2 support responsible deployment.

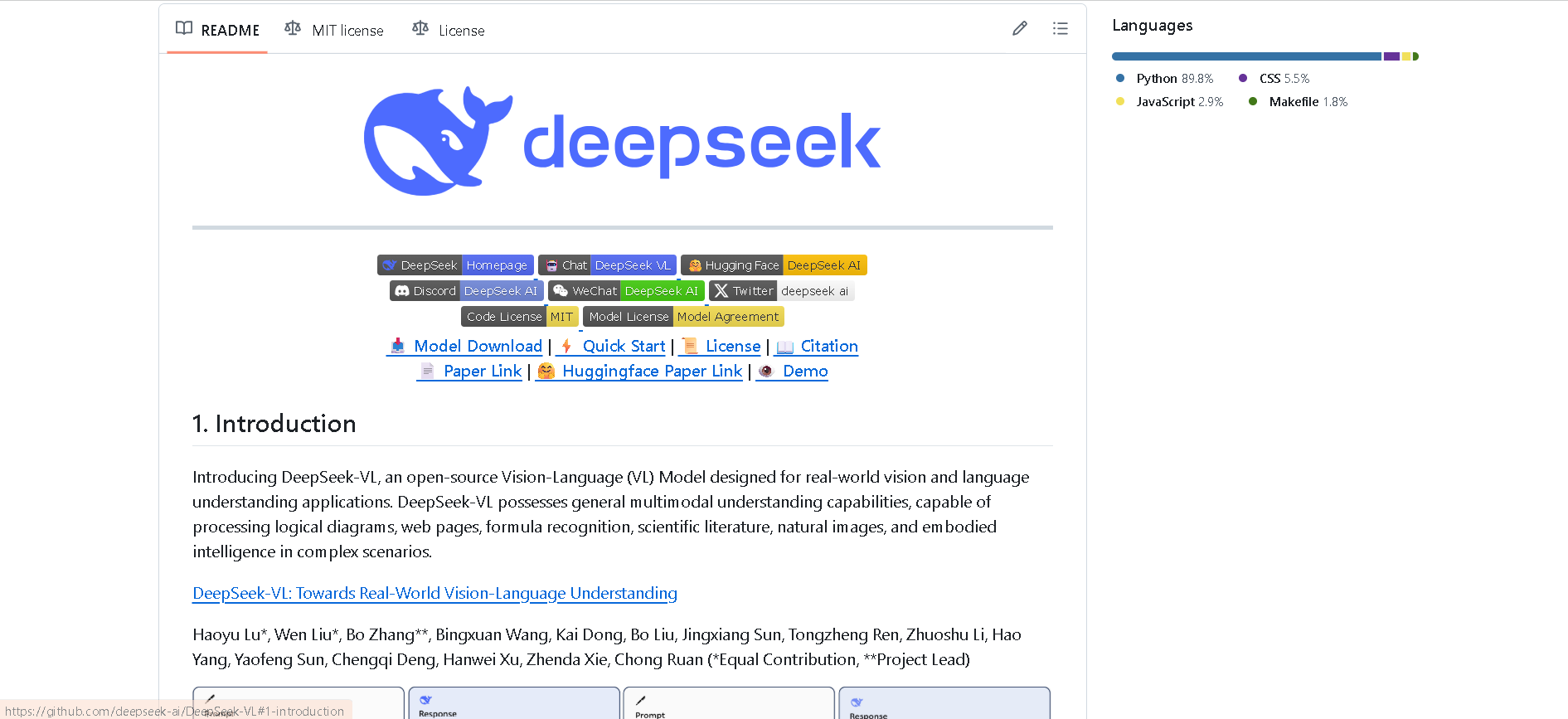

DeepSeek VL

DeepSeek VL is DeepSeek’s open-source vision-language model designed for real-world multimodal understanding. It employs a hybrid vision encoder (SigLIP‑L + SAM), processes high-resolution images (up to 1024×1024), and supports both base and chat variants across two sizes: 1.3B and 7B parameters. It excels on tasks like OCR, diagram reasoning, webpage parsing, and visual Q&A—while preserving strong language ability.

grok-3-mini-latest

Grok 3 Mini is xAI’s compact, reasoning-focused variant of the Grok 3 series. Released in February 2025 alongside the flagship model, it's optimized for cost-effective, transparent chain-of-thought reasoning via "Think" mode, with full multimodal input and capabilities trained on xAI’s Colossus supercomputer. The latest version is available in preview on Azure AI Foundry and GitHub Models, combining speed and affordability for real-time reasoning workflows.
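Grok 3 Mini's "Think" mode can be tuned per request. The sketch below builds such a request and assumes two API fields that are worth confirming in xAI's documentation before use: a `reasoning_effort` request field that takes "low" or "high", and a `reasoning_content` reply field that carries the visible chain of thought.

```python
from typing import Optional, Tuple

# Assumptions (confirm in xAI's docs): grok-3-mini accepts `reasoning_effort`
# of "low" or "high", and replies may carry the visible reasoning in a
# `reasoning_content` field next to the usual `content`.
ALLOWED_EFFORTS = ("low", "high")


def build_reasoning_request(question: str, effort: str = "low",
                            model: str = "grok-3-mini-latest") -> dict:
    """Build a chat payload that asks for a given amount of "Think" effort."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}")
    return {
        "model": model,
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": question}],
    }


def split_reply(message: dict) -> Tuple[Optional[str], str]:
    """Return (reasoning trace if present, final answer) from a reply message."""
    return message.get("reasoning_content"), message["content"]
```

Using "low" effort keeps latency and token spend down for simple queries. "High" effort trades speed for a longer, inspectable reasoning trace.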

grok-3-mini-fast

Grok 3 Mini Fast is the low-latency, high-performance version of xAI’s Grok 3 Mini model. Released in beta around May 2025, it offers the same visible chain-of-thought reasoning as Grok 3 Mini but delivers responses significantly faster, powered by optimized infrastructure. It supports up to 131,072 tokens of context.

grok-3-mini-fast-l..

Grok 3 Mini Fast is xAI’s most recent low-latency variant of the compact Grok 3 Mini model. It keeps full chain-of-thought “Think” reasoning and multimodal support while delivering faster responses. The model handles up to 131,072 tokens of context and is available in beta via the xAI API and select cloud platforms.

Meta Llama 3.2 Vis..

Llama 3.2 Vision is Meta’s first open-source multimodal Llama model series, released on September 25, 2024. Available in 11B and 90B parameter sizes, it combines image understanding with a 128K‑token text context. It is optimized for visual reasoning, captioning, document QA, and visual math tasks, and Meta reports that it is competitive with leading closed models such as Claude 3 Haiku and GPT-4o mini on image understanding.

Mistral Large 2

Mistral Large 2 is the second-generation flagship model from Mistral AI, released in July 2024. Also referenced as mistral-large-2407, it’s a 123B-parameter dense LLM with a 128K-token context window, supporting dozens of languages and 80+ coding languages. It excels in reasoning, code generation, mathematics, instruction-following, and function calling, and is designed for high throughput on single-node setups.
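Function calling with Mistral Large 2 generally works in two steps. The client sends a JSON-Schema tool description with the chat request, then parses the tool call that the model returns. The sketch assumes two things: Mistral's OpenAI-style `tools` and `tool_choice` request fields, and tool-call `arguments` that arrive as a JSON string. The `get_weather` tool is hypothetical.

```python
import json
from typing import Any, Dict, Tuple

# Hypothetical tool: the name and JSON Schema are illustrative only.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string", "description": "City name"}},
            "required": ["city"],
        },
    },
}


def build_tool_request(user_message: str,
                       model: str = "mistral-large-2407") -> Dict[str, Any]:
    """Chat payload offering one tool and letting the model decide whether to call it."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [WEATHER_TOOL],
        "tool_choice": "auto",
    }


def parse_tool_call(tool_call: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Return (function name, decoded arguments) for one tool call in a reply."""
    function = tool_call["function"]
    return function["name"], json.loads(function["arguments"])
```

After the application runs the named function with the decoded arguments, it typically sends the result back in a follow-up message so the model can compose its final answer.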

Mistral Pixtral La..

Pixtral Large is Mistral AI’s latest multimodal powerhouse, launched November 18, 2024. The 124B‑parameter model pairs a 123B‑parameter multimodal decoder, built atop Mistral Large 2, with a 1B‑parameter vision encoder, and supports a 128K‑token context window, enough to process up to 30 high-resolution images or ~300-page documents.

Qwen Chat

Qwen Chat is Alibaba Cloud’s conversational AI assistant built on the Qwen series (e.g., Qwen‑7B‑Chat, Qwen1.5‑7B‑Chat, Qwen‑VL, Qwen‑Audio, and Qwen2.5‑Omni). It supports text, vision, audio, and video understanding, plus image and document processing, web search integration, and image generation—all through a unified chat interface.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai