- Developers & Engineers: Embed fast, transparent reasoning into chatbots or interactive pipelines.

- Students & Educators: Provide on-demand step-by-step logic for math, coding, and analysis.

- Enterprises & SMEs: Deploy reasoning-capable AI affordably for live Q&A or transaction flows.

- Content & Tooling Teams: Automate structured reasoning tasks—debugging, summaries, workflows.

- Researchers: Benchmark transparent chain-of-thought performance in real-world, low-latency settings.

How to Use Grok 3 Mini Fast (Latest)?

- Access via xAI API or Oracle Cloud: Use model ID `grok-3-mini-fast-beta` in supported regions (e.g. US Midwest).

- Include Prompts with “Think”: Use reasoning prompts or set `"reasoning_effort": "high"` to trigger chain-of-thought output.

- Send Multimodal Inputs: Accepts text (and, where supported, images) up to 131,072 tokens.

- Experience Faster Output: Optimized infrastructure yields high throughput (~210 tokens/sec) with a quick initial response (~0.32 s).

- Manage Cost: Input tokens cost $0.60/M and output tokens cost $4.00/M, reflecting the performance tier.
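
As a rough illustration of the steps above, the sketch below builds a chat request that uses the model ID and `reasoning_effort` setting mentioned in the list. The endpoint URL (xAI's OpenAI-compatible chat completions route), the accepted effort values, and the helper names `build_request` and `send` are assumptions made for this example, so check them against the current xAI API reference before relying on them.

```python
import json
import urllib.request

# Assumption: xAI's OpenAI-compatible chat completions endpoint.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"
MODEL_ID = "grok-3-mini-fast-beta"  # model ID quoted in the list above


def build_request(prompt: str, effort: str = "high") -> dict:
    """Build a chat payload that asks the model for reasoning output."""
    # Assumption: the API accepts "low" or "high" for reasoning_effort.
    if effort not in ("low", "high"):
        raise ValueError("effort must be 'low' or 'high'")
    return {
        "model": MODEL_ID,
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


def send(payload: dict, api_key: str) -> dict:
    """POST the payload with a bearer token and return the decoded JSON."""
    request = urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `send(build_request("Think step by step: what is 17 * 24?"), api_key)` would issue the request; `build_request` on its own makes no network call, so the payload can be inspected first.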

- Low-Latency Reasoning: Delivers real-time chain-of-thought insights using fast serving infrastructure.

- Feature-Parity with Mini: Maintains same reasoning quality, context window, and multimodal support as standard Mini.

- Large Context Scope: 131K-token window enables in-depth document, code, or conversation analysis.

- Optimized for Deployment: Ideal for interactive apps needing fast, interpretable AI.

- Premium Pricing Tier: Higher costs reflect the infrastructure advantages.

- Transparent chain-of-thought at low latency

- Same powerful reasoning in compact form

- High throughput (~210 tokens/sec) and fast start (~0.32 s)

- Large context window retained

- Integration via existing API clients or Oracle deployment

- More expensive than standard Mini: $0.60 input / $4.00 output per million tokens

- Still in beta—features and cost may change

- Lacks “Big Brain” deep reasoning, which is available only in the full flagship model
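
To put the quoted speed figures in context, here is a back-of-the-envelope sketch that turns the ~0.32 s start time and ~210 tokens/sec throughput into an estimated response time. It assumes output streams at a constant rate after the first token, which real deployments will only approximate; the function name is hypothetical.

```python
TIME_TO_FIRST_TOKEN_S = 0.32  # approximate figure quoted on this page
TOKENS_PER_SECOND = 210.0     # approximate figure quoted on this page


def estimated_response_time(output_tokens: int) -> float:
    """Estimate seconds to receive a full reply of the given length.

    Assumes a fixed start-up delay followed by constant-rate streaming.
    """
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    return TIME_TO_FIRST_TOKEN_S + output_tokens / TOKENS_PER_SECOND
```

Under these assumptions a 210-token answer takes roughly 1.32 seconds, with each additional 210 tokens adding about one second.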

Free Tier

$0.00

Limited access to DeepSearch

Limited access to DeeperSearch

Super Grok

$30/month

More Aurora Images - 100 Images / 2h

Even Better Memory - 128K Context Window

Extended access to Thinking - 30 Queries / 2h

Extended access to DeepSearch - 30 Queries / 2h

Extended access to DeeperSearch - 10 Queries / 2h

API

$0.60 input / $4.00 output per 1M tokens

Cached Input - $0.15/M

Output - $4.00/M
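
The API rates above can be combined into a simple per-request cost estimate. This is a minimal sketch: it assumes cached input tokens are billed at the cached rate instead of the regular input rate, that `input_tokens` counts only uncached tokens, and that the listed prices are still current. The function name is hypothetical.

```python
INPUT_USD_PER_M = 0.60         # regular input rate listed above
CACHED_INPUT_USD_PER_M = 0.15  # cached input rate listed above
OUTPUT_USD_PER_M = 4.00        # output rate listed above


def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      cached_input_tokens: int = 0) -> float:
    """Estimate the USD cost of one request from its token counts.

    Assumption: input_tokens excludes any tokens served from cache.
    """
    total = (input_tokens * INPUT_USD_PER_M
             + cached_input_tokens * CACHED_INPUT_USD_PER_M
             + output_tokens * OUTPUT_USD_PER_M)
    return total / 1_000_000
```

For example, a request with 10,000 uncached input tokens and 2,000 output tokens comes to about $0.014 under these rates, with output accounting for more than half of the total.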

Similar AI Tools

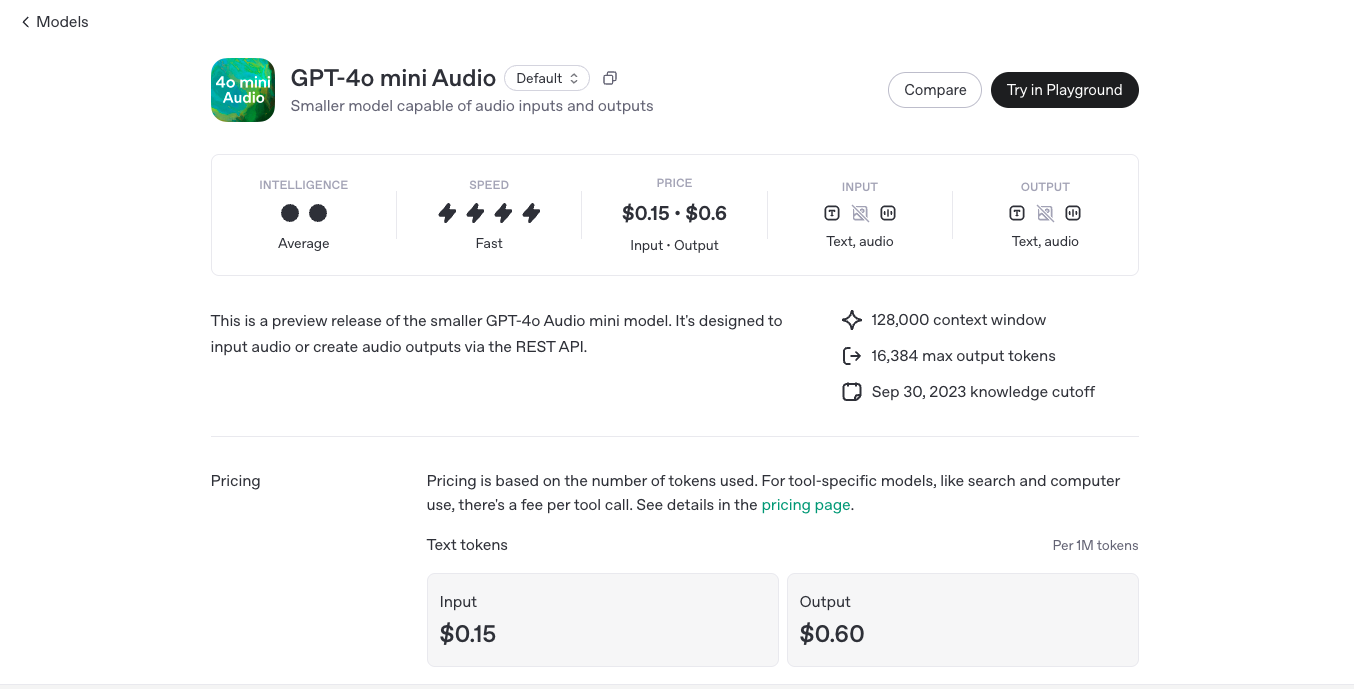

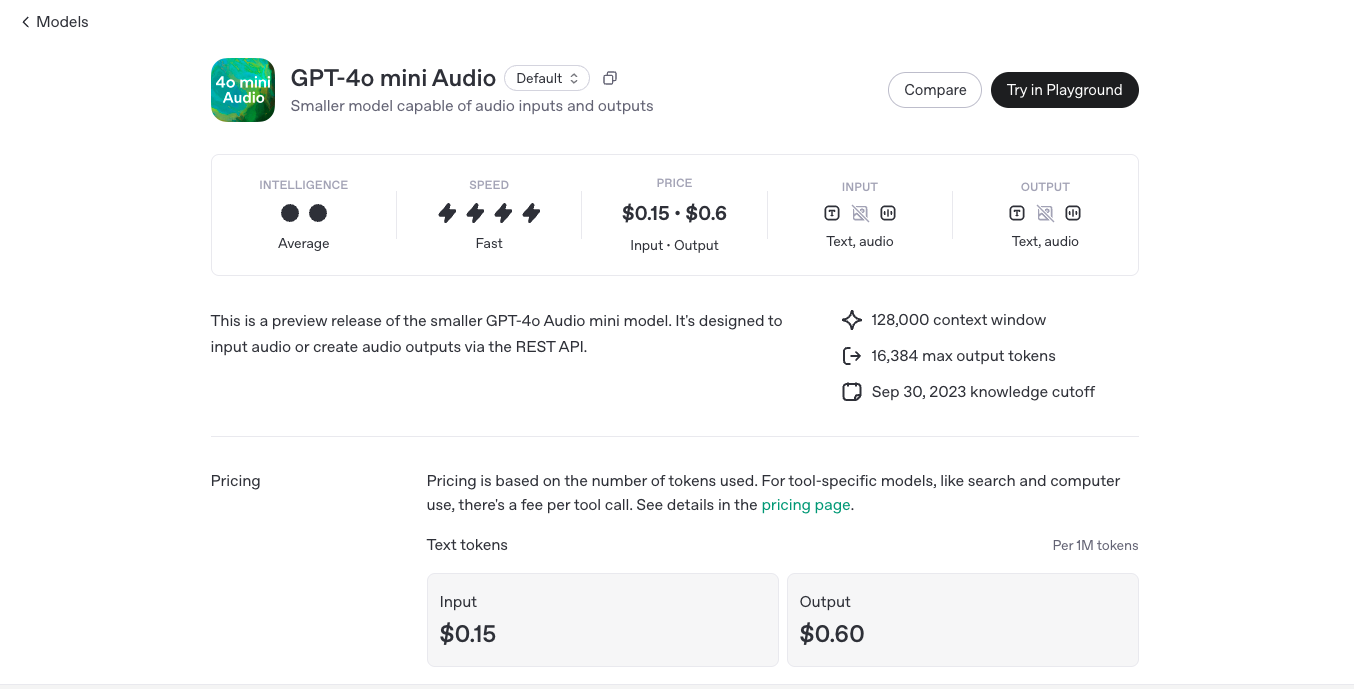

OpenAI GPT-4o Mini Audio is a lighter, faster, and cost-effective version of OpenAI's real-time voice AI, designed for natural and expressive AI conversations. It provides instant voice interactions with low latency, making it ideal for applications like AI assistants, customer service, and real-time translation without the high computational costs of full-scale GPT-4o Audio.

OpenAI GPT 4o mini..

OpenAI GPT-4o Mini Audio is a lighter, faster, and cost-effective version of OpenAI's real-time voice AI, designed for natural and expressive AI conversations. It provides instant voice interactions with low latency, making it ideal for applications like AI assistants, customer service, and real-time translation without the high computational costs of full-scale GPT-4o Audio.

OpenAI GPT 4o mini..

GPT-4o Mini Realtime Preview is a lightweight, high-speed variant of OpenAI’s flagship multimodal model, GPT-4o. Built for blazing-fast, cost-efficient inference across text, vision, and voice inputs, this preview version is optimized for real-time responsiveness—without compromising on core intelligence. Whether you’re building chatbots, interactive voice tools, or lightweight apps, GPT-4o Mini delivers smart performance with minimal latency and compute load. It’s the perfect choice when you need responsiveness, affordability, and multimodal capabilities all in one efficient package.

OpenAI GPT 4o mini..

GPT-4o-mini Search Preview is OpenAI’s lightweight semantic search feature powered by the GPT-4o-mini model. Designed for real-time applications and low-latency environments, it brings retrieval-augmented intelligence to any product or tool that needs blazing-fast, accurate information lookup. While compact in size, it offers the power of contextual understanding, enabling smarter, more relevant search results with fewer resources. It’s ideal for startups, embedded systems, or anyone who needs search that just works—fast, efficient, and tuned for integration.

OpenAI Codex mini ..

codex-mini-latest is OpenAI’s lightweight, high-speed AI coding model, fine-tuned from the o4-mini architecture. Designed specifically for use with the Codex CLI, it brings ChatGPT-level reasoning directly to your terminal, enabling efficient code generation, debugging, and editing tasks. Despite its compact size, codex-mini-latest delivers impressive performance, making it ideal for developers seeking a fast, cost-effective coding assistant.

Claude 3 Haiku

Claude 3 Haiku is Anthropic’s fastest and most affordable model in its Claude 3 family. It processes up to 21K tokens per second under 32K token prompts, delivers enterprise-grade vision and text understanding, and can analyze large datasets or image-heavy content in near real-time—all while offering ultra‑low latency and cost.

grok-2-vision

Grok 2 Vision (also known as Grok‑2‑Vision‑1212 or grok‑2‑vision‑latest) is xAI’s multimodal variant of Grok 2, designed specifically for advanced image understanding and generation. Launched in December 2024, it supports joint text+image inputs up to 32,768 tokens, excelling in visual math reasoning (MathVista), document question answering (DocVQA), object recognition, and style analysis—while also offering photorealistic image creation via the FLUX.1 model.

grok-2-vision-late..

Grok 2 Vision is xAI’s advanced vision-enabled variant of Grok 2, launched in December 2024. It supports joint text + image inputs with a 32K-token context window, combining image understanding, document QA, visual math reasoning (e.g., MathVista, DocVQA), and photorealistic image generation via FLUX.1 (later complemented by Aurora). It scores state-of-the-art on multimodal tasks.

grok-2-vision-1212

Grok 2 Vision – 1212 is a December 2024 release of xAI’s multimodal large language model, fine-tuned specifically for image understanding and generation. It supports combined text and image inputs (up to 32,768 tokens) and excels in document question answering, visual math reasoning, object recognition, and photorealistic image generation powered by FLUX.1. It also supports API deployment for developers and enterprises.

grok-2-image-1212

Grok 2 Image 1212 (also known as grok-2-image-1212) is xAI’s December 2024 release of their unified image generation and understanding model. Built on Grok 2, it combines Aurora-powered photorealistic image creation with strong multimodal comprehension—handling image editing, vision QA, chart interpretation, and document analysis—within a single API and 32,768-token context.

Mistral Small 3.1

Mistral Small 3.1 is the March 17, 2025 update to Mistral AI's open-source 24B-parameter small model. It offers instruction-following, multimodal vision understanding, and an expanded 128K-token context window, delivering performance on par with or better than GPT‑4o Mini, Gemma 3, and Claude 3.5 Haiku—all while maintaining fast inference speeds (~150 tokens/sec) and running on devices like an RTX 4090 or a 32 GB Mac.

Grok Studio

Grok Studio is a split-screen, AI-assisted collaborative workspace from xAI, designed to elevate productivity with seamless real-time editing across documents, code, data reports, and even browser-based games. Embedded in the Grok AI platform, it transforms traditional chat-like interactions into an interactive creation environment. The right-hand pane displays your content—be it code, docs, or visual snippets—while the left-hand pane hosts Grok AI, offering suggestions, edits, or executing code live. Users can import files directly from Google Drive, supporting Docs, Sheets, and Slides, and write or run code in languages such as Python, JavaScript, TypeScript, C++, and Bash in an instant preview workflow. Released in April 2025, Grok Studio is accessible to both free and premium users, breaking ground in AI-assisted collaboration by integrating content generation, coding, and creative prototyping into one unified interface.

Upstage - Solar Mi..

Solar Mini is Upstage’s compact, high-performance large language model (LLM) with under 30 billion parameters, engineered for exceptional speed and efficiency without sacrificing quality. It outperforms comparable models like Llama2, Mistral 7B, and Ko-Alpaca on major benchmarks, delivering responses similar to GPT-3.5 but 2.5 times faster. Thanks to its innovative Depth Up-scaling (DUS) and continued pre-training, Solar Mini is easily customized for domain-specific tasks, supports on-device deployment, and is especially suited for decentralized, responsive AI applications.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai