Unlike larger TTS models, GPT-4o-mini-tts balances performance and efficiency, enabling responsive, engaging voice output even in environments with limited compute resources.

- Voice App Developers: Integrate responsive speech into chatbots, IVR systems, or AI companions.

- Customer Support Teams: Enable realistic voice interfaces for help desks and support workflows.

- Educators & E-learning Creators: Bring lessons to life with engaging, real-time voice narration.

- Accessibility Tool Builders: Offer real-time audio support for visually impaired users.

- Game & AR/VR Developers: Add real-time, low-latency voice to immersive environments.

- IoT & Smart Device Makers: Power voice interfaces in smart home products or wearables.

🛠️ How to Use GPT-4o-mini-tts?

- Step 1: Access the OpenAI API: Send a request to the /v1/audio/speech endpoint with gpt-4o-mini-tts as your selected model.

- Step 2: Input Your Text: Provide the message you'd like to convert to speech. Keep it under 4096 characters per request.

- Step 3: Choose Your Voice: Select from high-quality voices like nova, shimmer, or echo depending on your tone and use case.

- Step 4: Play or Store the Audio: Receive an audio file (MP3 by default, with WAV and other formats available) for immediate playback or storage in your app.

- Step 5: Optimize for Real-Time: Leverage the model’s speed for live conversations or latency-sensitive environments.
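
The steps above can be sketched in Python using only the standard library. This is a minimal illustration rather than an official client: the helper names `build_speech_request` and `synthesize_to_file` are hypothetical, the API key is assumed to be in the `OPENAI_API_KEY` environment variable, and the 4096-character check simply mirrors the limit stated in Step 2.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/audio/speech"
MAX_INPUT_CHARS = 4096  # per-request limit stated in Step 2


def build_speech_request(text: str, voice: str = "nova",
                         audio_format: str = "mp3") -> urllib.request.Request:
    """Build (but do not send) a POST request for the speech endpoint."""
    if not text:
        raise ValueError("text must not be empty")
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError(f"text exceeds {MAX_INPUT_CHARS} characters")
    payload = {
        "model": "gpt-4o-mini-tts",       # Step 1: model selection
        "input": text,                    # Step 2: text to speak
        "voice": voice,                   # Step 3: e.g. nova, shimmer, echo
        "response_format": audio_format,  # Step 4: output audio format
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumption: the key is supplied through the environment.
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def synthesize_to_file(text: str, path: str, voice: str = "nova") -> None:
    """Send the request and write the returned audio bytes to `path`."""
    request = build_speech_request(text, voice)
    with urllib.request.urlopen(request) as response, open(path, "wb") as out:
        out.write(response.read())
```

With a valid key set, calling `synthesize_to_file("Hello from the help desk!", "hello.mp3", voice="echo")` would save the spoken audio to `hello.mp3`.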

- Optimized for Real-Time Use: Designed for minimal latency while maintaining natural-sounding speech.

- Efficient & Lightweight: Suitable for low-resource environments or apps requiring quick response.

- Part of GPT-4o Ecosystem: Seamless integration with OpenAI’s multimodal GPT-4o models.

- Multiple High-Quality Voices: Choose from diverse voice options that suit different tones and contexts.

- Scalable & Fast: Efficient enough to power enterprise-scale voice deployments or lightweight mobile use.

- Text-to-Speech for Everyone: Great entry point for developers new to TTS or with performance constraints.

- Super Low Latency: Ideal for interactive and real-time applications.

- Natural Sounding Voices: Delivers clarity and expressiveness at a compact model size.

- Easily Integrates with GPT-4o: Enables full voice-based multimodal applications.

- Flexible Deployment: Great fit for mobile, desktop, or web apps with speech needs.

- Developer-Friendly API: Simple endpoint and straightforward parameters make it easy to adopt.

- Less Expressive Than TTS-1-HD: Lacks the emotional range and nuance of larger TTS models.

- Limited Voice Options: Fewer voices compared to premium TTS offerings.

- Output May Sound Robotic at Times: In certain edge cases, tone can be a bit flat.

- Not Ideal for Long Narratives: Best suited for short, responsive voice interactions.

- Voice Calls Still Use Tokens: Every call consumes API credits depending on the length of the input text and the generated audio.
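
For scripts longer than the 4096-character per-request limit mentioned in Step 2, one workaround is to split the text and send each piece as its own request. The sketch below shows one possible approach, assuming plain prose where sentences end in ". ", "! ", or "? "; the function name `split_for_tts` is hypothetical and not part of any OpenAI library.

```python
def split_for_tts(text: str, limit: int = 4096) -> list[str]:
    """Split text into chunks of at most `limit` characters, preferring sentence ends."""
    chunks = []
    remaining = text.strip()
    while len(remaining) > limit:
        window = remaining[:limit]
        # Prefer to cut after the last sentence-ending punctuation in the window.
        cut = max(window.rfind(". "), window.rfind("! "), window.rfind("? "))
        if cut != -1:
            cut += 1  # keep the punctuation mark with the chunk
        else:
            cut = window.rfind(" ")  # fall back to the last space
            if cut <= 0:
                cut = limit  # no whitespace at all: hard cut
        chunks.append(remaining[:cut].strip())
        remaining = remaining[cut:].strip()
    if remaining:
        chunks.append(remaining)
    return chunks
```

Each chunk can then be synthesized separately and the resulting audio files played back in order. Because every request is billed, splitting does not reduce cost; it only keeps each request within the size limit.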

API only

$0.60 per 1M input tokens / $12.00 per 1M output tokens
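
As a rough illustration of how this pricing adds up, the sketch below estimates the cost of a request. It assumes the first figure applies to input (text) tokens and the second to output (audio) tokens; the function name and the token counts in the example are made up for illustration, and actual token counts come from the API's usage reporting.

```python
INPUT_USD_PER_MILLION = 0.60    # assumed: rate for input (text) tokens
OUTPUT_USD_PER_MILLION = 12.00  # assumed: rate for output (audio) tokens


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of a request from its token counts."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


# Hypothetical request: 1,000 input tokens and 5,000 output tokens.
example_cost = estimate_cost_usd(1_000, 5_000)
print(f"Estimated cost: ${example_cost:.4f}")
```

Under these assumptions, output audio dominates the bill, so shorter responses are the main lever for controlling cost.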

Similar AI Tools

OpenAI ChatGPT

ChatGPT is an advanced AI chatbot developed by OpenAI that can generate human-like text, answer questions, assist with creative writing, and engage in natural conversations. Powered by OpenAI’s GPT models, it is widely used for customer support, content creation, tutoring, and even casual chat. ChatGPT is available as a web app, API, and mobile app, making it accessible for personal and business use.

OpenAI GPT Image 1

GPT-Image-1 is OpenAI's state-of-the-art vision model designed to understand and interpret images with human-like perception. It enables developers and businesses to analyze, summarize, and extract detailed insights from images using natural language. Whether you're building AI agents, accessibility tools, or image-driven workflows, GPT-Image-1 brings powerful multimodal capabilities into your applications with impressive accuracy. Optimized for use via API, it can handle diverse image types—charts, screenshots, photographs, documents, and more—making it one of the most versatile models in OpenAI’s portfolio.

OpenAI GPT 4o Sear..

GPT-4o Search Preview is a powerful experimental feature of OpenAI’s GPT-4o model, designed to act as a high-performance retrieval system. Rather than just generating answers from training data, it allows the model to search through large datasets, documents, or knowledge bases to surface relevant results with context-aware accuracy. Think of it as your AI assistant with built-in research superpowers—faster, smarter, and surprisingly precise. This preview gives developers a taste of what’s coming next: an intelligent search engine built directly into the GPT-4o ecosystem.

OpenAI GPT 4o Sear..

GPT-4o Search Preview is a powerful experimental feature of OpenAI’s GPT-4o model, designed to act as a high-performance retrieval system. Rather than just generating answers from training data, it allows the model to search through large datasets, documents, or knowledge bases to surface relevant results with context-aware accuracy. Think of it as your AI assistant with built-in research superpowers—faster, smarter, and surprisingly precise. This preview gives developers a taste of what’s coming next: an intelligent search engine built directly into the GPT-4o ecosystem.

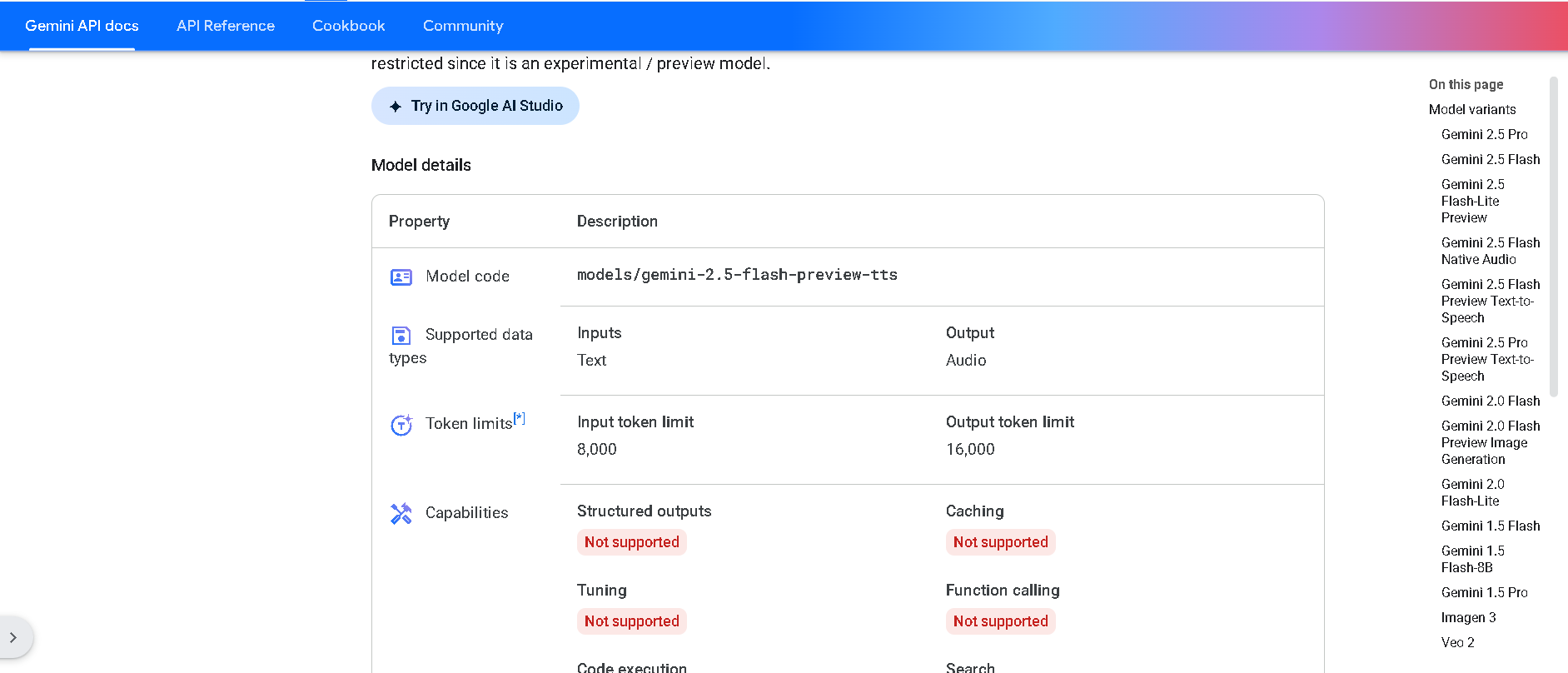

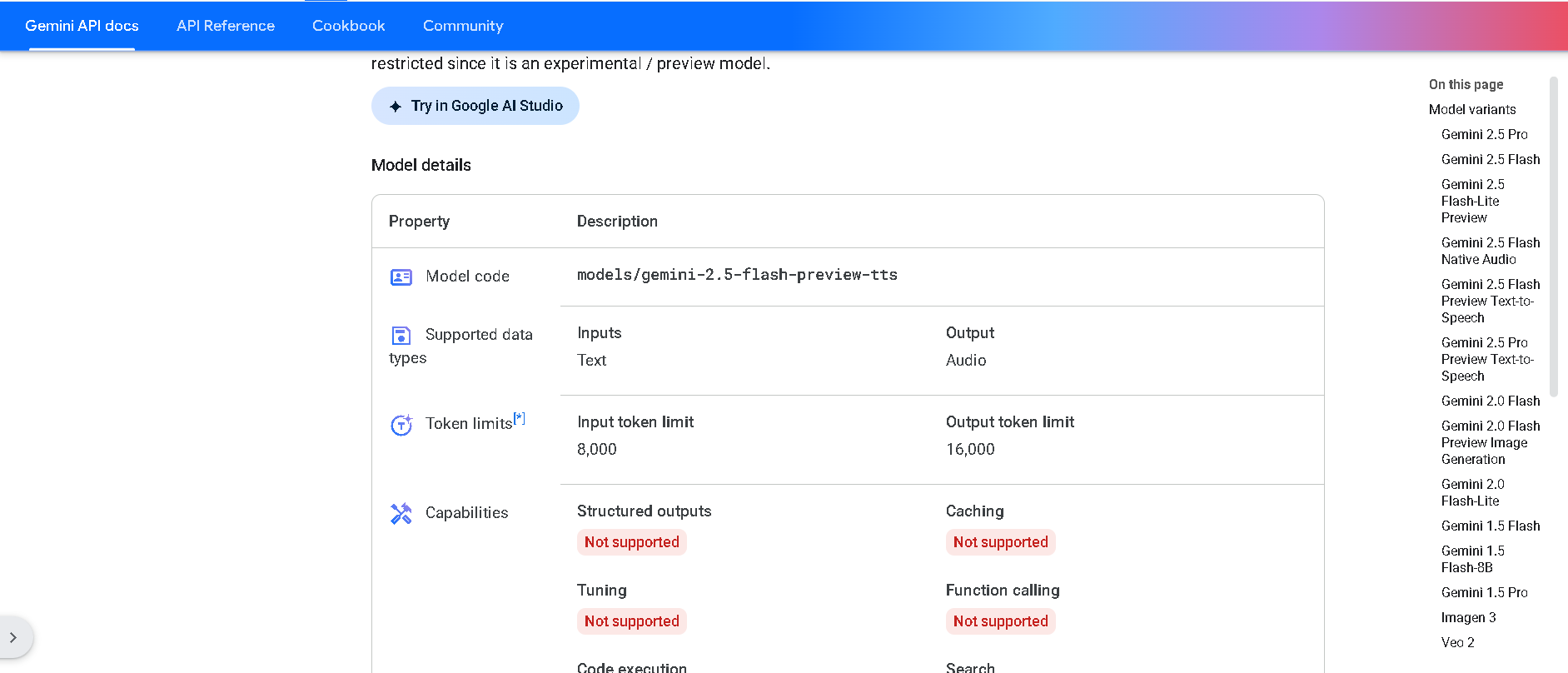

Gemini 2.5 Flash P..

Gemini 2.5 Flash Preview TTS is Google DeepMind’s cutting-edge text-to-speech model that converts text into natural, expressive audio. It supports both single-speaker and multi-speaker output, allowing fine-grained control over style, emotion, pace, and tone. This preview variant is optimized for low latency and structured use cases like podcasts, audiobooks, and customer support workflows.

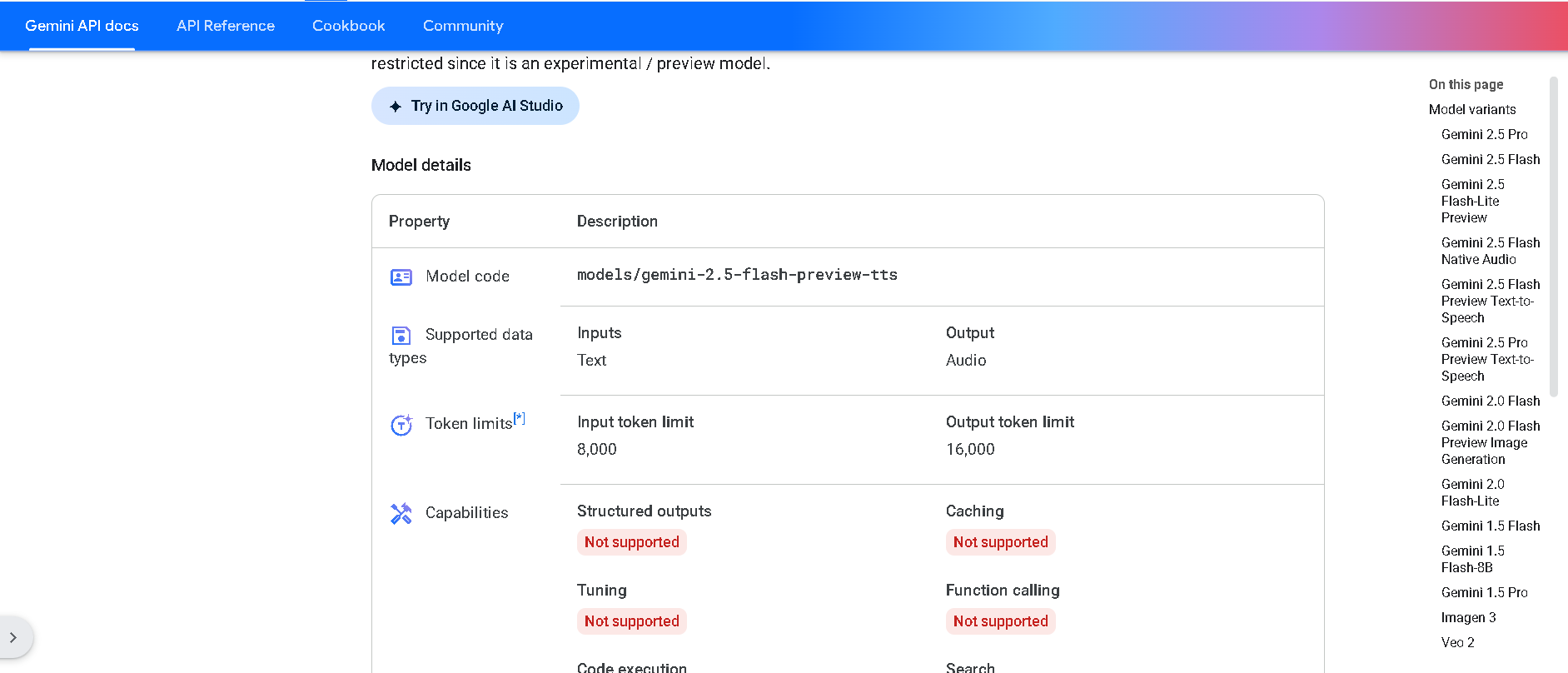

Gemini 2.5 Flash P..

Gemini 2.5 Flash Preview TTS is Google DeepMind’s cutting-edge text-to-speech model that converts text into natural, expressive audio. It supports both single-speaker and multi-speaker output, allowing fine-grained control over style, emotion, pace, and tone. This preview variant is optimized for low latency and structured use cases like podcasts, audiobooks, and customer support workflows .

Gemini 2.5 Flash P..

Gemini 2.5 Flash Preview TTS is Google DeepMind’s cutting-edge text-to-speech model that converts text into natural, expressive audio. It supports both single-speaker and multi-speaker output, allowing fine-grained control over style, emotion, pace, and tone. This preview variant is optimized for low latency and structured use cases like podcasts, audiobooks, and customer support workflows .

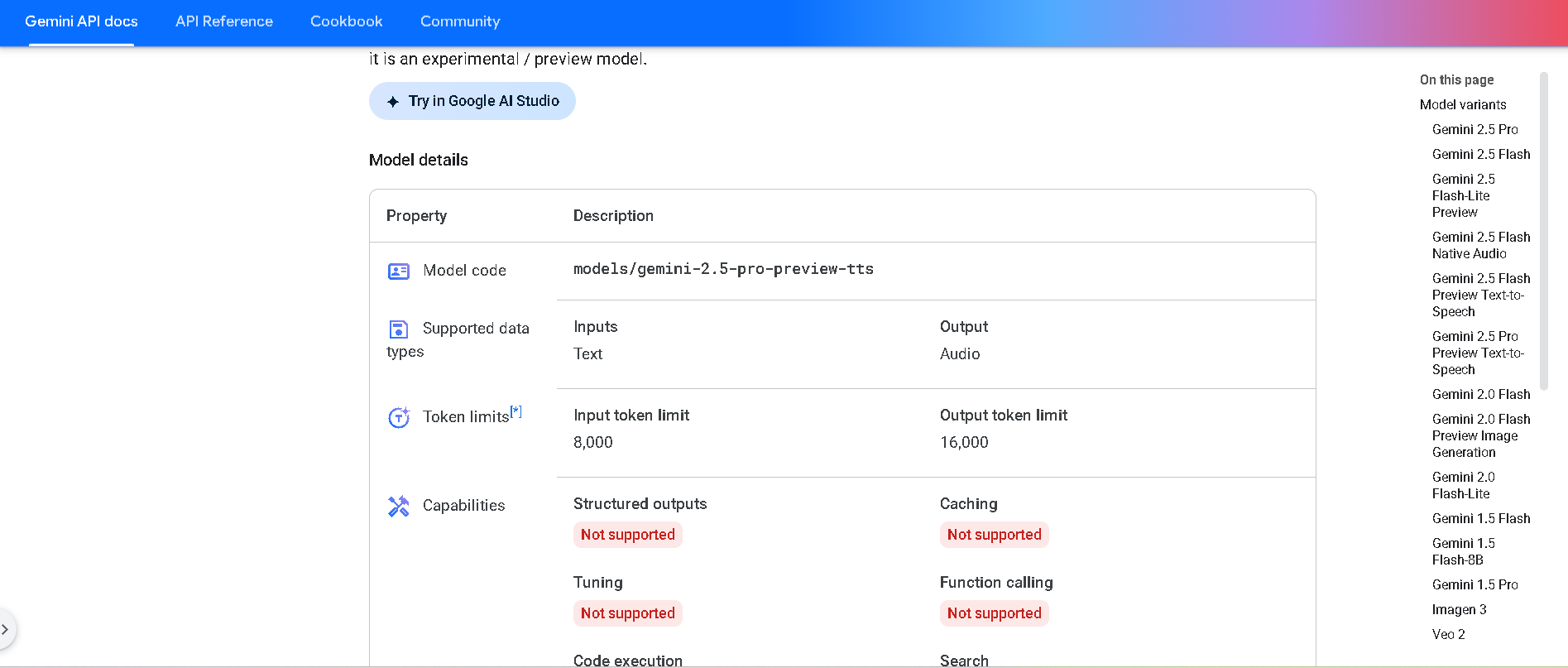

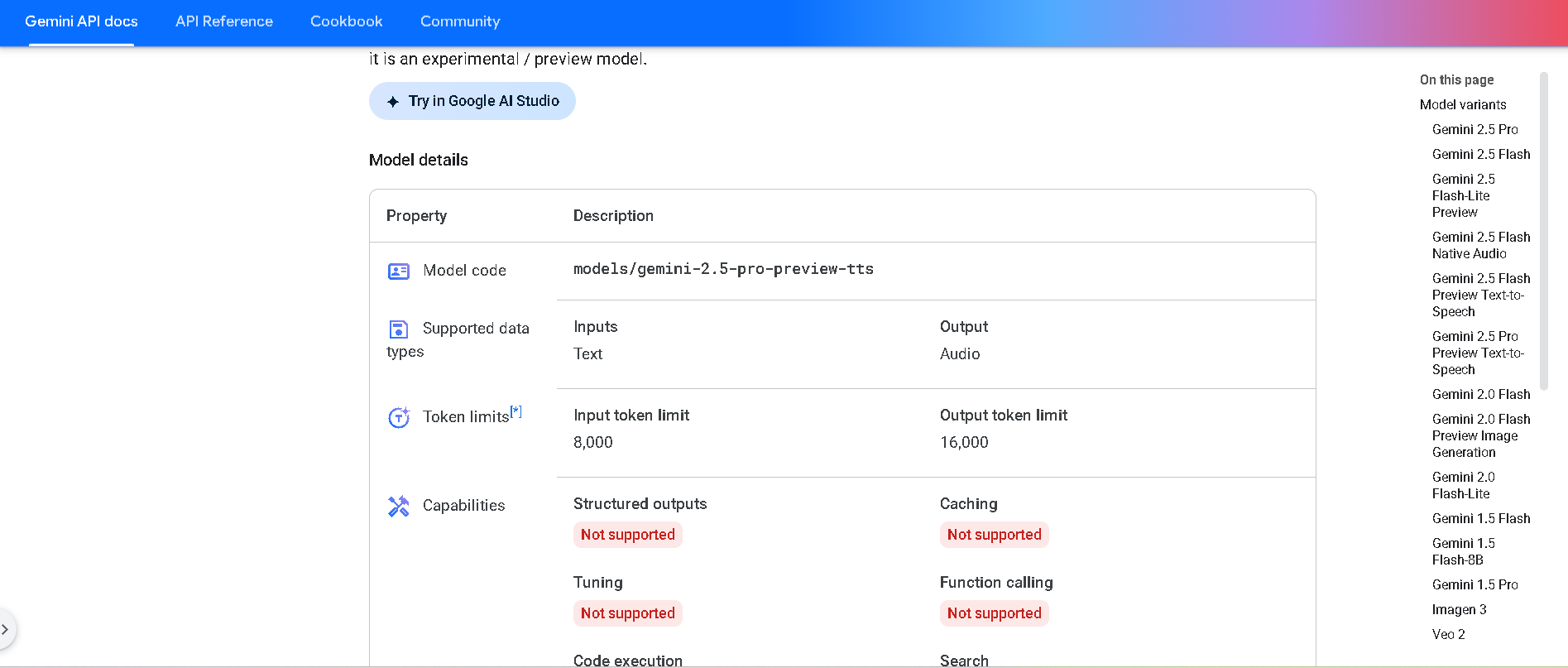

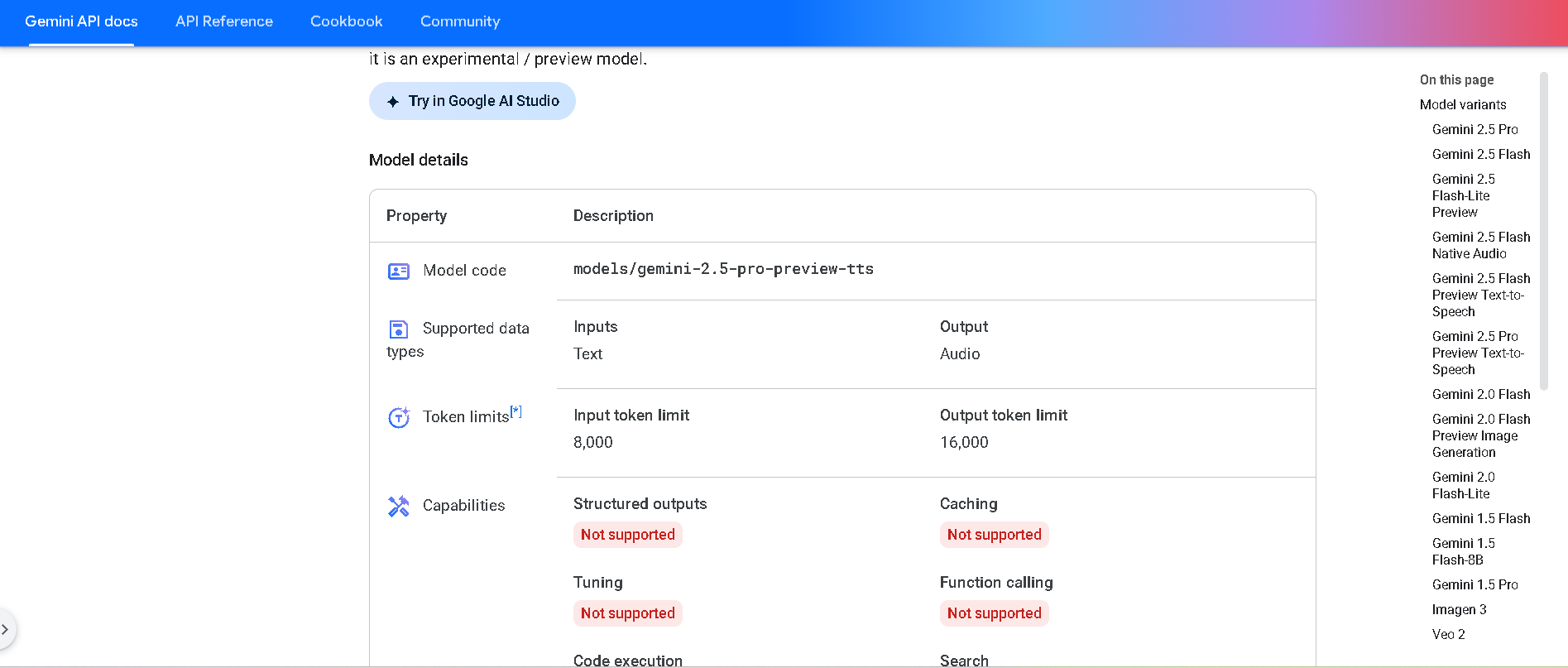

Gemini 2.5 Pro Pre..

Gemini 2.5 Pro Preview TTS is Google DeepMind’s most powerful text-to-speech model in the Gemini 2.5 series, available in preview. It generates natural-sounding audio, from single-speaker readings to multi-speaker dialogue, while offering fine-grained control over voice style, emotion, pacing, and cadence. It is designed for high-fidelity podcasts, audiobooks, and professional voice workflows.

OpenAI GPT 5

GPT-5 is OpenAI’s smartest and most versatile AI model yet, delivering expert-level intelligence across coding, writing, math, health, and multimodal tasks. It is a unified system that dynamically determines when to respond quickly or engage in deeper reasoning, providing accurate and context-aware answers. Powered by advanced neural architectures, GPT-5 significantly reduces hallucinations, enhances instruction following, and excels in real-world applications like software development, creative writing, and health guidance, making it a powerful AI assistant for a broad range of complex tasks and everyday needs.

Murf.ai

Murf.ai is an AI voice generator and text-to-speech platform that delivers ultra-realistic voiceovers for creators, teams, and developers. It offers 200+ multilingual voices, 10+ speaking styles, and fine-grained controls over pitch, speed, tone, prosody, and pronunciation. A low-latency TTS model powers conversational agents with sub-200 ms response, while APIs enable voice cloning, voice changing, streaming TTS, and translation/dubbing in 30+ languages. A studio workspace supports scripting, timing, and rapid iteration for ads, training, audiobooks, podcasts, and product audio. Pronunciation libraries, team workspaces, and tool integrations help standardize brand voice at scale without complex audio engineering.

Murf.ai

Murf.ai is an AI voice generator and text-to-speech platform that delivers ultra-realistic voiceovers for creators, teams, and developers. It offers 200+ multilingual voices, 10+ speaking styles, and fine-grained controls over pitch, speed, tone, prosody, and pronunciation. A low-latency TTS model powers conversational agents with sub-200 ms response, while APIs enable voice cloning, voice changing, streaming TTS, and translation/dubbing in 30+ languages. A studio workspace supports scripting, timing, and rapid iteration for ads, training, audiobooks, podcasts, and product audio. Pronunciation libraries, team workspaces, and tool integrations help standardize brand voice at scale without complex audio engineering.

Murf.ai

Murf.ai is an AI voice generator and text-to-speech platform that delivers ultra-realistic voiceovers for creators, teams, and developers. It offers 200+ multilingual voices, 10+ speaking styles, and fine-grained controls over pitch, speed, tone, prosody, and pronunciation. A low-latency TTS model powers conversational agents with sub-200 ms response, while APIs enable voice cloning, voice changing, streaming TTS, and translation/dubbing in 30+ languages. A studio workspace supports scripting, timing, and rapid iteration for ads, training, audiobooks, podcasts, and product audio. Pronunciation libraries, team workspaces, and tool integrations help standardize brand voice at scale without complex audio engineering.

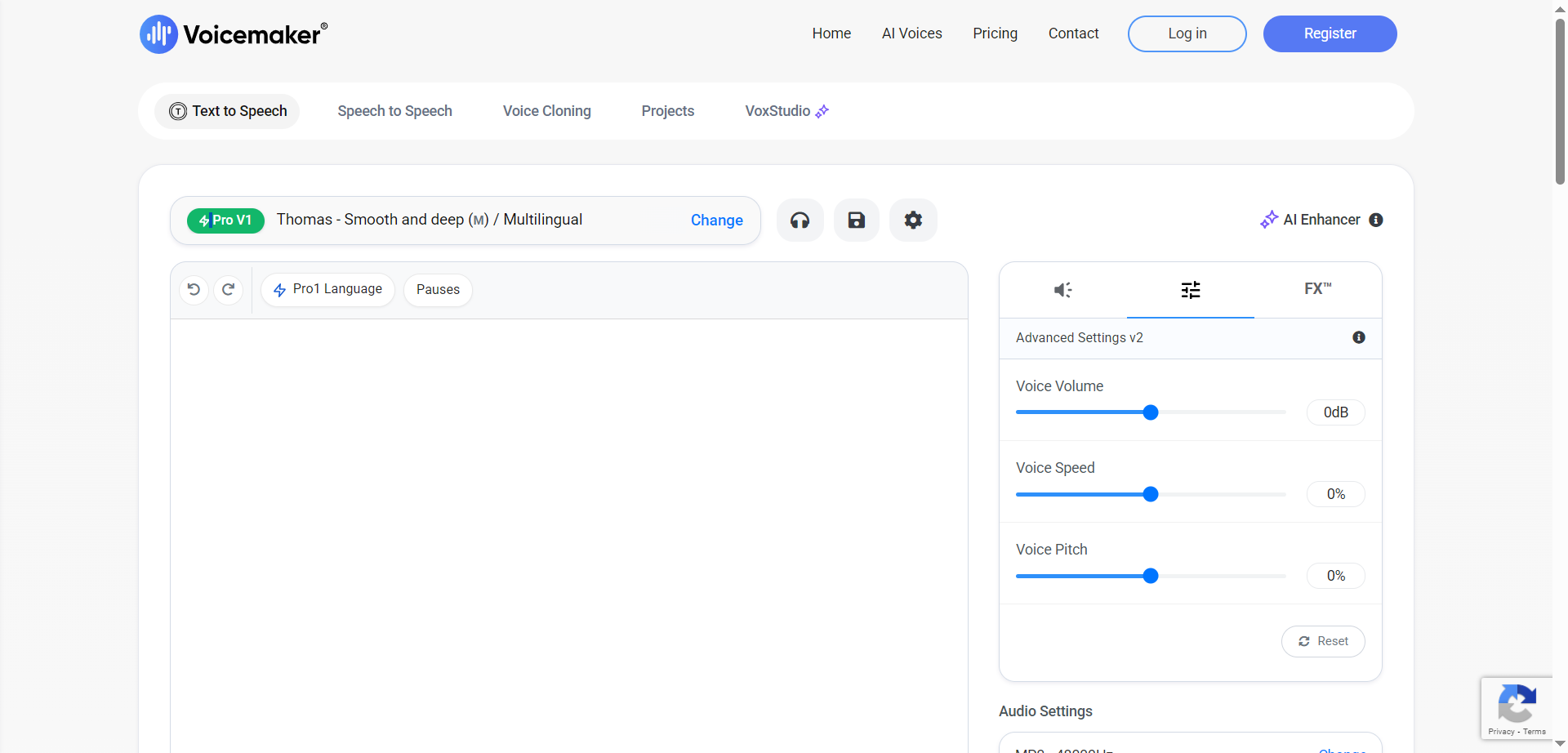

Voicemaker

Voicemaker is an AI-based online text-to-speech platform that turns written text into natural-sounding voiceovers across a wide range of languages and use cases. It offers ultra-fast, low-latency speech suitable for real-time applications, studio-like voices for production-quality narration, and a prompt-based dynamic model for highly expressive storytelling. Creators can fine-tune voice parameters such as volume, speed, pitch, stability, and similarity to match brand or project needs. Generated audio files can be redistributed globally, even after a subscription ends, enabling flexible usage across platforms. With simple controls and scalable voice options, Voicemaker streamlines voiceover creation for content, podcasts, videos, and more.

FakeYou

FakeYou is a community-driven AI voice platform that converts text into speech using a large catalog of celebrity, character, and creator-trained voices. It emphasizes ease of use for quick meme audio, voiceovers, and creative projects, while also supporting longer scripts with stable generation. Users select from many fan-made and studio-quality voice models, then fine-tune outputs with controls like pace and emphasis for better delivery. The platform focuses on fun, experimentation, and shareability, letting creators generate clips for videos, streams, and social posts. With a lively community and frequent new voices, FakeYou makes voice cloning and character TTS accessible for everyday content creation.

Top Medi AI

TopMediai is an all-in-one AI platform built to supercharge content creation across voice, music, and media. It offers advanced tools for text-to-speech, voice cloning, song generation, music covers, and more—allowing creators to generate realistic voiceovers, custom music tracks, and full audio productions in minutes. With thousands of AI voices, support for hundreds of languages and accents, and smart music-generation from prompts, lyrics or images, you get a creative engine built for speed and scale. Whether you're crafting podcasts, videos, games, songs or dubbing, TopMediai packs studio-grade power into a browser-based workflow. The platform also offers API access so developers and creative teams can integrate voice and music generation into their apps and systems.

AI Awaaz

Ai Awaaz is a text-to-speech (TTS) and voice-generation platform developed in India and marketed as India’s first emotion-based TTS AI engine. It enables users to convert text into natural-sounding voiceovers in 20+ Indian languages and 140+ voices, with selectable emotions (e.g., cheerful, sad, whispering) and export formats suitable for videos, podcasts, audiobooks and e-learning modules. The platform emphasises speed and scalability, claiming that a voiceover can be created in just minutes, compared to traditional voice-actor turnaround times. It is positioned for marketers, educators, content creators and agencies needing multi-language voice production with minimal friction.

Voice.ai

Voice.ai is an AI voice platform that delivers realistic voice agents, studio-quality text-to-speech, rapid voice cloning, and a free real-time voice changer all in one system. Businesses can deploy human-like AI phone agents for 24/7 inbound and outbound calls, lead qualification, appointment booking, and customer conversations that integrate seamlessly with CRM tools like Salesforce and HubSpot. It generates lifelike TTS audio in 15+ languages with accent localization, clones voices from just 10 seconds of sample audio, and offers a free voice changer for gamers and streamers to switch voices live. It also offers enterprise compliance, including GDPR, SOC 2, and HIPAA, plus cloud or on-premise deployment options.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai