- Developers & Engineers: Ideal for embedding reasoning with transparency in applications while keeping compute costs low.

- Students & Educators: Use “Think” mode to see step-by-step logic on math, coding, or logic problems.

- Enterprises & SMEs: Deploy reasoning-capable AI affordably for ticketing, document analysis, or chat workflows.

- Content & Tooling Teams: Retrieve structured reasoning traces for automated content generation, debugging, or educational use cases.

- Researchers: Experiment with RL-trained reasoning and evaluate lightweight models against top-tier systems.

How to Use Grok 3 Mini (Latest)?

- Access via Cloud & API: Now in public preview on Azure AI Foundry Models, GitHub Models, and the xAI API under the model ID `grok-3-mini-beta`.

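- Activate “Think” Mode: Tap Think in the Grok app, or request high reasoning effort through the API (`"reasoning_effort": "high"` on the xAI API; gateways such as OpenRouter accept `"reasoning": {"effort": "high"}`) to get the full reasoning trace alongside each response.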

- Submit Multimodal Prompts: Supports text and image inputs with a very large context window (~131K tokens).

- Deploy Quickly: Use the OpenAI-compatible API to integrate the model into apps, bots, or pipelines with transparent reasoning.

- Balance Speed & Cost: Choose between the standard and fast variants; the standard model costs about $0.30 per million input tokens and $0.50 per million output tokens.
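A minimal Python sketch of the API flow described above, using only the standard library. It assumes xAI's OpenAI-compatible endpoint at `https://api.x.ai/v1/chat/completions`, a top-level `reasoning_effort` field (OpenRouter-style gateways instead take `"reasoning": {"effort": "high"}`), and a `reasoning_content` field on the returned message. The helper names `build_request`, `split_answer`, and `send` are hypothetical; check the provider's current API reference before relying on any field name.

```python
import json
import urllib.request

# xAI's OpenAI-compatible chat-completions endpoint.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"


def build_request(prompt: str, effort: str = "high", model: str = "grok-3-mini-beta") -> dict:
    """Build a chat-completions payload that requests the given reasoning effort."""
    if effort not in ("low", "high"):
        raise ValueError("effort must be 'low' or 'high'")
    return {
        "model": model,
        # Assumed xAI field; OpenRouter-style gateways use {"reasoning": {"effort": ...}} instead.
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


def split_answer(response: dict) -> tuple[str, str]:
    """Return (reasoning_trace, final_answer) from a parsed chat-completions response."""
    message = response["choices"][0]["message"]
    # Assumed field name for the trace; falls back to "" when it is absent.
    return message.get("reasoning_content", ""), message.get("content", "")


def send(payload: dict, api_key: str) -> dict:
    """POST the payload and return the parsed JSON body (needs network access and a valid key)."""
    request = urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

For example, `reasoning, answer = split_answer(send(build_request("Is 97 prime?"), api_key))` returns the trace and the final answer separately, which suits the auditing and education use cases listed above. Keeping payload construction separate from `send` lets you log or inspect the exact request before it leaves your system.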

Key Features

- Transparent Chain-of-Thought: Offers visible reasoning steps in output, ideal for auditing and education.

- Compact Reasoning Power: High benchmark scores (95.8% AIME 2024, ~80% LiveCodeBench) at lower compute cost.

- Multimodal & Long-Context: Supports image analysis and up to 131K tokens, matching flagship context capacity.

- Wide Preview Access: Available on enterprise-grade platforms such as Azure AI Foundry and GitHub Models, which makes experimentation easy.

Pros

- Full chain-of-thought transparency per response

- High reasoning accuracy matched with affordability

- Large multimodal context with image support

- Available in public preview on cloud (Azure/GitHub)

- Easy integration with OpenAI-client compatibility

Cons

- Slightly less accurate than full Grok 3 on deep tasks

- “Big Brain” mode for deeper reasoning is not available

- Still in beta preview: features and availability may change before production release

Free Tier

$0.00

Limited access to DeepSearch

Limited access to DeeperSearch

SuperGrok

$30/month

More Aurora Images - 100 Images / 2h

Even Better Memory - 128K Context Window

Extended access to Thinking - 30 Queries / 2h

Extended access to DeepSearch - 30 Queries / 2h

Extended access to DeeperSearch - 10 Queries / 2h

API

$0.30 input / $0.50 output per 1M tokens

Input - $0.30/M

Cached Input - $0.075/M

Output - $0.50/M
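The API rates above translate into a per-request cost with simple arithmetic. A minimal Python sketch, assuming cached tokens are the cached portion of the input tokens and that any reasoning tokens are billed as output tokens (an assumption; confirm against xAI's billing documentation). `estimate_cost` is a hypothetical helper, and the rate constants simply mirror the prices listed above.

```python
# Per-million-token USD rates taken from the API pricing listed above (may change).
INPUT_PER_M = 0.30
CACHED_INPUT_PER_M = 0.075
OUTPUT_PER_M = 0.50


def estimate_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimate one request's cost in USD.

    Assumes cached_tokens is the cached subset of input_tokens and that
    reasoning tokens are already counted in output_tokens.
    """
    if min(input_tokens, output_tokens, cached_tokens) < 0:
        raise ValueError("token counts must be non-negative")
    if cached_tokens > input_tokens:
        raise ValueError("cached_tokens cannot exceed input_tokens")
    uncached_tokens = input_tokens - cached_tokens
    total = (
        uncached_tokens * INPUT_PER_M
        + cached_tokens * CACHED_INPUT_PER_M
        + output_tokens * OUTPUT_PER_M
    )
    # Rates are per million tokens, so scale the weighted sum down.
    return total / 1_000_000
```

For example, `estimate_cost(100_000, 10_000, cached_tokens=20_000)` comes to about $0.0305: 80K uncached input tokens at $0.30/M ($0.024), 20K cached tokens at $0.075/M ($0.0015), and 10K output tokens at $0.50/M ($0.005).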

Similar AI Tools

OpenAI GPT 4.1 min..

GPT-4.1 Mini is a lightweight version of OpenAI’s advanced GPT-4.1 model, designed for efficiency, speed, and affordability without compromising much on performance. Tailored for developers and teams who need capable AI reasoning and natural language processing in smaller-scale or cost-sensitive applications, GPT-4.1 Mini brings the power of GPT-4.1 into a more accessible form factor. Perfect for chatbots, content suggestions, productivity tools, and streamlined AI experiences, this compact model still delivers impressive accuracy, fast responses, and a reliable understanding of nuanced prompts—all while using fewer resources.

Meta Llama 3

Meta Llama 3 is Meta’s third-generation open-weight large language model family. The initial April 2024 release offered 8B and 70B models with an 8K-token context window; the July 2024 Llama 3.1 update added a 405B model and extended the context window to 128K tokens across all three sizes. Llama 3 excels at reasoning, code generation, multilingual text, and instruction-following, and the later 3.2 series introduced multimodal vision (image understanding). Safety tools such as Llama Guard 3, Code Shield, and CyberSec Eval 2 are provided to help keep outputs responsible.

grok-2-vision

Grok 2 Vision (also known as Grok‑2‑Vision‑1212 or grok‑2‑vision‑latest) is xAI’s multimodal variant of Grok 2, designed specifically for advanced image understanding and generation. Launched in December 2024, it supports joint text+image inputs up to 32,768 tokens, excelling in visual math reasoning (MathVista), document question answering (DocVQA), object recognition, and style analysis—while also offering photorealistic image creation via the FLUX.1 model.

grok-2-vision-late..

Grok 2 Vision is xAI’s advanced vision-enabled variant of Grok 2, launched in December 2024. It supports joint text + image inputs with a 32K-token context window, combining image understanding, document QA (e.g., DocVQA), and visual math reasoning (e.g., MathVista) with photorealistic image generation via FLUX.1 (later complemented by Aurora). It is billed as achieving state-of-the-art results on multimodal tasks.

grok-2-image-1212

Grok 2 Image 1212 (also known as grok-2-image-1212) is xAI’s December 2024 release of its unified image generation and understanding model. Built on Grok 2, it combines Aurora-powered photorealistic image creation with strong multimodal comprehension, handling image editing, vision QA, chart interpretation, and document analysis within a single API and a 32,768-token context.

Meta Llama 3.1

Llama 3.1 is Meta’s most advanced open-source Llama 3 model, released on July 23, 2024. It comes in three sizes (8B, 70B, and 405B parameters) with an expanded 128K-token context window and improved multilingual and tool-use capabilities; the models are text-only, with vision support arriving later in Llama 3.2. It significantly outperforms Llama 3 and rivals proprietary models across benchmarks like GSM8K, MMLU, HumanEval, ARC, and tool-augmented reasoning tasks.

Meta Llama 3.2

Llama 3.2 is Meta’s multimodal and lightweight update to its Llama 3 line, released on September 25, 2024. The family includes 1B and 3B text-only models optimized for edge devices, as well as 11B and 90B Vision models capable of image understanding. It offers a 128K-token context window, Grouped-Query Attention for efficient inference, and opens up on-device, private AI with strong multilingual (e.g. Hindi, Spanish) support.

Mistral Small 3.1

Mistral Small 3.1 is the March 17, 2025 update to Mistral AI's open-source 24B-parameter small model. It offers instruction-following, multimodal vision understanding, and an expanded 128K-token context window, delivering performance on par with or better than GPT‑4o Mini, Gemma 3, and Claude 3.5 Haiku—all while maintaining fast inference speeds (~150 tokens/sec) and running on devices like an RTX 4090 or a 32 GB Mac.

Grok Studio

Grok Studio is a split-screen, AI-assisted collaborative workspace from xAI, designed to elevate productivity with seamless real-time editing across documents, code, data reports, and even browser-based games. Embedded in the Grok AI platform, it transforms traditional chat-like interactions into an interactive creation environment. The right-hand pane displays your content—be it code, docs, or visual snippets—while the left-hand pane hosts Grok AI, offering suggestions, edits, or executing code live. Users can import files directly from Google Drive, supporting Docs, Sheets, and Slides, and write or run code in languages such as Python, JavaScript, TypeScript, C++, and Bash in an instant preview workflow. Released in April 2025, Grok Studio is accessible to both free and premium users, breaking ground in AI-assisted collaboration by integrating content generation, coding, and creative prototyping into one unified interface.

Upstage - Solar Mi..

Solar Mini is Upstage’s compact, high-performance large language model (LLM) with under 30 billion parameters, engineered for exceptional speed and efficiency without sacrificing quality. It outperforms comparable models like Llama 2, Mistral 7B, and Ko-Alpaca on major benchmarks, delivering responses similar to GPT-3.5 but 2.5 times faster. Thanks to its innovative Depth Up-scaling (DUS) and continued pre-training, Solar Mini is easily customized for domain-specific tasks, supports on-device deployment, and is especially suited for decentralized, responsive AI applications.

Grok Imagine

Grok Imagine is an AI-powered image and video generation tool developed by Elon Musk’s xAI under the Grok brand. It transforms text or image inputs into photorealistic images (up to 1024×1024) and short video clips (typically 6 seconds with synchronized audio), all powered by xAI's Aurora engine and designed for fast, creative production.

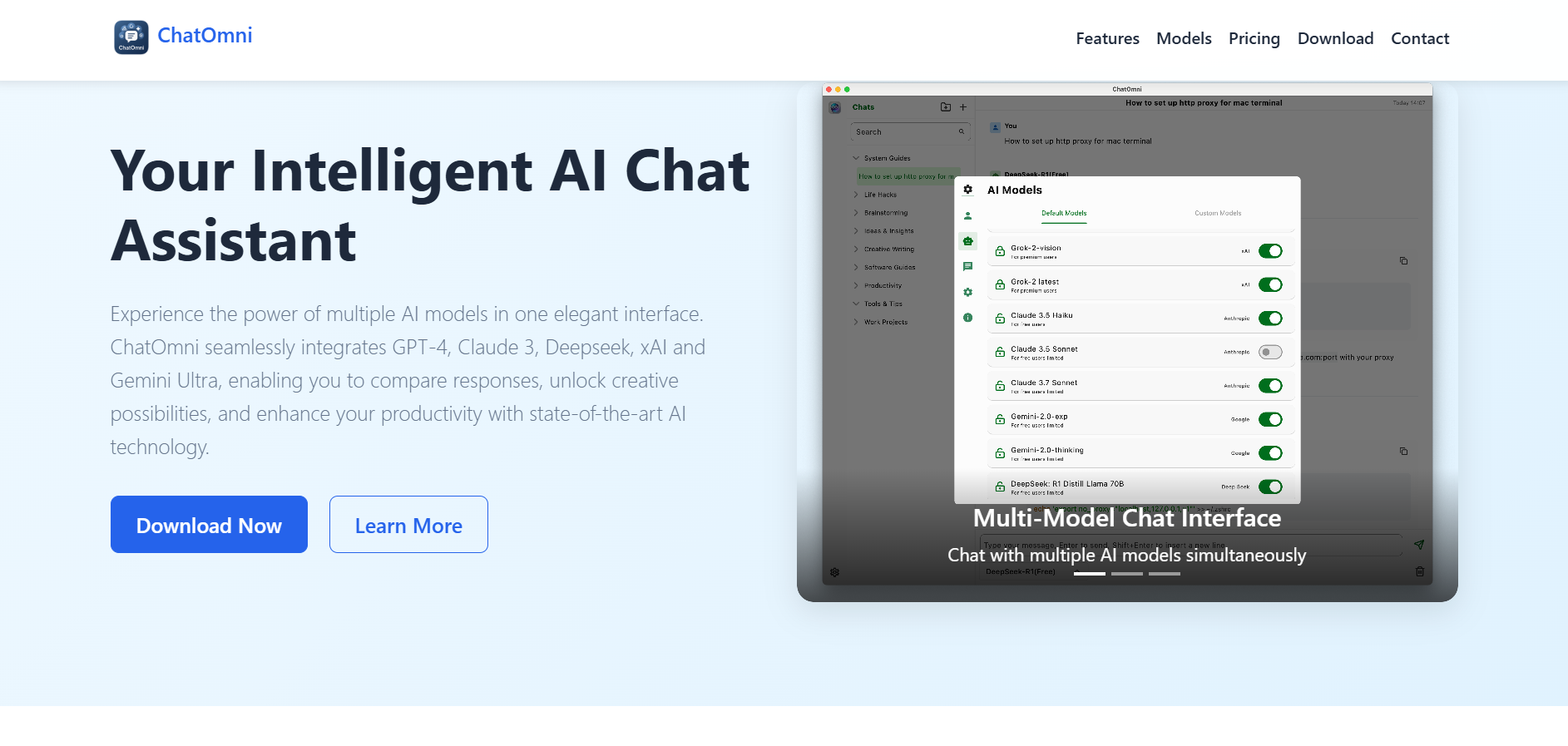

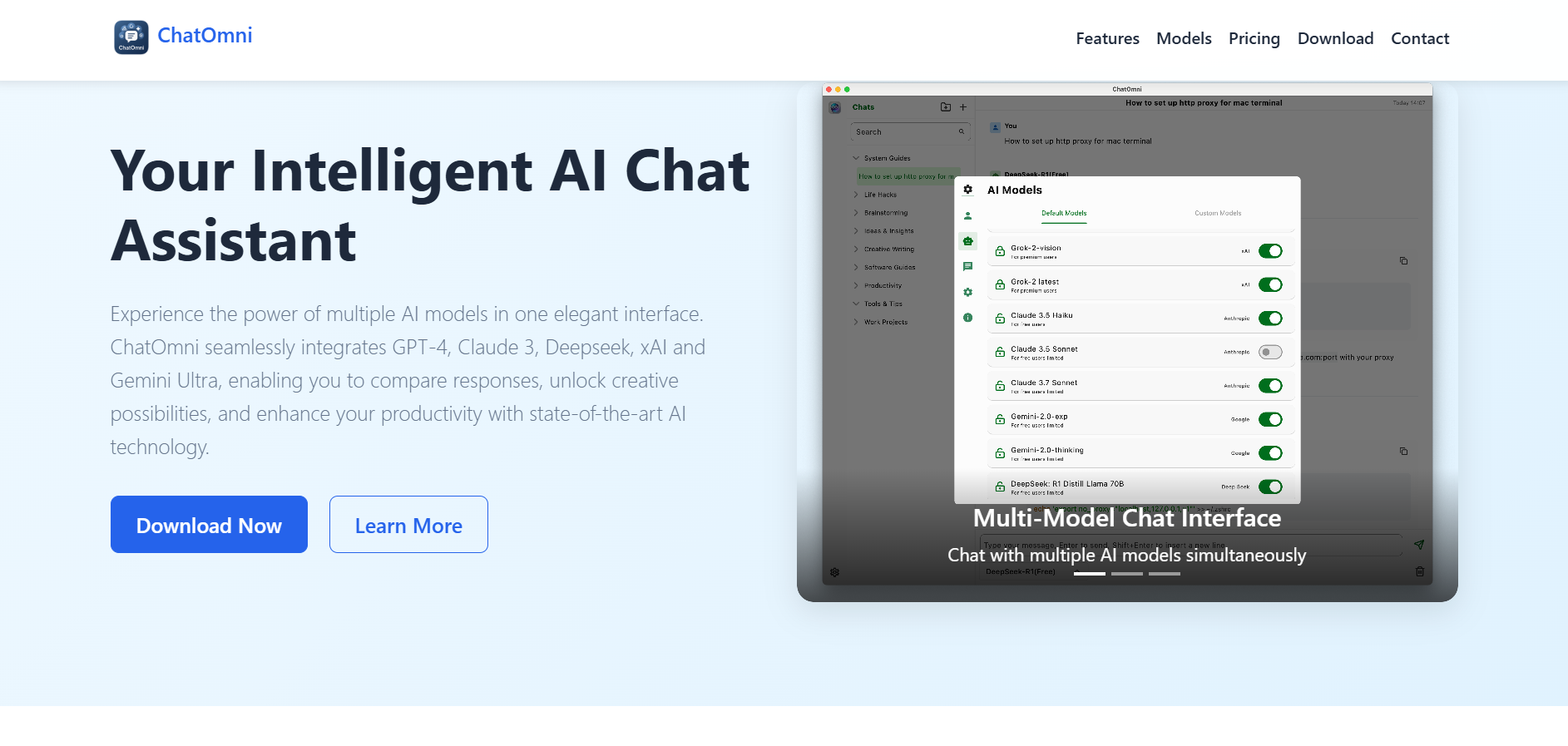

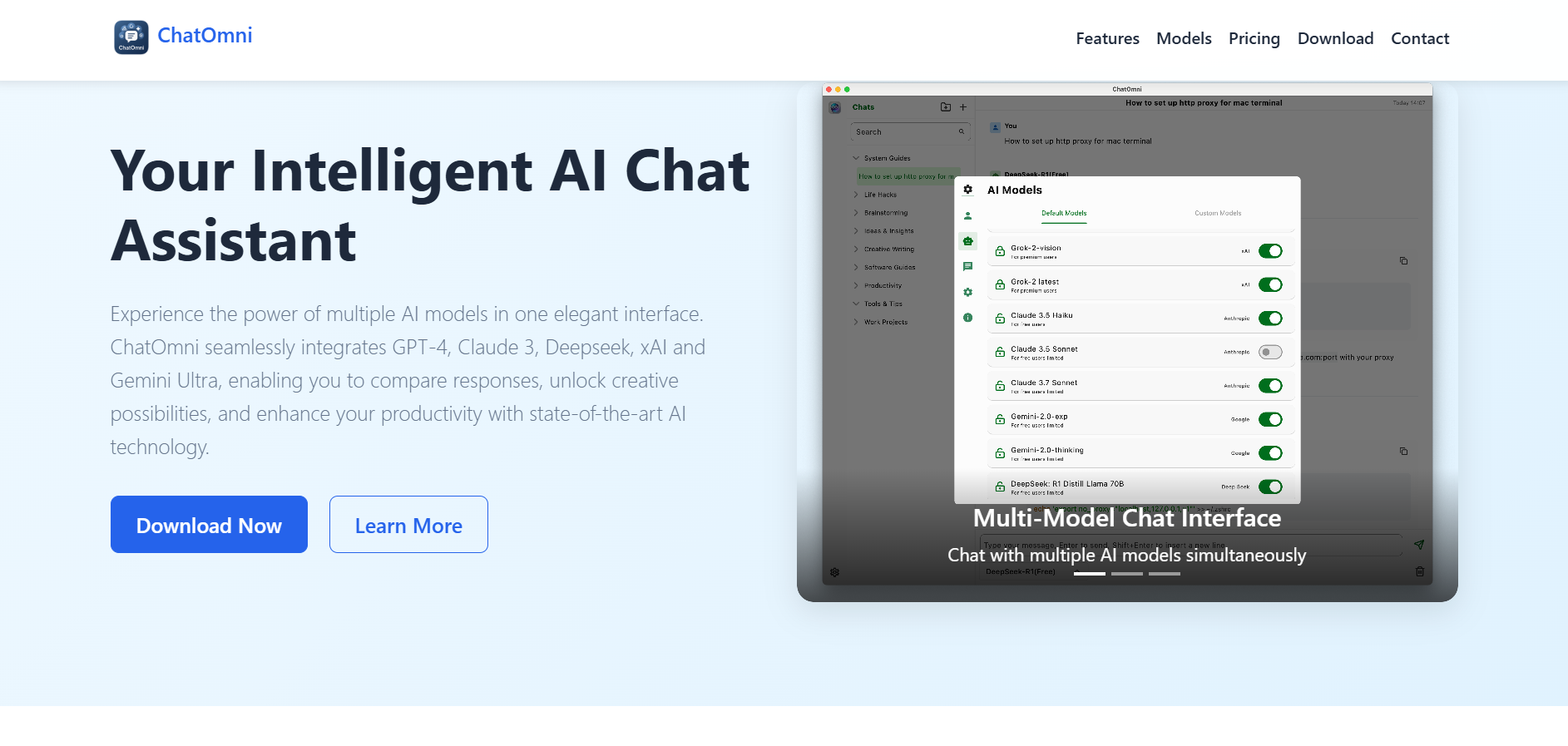

ChatOmni

ChatOmni is a multi-model AI chat platform that brings together multiple leading AI models into a single, unified conversational interface designed to enhance user productivity, creativity, and information depth. The platform supports top-tier large language models such as GPT-4, Claude 3, xAI Grok, Gemini Ultra, and DeepSeek, allowing users to chat, compare responses side by side, and tailor outputs to their needs without switching between tools. ChatOmni provides advanced chat management features, including unlimited history, folders, advanced search, and export options, enabling users to organize interactions efficiently. With custom API key support and automatic updates to the latest available models, the platform is positioned as a flexible, evolving AI assistant for research, writing, design, brainstorming, and multi-model comparison workflows across professional and personal contexts.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai