Despite the “small” in its name, text-embedding-3-small delivers strong results for most embedding workloads, such as search, recommendation, clustering, and classification, at a fraction of the cost of larger models.

- Search & Retrieval Engineers: Build fast, scalable semantic search engines with compact, high-quality vectors.

- Recommendation System Developers: Generate embeddings for user behavior, product descriptions, or content metadata.

- NLP Practitioners: Train classification or clustering models on top of semantically rich vector representations.

- Startup Teams & Solo Devs: Reduce costs while still getting great embedding quality for chatbots, apps, or internal tools.

- Data Scientists: Use embeddings for anomaly detection, sentiment analysis, or document similarity tasks.

- Academic Researchers: Use it for large-scale corpus analysis, text clustering, or low-cost NLP experiments.

How to Use text-embedding-3-small?

- Step 1: Choose the Model: Pass text-embedding-3-small as the model when calling the v1/embeddings API endpoint.

- Step 2: Format Your Input: Send plain text (sentences, paragraphs, or whole documents) of up to 8,192 tokens per input. A single request can also carry a list of inputs.

- Step 3: Get Your Embeddings: Receive a dense vector (1,536 dimensions by default) that numerically represents the meaning of the input text.

- Step 4: Use Embeddings in Applications: Compare vectors for similarity, or use them for clustering, vector search, classification, or as input to downstream models.

- Step 5: Store and Index Efficiently: Store and search embeddings with a vector database such as Pinecone or Weaviate, or with a similarity-search library such as FAISS.
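The five steps above can be sketched without any SDK or network access. This is a minimal, hypothetical sketch: the helper names (`chunk`, `build_request_body`, `parse_embeddings`, `InMemoryIndex`) are illustrative, the response is hand-written in the shape of OpenAI's documented embeddings response (a `data` list whose items carry `index` and `embedding`), and the brute-force index only stands in for Pinecone, Weaviate, or FAISS. `chunk` works on token IDs, which are assumed to come from a tokenizer such as tiktoken's `cl100k_base` encoding (not shown).

```python
import json
import math

MODEL = "text-embedding-3-small"
MAX_INPUT_TOKENS = 8192  # per-input limit quoted in the steps above


def chunk(tokens, max_tokens=MAX_INPUT_TOKENS, overlap=0):
    """Step 2: split token IDs into windows of at most max_tokens;
    consecutive windows share `overlap` tokens."""
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    if not tokens:
        return []
    step = max_tokens - overlap
    return [tokens[i:i + max_tokens]
            for i in range(0, max(len(tokens) - overlap, 1), step)]


def build_request_body(inputs, model=MODEL):
    """Step 1: JSON body for POST /v1/embeddings; `inputs` may be a list of strings."""
    return json.dumps({"model": model, "input": inputs})


def parse_embeddings(response_text):
    """Step 3: return vectors in input order, using each item's 'index' field."""
    data = json.loads(response_text)["data"]
    return [item["embedding"] for item in sorted(data, key=lambda d: d["index"])]


def cosine(a, b):
    """Step 4: cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Step 5 stand-in: brute-force search, not a real vector database."""

    def __init__(self):
        self.items = []  # (doc_id, vector) pairs

    def add(self, doc_id, vector):
        self.items.append((doc_id, vector))

    def search(self, query, k=3):
        scored = [(doc_id, cosine(query, vec)) for doc_id, vec in self.items]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


if __name__ == "__main__":
    # Hand-written response in the documented shape, with toy 3-D vectors
    # standing in for real 1,536-D embeddings.
    fake_response = json.dumps({"object": "list", "data": [
        {"object": "embedding", "index": 1, "embedding": [0.0, 1.0, 0.0]},
        {"object": "embedding", "index": 0, "embedding": [1.0, 0.0, 0.0]},
    ]})
    index = InMemoryIndex()
    for doc_id, vec in zip(["cats", "dogs"], parse_embeddings(fake_response)):
        index.add(doc_id, vec)
    print(build_request_body(["cats", "dogs"]))
    print(index.search([0.9, 0.1, 0.0], k=1))
```

In a real pipeline the request body would be sent with an `Authorization: Bearer` header, or replaced by the official `openai` package's `client.embeddings.create(model=..., input=...)`. OpenAI states that its embeddings are normalized to length 1, so on real vectors a plain dot product ranks results the same way as cosine similarity; the sketch computes full cosine so the unnormalized toy vectors also work.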

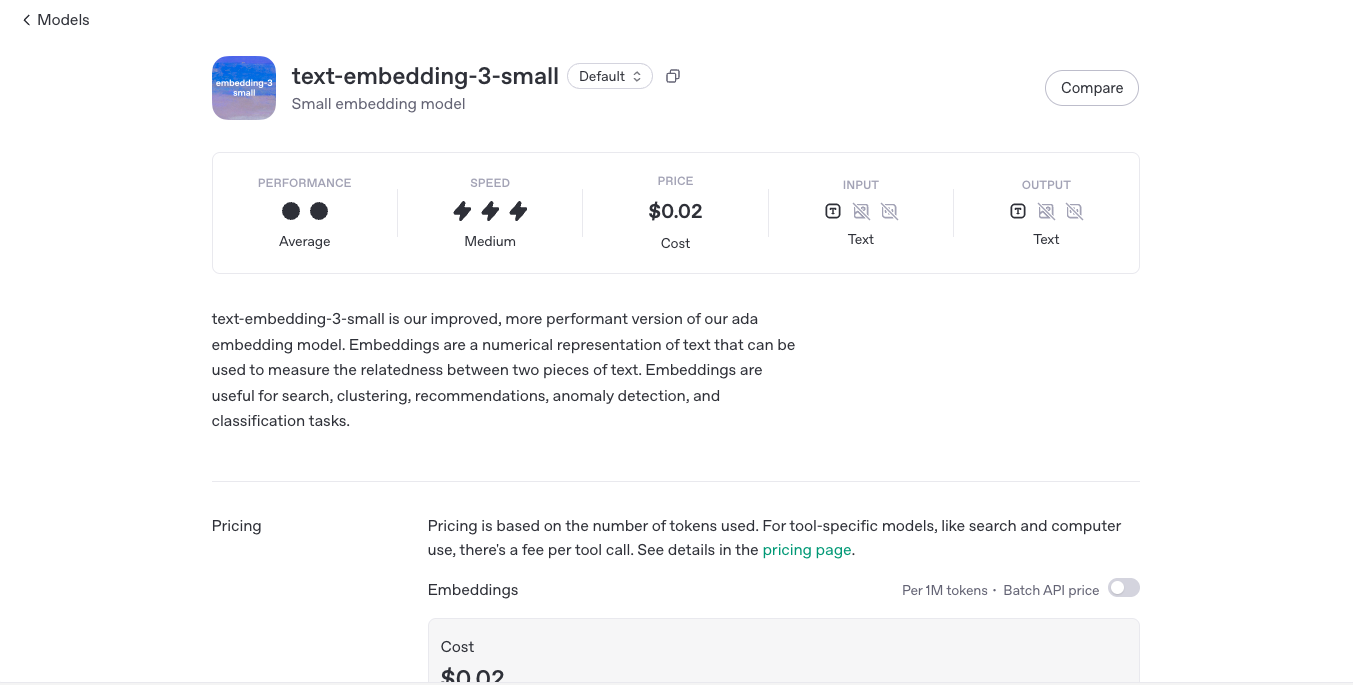

- Incredibly Affordable: About 6.5x cheaper than text-embedding-3-large ($0.02 vs. $0.13 per million tokens), making it well suited to large-scale use.

- Compact Size: Its default 1,536 dimensions are half of text-embedding-3-large's 3,072, balancing speed, cost, and performance.

- High Accuracy: Scores only a few points below text-embedding-3-large on OpenAI's published English (MTEB) benchmark results.

- Long Context Handling: Accepts inputs of up to 8192 tokens, ideal for embedding large documents or transcripts.

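- Updated Architecture: Part of OpenAI's third-generation text-embedding-3 series, released in January 2024.

- Smooth Transition Path: Uses the same endpoint and default dimension (1,536) as the older text-embedding-ada-002, so migrating is mostly a model-name change plus re-embedding existing data, because vectors from different models cannot be compared.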

- Ultra Low-Cost Option: Scalable for millions of embeddings without budget blowout.

- Fast & Lightweight: Lower latency and better throughput for real-time applications.

- High-Quality Vectors: Delivers semantically meaningful vectors with strong benchmark performance.

- Handles Long Documents: Supports longer inputs, reducing the need to chunk text aggressively.

- Easily Integrates with Vector DBs: Works seamlessly with Pinecone, Qdrant, FAISS, and others.

- Slightly Lower Accuracy than the Large Model: text-embedding-3-large scores higher on benchmarks, with the widest gap on multilingual retrieval.

- No Custom Training: Fine-tuning is not supported; you must use the model as-is.

- Limited Multilingual Guidance: Performance on non-English text may vary.

- 1,536-D Vectors Are Still Relatively Large: They may be overkill for ultra-lightweight applications, although the dimensions request parameter can return shorter vectors.

- Model Choice Matters: Users must choose between the small and large models based on their cost and accuracy trade-offs.
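On the size trade-offs listed above: OpenAI's text-embedding-3 models accept a `dimensions` request parameter that returns shorter vectors, and OpenAI's embeddings guide describes an equivalent manual route, truncating the vector and re-normalizing it to unit length. A minimal sketch of that manual route plus the storage arithmetic, assuming float32 storage and ignoring index overhead; `shorten` and `storage_bytes` are hypothetical helpers, not library functions.

```python
import math


def shorten(embedding, dims):
    """Keep the first `dims` values, then rescale to unit (L2) length."""
    if not 0 < dims <= len(embedding):
        raise ValueError("dims must be between 1 and len(embedding)")
    head = embedding[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in head]


def storage_bytes(num_vectors, dims, bytes_per_value=4):
    """Raw size of a vector store (float32 by default), ignoring index overhead."""
    return num_vectors * dims * bytes_per_value


if __name__ == "__main__":
    print(shorten([3.0, 4.0, 12.0], 2))  # toy vector, not a real embedding
    for d in (1536, 512, 256):
        print(d, storage_bytes(1_000_000, d) / 1e9, "GB")
```

By this arithmetic, one million vectors take about 6.1 GB raw at 1,536 dimensions and about 1 GB at 256. Shorter vectors trade some accuracy for space, so it is worth benchmarking candidate sizes on your own data.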

Pricing: $0.02 per 1 million tokens
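At this rate, cost scales linearly with the number of tokens embedded. A quick estimate, assuming a hypothetical corpus of 50,000 documents averaging 500 tokens each (25 million tokens, roughly $0.50) and, for comparison, $0.13 per million tokens for text-embedding-3-large (OpenAI's list price at the time of writing; check current pricing before budgeting). `embedding_cost_usd` is an illustrative helper, and real token counts should come from a tokenizer rather than word counts.

```python
def embedding_cost_usd(total_tokens, usd_per_million=0.02):
    """Estimated cost at a flat per-million-token price."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    docs, avg_tokens = 50_000, 500  # hypothetical corpus
    tokens = docs * avg_tokens      # 25,000,000 tokens
    print(f"small: ${embedding_cost_usd(tokens):.2f}")
    print(f"large: ${embedding_cost_usd(tokens, usd_per_million=0.13):.2f}")
```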

Similar AI Tools

OpenAI Dall-E 2

DALL·E 2 is an AI model developed by OpenAI that generates images from text descriptions (prompts). It improves on its predecessor, DALL·E, by producing higher-resolution, more realistic, and more creative images. The model can also edit existing images (inpainting), extend images beyond their original borders (outpainting), and create artistic interpretations of text descriptions. ❗ Note: OpenAI has phased out DALL·E 2 in favor of DALL·E 3, which offers more advanced image generation.

OpenAI o1-pro

o1-pro is a version of OpenAI’s o1 reasoning model that uses more compute to think longer before answering, aimed at giving consistently better answers on hard problems in areas such as math, science, and coding. It trades speed and cost for reliability: it is slower and considerably more expensive than general-purpose models, so it fits tasks where answer quality matters more than latency.

OpenAI GPT 4.1 min..

GPT-4.1 Mini is a lightweight version of OpenAI’s advanced GPT-4.1 model, designed for efficiency, speed, and affordability without compromising much on performance. Tailored for developers and teams who need capable AI reasoning and natural language processing in smaller-scale or cost-sensitive applications, GPT-4.1 Mini brings the power of GPT-4.1 into a more accessible form factor. Perfect for chatbots, content suggestions, productivity tools, and streamlined AI experiences, this compact model still delivers impressive accuracy, fast responses, and a reliable understanding of nuanced prompts—all while using fewer resources.

OpenAI GPT 4o Sear..

GPT-4o Search Preview is a preview model in OpenAI’s Chat Completions API that is trained to understand and run web search queries, then answer using the retrieved results with citations to its sources. Rather than relying only on its training data, it can pull in current information from the web. This preview gives developers an early look at search built directly into the GPT-4o model family.

OpenAI GPT 4o mini..

GPT-4o-mini Search Preview is the smaller, lower-cost counterpart to GPT-4o Search Preview: a model based on GPT-4o mini that is trained to understand and run web search queries in the Chat Completions API. Designed for real-time applications and low-latency environments, it grounds answers in current search results while using fewer resources, which makes it a good fit for startups and other cost-sensitive products that need search that just works.

OpenAI Computer Us..

computer-use-preview is OpenAI’s experimental model that enables AI agents to interact with computer interfaces much as a human would. It combines GPT-4o’s vision and reasoning capabilities with reinforcement learning to perceive, navigate, and control graphical user interfaces (GUIs) using screenshots and natural language instructions. This model can perform tasks such as clicking buttons, typing text, filling out forms, and navigating multi-step workflows across web and desktop applications. It represents a significant step toward general-purpose AI agents capable of automating real-world digital tasks without relying on traditional APIs.

OpenAI GPT 4 Turbo

GPT-4 Turbo is OpenAI’s enhanced version of GPT-4, engineered for faster performance, longer context, and lower cost. Released in November 2023, it has a 128,000-token context window, letting it work with much longer and more complex inputs. It supports multimodal inputs, including text and images, making it versatile for various applications.

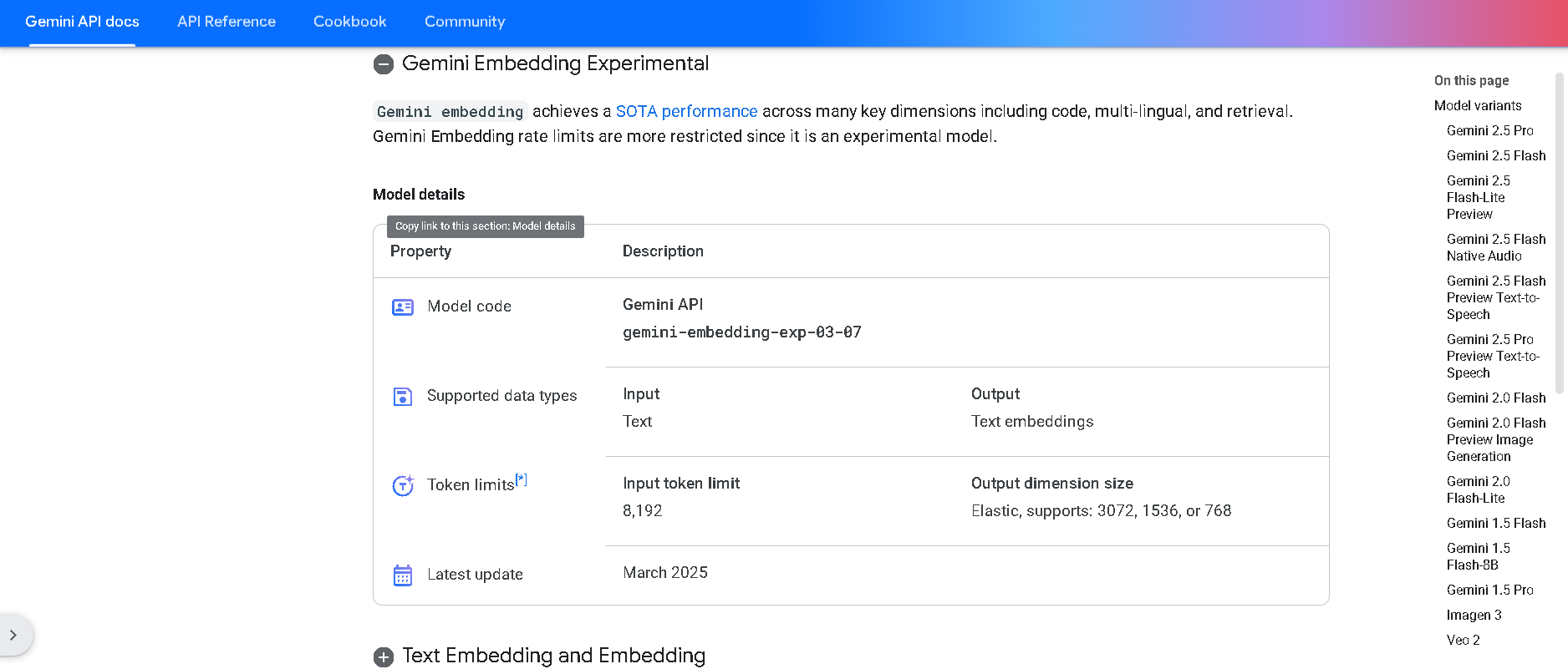

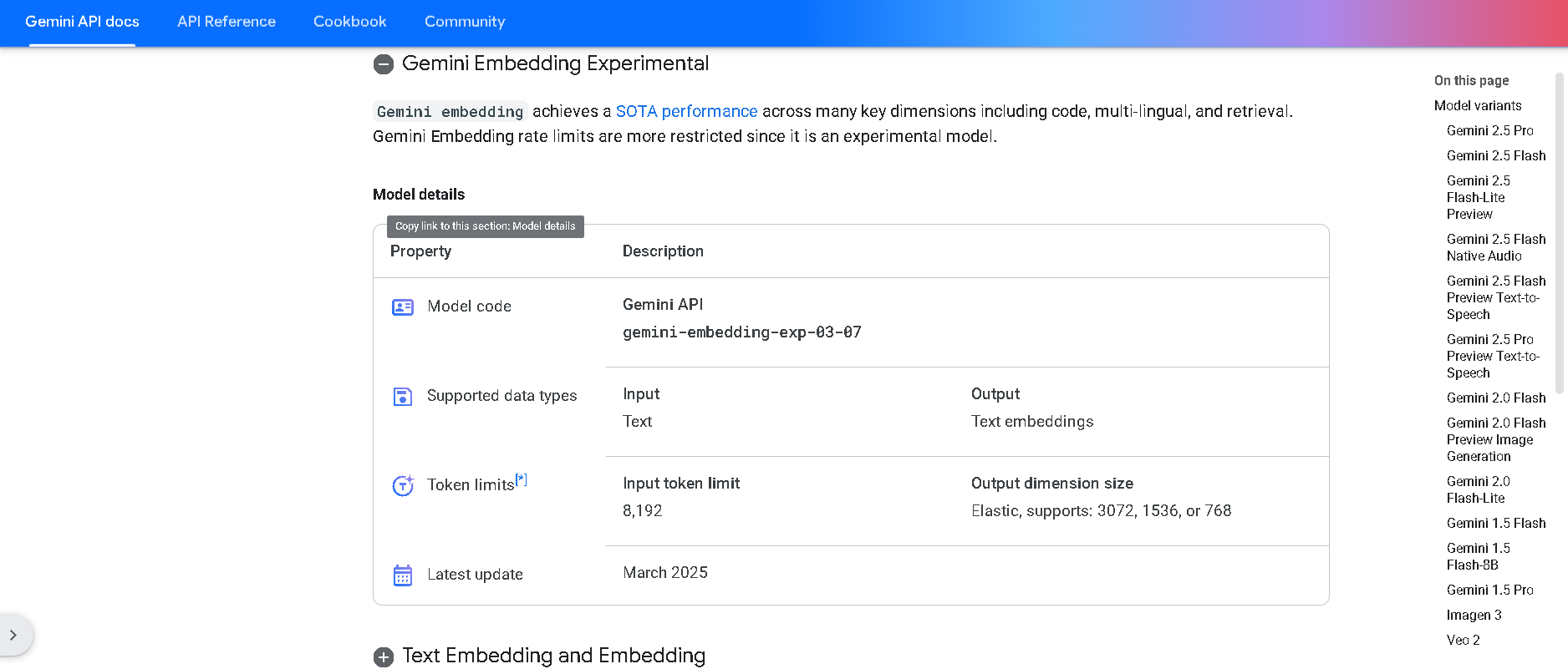

Gemini Embedding

Gemini Embedding is Google DeepMind’s state-of-the-art text embedding model, built on the Gemini model family. It transforms text into high-dimensional numerical vectors (up to 3,072 dimensions) and generalizes well across more than 100 languages and across domains such as code. It achieves state-of-the-art results on the Massive Multilingual Text Embedding Benchmark (MMTEB), outperforming prior models on multilingual, English, and code tasks.

Mistral Embed

Mistral Embed is Mistral AI’s high-performance text embedding model designed for semantic retrieval, clustering, classification, and retrieval-augmented generation (RAG). With support for up to 8,192 tokens and producing 1,024-dimensional vectors, it delivers state-of-the-art semantic similarity and organization capabilities.

Upstage Document P..

Upstage Document Parse is an advanced AI-powered document processing tool designed to convert complex documents such as PDFs, scanned images, spreadsheets, and slides into structured, machine-readable text formats like HTML and Markdown. It excels at accurately recognizing and preserving complex layouts, tables, charts, and even handwritten elements with unmatched speed—processing over 100 pages in under a minute. The tool improves knowledge retrieval, enables quick decision-making through AI-driven summarization, and enhances accessibility by converting lengthy reports and legal documents into clean digital formats. Upstage Document Parse is scalable, easy to integrate via REST API or on-premises deployment, and certified for enterprise-grade security including SOC2 and ISO 27001.

Chat01.ai

OpenAI01.net is a third-party, browser-based chat platform that lets you use OpenAI’s o1 family of advanced reasoning models for free, without needing your own API key or paid account. Branded as Chat01.ai in some places, it focuses on giving users generous access to o1-preview and o1-mini through a simple chat interface so they can tackle complex math, coding, science, and problem-solving tasks. The site often features public question-and-answer threads, allowing you to study other users’ prompts and responses to improve your own prompting skills. It acts as an accessible front-end to powerful OpenAI models, but is not officially operated by OpenAI.

Text to API

Text to API is an LLM-powered API engine that lets you build and deploy AI-driven APIs in seconds using natural language instead of boilerplate backend code. You describe the data or functionality you want, and the engine handles schema creation, integration with providers like Firecrawl and OpenAI, and endpoint deployment behind the scenes. It’s designed to turn messy web data into clean, structured APIs, so developers can focus on product logic rather than wiring infrastructure. With a workflow built around dynamic schema generation, intelligent web scraping, and real-time updates, it’s ideal for quickly prototyping or shipping production-ready AI features.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai