- Backend Developers: Spin up structured APIs from text descriptions without repetitive boilerplate.

- Full-Stack Engineers: Connect frontends to AI-powered endpoints in minutes.

- Data Engineers: Transform web data into queryable, structured APIs at scale.

- Product Builders & Hackers: Validate ideas fast with working endpoints instead of mockups.

- AI Engineers: Orchestrate OpenAI and Firecrawl-powered pipelines via simple API layers.

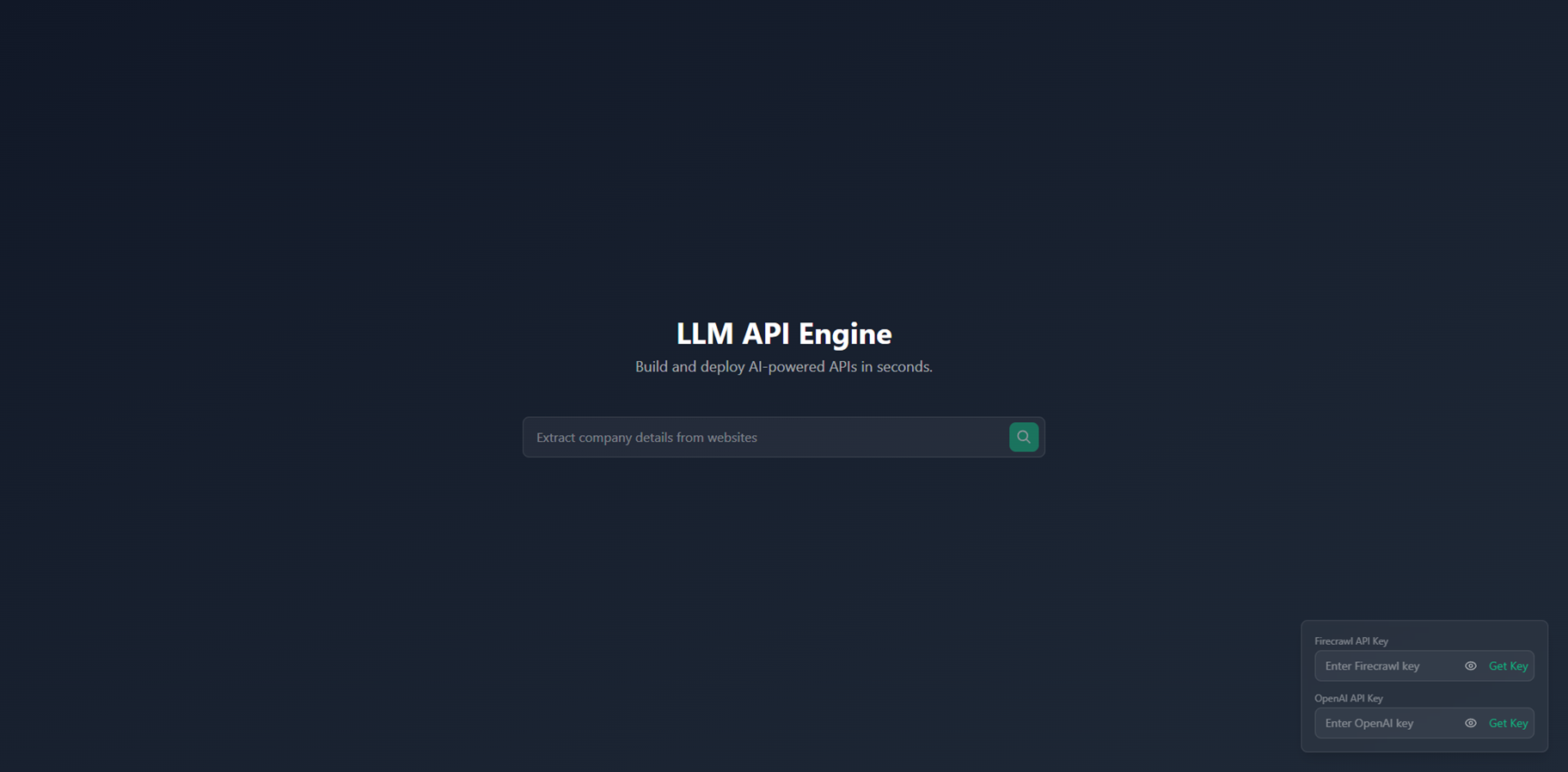

How to Use Text to API?

- Describe Your API: Write in plain language what data or functionality you need.

- Generate Schema: Let the engine create a JSON schema and API definition automatically.

- Connect Providers: Plug in your OpenAI and Firecrawl keys for LLM and web data access.

- Deploy Endpoint: Ship the generated API and start calling it from your app.
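The four steps above can be sketched roughly as follows. This is a hypothetical illustration, not Text to API's actual interface: the `ApiSpec` class, the `missing_keys` and `build_spec` helpers, the `/products` path, and the example schema are all assumptions made for this sketch. The environment variable names `OPENAI_API_KEY` and `FIRECRAWL_API_KEY` are common conventions and may differ in your setup.

```python
import os
from dataclasses import dataclass, field


@dataclass
class ApiSpec:
    """Hypothetical container for one generated endpoint (not the tool's real format)."""
    description: str
    path: str
    schema: dict
    providers: dict = field(default_factory=dict)


def missing_keys(env, required=("OPENAI_API_KEY", "FIRECRAWL_API_KEY")):
    """Return the names of provider keys that are absent or empty in env."""
    return [name for name in required if not env.get(name)]


def build_spec(description, path, schema, env):
    """Bundle a description, a schema, and provider keys; fail early if keys are missing."""
    missing = missing_keys(env)
    if missing:
        raise ValueError(f"missing provider keys: {', '.join(missing)}")
    providers = {
        "openai": env["OPENAI_API_KEY"],
        "firecrawl": env["FIRECRAWL_API_KEY"],
    }
    return ApiSpec(description, path, schema, providers)


# Step 1: the plain-language description.
description = "Return the title and price of a product page"

# Step 2: a hand-written stand-in for the schema the engine would generate.
schema = {
    "type": "object",
    "properties": {"title": {"type": "string"}, "price": {"type": "number"}},
    "required": ["title", "price"],
}

if __name__ == "__main__":
    # Steps 3 and 4: read keys from the environment, then assemble the spec.
    problems = missing_keys(os.environ)
    if problems:
        print("Set these before deploying:", ", ".join(problems))
    else:
        spec = build_spec(description, "/products", schema, os.environ)
        print("Ready to deploy", spec.path)
```

Checking for provider keys before assembling the spec means a missing credential is reported up front rather than at the first request.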

- Natural Language to API: Converts plain English descriptions into working endpoints.

- Automated JSON Schemas: Uses LLMs to infer fields, types, and structures for you.

- Firecrawl Integration: Scrapes and structures web data directly into your APIs.

- Instant Deployment: Designed for rapid API creation and hosting with minimal setup.

- Developer-Friendly Stack: Built around modern tooling so you can extend or self-host easily.
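To make the idea of an inferred schema concrete, here is a minimal sketch that derives a JSON-Schema-style description from a single sample record. It swaps in a simple deterministic rule set in place of the LLM-based inference the tool is described as using, so treat it as an illustration of the output shape only. The `infer_schema` function and the sample record are hypothetical.

```python
def infer_schema(value):
    """Infer a minimal JSON-Schema-style description from a sample value."""
    # bool must be checked before int because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        return {"type": "string"}
    if value is None:
        return {"type": "null"}
    if isinstance(value, list):
        # Use the first element as a representative; empty lists get no item schema.
        if not value:
            return {"type": "array"}
        return {"type": "array", "items": infer_schema(value[0])}
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {key: infer_schema(item) for key, item in value.items()},
            "required": sorted(value),
        }
    raise TypeError(f"unsupported type: {type(value).__name__}")


# Hypothetical sample record, such as one row of scraped product data.
sample = {"title": "Desk lamp", "price": 19.99, "in_stock": True, "tags": ["home"]}
inferred = infer_schema(sample)
```

A single sample cannot reveal optional fields or mixed-type arrays, which is one reason a real system would look at more than one record or rely on a model's judgment.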

- Removes a lot of backend grunt work for quick experiments.

- Great fit for data-heavy, web-scraping-powered applications.

- Tight OpenAI and Firecrawl integrations out of the box.

- Natural language flow lowers the barrier for non-expert backend coders.

- Best suited to web-data-style APIs, not every backend use case.

- Still depends on external LLM and scraping providers for core power.

- Complex production needs may require extra custom infrastructure.

- Documentation and ecosystem are smaller than long-standing API platforms.

Custom

Pricing information is not directly provided.

Similar AI Tools

Radal AI

Radal AI is a no-code platform designed to simplify the training and deployment of small language models (SLMs) without requiring engineering or MLOps expertise. With an intuitive visual interface, you can drag your data, interact with an AI copilot, and train models with a single click. Trained models can be exported in quantized form for edge or local deployment, and seamlessly pushed to Hugging Face for easy sharing and versioning. Radal enables rapid iteration on custom models—making AI accessible to startups, researchers, and teams building domain-specific intelligence.

Sim Studio

Sim.AI is a cloud-native platform designed to streamline the development and deployment of AI agents. It offers a user-friendly, open-source environment that allows developers to create, connect, and automate workflows effortlessly. With seamless integrations and no-code setup, Sim.AI empowers teams to enhance productivity and innovation.

Linked API

LinkedAPI.io is a powerful platform providing access to LinkedIn's API, enabling businesses and developers to automate various LinkedIn tasks, extract valuable data, and integrate LinkedIn functionality into their applications. It simplifies the process of interacting with LinkedIn's data, removing the complexities of direct API integration.

APIDNA

APIDNA is an AI-powered platform that transforms API integrations by using autonomous AI agents to automate complex tasks such as endpoint integration, client mapping, data handling, and response management. The platform allows developers to connect software systems seamlessly without writing manual code. It streamlines integration processes by analyzing APIs, mapping client requests, transforming data, and generating ready-to-use code automatically. With real-time monitoring, end-to-end testing, and robust security, APIDNA ensures integrations are efficient, reliable, and scalable. It caters to software developers, system integrators, IT managers, and automation engineers across multiple industries, including fintech, healthcare, and e-commerce. By reducing the manual effort involved in connecting APIs, APIDNA frees teams to focus on building innovative features and improving business operations.

Vertesia HQ

Vertesia is an enterprise generative AI platform built to help organizations design, deploy, and operate AI applications and agents at scale using a low-code approach. Its unified system offers multi-model support, trust/security controls, and components like Agentic RAG, autonomous agent builders, and document processing tools, all packaged in a way that lets teams move from prototype to production rapidly.

Soket AI

Soket AI is an Indian deep-tech startup building sovereign, multilingual foundational AI models and real-time voice/speech APIs designed for Indic languages and global scale. By focusing on language diversity, cultural context and ethical AI, Soket AI aims to develop models that recognise and respond across many languages, while delivering enterprise-grade capabilities for sectors such as defence, healthcare, education and governance.

Developer Toolkit

DeveloperToolkit.ai is an advanced AI-assisted development platform designed to help developers build production-grade, scalable, and maintainable software. It leverages powerful models like Claude Code and Cursor to generate production-ready code that’s secure, tested, and optimized for real-world deployment. Unlike tools that stop at quick prototypes, DeveloperToolkit.ai focuses on long-term code quality, maintainability, and best practices. Whether writing API endpoints, components, or full-fledged systems, it accelerates the entire development process while ensuring cleaner architectures and stable results fit for teams that ship with confidence.

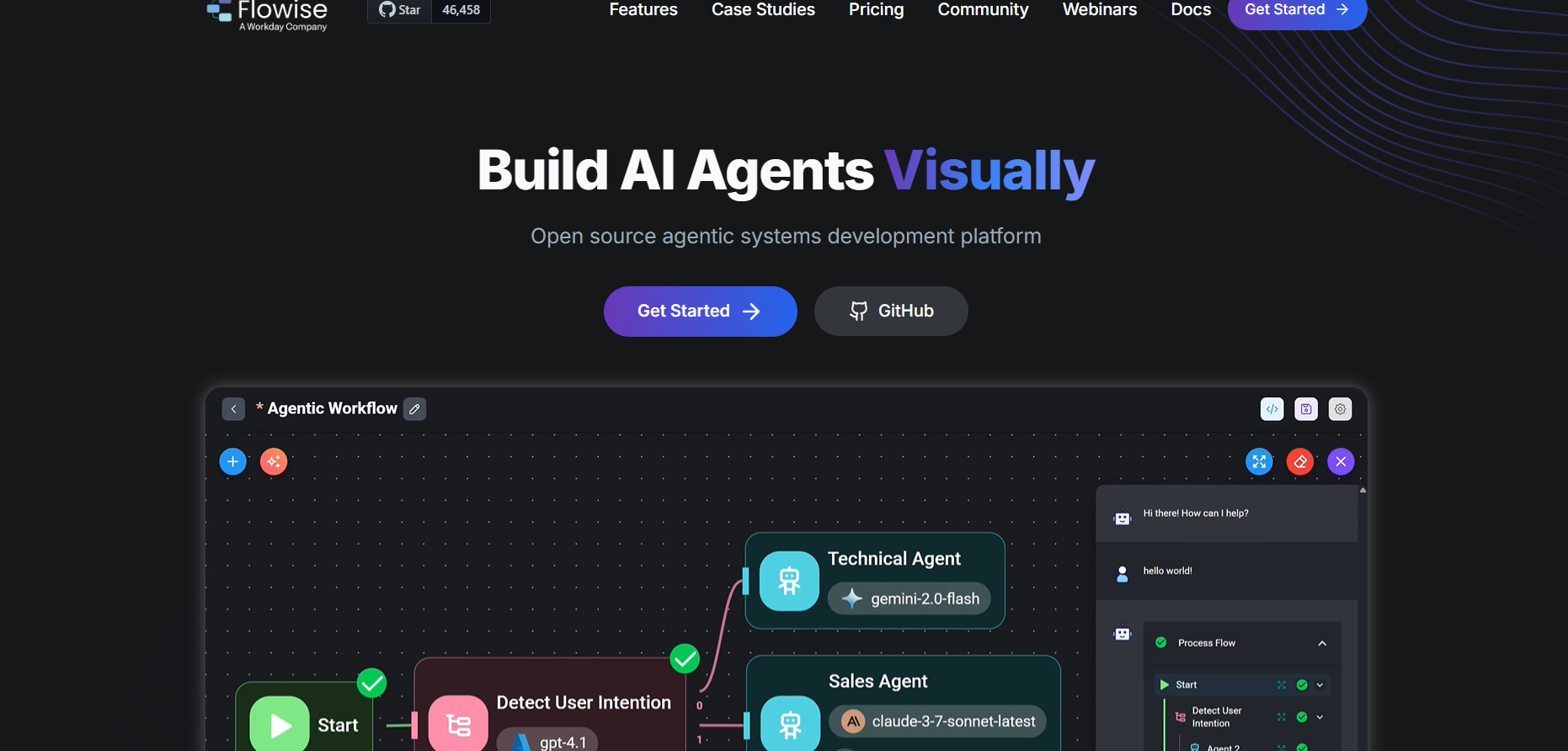

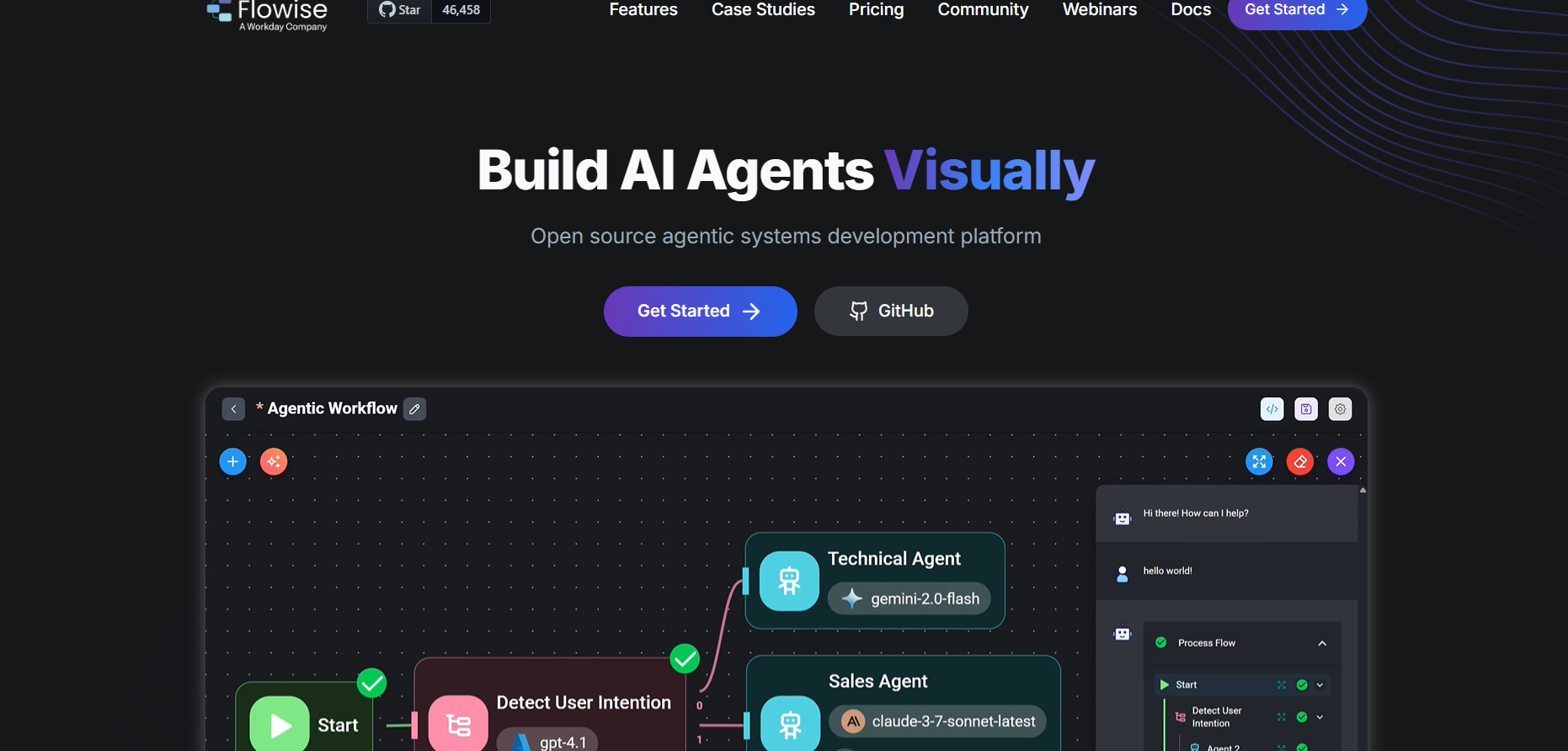

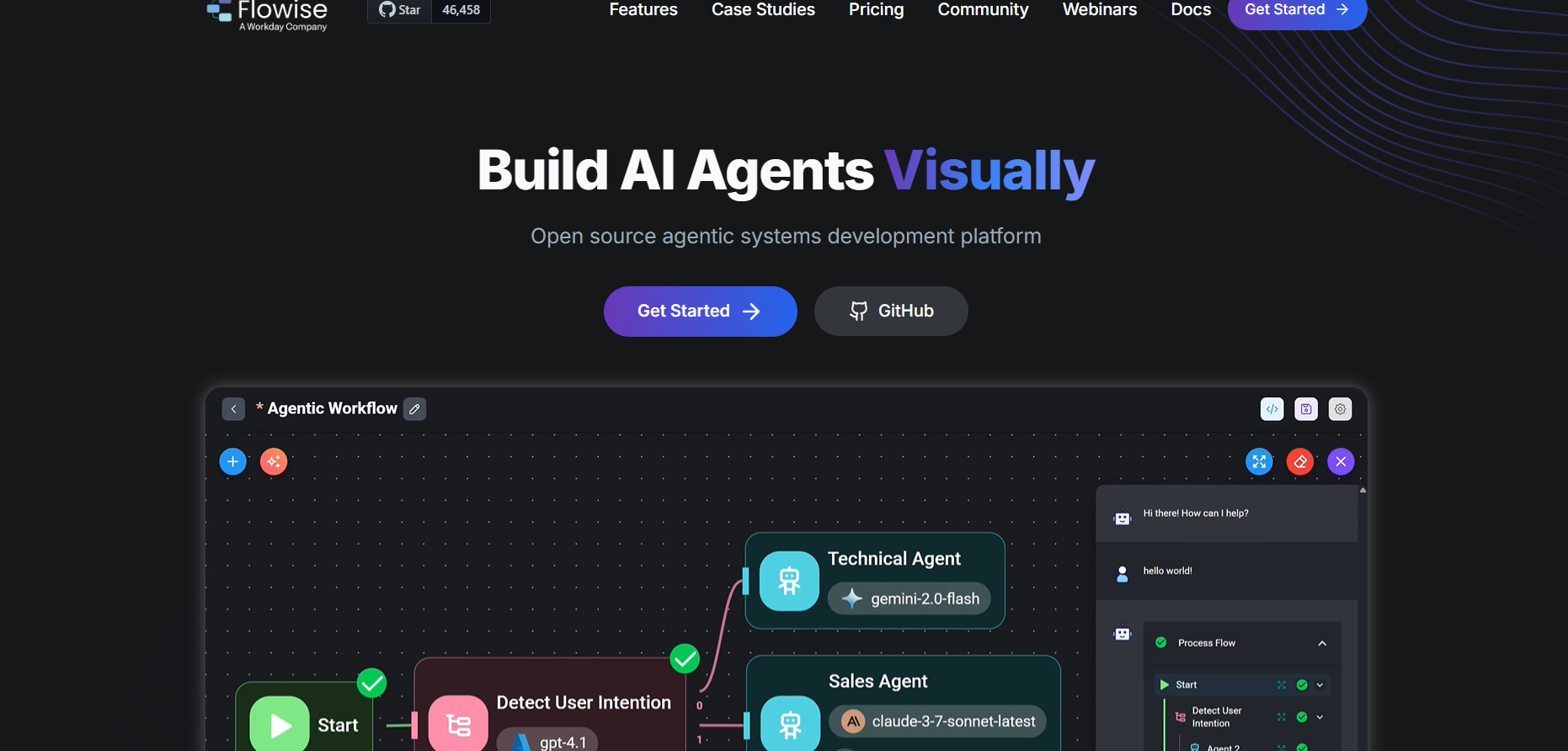

Flowise AI

Flowise AI is an open-source, visual tool that allows users to build, deploy, and manage AI workflows and chatbots powered by large language models without needing to code. It provides a drag-and-drop interface where users can visually connect LangChain components, APIs, data sources, and models to create complex AI systems easily. With Flowise AI, developers, analysts, and businesses can build chatbots, RAG pipelines, or automation systems through an intuitive UI rather than scripting everything manually. Its no-code design accelerates prototyping and deployment, enabling faster experimentation with LLM-powered workflows.

Haystack by Deepse..

Haystack is an open-source framework developed by deepset for building production-ready search and question-answering systems powered by language models. It enables developers to connect LLMs with structured and unstructured data sources, perform retrieval-augmented generation, and create semantic search pipelines. Haystack provides flexibility to integrate various retrievers, readers, and document stores like Elasticsearch, FAISS, and Pinecone. It’s widely used for enterprise document Q&A, chatbots, and knowledge management systems, helping teams deploy scalable, high-performance AI-powered search.

Awan LLM

Awan LLM is a cost-effective LLM inference API platform with unlimited tokens, designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription that lets users send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLMs without censorship or constraints. The platform runs on privately owned data centers and GPUs, which allows it to offer efficient and scalable AI services. Awan LLM supports numerous use cases, including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

LLM as-a-service

LLM.co LLM-as-a-Service (LLMaaS) is a secure, enterprise-grade AI platform that provides private and fully managed large language model deployments tailored to an organization’s specific industry, workflows, and data. Unlike public LLM APIs, each client receives a dedicated, single-tenant model hosted in private clouds or virtual private clouds (VPCs), ensuring complete data privacy and compliance. The platform offers model fine-tuning on proprietary internal documents, semantic search, multi-document Q&A, custom AI agents, contract review, and offline AI capabilities for regulated industries. It removes infrastructure burdens by handling deployment, scaling, and monitoring, while enabling businesses to customize models for domain-specific language, regulatory compliance, and unique operational needs.

FastRouter.ai

FastRouter.ai serves as a unified API gateway for large language models, simplifying access to over 100 LLMs from providers like OpenAI, Anthropic, Google, Meta, and more through a single OpenAI-compatible endpoint. Developers and enterprises route requests intelligently based on speed, cost, reliability, and performance, with automatic failover, real-time analytics, and no transaction fees. Key features include multimodal support for text, images, video, embeddings, web search integration, custom model lists, project governance with budgets and permissions, a model playground for comparisons, and seamless IDE integrations like Cursor and Cline. It handles high-scale workloads with superior latency and uptime.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai