- Developers & Engineers: Integrate multiple LLMs easily with one API for coding, chat, and multimodal apps.

- AI Product Builders: Optimize routing for cost, speed, and reliability in production apps.

- CTOs & Tech Leads: Centrally manage teams, budgets, and governance across LLM providers.

- Enterprises: Scale to millions of QPS with failover, analytics, and compliance tools.

- Startups & Teams: Test models side-by-side and track usage without vendor lock-in.

How to Use FastRouter.ai?

- Sign Up & Get API Key: Register at fastrouter.ai for free credits and generate your API key.

- Configure Endpoint: Use https://go.fastrouter.ai/api/v1 as the base URL in OpenAI-compatible clients.

- Select Models & Route: Choose from the model directory or create virtual lists for auto-routing.

- Monitor & Analyze: Track usage, latency, and costs via the dashboard with tags and logs.
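The Configure Endpoint step can be sketched in Python using only the standard library. This is a minimal sketch, not FastRouter.ai's documented client: the `/chat/completions` path and the bearer-token header are assumed from the OpenAI convention that the gateway says it is compatible with, and the model ID and the `FASTROUTER_API_KEY` variable name are hypothetical placeholders.

```python
import json
import os
import urllib.request

# Base URL taken from the Configure Endpoint step.
BASE_URL = "https://go.fastrouter.ai/api/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",  # assumed path (OpenAI convention)
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed bearer-token auth
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


# Hypothetical environment variable and model ID, for illustration only.
request = build_chat_request(
    os.environ.get("FASTROUTER_API_KEY", "sk-placeholder"),
    "provider/model-name",
    "Hello",
)
print(request.full_url)
```

Calling `send(request)` with a real key and a model ID from the model directory would perform the actual call. Existing OpenAI-compatible SDKs should work the same way once their base URL is pointed at the address above.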

- Intelligent Auto-Routing: Automatically picks the optimal model, balancing speed, cost, and reliability.

- No Transaction Fees: Pay only usage costs, with no markups, for predictable budgeting.

- Multimodal Full Support: Handles chat, images, video, embeddings, and web search in one gateway.

- Enterprise Governance: Project budgets, rate limits, permissions, real-time analytics.

- Model Playground: Side-by-side testing and custom virtual model sets for quick eval.
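To make the idea of balancing speed, cost, and reliability concrete, here is a small illustrative sketch of weighted model selection. It is not FastRouter.ai's routing algorithm, which this page does not describe; the `Candidate` fields, the scoring formula, the weights, and the model names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str            # hypothetical model identifier
    latency_ms: float    # observed median latency
    cost_per_1k: float   # USD per 1K tokens
    success_rate: float  # fraction of recent requests that succeeded (0..1)


def pick_model(candidates, w_speed=1.0, w_cost=1.0, w_reliability=1.0):
    """Return the candidate with the highest weighted score."""
    if not candidates:
        raise ValueError("no candidates")
    max_latency = max(c.latency_ms for c in candidates)
    max_cost = max(c.cost_per_1k for c in candidates)

    def score(c):
        # Normalize latency and cost to 0..1, where higher means better.
        speed = 1 - c.latency_ms / max_latency if max_latency else 0.0
        cheap = 1 - c.cost_per_1k / max_cost if max_cost else 0.0
        return w_speed * speed + w_cost * cheap + w_reliability * c.success_rate

    return max(candidates, key=score)


# Hypothetical figures, not measurements of any real model.
pool = [
    Candidate("model-a", latency_ms=200, cost_per_1k=1.0, success_rate=0.99),
    Candidate("model-b", latency_ms=800, cost_per_1k=0.2, success_rate=0.95),
    Candidate("model-c", latency_ms=400, cost_per_1k=0.5, success_rate=0.50),
]
print(pick_model(pool, w_speed=3.0).name)  # favors the fastest option
print(pick_model(pool, w_cost=3.0).name)   # favors the cheapest option
```

Raising one weight shifts the choice toward that dimension, which is the kind of trade-off an auto-router has to make on every request.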

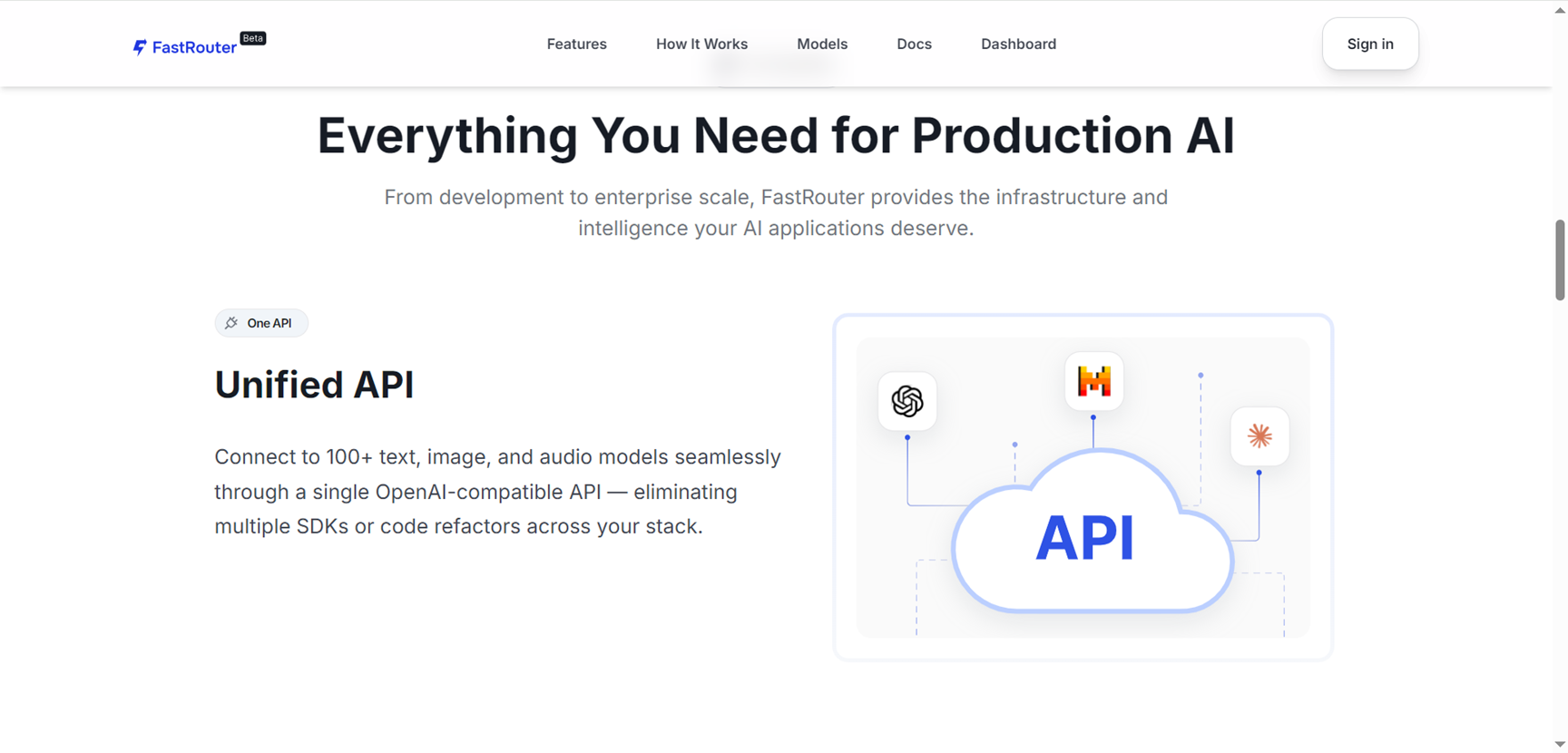

- One API accesses 100+ models from top providers seamlessly.

- Auto-routing optimizes for performance without manual tweaks.

- Built-in failover and analytics boost reliability and insights.

- Free credits on signup make testing easy and risk-free.

- Beta phase may limit some advanced features initially.

- Relies on underlying provider limits for certain models.

- Learning curve for custom routing strategies.

- Web search add-on incurs extra per-answer costs.

Custom

Pricing information is not directly provided.

Similar AI Tools

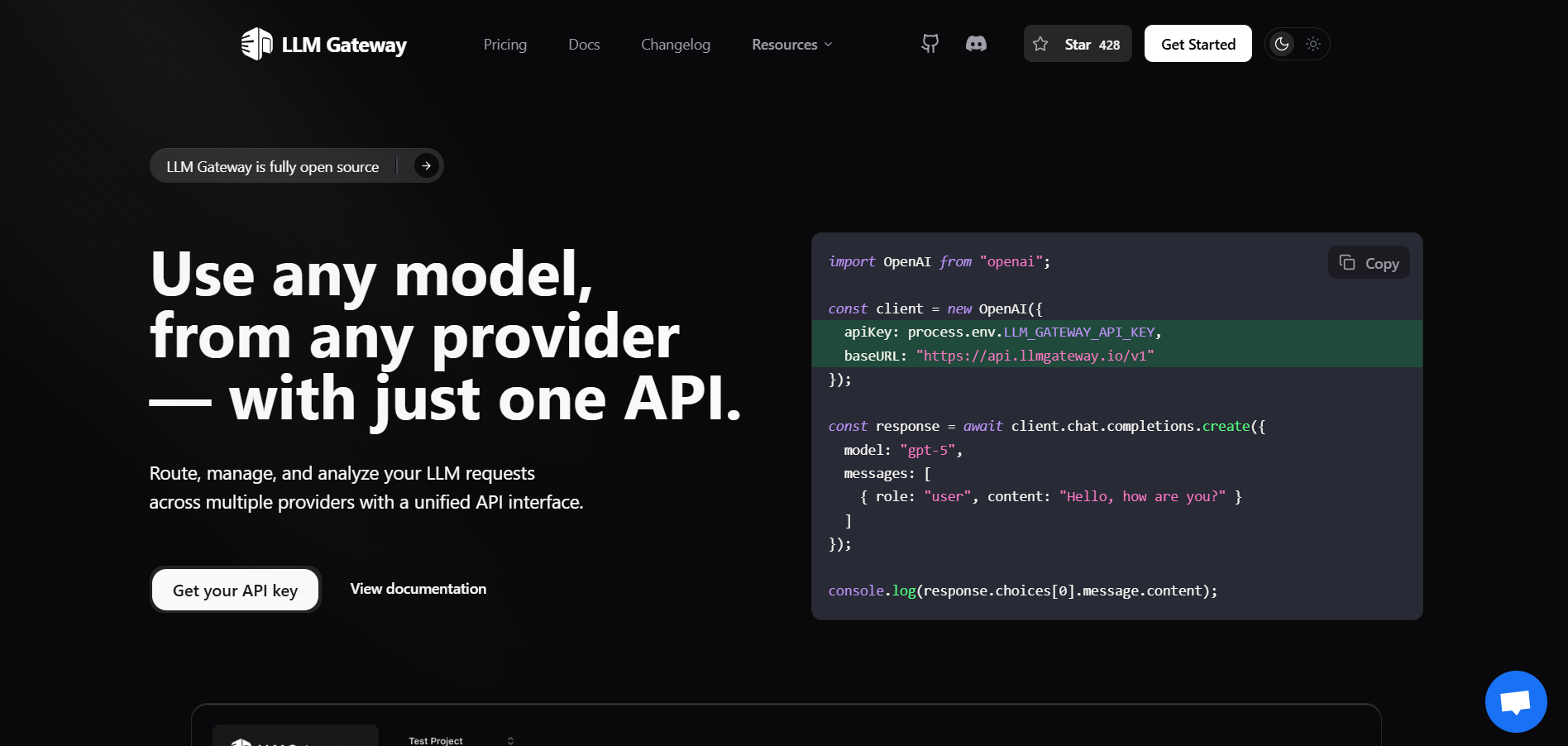

LLM Gateway

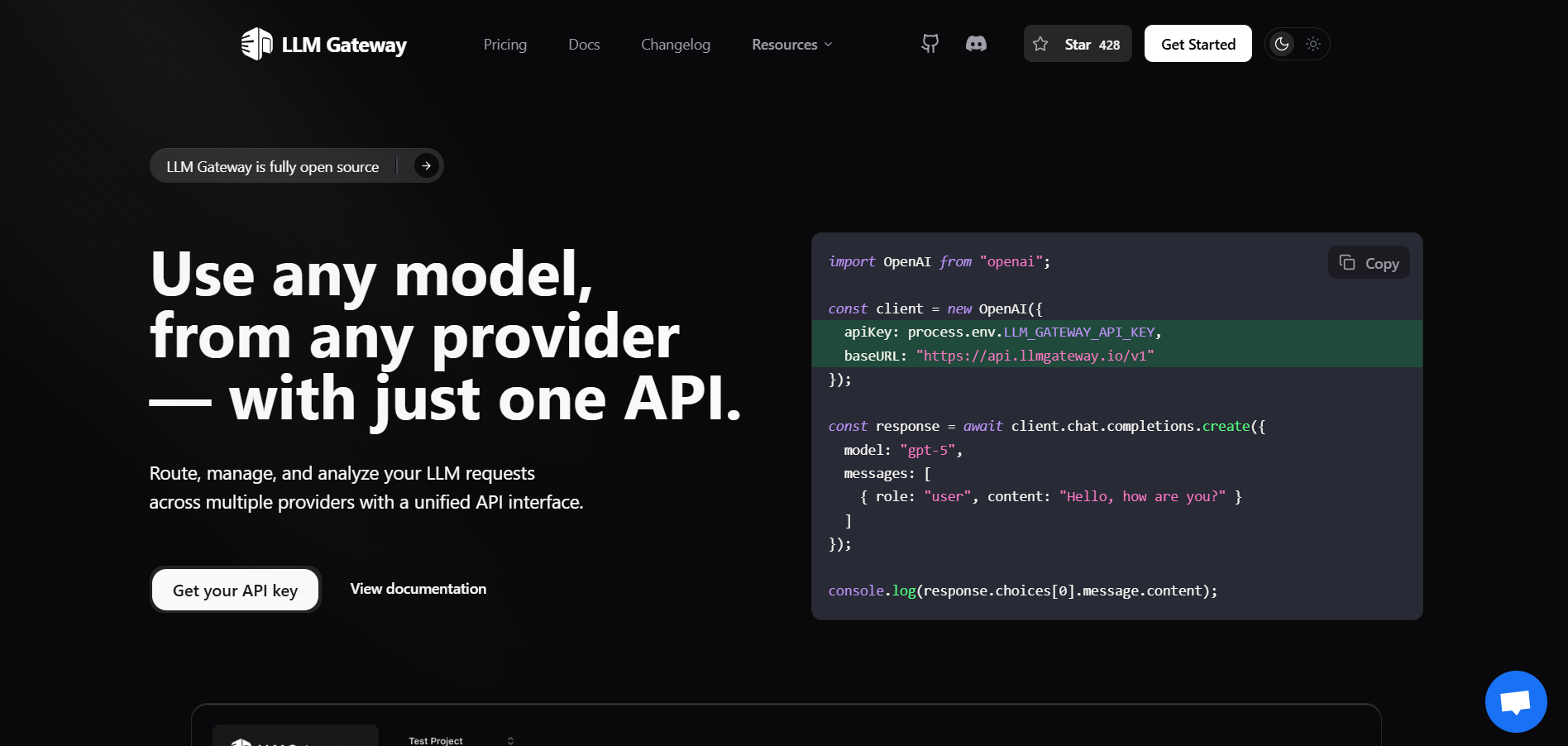

LLM Gateway is a unified API gateway designed to simplify working with large language models (LLMs) from multiple providers by offering a single, OpenAI-compatible endpoint. Whether using OpenAI, Anthropic, Google Vertex AI, or others, developers can route, monitor, and manage requests without altering existing code. Available as an open-source, self-hosted option (MIT-licensed) or as a hosted service, it combines analytics, cost optimization, and performance management in one place.

SiliconFlow

SiliconFlow is an AI infrastructure platform built for developers and enterprises who want to deploy, run, and fine-tune large language models (LLMs) and multimodal models efficiently. It offers a unified stack for inference, model hosting, and acceleration so that you don’t have to manage all the infrastructure yourself. The platform supports many open-source and commercial models, with high throughput, low latency, autoscaling, and flexible deployment (serverless, reserved GPUs, private cloud). It also emphasizes cost-effectiveness, data security, and tooling such as OpenAI-compatible APIs, fine-tuning, and monitoring.

Kiro AI

Kiro.dev is a powerful AI-powered code generation tool designed to accelerate the software development process. It leverages advanced machine learning models to help developers write code faster, more efficiently, and with fewer errors. Kiro.dev offers various features, including code completion, code generation from natural language prompts, and code explanation, making it a valuable asset for developers of all skill levels.

Claude Code Router

Claude Code Router is an AI-powered routing tool designed to streamline and optimize workflows by directing tasks and queries to the most appropriate AI model. It draws on the strengths of various AI models and provides a single point of access for users seeking diverse AI capabilities, which avoids the limitations of relying on a single model.

APIDNA

APIDNA is an AI-powered platform that transforms API integrations by using autonomous AI agents to automate complex tasks such as endpoint integration, client mapping, data handling, and response management. The platform allows developers to connect software systems seamlessly without writing manual code. It streamlines integration processes by analyzing APIs, mapping client requests, transforming data, and generating ready-to-use code automatically. With real-time monitoring, end-to-end testing, and robust security, APIDNA ensures integrations are efficient, reliable, and scalable. It caters to software developers, system integrators, IT managers, and automation engineers across multiple industries, including fintech, healthcare, and e-commerce. By reducing the manual effort involved in connecting APIs, APIDNA frees teams to focus on building innovative features and improving business operations.

inception

Inception Labs is an AI research company that develops Mercury, the world's first commercial diffusion-based large language models. Unlike traditional autoregressive LLMs that generate tokens sequentially, Mercury models use diffusion architecture to generate text through parallel refinement passes. This breakthrough approach enables ultra-fast inference speeds of over 1,000 tokens per second while maintaining frontier-level quality. The platform offers Mercury for general-purpose tasks and Mercury Coder for development workflows, both featuring streaming capabilities, tool use, structured output, and 128K context windows. These models serve as drop-in replacements for traditional LLMs through OpenAI-compatible APIs and are available across major cloud providers including AWS Bedrock, Azure Foundry, and various AI platforms for enterprise deployment.

Abacus.AI

ChatLLM Teams by Abacus.AI is an all‑in‑one AI assistant that unifies access to top LLMs, image and video generators, and powerful agentic tools in a single workspace. It includes DeepAgent for complex, multi‑step tasks, code execution with an editor, document/chat with files, web search, TTS, and slide/doc generation. Users can build custom chatbots, set up AI workflows, generate images and videos from multiple models, and organize work with projects across desktop and mobile apps. The platform is OpenAI‑style in usability but adds operator features for running tasks on a computer, plus DeepAgent Desktop and AppLLM for building and hosting small apps.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Nexos AI

Nexos.ai is a unified AI orchestration platform designed to centralize, secure, and streamline the management of multiple large language models (LLMs) and AI services for businesses and enterprises. The platform provides a single workspace where teams and organizations can connect, manage, and run more than 200 AI models (including those from OpenAI, Google, Anthropic, and Meta) through a single interface and API. Nexos.ai includes enterprise-grade features for security, compliance, smart routing, and cost optimization. It offers model output comparison, collaborative project spaces, observability tools for monitoring, and guardrails for responsible AI usage. With an AI Gateway and Workspace, tech leaders can govern AI usage, minimize fragmentation, enable rapid experimentation, and scale AI adoption across teams efficiently.

Awan LLM

Awan LLM is a cost-effective, unlimited-token inference API platform for large language models, designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription that lets users send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLMs without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases, including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

LM Studio

LM Studio is a local large language model (LLM) platform that enables users to download and run AI language models such as LLaMA, MPT, and Gemma directly on their own computers. It supports Mac, Windows, and Linux. LM Studio focuses on privacy and control by allowing users to work with AI models locally without relying on cloud-based services, so data stays on the user’s device. It offers an easy-to-install interface with step-by-step setup guidance, giving developers, researchers, and AI enthusiasts access to advanced AI capabilities without requiring an internet connection once models are downloaded.

ModelRiver

ModelRiver is a unified AI integration platform that allows developers to access multiple AI providers through a single API. Instead of integrating each model separately, teams integrate once and gain access to a wide range of AI services. Built-in failover ensures applications remain online even if one provider experiences issues. ModelRiver is designed for reliability, scalability, and simplicity, making it ideal for production environments that depend on AI availability.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai