- Developers: Simplifying AI integrations.

- Startups: Building reliable AI-powered products.

- Enterprises: Ensuring uptime across providers.

- Platform Teams: Managing AI infrastructure.

How to Use It?

- Integrate API: Connect once to ModelRiver.

- Choose Providers: Access multiple AI services.

- Enable Failover: Ensure continuity.

- Scale Usage: Grow without re-integration.
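The failover step above can be pictured with a short, provider-agnostic sketch. This is not ModelRiver's API: the function `complete_with_failover`, the exception `AllProvidersFailed`, and the two stand-in providers are hypothetical names chosen for illustration. It only shows the general pattern of trying providers in order and falling back when one fails.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed (hypothetical name)."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, call) pair in order; return the first successful reply."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stand-in providers for the example; real ones would call a remote API.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def stable_backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, reply = complete_with_failover(
    "hello",
    [("primary", flaky_primary), ("backup", stable_backup)],
)
```

Here the primary provider fails, so the call is answered by the backup. A hosted gateway would presumably do this routing on the server side, so that the application keeps a single integration.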

Key Features

- One API Access: Multiple providers behind one integration.

- Built-In Failover: High reliability by design.

- Provider Flexibility: Switch models easily.

- Production-Ready: Built for uptime.

Pros

- Simplifies multi-provider AI usage.

- Improves system reliability.

- Reduces vendor lock-in.

Cons

- Requires technical integration.

- Abstracts some provider-specific features.

- Best for production-scale needs.

| Plan | Price | Additional requests | Team members | Support |
|---|---|---|---|---|
| Free | Free | No additional requests | 1 | Community support |
| Starter | $9.00 | $0.004 per request | Up to 5 | Email support |
| Core | $29.00 | $0.0025 per request | Unlimited | Priority email support |
| Growth | $99.00 | $0.0018 per request | Unlimited | Priority support |

All features are included in every plan.
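The per-request overage rates listed above can be turned into a rough bill estimate. The sketch below is illustrative only: the page states neither how many requests each plan includes nor the billing period, so `included` is a hypothetical parameter that the reader must supply, and `estimated_bill` is a made-up helper name.

```python
from decimal import Decimal

# Base price and per-additional-request rate, as listed on this page.
PLANS = {
    "Starter": (Decimal("9.00"), Decimal("0.004")),
    "Core": (Decimal("29.00"), Decimal("0.0025")),
    "Growth": (Decimal("99.00"), Decimal("0.0018")),
}


def estimated_bill(plan: str, requests: int, included: int) -> Decimal:
    """Base price plus overage for requests beyond the included quota.

    `included` is an assumption: the listing does not state plan quotas.
    """
    base, rate = PLANS[plan]
    extra = max(0, requests - included)
    return base + rate * extra


# Example: 12,000 requests against an assumed quota of 10,000.
starter = estimated_bill("Starter", 12_000, 10_000)
core = estimated_bill("Core", 12_000, 10_000)
```

The Free plan is left out because it allows no additional requests. `Decimal` is used so that small per-request rates do not pick up floating-point rounding error.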

Similar AI Tools

SiliconFlow

SiliconFlow is an AI infrastructure platform built for developers and enterprises who want to deploy, run, and fine-tune large language models (LLMs) and multimodal models efficiently. It offers a unified stack for inference, model hosting, and acceleration so that you don’t have to manage the infrastructure yourself. The platform supports many open-source and commercial models and offers high throughput, low latency, autoscaling, and flexible deployment (serverless, reserved GPUs, private cloud). It also emphasizes cost-effectiveness, data security, and tooling such as OpenAI-compatible APIs, fine-tuning, and monitoring.

Kiro AI

Kiro.dev is a powerful AI-powered code generation tool designed to accelerate the software development process. It leverages advanced machine learning models to help developers write code faster, more efficiently, and with fewer errors. Kiro.dev offers various features, including code completion, code generation from natural language prompts, and code explanation, making it a valuable asset for developers of all skill levels.

Unsloth AI

Unsloth.AI is an open-source platform designed to accelerate and simplify the fine-tuning of large language models (LLMs). By leveraging manual mathematical derivations, custom GPU kernels, and efficient optimization techniques, Unsloth achieves up to 30x faster training speeds compared to traditional methods, without compromising model accuracy. It supports a wide range of popular models, including Llama, Mistral, Gemma, and BERT, and works seamlessly on various GPUs, from consumer-grade Tesla T4 to high-end H100, as well as AMD and Intel GPUs. Unsloth empowers developers, researchers, and AI enthusiasts to fine-tune models efficiently, even with limited computational resources, democratizing access to advanced AI model customization. With a focus on performance, scalability, and flexibility, Unsloth.AI is suitable for both academic research and commercial applications, helping users deploy specialized AI solutions faster and more effectively.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

Soket AI

Soket AI is an Indian deep-tech startup building sovereign, multilingual foundational AI models and real-time voice/speech APIs designed for Indic languages and global scale. By focusing on language diversity, cultural context and ethical AI, Soket AI aims to develop models that recognise and respond across many languages, while delivering enterprise-grade capabilities for sectors such as defence, healthcare, education and governance.

Pruna AI

Pruna.ai is an AI optimization engine designed to make machine learning models faster, smaller, cheaper, and greener with minimal overhead. It leverages advanced compression algorithms like pruning, quantization, distillation, caching, and compilation to reduce model size and accelerate inference times. The platform supports various AI models including large language models, vision transformers, and speech recognition models, making it ideal for real-time applications such as autonomous systems and recommendation engines. Pruna.ai aims to lower computational costs, decrease energy consumption, and improve deployment scalability across cloud and on-premise environments while ensuring minimal loss of model quality.

Ask Any Model

AskAnyModel is a unified AI interface that allows users to interact with multiple leading AI models — such as GPT, Claude, Gemini, and Mistral — from a single platform. It eliminates the need for multiple subscriptions and interfaces by bringing top AI models into one streamlined environment. Users can compare responses, analyze outputs, and select the best AI model for specific tasks like content creation, coding, data analysis, or research. AskAnyModel empowers individuals and teams to harness AI diversity efficiently, offering advanced tools for prompt testing, model benchmarking, and workflow integration.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Awan LLM

Awan LLM is a cost-effective, unlimited-token inference API platform for large language models, designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription that lets users send and receive unlimited tokens, up to the model's context limit. It supports unrestricted use of its models without censorship or constraints. The platform runs on privately owned data centers and GPUs, which allows it to offer efficient and scalable AI services. Awan LLM supports numerous use cases, including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications, without worrying about token limits or costs.

Tinker

Tinker is a specialized training API designed for researchers to efficiently control every aspect of model training and fine-tuning while offloading the infrastructure management. It allows seamless experimentation with large language models by abstracting hardware complexities. Tinker supports key functionalities such as performing forward and backward passes, optimizing weights, generating token samples, and saving training progress. Built with LoRA technology, it enables fine-tuning through small add-ons rather than modifying original model weights. This makes Tinker an ideal platform for researchers focusing on datasets, algorithms, and reinforcement learning without infrastructure hassles.

Tinker

Tinker is a specialized training API designed for researchers to efficiently control every aspect of model training and fine-tuning while offloading the infrastructure management. It allows seamless experimentation with large language models by abstracting hardware complexities. Tinker supports key functionalities such as performing forward and backward passes, optimizing weights, generating token samples, and saving training progress. Built with LoRA technology, it enables fine-tuning through small add-ons rather than modifying original model weights. This makes Tinker an ideal platform for researchers focusing on datasets, algorithms, and reinforcement learning without infrastructure hassles.

Tinker

Tinker is a specialized training API designed for researchers to efficiently control every aspect of model training and fine-tuning while offloading the infrastructure management. It allows seamless experimentation with large language models by abstracting hardware complexities. Tinker supports key functionalities such as performing forward and backward passes, optimizing weights, generating token samples, and saving training progress. Built with LoRA technology, it enables fine-tuning through small add-ons rather than modifying original model weights. This makes Tinker an ideal platform for researchers focusing on datasets, algorithms, and reinforcement learning without infrastructure hassles.

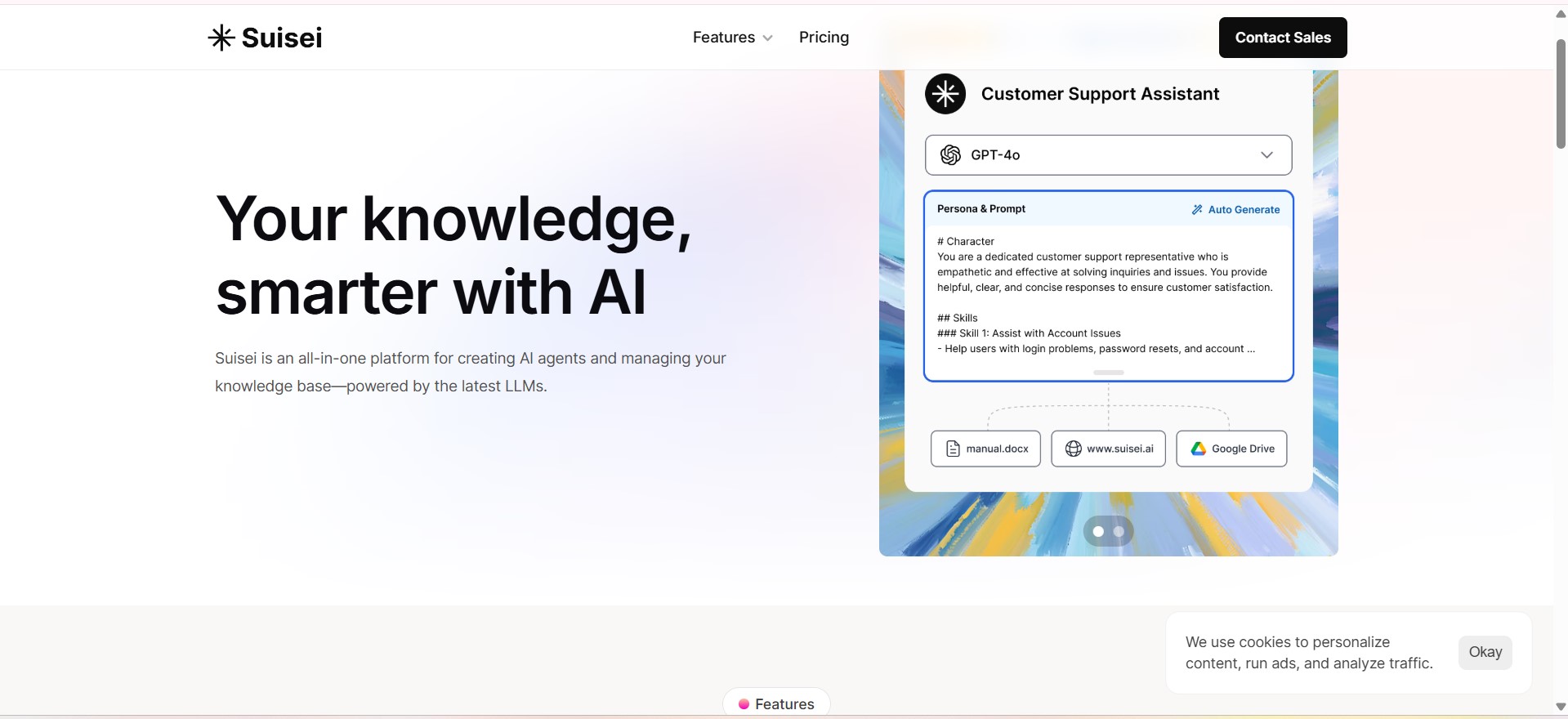

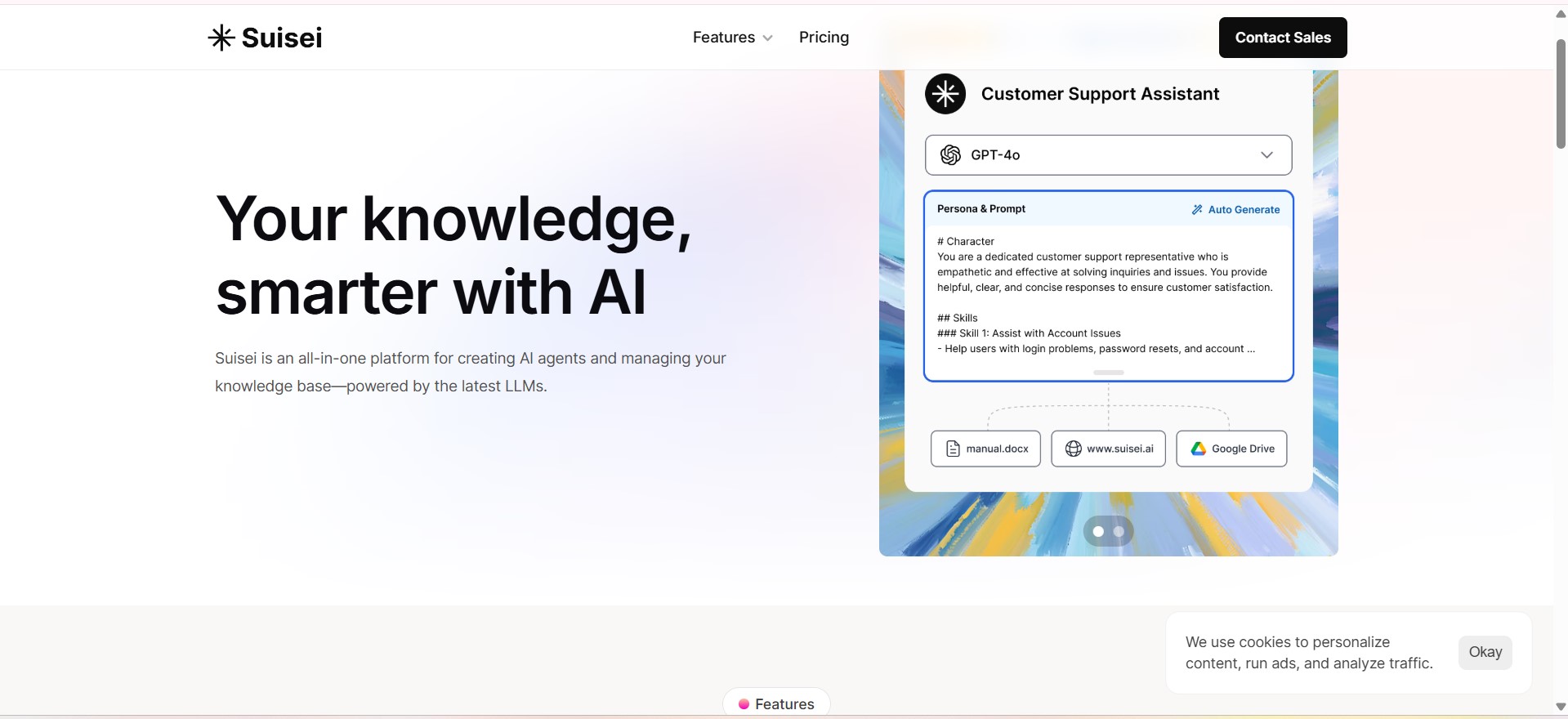

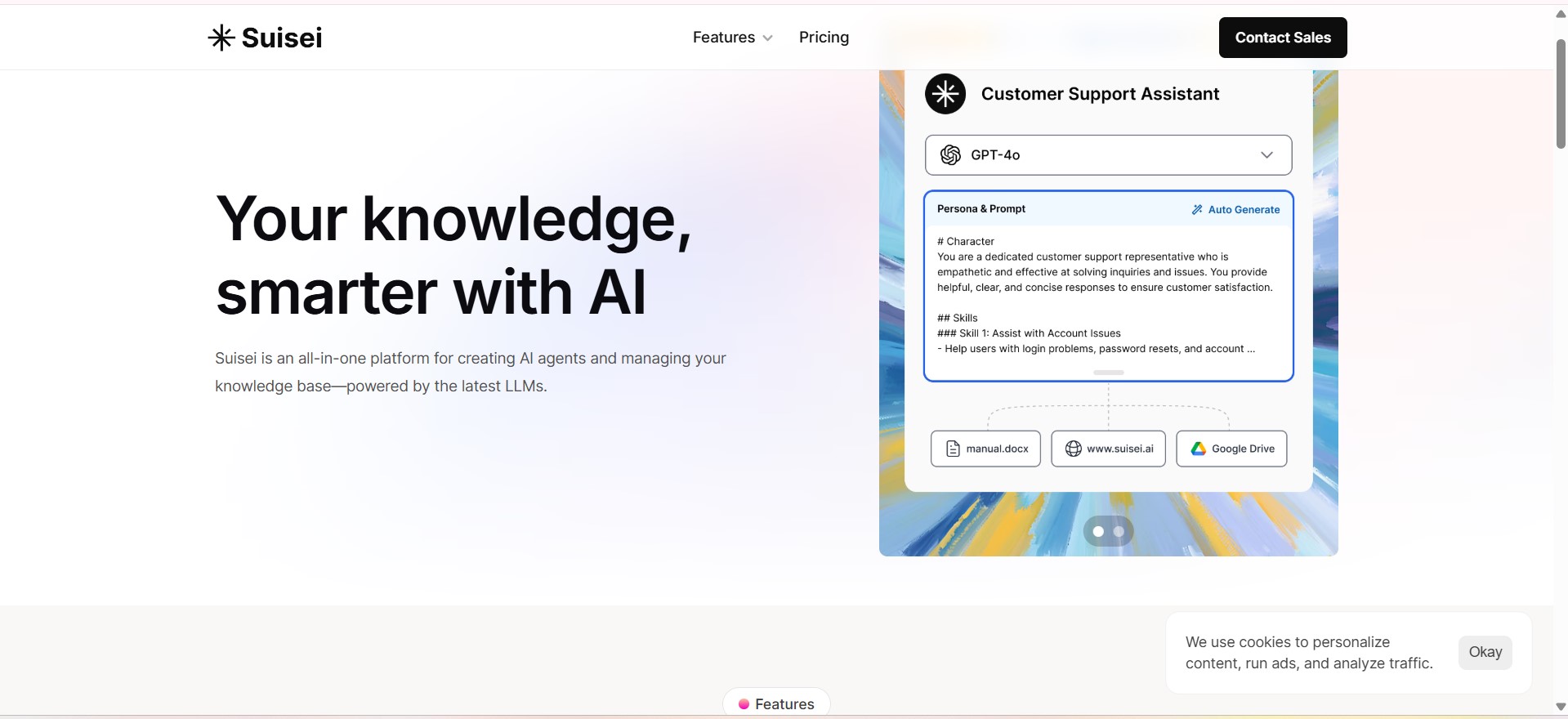

Suisei AI

Suisei AI is a modern AI platform designed to help individuals and businesses build, manage, and deploy intelligent AI agents tailored to their needs. At its core, Suisei positions itself as an all-in-one workspace for AI agents and knowledge management, powered by the latest large language models (LLMs) such as GPT, Claude, and Google’s Gemini. The platform’s primary focus is on AI agents, automated assistants that can perform a wide variety of tasks. Users can create custom AI agents without writing code, using intuitive templates or starting from scratch.

Suisei AI

Suisei AI is a modern AI platform designed to help individuals and businesses build, manage, and deploy intelligent AI agents tailored to their needs. At its core, Suisei positions itself as an all-in-one workspace for AI agents and knowledge management, powered by the latest large language models (LLMs) such as GPT, Claude, and Google’s Gemini.The platform’s primary focus is on AI agents—automated assistants that can perform a wide variety of tasks. Users can create custom AI agents without writing code, using intuitive templates or starting from scratch.

Suisei AI

Suisei AI is a modern AI platform designed to help individuals and businesses build, manage, and deploy intelligent AI agents tailored to their needs. At its core, Suisei positions itself as an all-in-one workspace for AI agents and knowledge management, powered by the latest large language models (LLMs) such as GPT, Claude, and Google’s Gemini.The platform’s primary focus is on AI agents—automated assistants that can perform a wide variety of tasks. Users can create custom AI agents without writing code, using intuitive templates or starting from scratch.

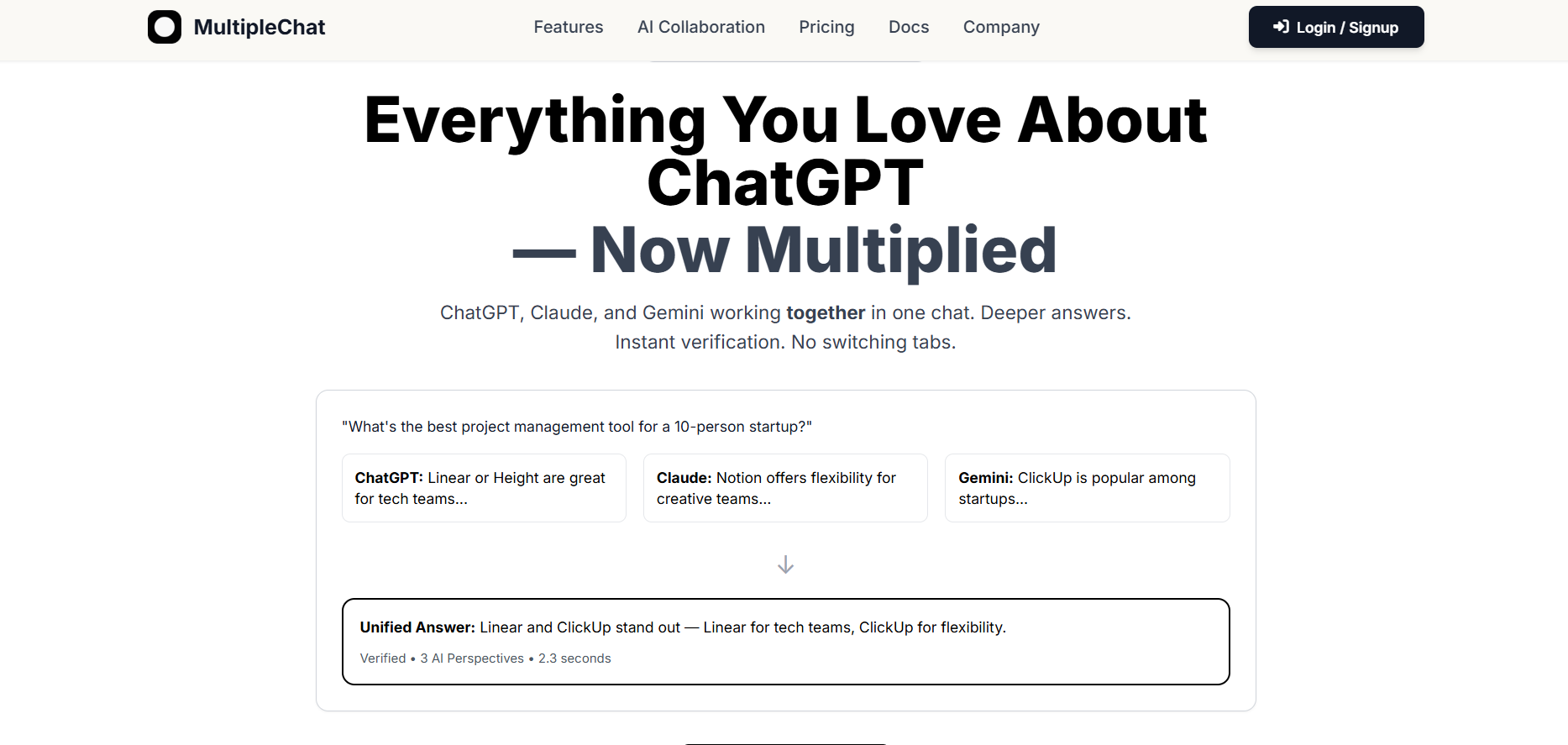

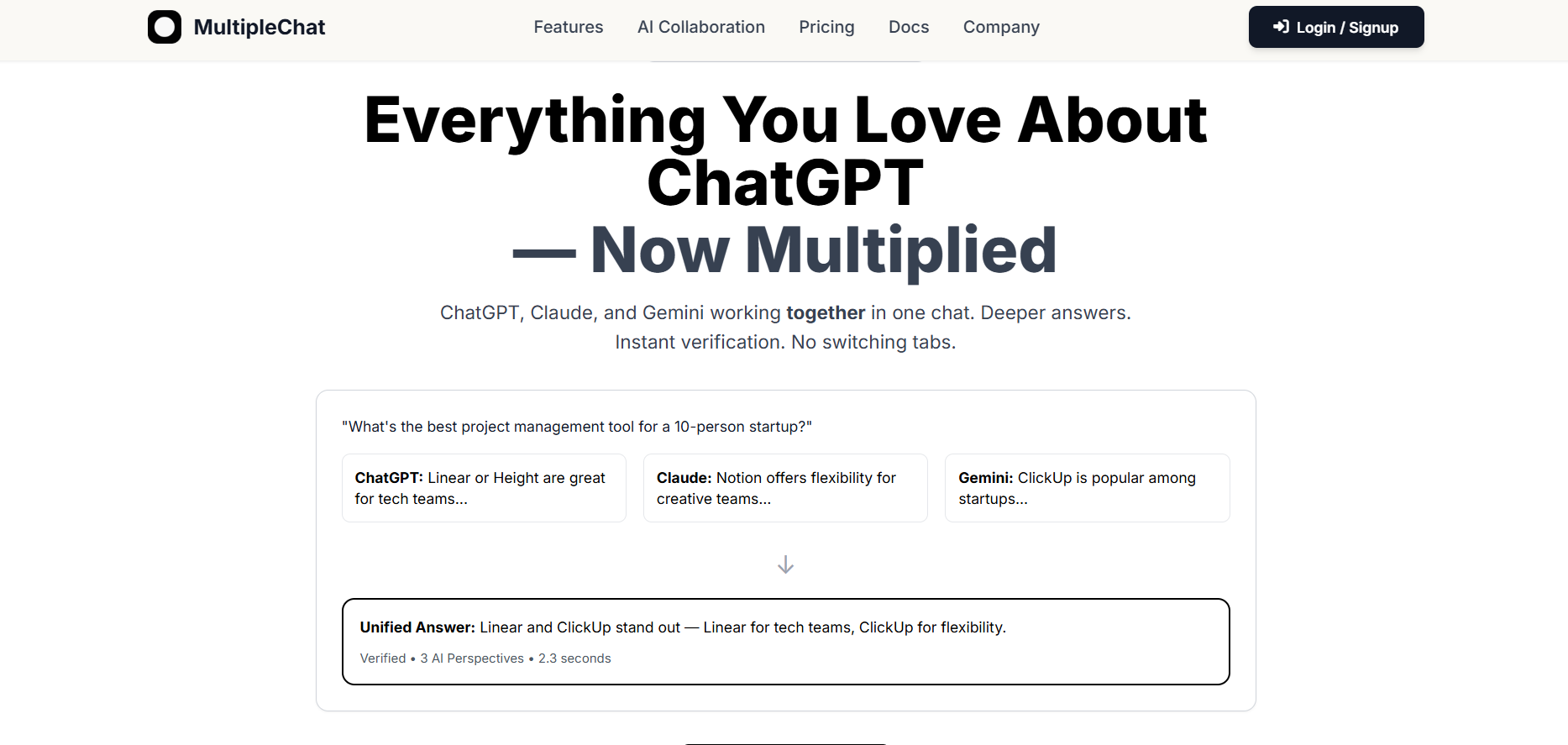

Multiple Chat

Multiple is a multi-model AI chat platform that allows users to interact with several leading AI models within a single interface. By combining responses from different AI systems, it enables deeper exploration, comparison, and verification of answers without switching tabs. The platform is designed for users who rely heavily on conversational AI and want improved accuracy, broader perspectives, and more confident outputs.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai