- AI Developers & Engineers: Optimize and compress models for faster, more efficient deployment.

- Data Scientists: Reduce inference time and resource usage without sacrificing accuracy.

- Enterprises & Tech Teams: Scale AI workloads while controlling cloud compute costs.

- Machine Learning Researchers: Experiment with cutting-edge compression algorithms easily.

- Edge Computing Specialists: Deploy slimmed-down AI models for resource-constrained devices.

How to Use Pruna.ai?

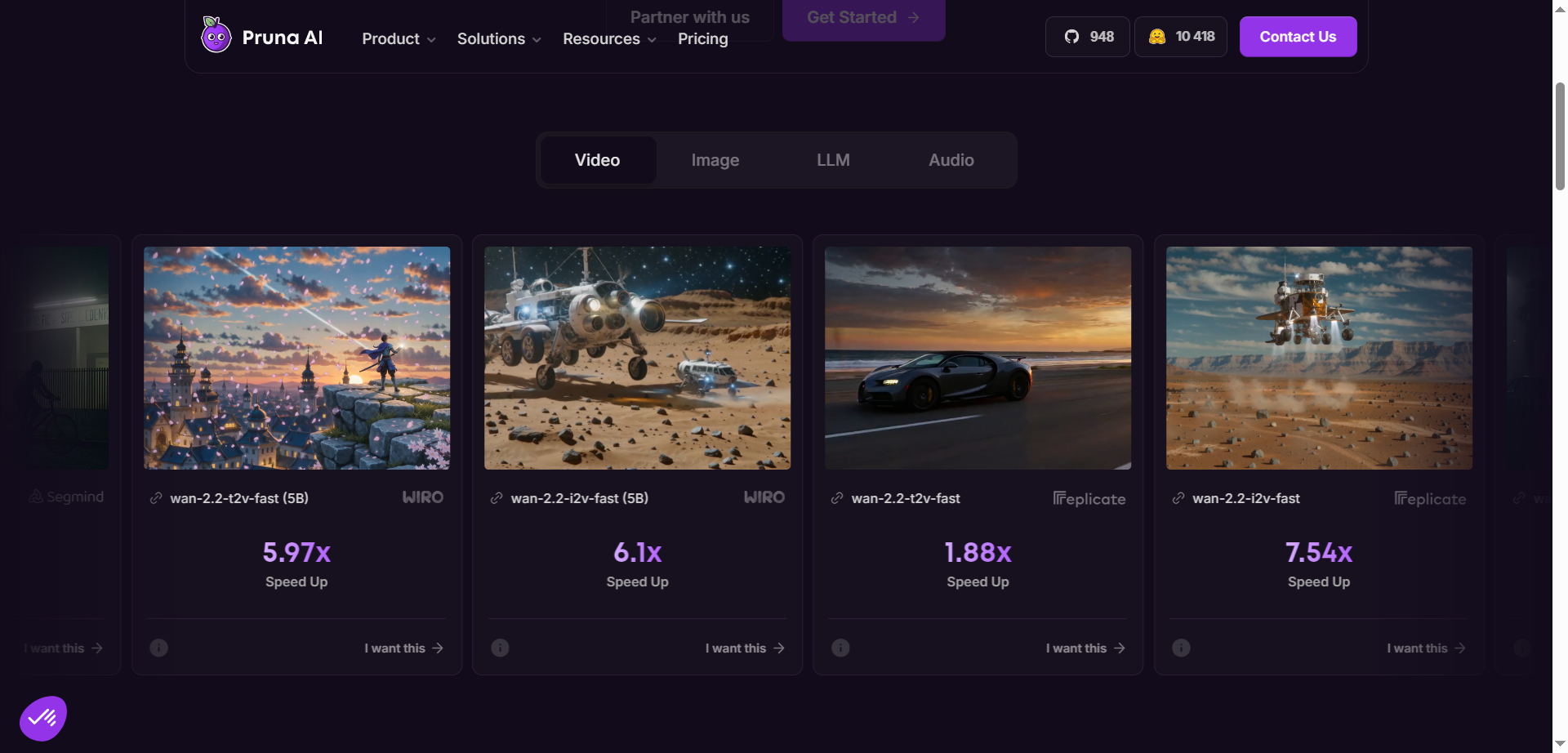

- Install & Set Up: Install via pip or clone from GitHub to access the optimization framework.

- Prepare Your Model: Load your existing AI models for optimization in supported formats.

- Optimize with One Line: Apply compression algorithms like pruning or quantization with minimal code.

- Deploy & Monitor: Serve optimized models using standard inference frameworks and track usage.
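The optimization step above names pruning and quantization. As a rough illustration of what these two techniques do to a model's weights, here is a minimal standard-library sketch. It is not Pruna's API or implementation: the function names (`magnitude_prune`, `quantize_int8`, `dequantize`) and the sample weights are hypothetical, and real frameworks operate on tensors rather than Python lists.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude weights; `sparsity` is the fraction to drop."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_drop = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_drop])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
q, scale = quantize_int8(pruned)                 # q == [127, 0, 48, 0, -95, 0]
restored = dequantize(q, scale)
```

Pruning removes the weights that contribute least, and quantization stores the rest in fewer bits. The restored values differ from the pruned ones by at most half a quantization step, which is the kind of small error a compression tool tries to keep from affecting accuracy.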

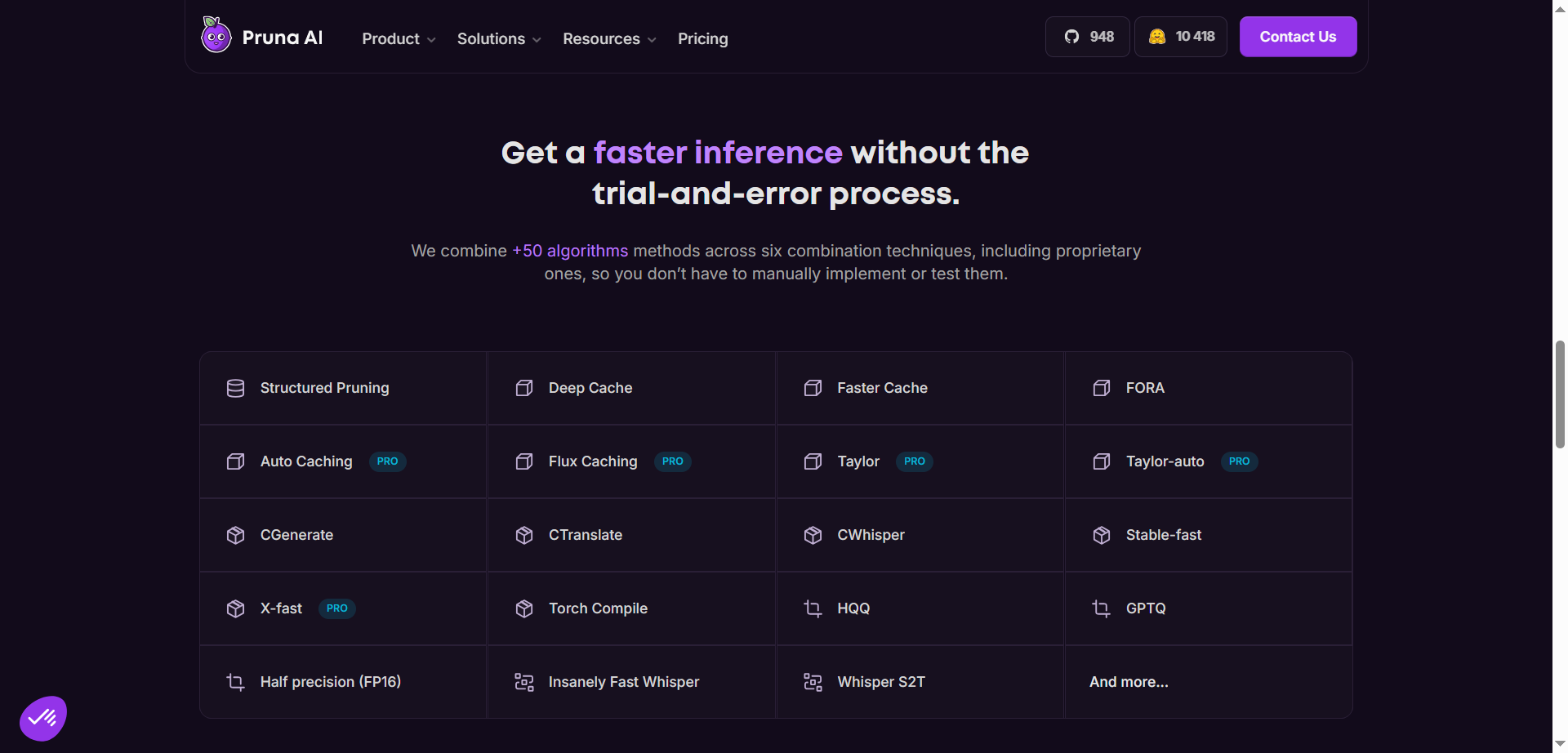

- Comprehensive Optimization Suite: Combines pruning, distillation, caching, and other techniques for maximum efficiency.

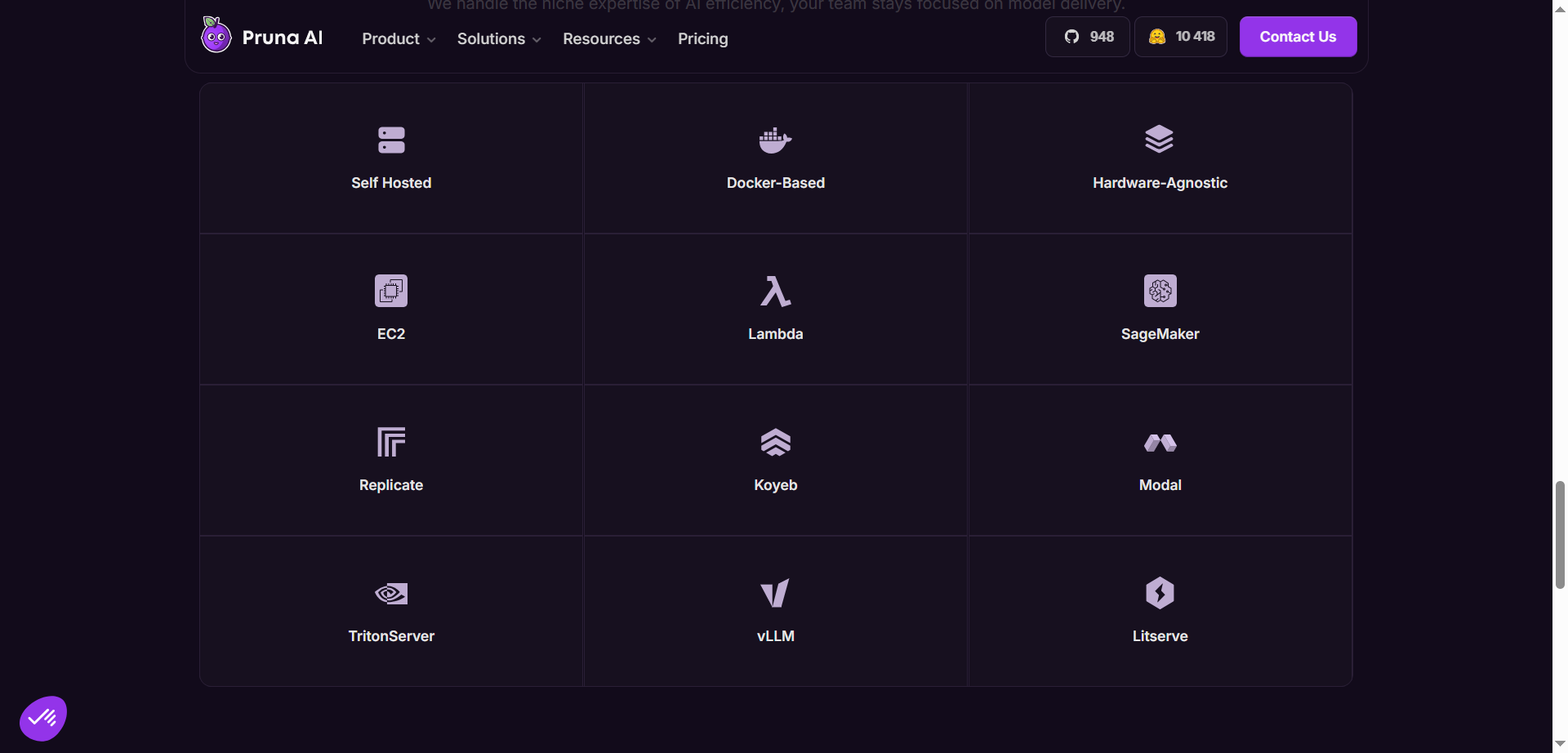

- Cross-Platform Support: Compatible with various hardware, cloud, and serving platforms.

- Minimal Quality Loss: Evaluates model accuracy after compression to keep quality loss small.

- Open Source Framework: Accessible to developers for customization and transparency.

- Cost & Energy Efficient: Reduces compute usage and, with it, cost and energy consumption.
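To make the idea of checking quality after compression concrete, the sketch below compares a toy model with a compressed stand-in on the same inputs and reports the gap between their outputs. Everything here is an assumption made for illustration: `quality_report`, `linear`, the sample weights, and the snapping of weights to multiples of 0.25 are hypothetical and do not represent Pruna's evaluation toolkit.

```python
from typing import Callable, Dict, List, Sequence

Model = Callable[[Sequence[float]], float]


def quality_report(baseline: Model, compressed: Model,
                   inputs: List[Sequence[float]]) -> Dict[str, float]:
    """Run both models on the same inputs and summarize the output gap."""
    if not inputs:
        raise ValueError("need at least one evaluation input")
    errors = [abs(baseline(x) - compressed(x)) for x in inputs]
    return {
        "mean_abs_error": sum(errors) / len(errors),
        "max_abs_error": max(errors),
    }


def linear(weights: Sequence[float]) -> Model:
    """Build a toy model: the dot product of fixed weights with the input."""
    return lambda x: sum(w * v for w, v in zip(weights, x))


# Hypothetical original weights and a crude compressed copy
# (each weight snapped to the nearest multiple of 0.25).
original = [0.5, -0.25, 0.1]
snapped = [round(w * 4) / 4 for w in original]

eval_inputs = [[1.0, 1.0, 1.0], [2.0, 0.0, 4.0], [0.0, 3.0, 0.0]]
report = quality_report(linear(original), linear(snapped), eval_inputs)

# An acceptance threshold like this one is a project-specific choice.
acceptable = report["max_abs_error"] <= 0.5
```

In practice the comparison would use a task metric such as accuracy on a held-out dataset, but the workflow is the same: run the original and the compressed model on identical inputs and decide whether the difference is tolerable.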

Pros

- Streamlines model optimization with simple integration.

- Supports a wide range of AI models and applications.

- Helps reduce cloud compute and hardware costs effectively.

- Promotes greener AI with energy-saving compression techniques.

Cons

- Certain algorithms require specific hardware, such as GPUs.

- Some advanced features are locked behind a Pro subscription.

- Requires technical knowledge to use fully.

- Optimization results depend heavily on the quality of the original model.

Open-Source

$0.00

- Combination Engine

- Accelerate Library

- Ultra-Low Warm-Up Time

- Hot LoRA Swapping

- Evaluation Toolkit

- Compatibility Layer

- Discord Community

Enterprise

Contact sales

- Pre-optimized Models Deployed for you

- Early access to our most Advanced Algorithms

- Closed-Source and Custom Model Adaptation

- Automatic Optimization Updates

Support

- Priority Deployment for new OSS Models

- Expert Guidance on Model Library

- Model Benchmarks

- Priority Support & Private Slack

Similar AI Tools

Mirai

TryMirai is an on-device AI infrastructure platform that enables developers to integrate high-performance AI models directly into their apps with minimal latency, full data privacy, and no inference costs. The platform includes an optimized library of models (ranging in parameter sizes such as 0.3B, 0.5B, 1B, 3B, and 7B) to match different business goals, ensuring both efficiency and adaptability. It offers a smart routing engine to balance performance, privacy, and cost, and tools like SDKs for Apple platforms (with upcoming support for Android) to simplify integration. Users can deploy AI capabilities—such as summarization, classification, general chat, and custom use cases—without relying on cloud offloading, which reduces dependencies on network connectivity and protects user data.

Unsloth AI

Unsloth.AI is an open-source platform designed to accelerate and simplify the fine-tuning of large language models (LLMs). By combining manually derived math, custom GPU kernels, and efficient optimization techniques, Unsloth reports training speeds up to 30x faster than traditional methods without compromising model accuracy. It supports a wide range of popular models, including Llama, Mistral, Gemma, and BERT, and runs on a variety of GPUs, from the entry-level Tesla T4 to the high-end H100, as well as AMD and Intel GPUs. This lets developers, researchers, and AI enthusiasts fine-tune models efficiently even with limited computational resources. With its focus on performance, scalability, and flexibility, Unsloth.AI is suitable for both academic research and commercial applications.

Abacus.AI

ChatLLM Teams by Abacus.AI is an all‑in‑one AI assistant that unifies access to top LLMs, image and video generators, and powerful agentic tools in a single workspace. It includes DeepAgent for complex, multi‑step tasks, code execution with an editor, document/chat with files, web search, TTS, and slide/doc generation. Users can build custom chatbots, set up AI workflows, generate images and videos from multiple models, and organize work with projects across desktop and mobile apps. The platform is OpenAI‑style in usability but adds operator features for running tasks on a computer, plus DeepAgent Desktop and AppLLM for building and hosting small apps.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

Nexa AI

Nexa.ai is an enterprise-grade AI optimization and deployment platform focused on accelerating generative AI performance on any device. It allows businesses to run advanced multimodal models—covering text, audio, visuals, and function calling—up to 9x faster and with 4x less memory usage. By using intelligent compression techniques like quantization, pruning, and distillation, Nexa enables models to operate efficiently without loss of accuracy. The platform supports a wide range of hardware—CPU, GPU, and NPU—from major chipmakers and ensures high accuracy, privacy, and cost efficiency for AI deployments at scale.

Langchain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

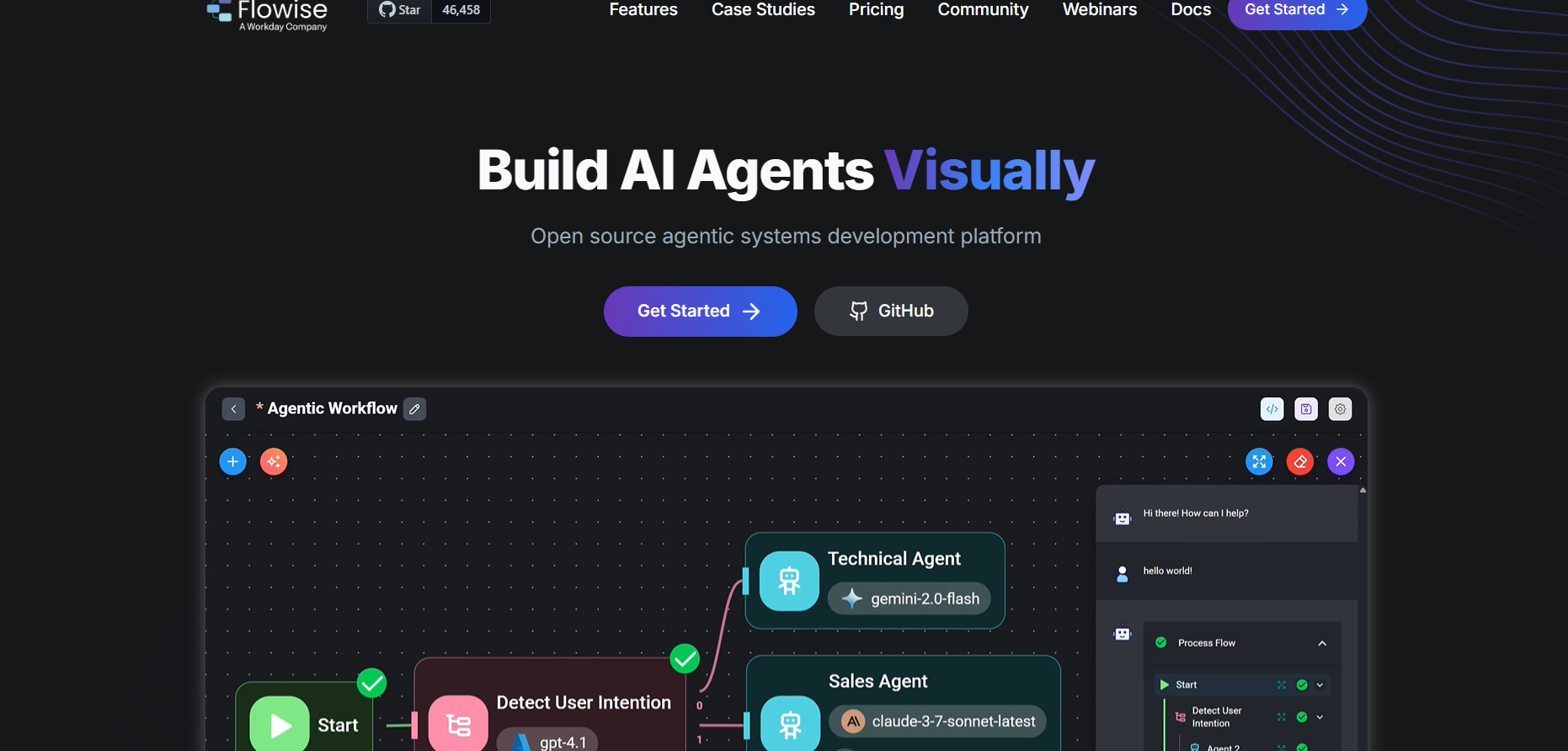

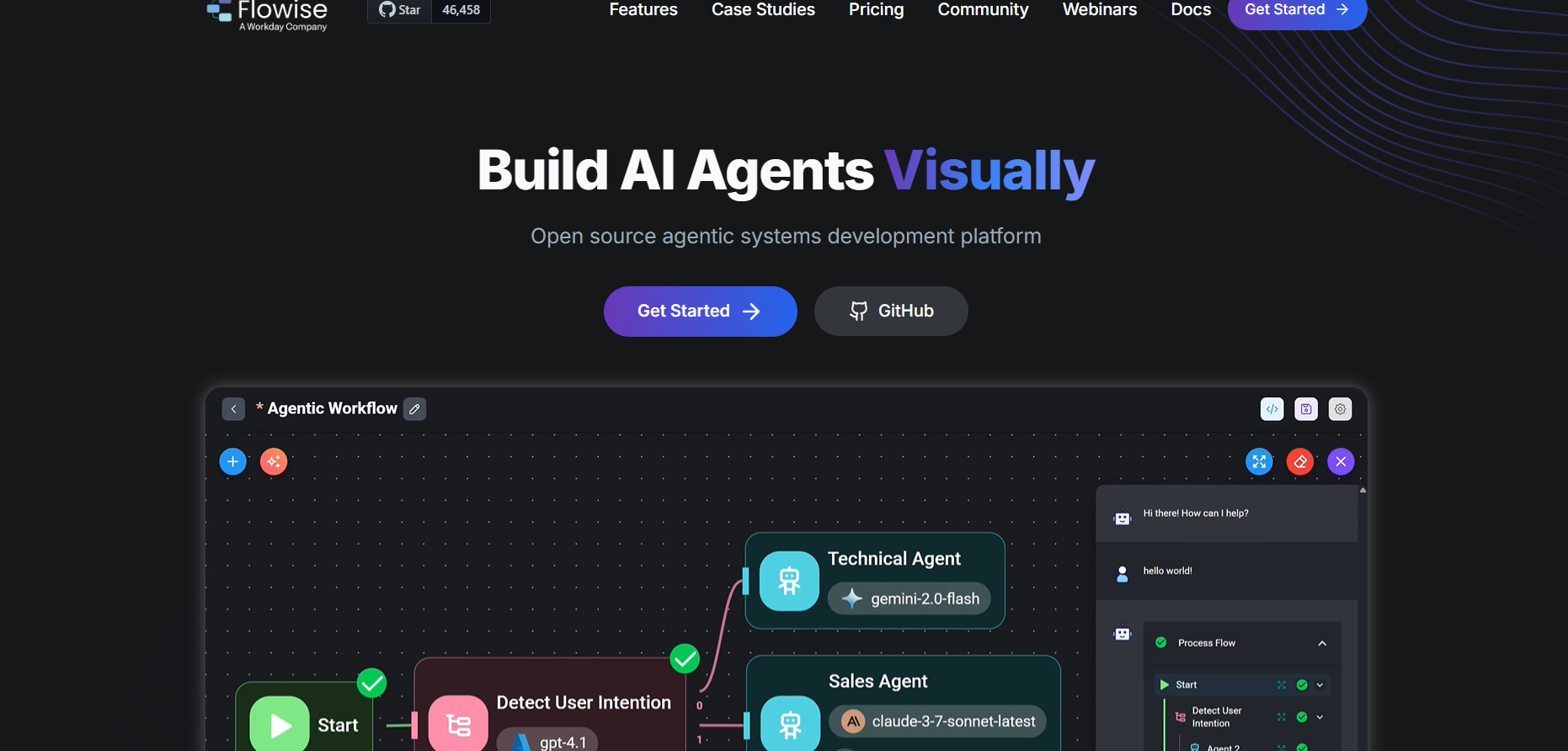

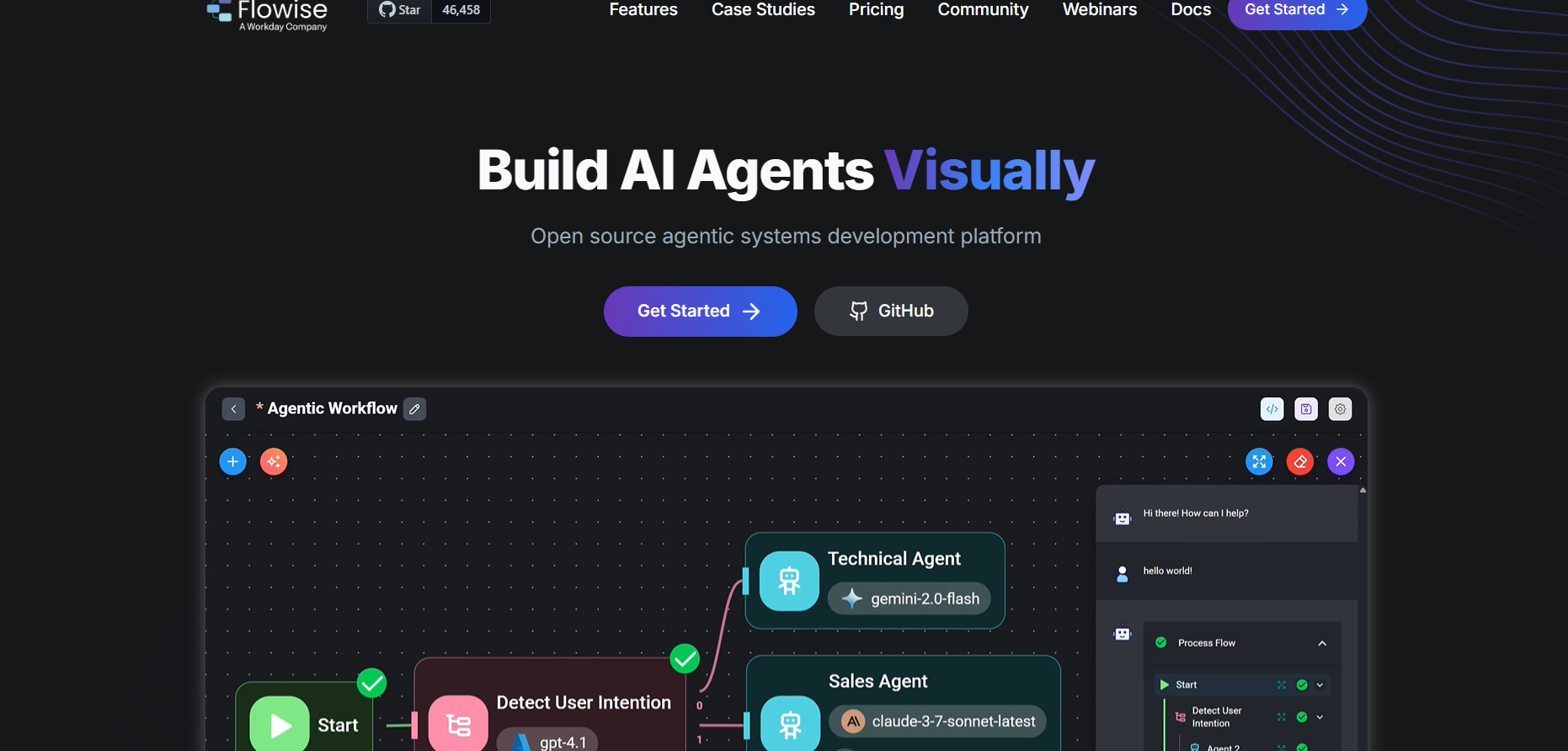

Flowise AI

Flowise AI is an open-source, visual tool that allows users to build, deploy, and manage AI workflows and chatbots powered by large language models without needing to code. It provides a drag-and-drop interface where users can visually connect LangChain components, APIs, data sources, and models to create complex AI systems easily. With Flowise AI, developers, analysts, and businesses can build chatbots, RAG pipelines, or automation systems through an intuitive UI rather than scripting everything manually. Its no-code design accelerates prototyping and deployment, enabling faster experimentation with LLM-powered workflows.

Ask Any Model

AskAnyModel is a unified AI interface that allows users to interact with multiple leading AI models — such as GPT, Claude, Gemini, and Mistral — from a single platform. It eliminates the need for multiple subscriptions and interfaces by bringing top AI models into one streamlined environment. Users can compare responses, analyze outputs, and select the best AI model for specific tasks like content creation, coding, data analysis, or research. AskAnyModel empowers individuals and teams to harness AI diversity efficiently, offering advanced tools for prompt testing, model benchmarking, and workflow integration.

Awan LLM

Awan LLM is a cost-effective LLM inference API platform designed for power users and developers. Unlike API providers that charge per token, it offers a monthly subscription that lets users send and receive unlimited tokens, up to the model's context limit. It supports unrestricted use of LLMs without censorship or constraints. The platform runs on privately owned data centers and GPUs, which allows it to offer efficient and scalable AI services. Use cases include AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

LM Studio

LM Studio is a desktop application for downloading and running large language models (LLMs) such as Llama, MPT, and Gemma directly on your own computer. It supports Mac, Windows, and Linux. LM Studio focuses on privacy and control: models run locally rather than on cloud-based services, so data stays on the user’s device. It offers an easy-to-install interface with step-by-step setup guidance, and once a model is downloaded, developers, researchers, and AI enthusiasts can use it without an internet connection.

ModelRiver

ModelRiver is a unified AI integration platform that allows developers to access multiple AI providers through a single API. Instead of integrating each model separately, teams integrate once and gain access to a wide range of AI services. Built-in failover ensures applications remain online even if one provider experiences issues. ModelRiver is designed for reliability, scalability, and simplicity, making it ideal for production environments that depend on AI availability.

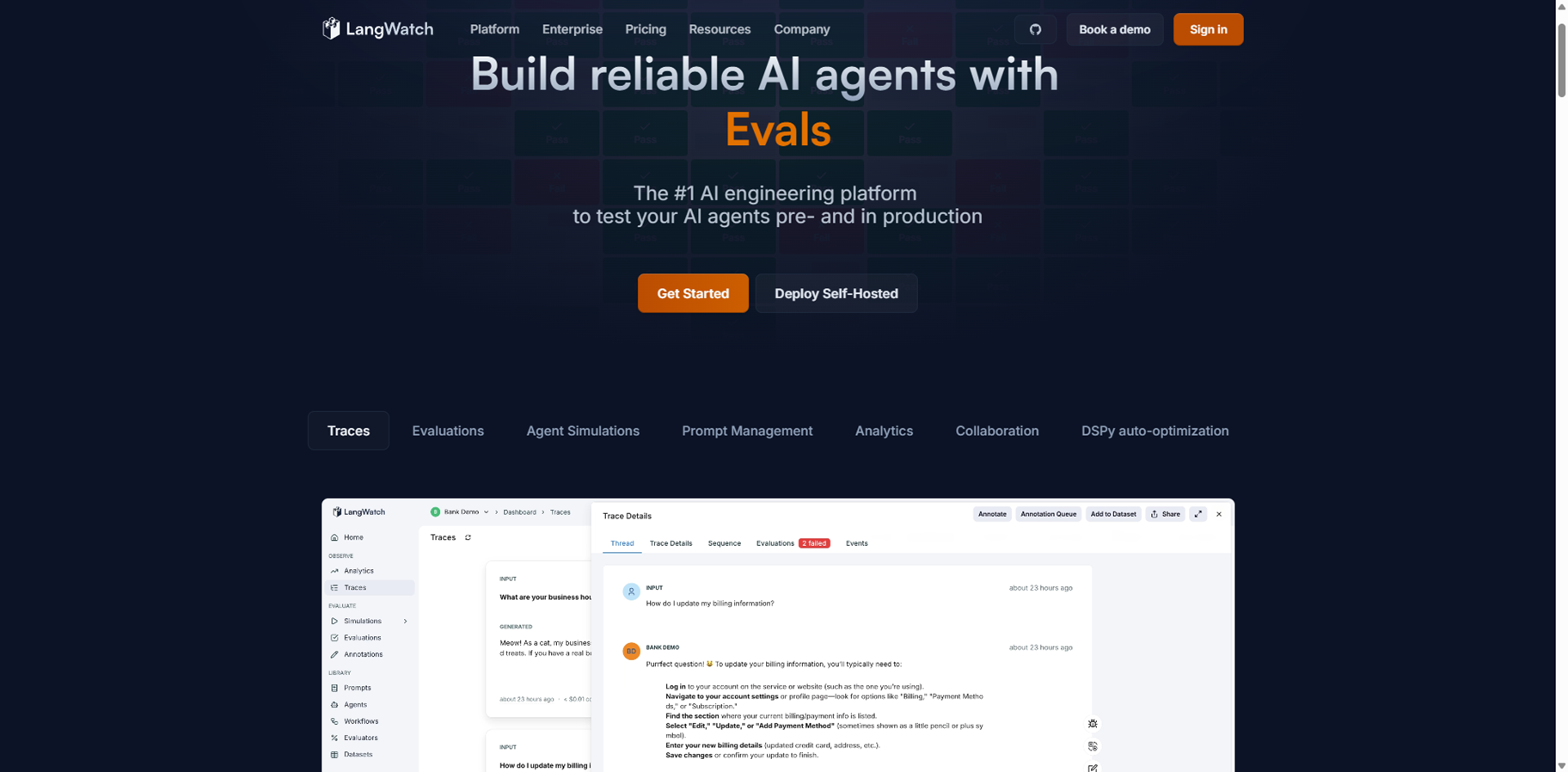

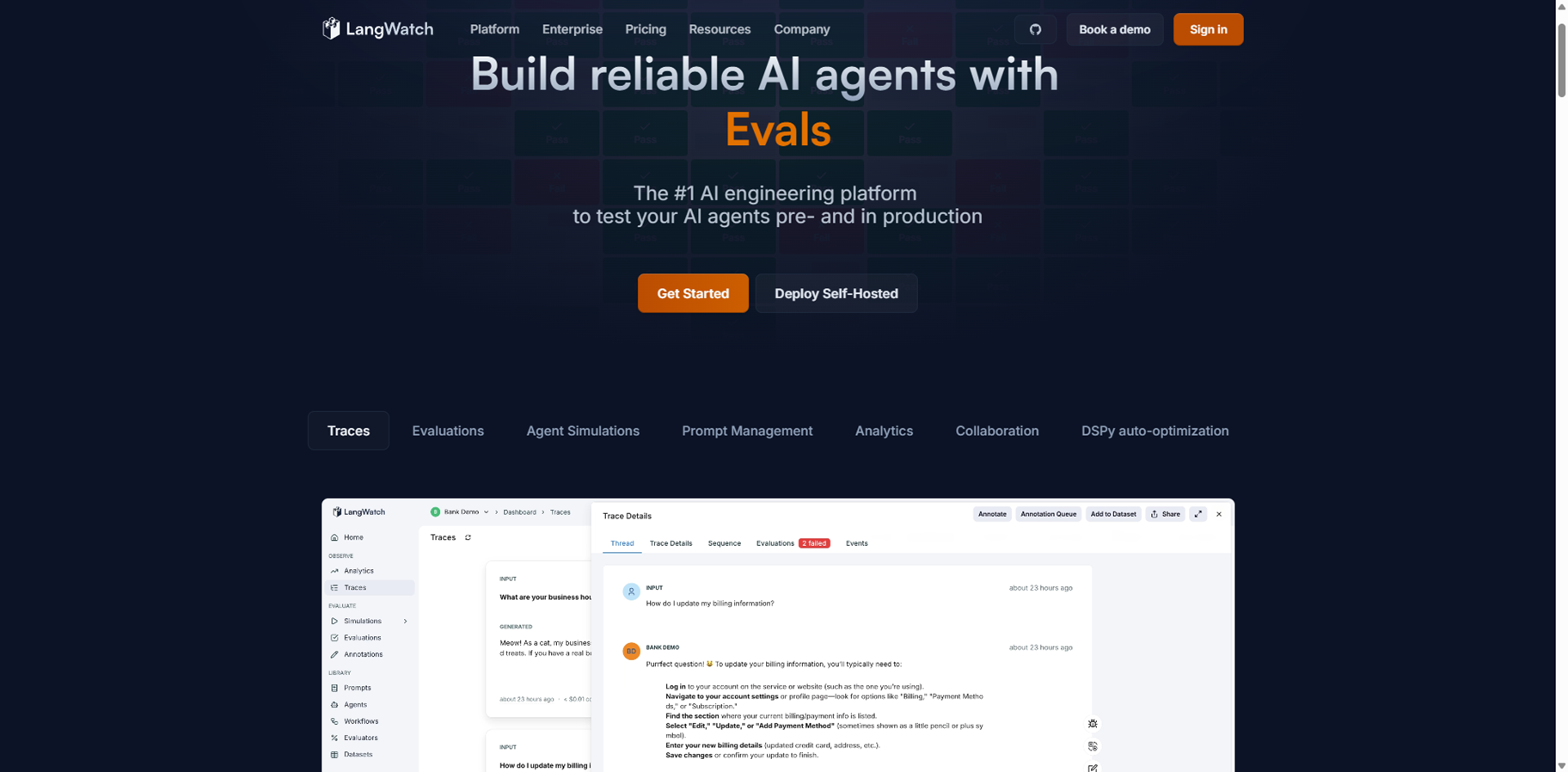

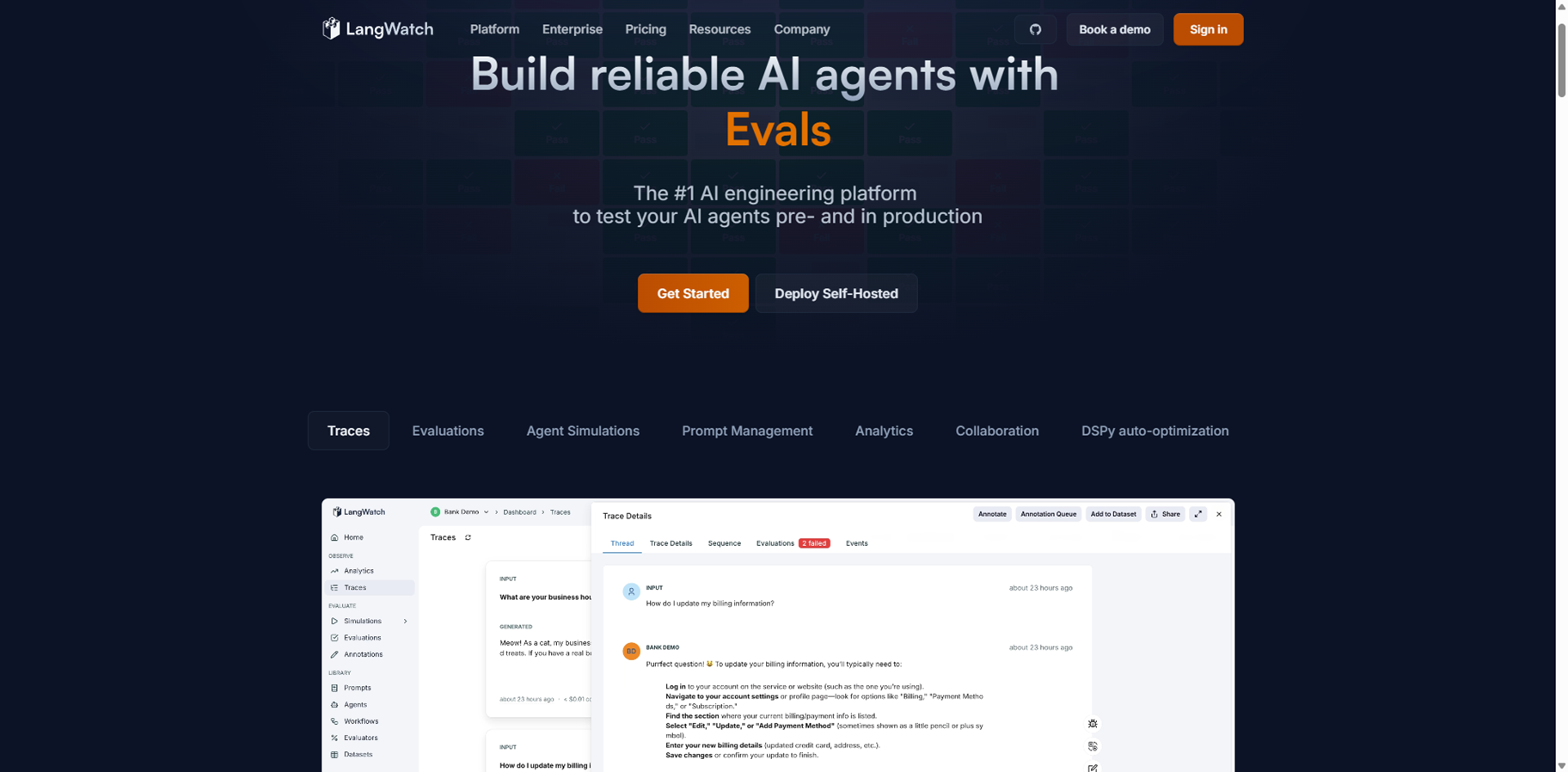

LangWatch

LangWatch.ai is the leading AI engineering platform built specifically to test, evaluate, and monitor AI agents from prototype through production, helping thousands of developers ship reliable complex AI without guesswork. It creates a continuous quality loop with tools like traces, custom evaluations, agent simulations, prompt management, analytics, collaboration features, and DSPy auto-optimization, boasting 400k monthly installs, 500k daily evaluations to curb hallucinations, and 5k GitHub stars. Teams use it to build prompts and models with version control and safe rollouts, run batch tests and synthetic conversations across scenarios, and track every change's impact programmatically or via UI. Fully open-source with OpenTelemetry integration, it works with any LLM, agent framework, or model via simple Python/TypeScript installs, offering self-hosting, enterprise security like ISO27001/SOC2, and no data lock-in for seamless tech stack fit.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai.