The model can also edit existing images (inpainting), expand images beyond their original borders (outpainting), and create artistic interpretations of text descriptions.
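For API users, inpainting worked by uploading the original picture together with a mask: a PNG of the same size whose fully transparent pixels (alpha 0) mark the region DALL·E 2 should repaint. The sketch below builds such a mask using only the Python standard library. It assumes the requirements described in OpenAI's Images API reference for `dall-e-2` edits (square PNG under 4 MB, mask with the same dimensions as the image). `make_inpainting_mask` and its rectangular `box` argument are hypothetical helpers for illustration, not part of any OpenAI SDK.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def _png_chunk(tag: bytes, data: bytes) -> bytes:
    """Encode one PNG chunk: big-endian length, type, payload, CRC-32 of type+payload."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def make_inpainting_mask(size: int, box: tuple[int, int, int, int]) -> bytes:
    """Return a square RGBA PNG that is opaque everywhere except inside `box`.

    Hypothetical helper: `box` is (left, top, right, bottom) in pixels, with
    right/bottom exclusive. Pixels inside it get alpha 0, which is how the
    edit endpoint was documented to recognize the area to regenerate.
    """
    left, top, right, bottom = box
    if not (0 <= left < right <= size and 0 <= top < bottom <= size):
        raise ValueError("box must lie inside the square canvas")

    raw = bytearray()
    for y in range(size):
        raw.append(0)  # PNG filter type 0 ("None") at the start of every scanline
        for x in range(size):
            inside = left <= x < right and top <= y < bottom
            raw += bytes((0, 0, 0, 0 if inside else 255))  # R, G, B, alpha

    # IHDR: width, height, bit depth 8, color type 6 (RGBA), default compression/filter/interlace
    ihdr = struct.pack(">IIBBBBB", size, size, 8, 6, 0, 0, 0)
    return (
        PNG_SIGNATURE
        + _png_chunk(b"IHDR", ihdr)
        + _png_chunk(b"IDAT", zlib.compress(bytes(raw)))
        + _png_chunk(b"IEND", b"")
    )


# Mark the central 128x128 region of a 256x256 image for regeneration.
mask = make_inpainting_mask(256, (64, 64, 192, 192))
# To save it: open("mask.png", "wb").write(mask)
```

Under the documented behavior, these bytes would be uploaded as the `mask` file alongside the original `image` and a `prompt`; the opaque pixels tell the model which parts of the picture to keep.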

❗ Note: OpenAI has phased out DALL·E 2 in favor of DALL·E 3, which offers more advanced image generation.

✅ Artists & Designers – Generate unique artwork, illustrations, or concepts.

✅ Marketers & Content Creators – Create custom visuals for ads, social media, and branding.

✅ Writers & Storytellers – Design book covers, concept art, and AI-assisted storytelling visuals.

✅ Educators & Students – Create educational diagrams and engaging graphics.

✅ Anyone Needing Custom AI-Generated Images – Turn ideas into stunning visuals effortlessly.

How to Use DALL·E 2

While DALL·E 2 is no longer actively supported, here’s how it worked:

1️⃣ Access DALL·E 2: Previously available through OpenAI’s platform or API, but now replaced by DALL·E 3.

2️⃣ Enter a Prompt: Users would type a detailed description like: “A futuristic cityscape at sunset, in cyberpunk style.”

3️⃣ Generate & Refine: The model created multiple image variations based on the prompt. Users could refine results by tweaking descriptions.

4️⃣ Edit & Expand (Inpainting & Outpainting): DALL·E 2 allowed users to edit parts of an image (inpainting) or extend the canvas beyond its borders (outpainting).

5️⃣ Download & Use the Image: Once satisfied, users could download the image for personal or commercial use (within OpenAI’s guidelines).

Since OpenAI recommends DALL·E 3 for all new image-generation tasks, users should now explore DALL·E 3 for better quality and functionality.
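The generate-and-refine steps above map onto OpenAI's Images API. The following sketch only builds and checks the JSON body for a `POST` to `https://api.openai.com/v1/images/generations`. The `model`, `prompt`, `n`, and `size` fields and the `dall-e-2` limits it enforces (1 to 10 images per request; 256x256, 512x512, or 1024x1024 pixels; prompts up to 1,000 characters) reflect the API reference as we understand it and may have changed since DALL·E 2 was retired. `build_generation_request` and `send` are illustrative names, and `send` is deliberately not called.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/images/generations"
# dall-e-2 limits as documented in OpenAI's Images API reference (assumption: may have changed)
DALLE2_SIZES = {"256x256", "512x512", "1024x1024"}
DALLE2_MAX_IMAGES = 10
DALLE2_MAX_PROMPT_CHARS = 1000


def build_generation_request(prompt: str, n: int = 1, size: str = "1024x1024") -> dict:
    """Build the JSON body asking dall-e-2 for `n` images of one prompt."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if len(prompt) > DALLE2_MAX_PROMPT_CHARS:
        raise ValueError("prompt is longer than dall-e-2 accepted")
    if not 1 <= n <= DALLE2_MAX_IMAGES:
        raise ValueError("n must be between 1 and 10")
    if size not in DALLE2_SIZES:
        raise ValueError(f"unsupported size for dall-e-2: {size!r}")
    return {"model": "dall-e-2", "prompt": prompt, "n": n, "size": size}


def send(body: dict, api_key: str) -> dict:
    """POST the body and return the parsed JSON response (not called below)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Step 3: ask for four 512x512 variations of the example prompt.
body = build_generation_request(
    "A futuristic cityscape at sunset, in cyberpunk style.", n=4, size="512x512"
)
print(json.dumps(body, indent=2))
```

A successful response would contain a `data` list with one entry per image, each holding a `url` by default. Refining a result simply meant adjusting the `prompt` and sending a new request.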

Key Features

- Creative & Detailed Images: Generated high-quality, original artwork from text.

- Inpainting & Outpainting: Users could modify parts of an image or expand it beyond its borders, adding new elements seamlessly.

- Easy & Fast AI Art Generation: No advanced design skills required.

- Realistic & Artistic Styles: Capable of creating both photo-realistic and artistic visuals.

Pros

- Simple & Intuitive – Easy for beginners to generate high-quality AI images.

- Variety of Styles – From photorealistic images to digital art and fantasy.

- AI-Assisted Editing – Allowed users to edit, retouch, or expand images.

Cons

- No Longer Available – OpenAI has replaced DALL·E 2 with DALL·E 3.

- Limited Control – Follows prompts and user input less closely than DALL·E 3.

- Lower Image Quality Compared to Newer Models – DALL·E 3 generates more detailed, high-resolution outputs.

Pricing (ChatGPT plans)

Free ($0.00/month)

- Standard voice mode
- Real-time data from the web with search
- Limited access to GPT-4o and o4-mini
- Limited access to file uploads, advanced data analysis, and image generation
- Use of custom GPTs

Plus ($20.00/month)

- Extended limits on messaging, file uploads, advanced data analysis, and image generation
- Standard and advanced voice mode
- Access to deep research, multiple reasoning models (o4-mini, o4-mini-high, and o3), and a research preview of GPT-4.5
- Create and use tasks, projects, and custom GPTs
- Limited access to Sora video generation
- Opportunities to test new features

Pro ($200.00/month)

- Unlimited access to all reasoning models and GPT-4o
- Unlimited access to advanced voice
- Extended access to deep research, which conducts multi-step online research for complex tasks
- Access to research previews of GPT-4.5 and Operator
- Access to o1 pro mode, which uses more compute for the best answers to the hardest questions
- Extended access to Sora video generation
- Access to a research preview of the Codex agent

Similar AI Tools

OpenAI GPT-4o

GPT-4o, launched in May 2024 as OpenAI’s flagship model, offers faster, more powerful, and more cost-efficient natural language processing. It can handle text, vision, and audio in real time, making it the first OpenAI model to process multimodal inputs natively. It’s significantly faster and cheaper than GPT-4 Turbo, matches it on English text and code, and improves multilingual support.

OpenAI o1

o1 is a reasoning model developed by OpenAI that is trained to work through a problem step by step, using an internal chain of thought, before it answers. This makes it well suited to complex math, coding, and science tasks. The extra reasoning generally means higher latency and cost per request than general-purpose models such as GPT-4o, so o1 is best reserved for problems that benefit from deeper reasoning.

OpenAI o3-mini

OpenAI o3-mini is a small, efficient reasoning model from OpenAI’s "o3" series, designed to balance cost, speed, and intelligence. It is optimized for faster inference and lower computational cost, making it a practical choice for businesses and developers who need AI-powered applications without the expense of larger models such as GPT-4o.

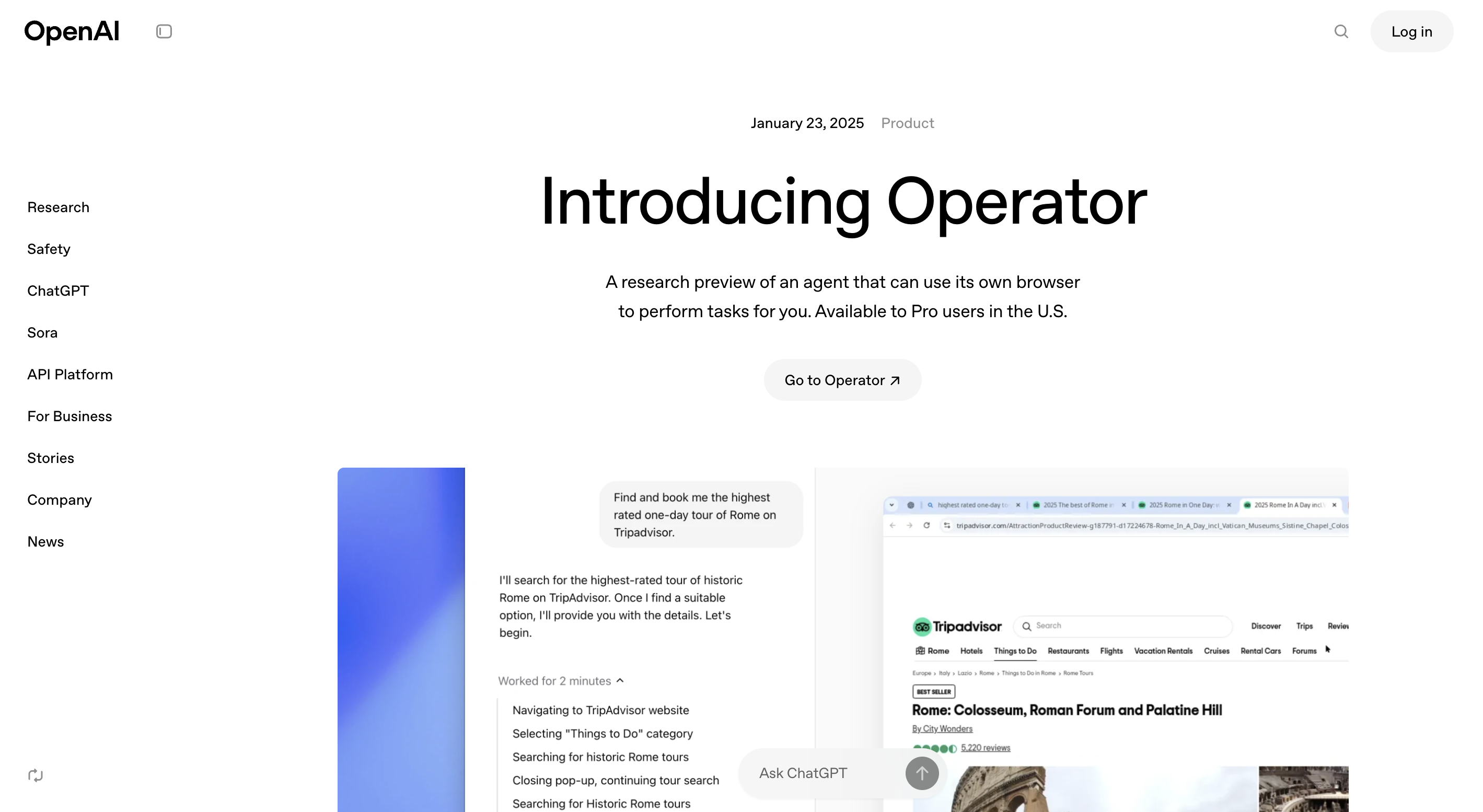

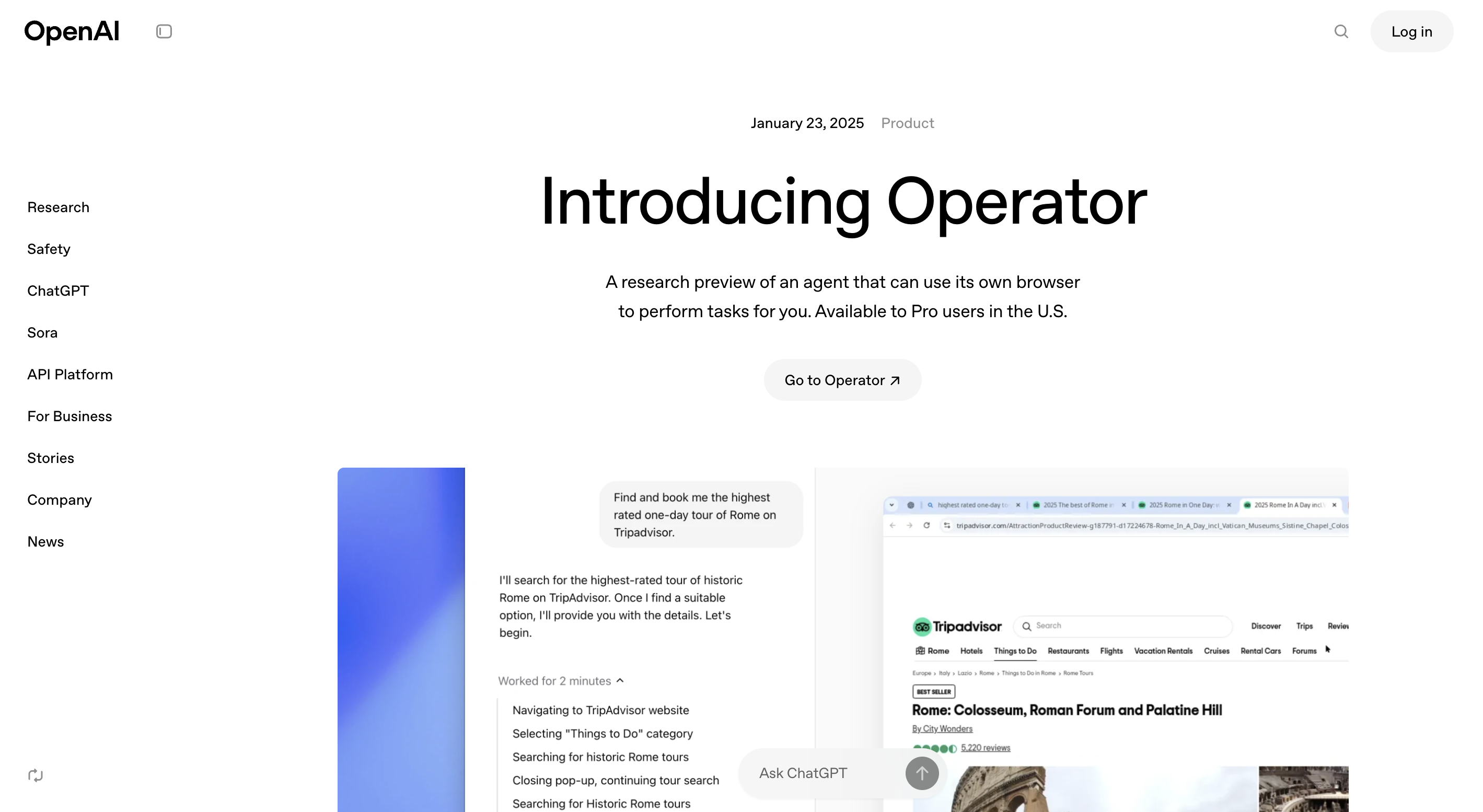

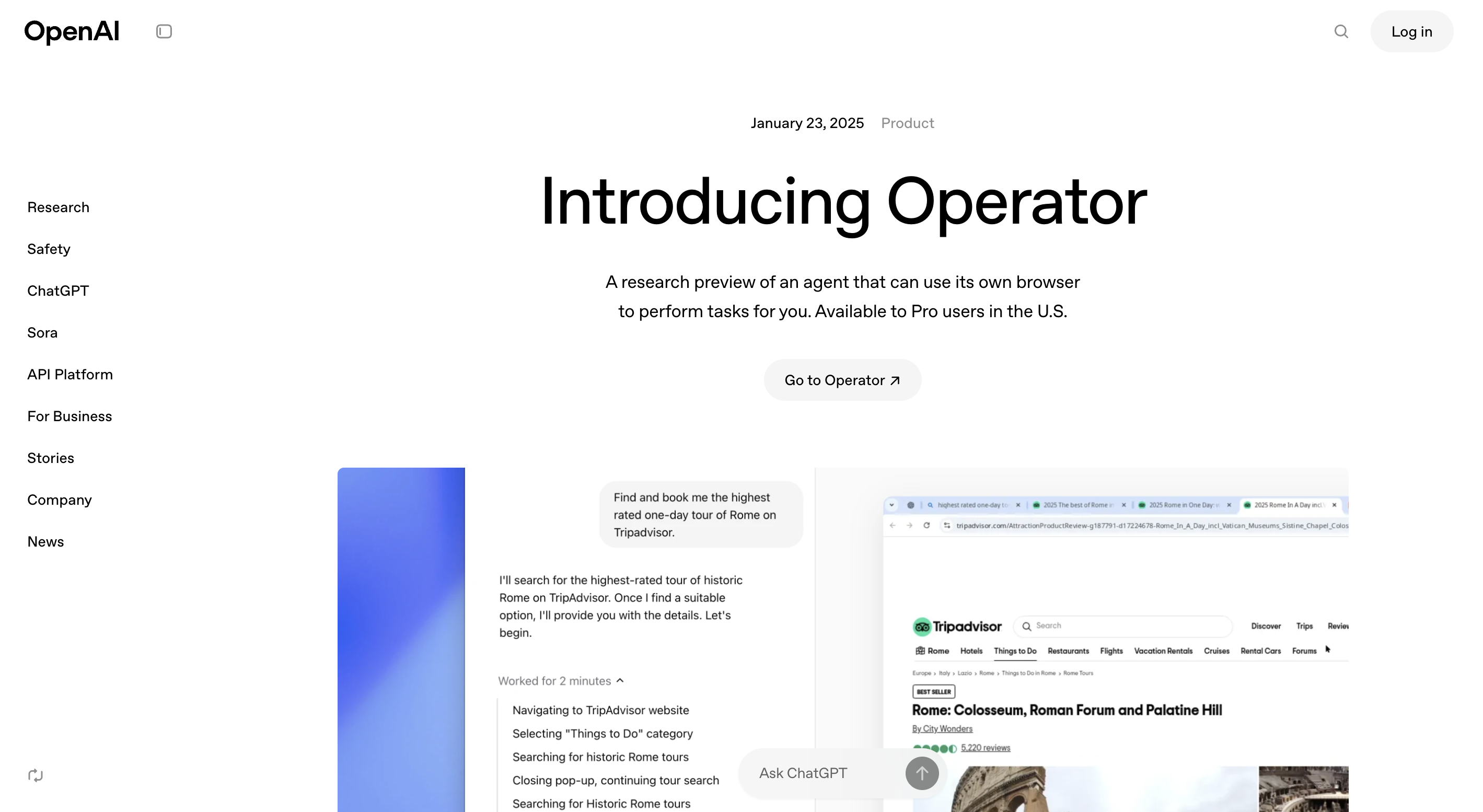

OpenAI Operator

OpenAI Operator is an AI agent that uses its own web browser to carry out tasks for users, such as filling out forms or placing online orders. It is powered by OpenAI’s Computer-Using Agent (CUA) model, which reads screenshots of web pages and interacts with them by typing, clicking, and scrolling. Operator launched in January 2025 as a research preview for ChatGPT Pro users.

OpenAI Deep Research

Deep Research is an AI-powered agent that autonomously browses the web, interprets and analyzes text, images, and PDFs, and generates comprehensive, cited reports on user-specified topics. It leverages OpenAI's advanced o3 model to conduct multi-step research tasks, delivering results within 5 to 30 minutes.

OpenAI Realtime API

OpenAI’s Realtime API lets developers build low-latency, conversational apps that respond to user input as it arrives. It streams audio and text to and from GPT-4o-based models over a persistent connection, so voice apps can work speech-to-speech instead of chaining separate transcription and text-to-speech steps, which keeps response latency low and conversations natural. It suits live voice assistants, responsive chatbots, and interactive multiplayer tools powered by AI.

OpenAI Omni Moderation

omni-moderation-latest is OpenAI’s most advanced content moderation model, designed to detect and flag harmful, unsafe, or policy-violating content in both text and images across many languages. Built on GPT-4o, it uses multimodal and multilingual understanding to provide more robust moderation than OpenAI’s earlier text-only moderation models. This model is particularly effective in identifying nuanced and culturally specific toxic content, including implicit insults, sarcasm, and aggression that general-purpose systems might overlook.

Grok 2

Grok 2 is xAI’s second-generation chatbot that extends Grok’s capabilities with real-time web access, multimodal features (text, vision understanding, and image generation via FLUX.1), and improved reasoning performance. It’s available to X Premium and Premium+ users and through xAI’s enterprise API.

grok-2-image-latest

Grok 2 Image (a.k.a. grok-2-image-latest) is xAI’s vision-forward extension of its Grok 2 model. Released in December 2024, it merges photorealistic image generation via Aurora with strong image understanding capabilities, supporting object detection, chart analysis, OCR, and visual reasoning tasks. It operates in a unified multimodal pipeline that accepts text and image inputs with a context of up to 32K tokens.

grok-2-image-1212

Grok 2 Image 1212 (also known as grok-2-image-1212) is xAI’s December 2024 release of their unified image generation and understanding model. Built on Grok 2, it combines Aurora-powered photorealistic image creation with strong multimodal comprehension—handling image editing, vision QA, chart interpretation, and document analysis—within a single API and 32,768-token context.

ChatBetter

ChatBetter is an AI platform designed to unify access to all major large language models (LLMs) within a single chat interface. Built for productivity and accuracy, ChatBetter leverages automatic model selection to route every query to the most capable AI—eliminating guesswork about which model to use. Users can directly compare responses from OpenAI, Anthropic, Google, Meta, DeepSeek, Perplexity, Mistral, xAI, and Cohere models side by side, or merge answers for comprehensive insights. The system is crafted for teams and individuals alike, enabling complex research, planning, and writing tasks to be accomplished efficiently in one place.

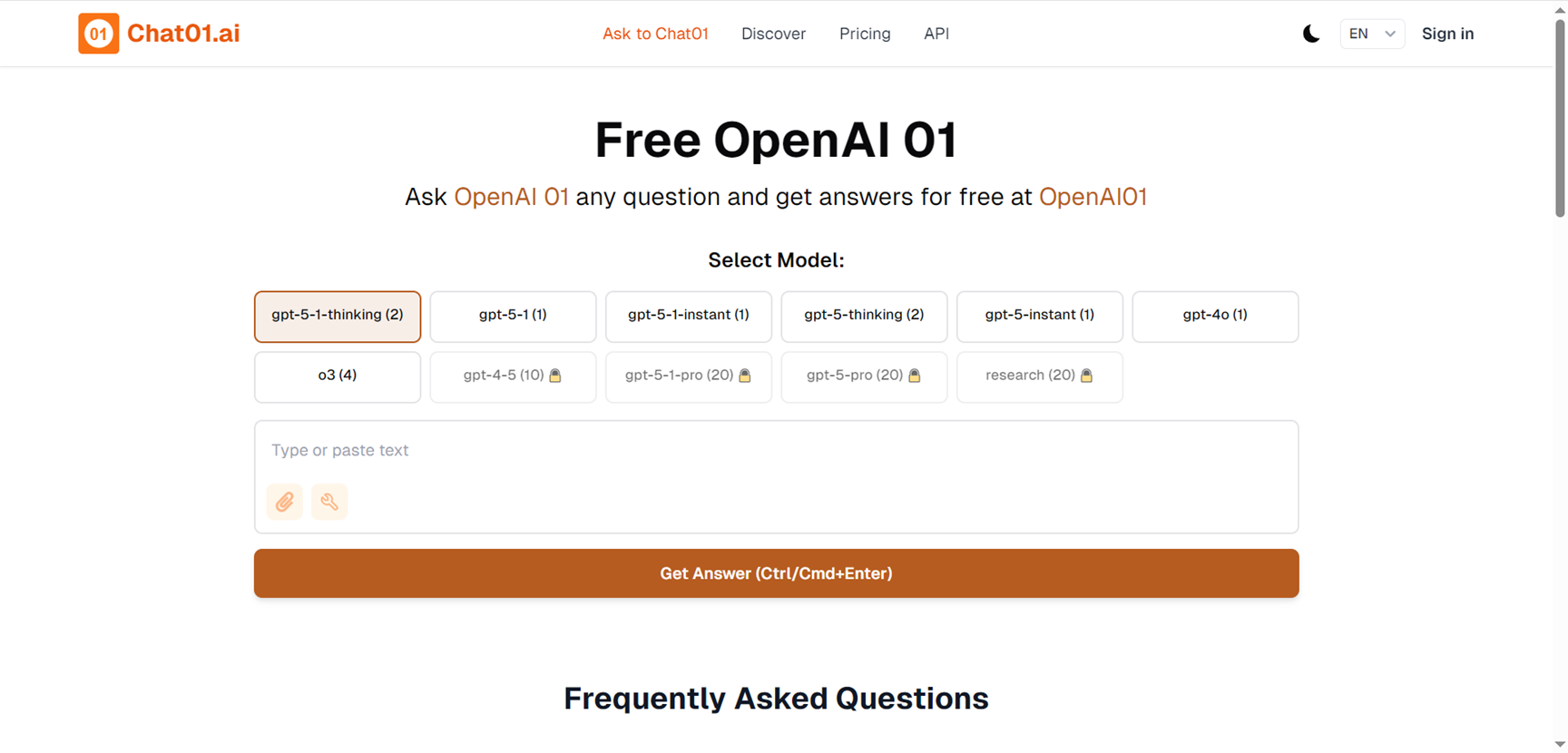

Chat01.ai

OpenAI01.net is a third-party, browser-based chat platform that lets you use OpenAI’s o1 family of advanced reasoning models for free, without needing your own API key or paid account. Branded as Chat01.ai in some places, it focuses on giving users generous access to o1-preview and o1-mini through a simple chat interface so they can tackle complex math, coding, science, and problem-solving tasks. The site often features public question-and-answer threads, allowing you to study other users’ prompts and responses to improve your own prompting skills. It acts as an accessible front-end to powerful OpenAI models, but is not officially operated by OpenAI.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai