Whether you're building a live voice assistant, a responsive chatbot, or interactive multiplayer tools powered by AI, this API puts the "real" in real-time AI.

- Conversational AI Developers: Build ultra-responsive chatbots, assistants, and agents that interact with users like real humans.

- Voice App Builders: Integrate with speech-to-text and text-to-speech for voice assistants that talk back without awkward pauses.

- Customer Support Platforms: Reduce wait time between user queries and AI-generated responses to elevate UX.

- Gaming & Virtual Worlds Creators: Enable NPCs and avatars to respond dynamically and instantly.

- Education & Training Products: Deliver tutoring, real-time feedback, and adaptive learning tools powered by lightning-fast AI.

- Enterprise Teams: Integrate real-time LLMs into productivity tools, search, automation, and co-pilot workflows.

🤖 How Does It Work?

- Step 1: Access the Streaming API: Call the /v1/chat/completions endpoint with GPT-4o and stream=true. Tokens arrive as server-sent events as soon as the model generates them, so there is no need to wait for the full answer.

- Step 2: Connect to the Real-Time API: The new low-latency real-time API can be accessed via a WebSocket connection for the absolute fastest response (sub-300ms round-trip), enabling seamless, conversational use cases.

- Step 3: Process and Display Output: As tokens stream in, render them in your UI or feed them to a TTS engine or virtual character in real time.

- Step 4: Continue Interaction Smoothly: Because of the low latency and smarter turn-taking, users feel like they’re talking to someone live—not just prompting an AI.

- Bonus: Multimodal Capabilities: Real-time response isn't just for text. The API also supports vision and audio inputs—meaning truly multimodal interaction is finally here.
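Steps 1 and 3 above can be sketched in a few lines of Python. This is a minimal sketch, not an official client: it assumes the streamed response has already been read as text lines in the server-sent-events shape used by /v1/chat/completions (`data: {...}` chunks ending with `data: [DONE]`). The helper names `iter_deltas` and `iter_sentences` are hypothetical, and the sentence splitter is deliberately naive (it would also split on the dot in "3.14").

```python
import json
from typing import Iterable, Iterator


def iter_deltas(sse_lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from the 'data:' lines of a streamed chat completion."""
    for line in sse_lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


def iter_sentences(deltas: Iterable[str]) -> Iterator[str]:
    """Group fragments into sentences so a TTS engine receives speakable units."""
    buffer = ""
    for delta in deltas:
        buffer += delta
        while True:
            # Position of the earliest sentence terminator, if any (naive split).
            ends = [i for i in (buffer.find(p) for p in ".!?") if i != -1]
            if not ends:
                break
            cut = min(ends) + 1
            sentence, buffer = buffer[:cut].strip(), buffer[cut:]
            if sentence:
                yield sentence
    if buffer.strip():
        yield buffer.strip()  # flush whatever is left when the stream ends


if __name__ == "__main__":
    # Hand-written sample lines standing in for a real network stream.
    sample = [
        'data: {"choices":[{"delta":{"role":"assistant"}}]}',
        "",
        'data: {"choices":[{"delta":{"content":"Hello"}}]}',
        'data: {"choices":[{"delta":{"content":" there. How"}}]}',
        'data: {"choices":[{"delta":{"content":" are you?"}}]}',
        "data: [DONE]",
    ]
    for sentence in iter_sentences(iter_deltas(sample)):
        print(sentence)
```

A UI would render each fragment from `iter_deltas` as it arrives, while a TTS engine is usually better served by the sentence-sized units from `iter_sentences`, since speaking token by token tends to sound choppy.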

- Ultra-Low Latency (Under 300ms): One of the first large-scale APIs to offer sub-second AI interactions.

- WebSocket Support: Persistent connections allow for faster streaming and turn-taking in conversations.

- Multimodal Input Support: Text, images, and audio—seamlessly processed in real time.

- Human-Like Turn-Taking: GPT-4o’s enhanced capabilities let it listen and respond simultaneously, enabling more natural conversation flow.

- Built on GPT-4o: The most advanced OpenAI model to date, with faster inference and native audio + vision understanding.

- 🚀 Insanely Fast: Ideal for voice assistants, chatbots, and tools that need near-instant replies.

- 🧠 Smarter Conversations: GPT-4o handles interruptions, rapid turns, and mixed-modal inputs better than previous models.

- 🎙️ Natural Speech UX: When paired with text-to-speech, it's the closest thing yet to speaking with a human.

- 🧩 Flexible Integration: REST and WebSocket support make it easy to plug into nearly any tech stack.

- 🔧 Still Requires Engineering Finesse: To hit ultra-low latency, you'll need solid WebSocket architecture and fast client rendering.

- 💸 Cost Considerations for Scale: Fast = frequent calls = higher usage. Watch those bills if you’re building at volume.

- 📚 Limited Docs for Edge Use Cases: Some use cases (like real-time audio-visual blending) still lack deep implementation examples.
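Because the latency figures above depend heavily on the client, it helps to measure them rather than assume them. The following is a minimal sketch of a time-to-first-token probe. The function name `measure_stream` and the injectable `clock` parameter are illustrative assumptions, and `tokens` can be any iterator of streamed fragments from whichever transport you use.

```python
import time
from typing import Callable, Dict, Iterable, Optional


def measure_stream(
    tokens: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Dict[str, Optional[float]]:
    """Consume a token stream and report time to first token and total time."""
    start = clock()
    first: Optional[float] = None
    count = 0
    for _ in tokens:
        if first is None:
            first = clock()  # timestamp of the first fragment only
        count += 1
    end = clock()
    return {
        "ttft_ms": None if first is None else (first - start) * 1000.0,
        "total_ms": (end - start) * 1000.0,
        "tokens": float(count),
    }


if __name__ == "__main__":
    # A fake clock keeps the demo deterministic; real code would use the default.
    ticks = iter([0.0, 0.25, 1.0])
    print(measure_stream(["Hi", " there", "!"], clock=lambda: next(ticks)))
```

Start the clock just before the request is sent, so that connection setup and network round-trip are included in the time-to-first-token figure.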

Free: $0.00

- Standard voice mode
- Real-time data from the web with search
- Limited access to GPT-4o and o4-mini
- Limited access to file uploads, advanced data analysis, and image generation
- Use custom GPTs

Plus: $20.00

- Extended limits on messaging, file uploads, advanced data analysis, and image generation
- Standard and advanced voice mode
- Access to deep research, multiple reasoning models (o4-mini, o4-mini-high, and o3), and a research preview of GPT-4.5
- Create and use tasks, projects, and custom GPTs
- Limited access to Sora video generation
- Opportunities to test new features

Pro: $200.00

- Unlimited access to all reasoning models and GPT-4o
- Unlimited access to advanced voice
- Extended access to deep research, which conducts multi-step online research for complex tasks
- Access to research previews of GPT-4.5 and Operator
- Access to o1 pro mode, which uses more compute for the best answers to the hardest questions
- Extended access to Sora video generation
- Access to a research preview of Codex agent

Similar AI Tools

OpenAI o1

o1 is a reasoning-focused language model developed by OpenAI. Rather than optimizing for the lowest latency, it spends additional compute working through a problem before it answers, which makes it better suited to complex tasks in math, science, and coding than to latency-sensitive chat. It is a reasonable choice for developers whose applications value answer quality on hard problems over response speed.

OpenAI o3

o3 is a reasoning model in OpenAI's o-series and the successor to o1, representing a significant step up in performance and reasoning ability. It is engineered for advanced language understanding, content generation, multilingual communication, and code-related tasks. It is currently used in various commercial and developer-facing tools.

OpenAI o1-pro

o1-pro is a version of OpenAI's o1 reasoning model that uses more compute to produce better answers to hard questions. That extra compute makes it slower and more expensive than o1, so it suits tasks where accuracy matters more than latency or cost, such as complex analysis, technical problem solving, and enterprise workloads that need reliable output.

OpenAI GPT 4.1 min..

GPT-4.1 Mini is a lightweight version of OpenAI’s advanced GPT-4.1 model, designed for efficiency, speed, and affordability without compromising much on performance. Tailored for developers and teams who need capable AI reasoning and natural language processing in smaller-scale or cost-sensitive applications, GPT-4.1 Mini brings the power of GPT-4.1 into a more accessible form factor. Perfect for chatbots, content suggestions, productivity tools, and streamlined AI experiences, this compact model still delivers impressive accuracy, fast responses, and a reliable understanding of nuanced prompts—all while using fewer resources.

LM Studio

LM Studio is a local AI toolkit that empowers users to discover, download, and run Large Language Models (LLMs) directly on their personal computers. It provides a user-friendly interface to chat with models, set up a local LLM server for applications, and ensures complete data privacy as all processes occur locally on your machine.

ChatBetter

ChatBetter is an AI platform designed to unify access to all major large language models (LLMs) within a single chat interface. Built for productivity and accuracy, ChatBetter leverages automatic model selection to route every query to the most capable AI—eliminating guesswork about which model to use. Users can directly compare responses from OpenAI, Anthropic, Google, Meta, DeepSeek, Perplexity, Mistral, xAI, and Cohere models side by side, or merge answers for comprehensive insights. The system is crafted for teams and individuals alike, enabling complex research, planning, and writing tasks to be accomplished efficiently in one place.

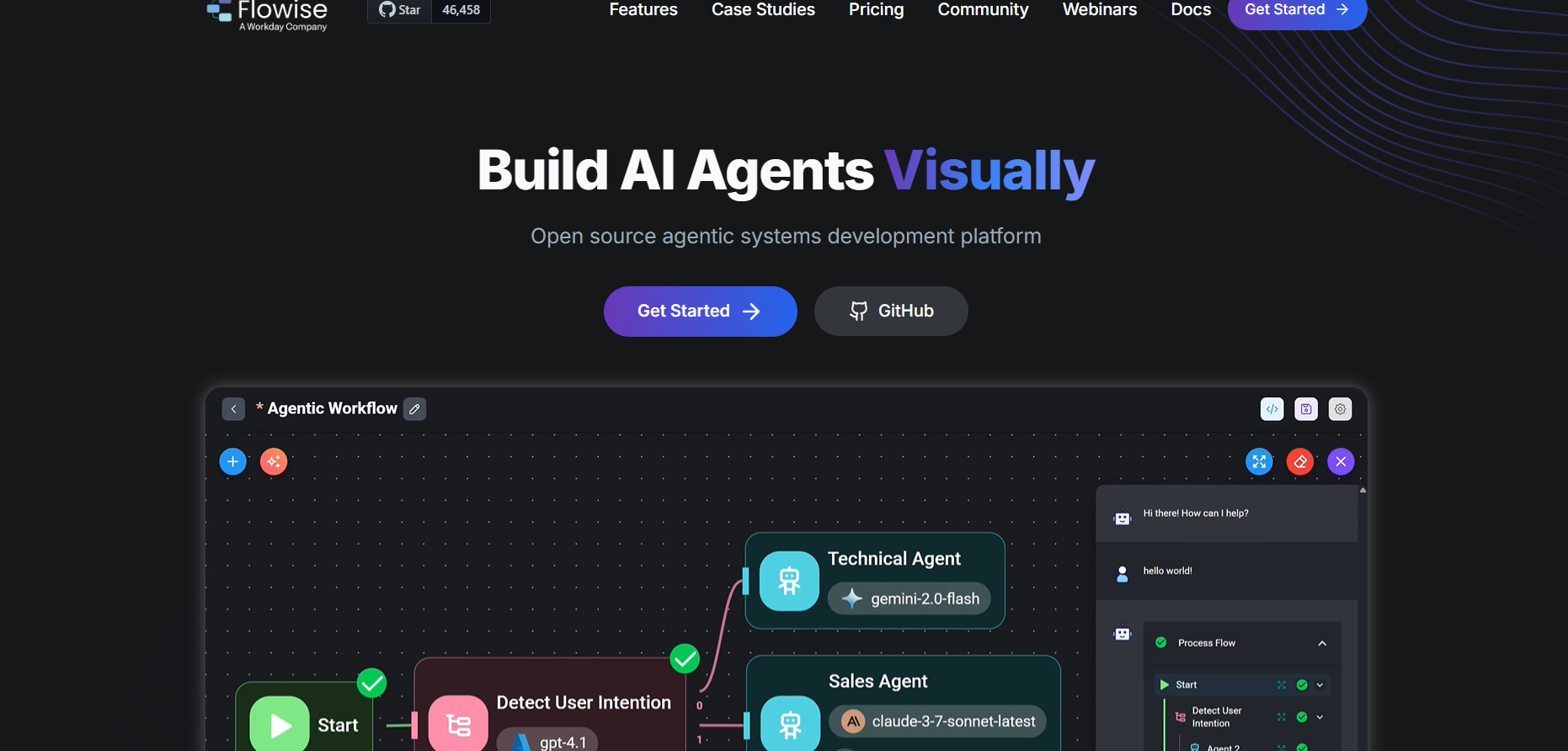

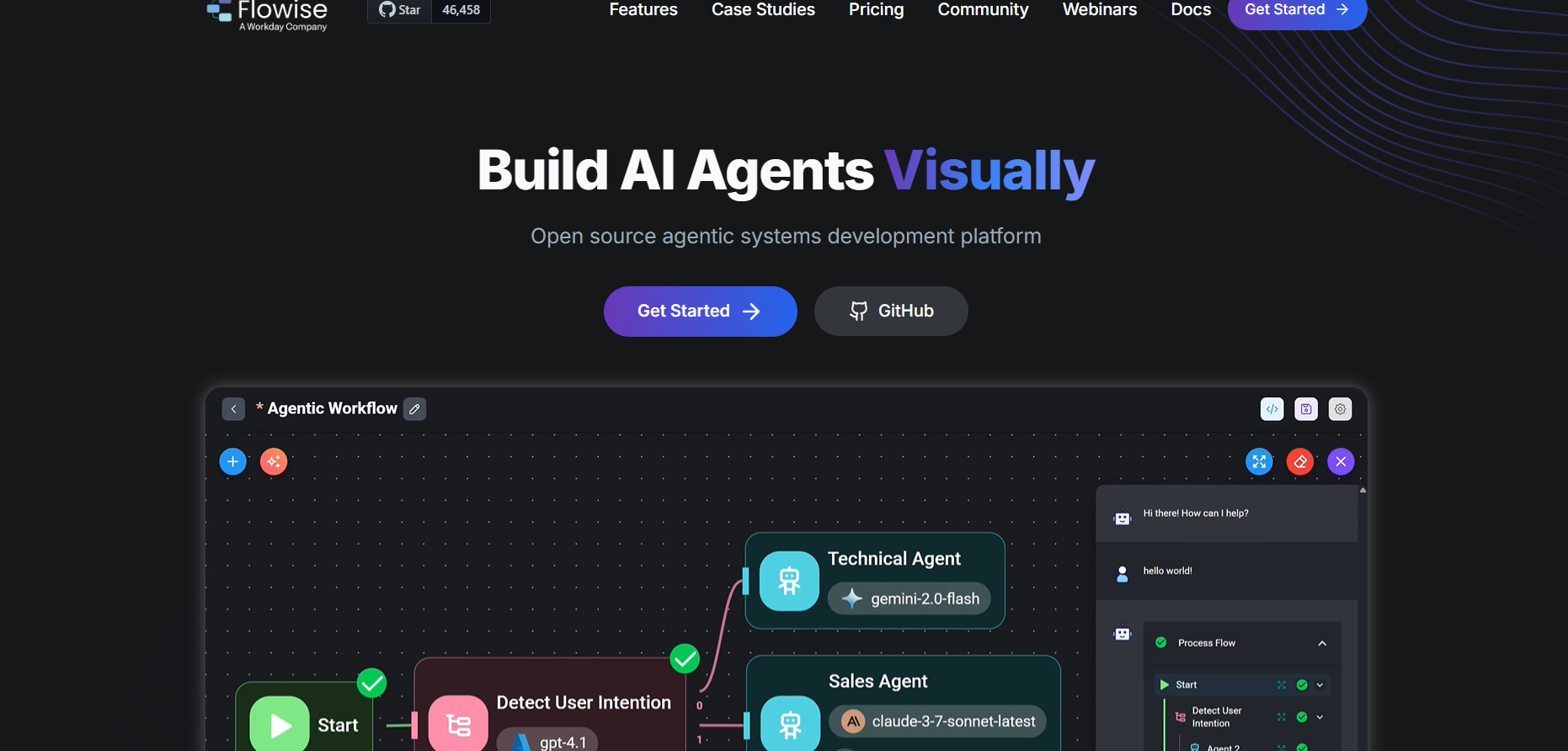

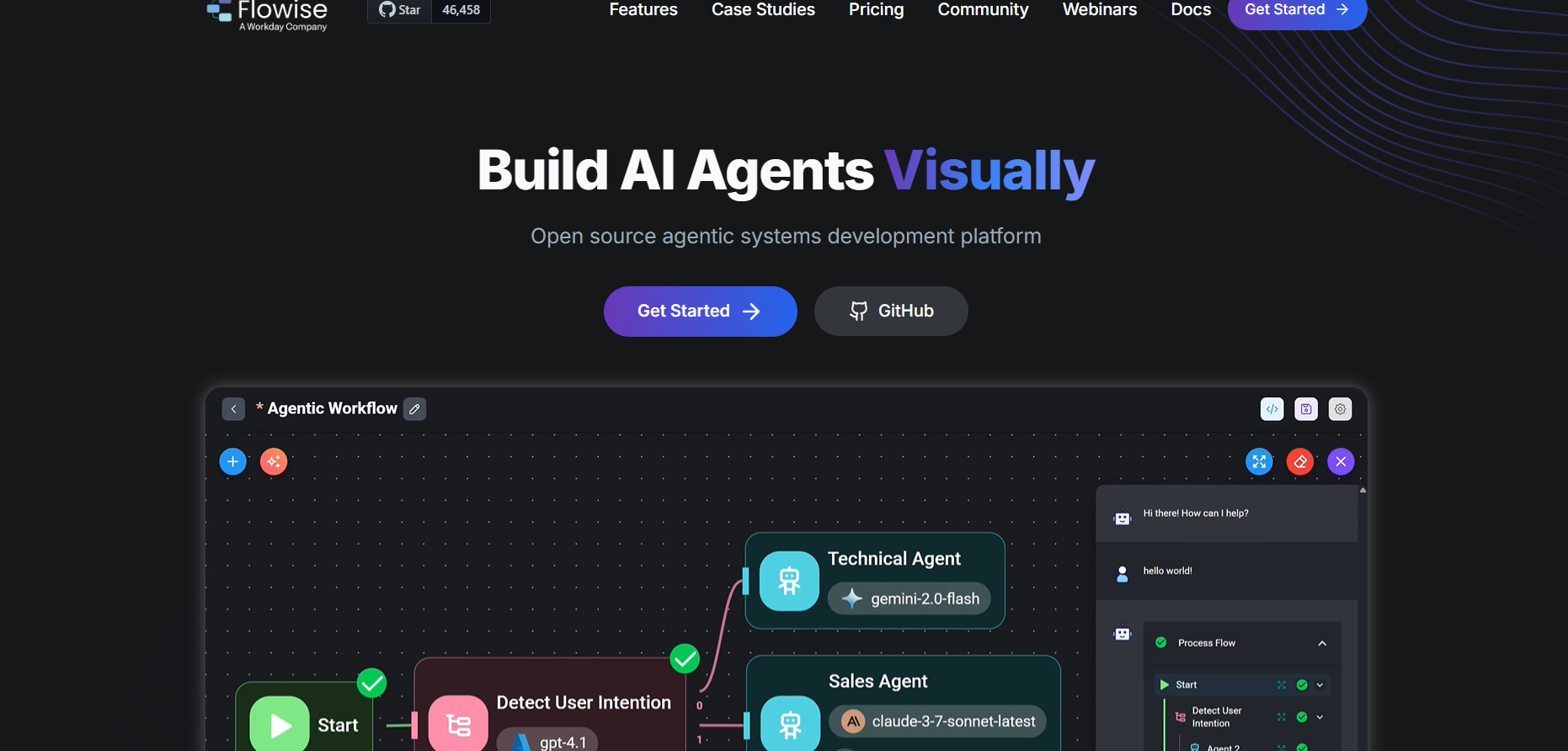

Flowise AI

Flowise AI is an open-source, visual tool that allows users to build, deploy, and manage AI workflows and chatbots powered by large language models without needing to code. It provides a drag-and-drop interface where users can visually connect LangChain components, APIs, data sources, and models to create complex AI systems easily. With Flowise AI, developers, analysts, and businesses can build chatbots, RAG pipelines, or automation systems through an intuitive UI rather than scripting everything manually. Its no-code design accelerates prototyping and deployment, enabling faster experimentation with LLM-powered workflows.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Awan LLM

Awan LLM is a cost-effective LLM inference API platform with unlimited tokens, designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription that lets users send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLMs without censorship or constraints. The platform runs on privately owned data centers and GPUs, which allows it to offer efficient and scalable AI services. Awan LLM supports numerous use cases, including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

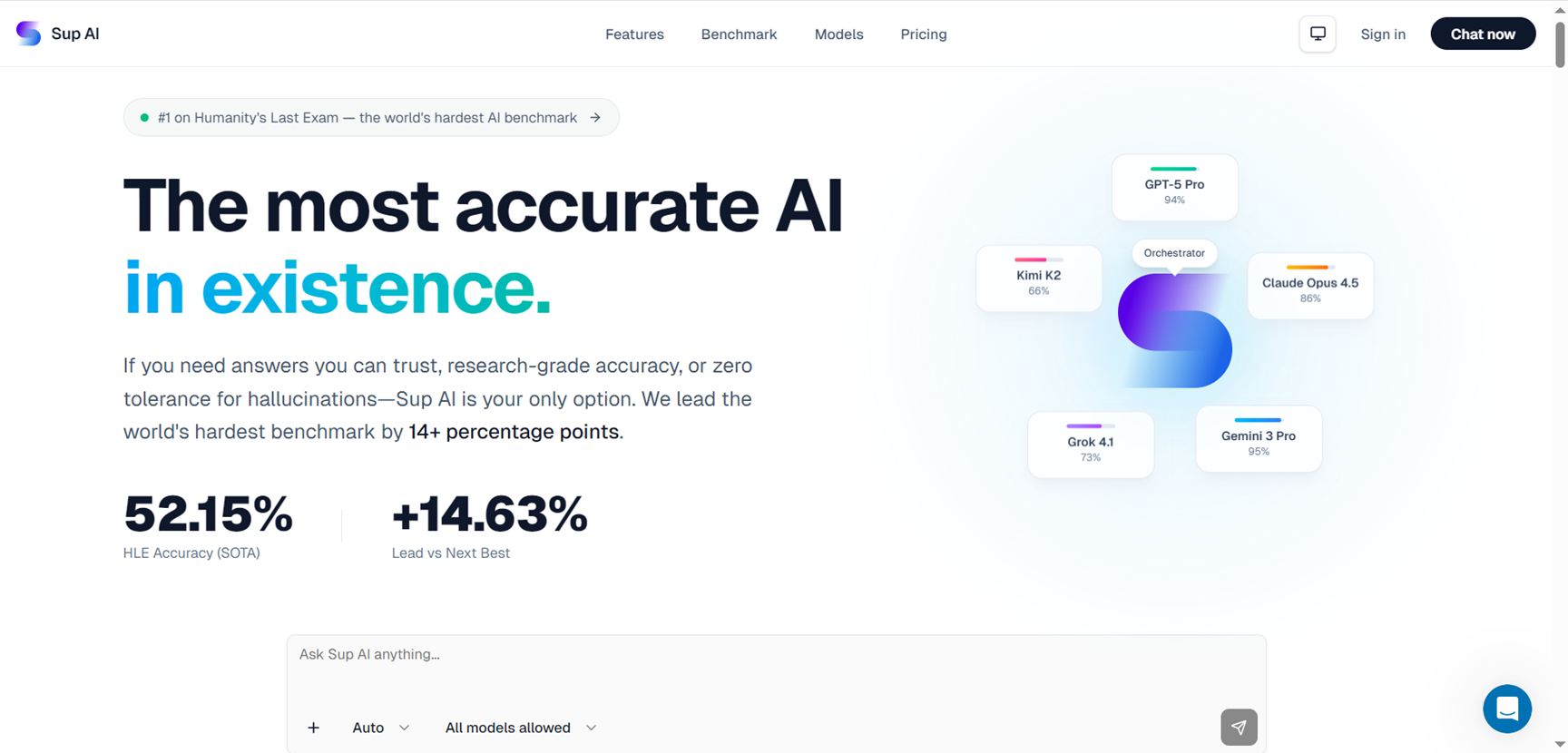

Sup AI

Sup AI is an AI platform that aims to reduce hallucinations through real-time K-min confidence filtering and multi-model orchestration, drawing research-grade responses from frontier models such as GPT-5 Pro, Claude Opus 4.5, and Gemini 3 Pro. It reports 52.15% accuracy on Humanity's Last Exam, which it describes as 14+ points ahead, and uses logprob scoring to terminate low-confidence outputs, multimodal RAG for memory, and cited sources. Features include intelligent model selection, image generation and editing, secure collaboration, and an OpenAI-compatible API for developers who need trustworthy AI.

Geekflare AI

Geekflare AI is a powerful multi-AI chat platform that brings together leading models from OpenAI, Google, Anthropic, and more into one seamless, collaborative workspace for businesses and teams. It eliminates the hassle of switching between multiple AI tools by letting users connect their own API keys or use built-in subscriptions, chat side-by-side with different models for diverse perspectives, and revisit past conversations effortlessly. Perfect for boosting productivity, this platform supports team collaboration through shared chats, analytics on usage, and features tailored for tasks like content generation, coding assistance, data analysis, and brainstorming, all while scaling for enterprises with thousands of users.

polychat

polychat is a multi-LLM chat platform that lets you interact with models from OpenAI, Anthropic, Perplexity, Google, DeepSeek, Llama, and others in one interface, with free trials and no rate limits. Switch between models seamlessly to get the best response to each query, whether for coding, writing, or research, with plans starting at $5/month. It's designed for power users who want flexibility without juggling multiple apps or quickly hitting usage caps.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai