GPT-4.1 Mini suits chatbots, content suggestions, productivity tools, and other streamlined AI experiences. The compact model still delivers strong accuracy, fast responses, and a reliable understanding of nuanced prompts while using fewer resources.

- App Developers: Integrate AI-powered features into apps with minimal cost and maximum speed.

- Customer Support Teams: Deploy smart chatbots that understand and respond accurately to user queries.

- SaaS Platforms: Offer contextual AI features like autocomplete, summarization, and recommendations.

- Productivity Tool Makers: Build AI-assisted tools for notes, scheduling, content generation, and more.

- Startups on a Budget: Leverage powerful AI without burning through API credits.

- Educators & Learners: Use for AI tutoring, Q&A, or lightweight NLP tasks at scale.

🛠️ How to Use GPT-4.1 Mini?

- Step 1: Access the API: Go to the OpenAI API platform and select GPT-4.1 Mini from the model list.

- Step 2: Use the Correct Model ID: Look for identifiers like gpt-4.1-mini or its specific model string provided in OpenAI docs.

- Step 3: Define Your Prompt: Craft a user prompt or system instruction that guides the model's tone, style, or intent.

- Step 4: Call the API with Lightweight Parameters: GPT-4.1 Mini is tuned for low latency, so short prompts and modest output lengths keep responses fast and inexpensive.

- Step 5: Deploy & Iterate: Add GPT-4.1 Mini to your app, feature, or product, then tune its behavior with the temperature and top_p parameters.
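The steps above can be sketched in Python using only the standard library. This is a minimal illustration, not an official client: it assumes the OpenAI Chat Completions endpoint at `https://api.openai.com/v1/chat/completions`, an API key stored in the `OPENAI_API_KEY` environment variable, and the `gpt-4.1-mini` model ID mentioned in Step 2. The helper names `build_payload` and `send`, the default system prompt, and the default parameter values are hypothetical choices made for this example.

```python
import json
import os
import urllib.request

# Assumed endpoint and model ID; confirm both against the OpenAI docs.
API_URL = "https://api.openai.com/v1/chat/completions"
MODEL_ID = "gpt-4.1-mini"


def build_payload(user_prompt,
                  system_prompt="You are a concise, friendly assistant.",
                  temperature=0.3,
                  top_p=1.0):
    """Assemble the JSON body for one chat request (Steps 2, 3 and 5)."""
    return {
        "model": MODEL_ID,
        "messages": [
            # The system message guides tone, style, or intent.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        # Sampling controls named in Step 5.
        "temperature": temperature,
        "top_p": top_p,
    }


def send(payload, api_key):
    """POST the payload (Step 4) and return the text of the first choice."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


def main():
    payload = build_payload("Suggest a title for a note about weekly planning.")
    api_key = os.environ.get("OPENAI_API_KEY")
    if api_key:
        print(send(payload, api_key))
    else:
        # No key set: show the request body instead of calling the API.
        print(json.dumps(payload, indent=2))


if __name__ == "__main__":
    main()
```

Keeping the payload builder separate from the network call makes it easy to experiment with `temperature` and `top_p` and to inspect the request before spending any API credits.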

- Compact and Efficient: Smaller model size means faster responses and reduced cost per API call.

- Great Performance for Its Size: Maintains strong reasoning and language capabilities, even with fewer parameters.

- Lower Latency: Ideal for real-time applications like chat, autocomplete, or embedded AI.

- Affordable Access: Best-in-class performance at a fraction of the cost of larger models.

- Easily Deployable: Fits seamlessly into lightweight, serverless, or mobile-first applications.

- Part of the GPT-4.1 Family: Shares architectural advances with full GPT-4.1, including better instruction following.

- Fast & Responsive: Optimized for speed, perfect for instant user interactions.

- Budget-Friendly: Reduced compute usage makes it ideal for high-volume or entry-level usage.

- Surprisingly Smart: Performs better than you'd expect from a “Mini” model.

- Modular Integration: Plug it into apps, workflows, or systems without complexity.

- Wide Range of Use Cases: Chatbots, content tools, customer support, and more.

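- Long-Context Accuracy: GPT-4.1 Mini supports the same context window of up to 1 million tokens as GPT-4.1, but it may be less reliable than the full model on very long inputs.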

- May Struggle with Complex Tasks: Not ideal for advanced reasoning, coding, or long-form content.

- Occasional Gaps in Knowledge: Factual recall may be slightly less reliable than full-sized GPT-4.1.

- Less Configurable Tooling: Doesn’t include native support for advanced tools like code interpreter or browsing.

- Weaker Thread Continuity: May lose track of earlier details in long multi-turn conversations more readily than full GPT-4.1.

- Free: $0.00

- Plus: $20.00

- Pro: $200.00

- API: $0.40 / $1.60 (input / output, per 1M tokens)
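To see what the API price means in practice, here is a small cost estimate in Python. It assumes the two listed figures are US dollars per 1 million input tokens and per 1 million output tokens respectively. The workload numbers (10,000 requests of 500 input and 200 output tokens each) and the function name `estimate_cost` are hypothetical and chosen only for illustration.

```python
# Listed API price, assumed to be USD per 1 million tokens.
INPUT_USD_PER_MILLION = 0.40
OUTPUT_USD_PER_MILLION = 1.60


def estimate_cost(input_tokens, output_tokens):
    """Return the estimated cost in USD for the given token counts."""
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000


# Hypothetical workload: 10,000 requests, each with 500 input and 200 output tokens.
request_count = 10_000
total = estimate_cost(request_count * 500, request_count * 200)
print(f"Estimated cost: ${total:.2f}")  # prints: Estimated cost: $5.20
```

Output tokens cost four times as much as input tokens at these rates, so capping response length usually reduces the bill more than trimming the prompt does.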

Similar AI Tools

OpenAI o3

o3 is a reasoning model in OpenAI's o-series and the successor to o1. It is built to reason through a problem before answering, which suits it to complex work in coding, math, science, and analysis, alongside advanced language understanding, content generation, and multilingual communication. It is available in ChatGPT and through the OpenAI API, and it is used in various commercial and developer-facing tools.

OpenAI o1-pro

o1-pro is a version of OpenAI's o1 reasoning model that uses more compute to think longer and produce more consistent answers. It is aimed at hard problems where accuracy matters more than speed or price, and it handles natural language interactions, content creation, summarization, and more. Developers should weigh its output quality against its higher cost and latency.

OpenAI GPT 4o mini..

GPT-4o-mini-tts is OpenAI's lightweight, high-speed text-to-speech (TTS) model designed for fast, real-time voice synthesis using the GPT-4o-mini architecture. It's built to deliver natural, expressive, and low-latency speech output—ideal for developers building interactive applications that require instant voice responses, such as AI assistants, voice agents, or educational tools. Unlike larger TTS models, GPT-4o-mini-tts balances performance and efficiency, enabling responsive, engaging voice output even in environments with limited compute resources.

OpenAI GPT 4o mini..

GPT-4o-mini-transcribe is a lightweight, high-speed speech-to-text model from OpenAI, built on the GPT-4o-mini architecture. It converts spoken language into text with exceptional speed and surprising accuracy for its size—making it ideal for real-time transcription in resource-constrained environments. Whether you're building voice-enabled apps, smart assistants, meeting transcription tools, or captioning systems, GPT-4o-mini-transcribe offers responsive, multilingual transcription that balances cost, performance, and ease of integration.

OpenAI GPT 4o mini..

GPT-4o-mini Search Preview is a GPT-4o-mini variant from OpenAI trained to understand and run web search queries through the Chat Completions API. Designed for real-time, low-latency use, it brings retrieval-augmented answers to products that need fast, accurate information lookup. While compact, it applies contextual understanding to return more relevant search results with fewer resources. It suits startups and other teams that need search that is fast, efficient, and easy to integrate.

OpenAI Codex mini ..

codex-mini-latest is OpenAI’s lightweight, high-speed AI coding model, fine-tuned from the o4-mini architecture. Designed specifically for use with the Codex CLI, it brings ChatGPT-level reasoning directly to your terminal, enabling efficient code generation, debugging, and editing tasks. Despite its compact size, codex-mini-latest delivers impressive performance, making it ideal for developers seeking a fast, cost-effective coding assistant.

DeepSeek-R1-Distil..

DeepSeek R1 Distill refers to a family of smaller, dense models distilled from DeepSeek's flagship DeepSeek R1 reasoning model. Released in early 2025, these models range from 1.5B to 70B parameters (e.g., DeepSeek-R1-Distill-Qwen-32B) and retain strong reasoning and chain-of-thought abilities in a more efficient architecture. Published benchmarks show distilled variants outperforming models like OpenAI's o1-mini, while remaining open source under the MIT license.

DeepSeek-R1-Distil..

DeepSeek R1 Distill Qwen‑32B is a 32-billion-parameter dense reasoning model released in early 2025. Distilled from the flagship DeepSeek R1 using Qwen 2.5‑32B as a base, it delivers state-of-the-art performance among dense LLMs—outperforming OpenAI’s o1‑mini on benchmarks like AIME, MATH‑500, GPQA Diamond, LiveCodeBench, and CodeForces rating.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI o1, a series of AI models designed for complex reasoning and problem-solving in areas such as science, coding, and math. These models take a "think more before responding" approach: they try different strategies and recognize their own mistakes.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI 01, a new series of AI models. These models are specifically designed for complex reasoning and problem-solving in areas such as science, coding, and math, by employing a "think more before responding" approach, trying different strategies, and recognizing mistakes.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI 01, a new series of AI models. These models are specifically designed for complex reasoning and problem-solving in areas such as science, coding, and math, by employing a "think more before responding" approach, trying different strategies, and recognizing mistakes.

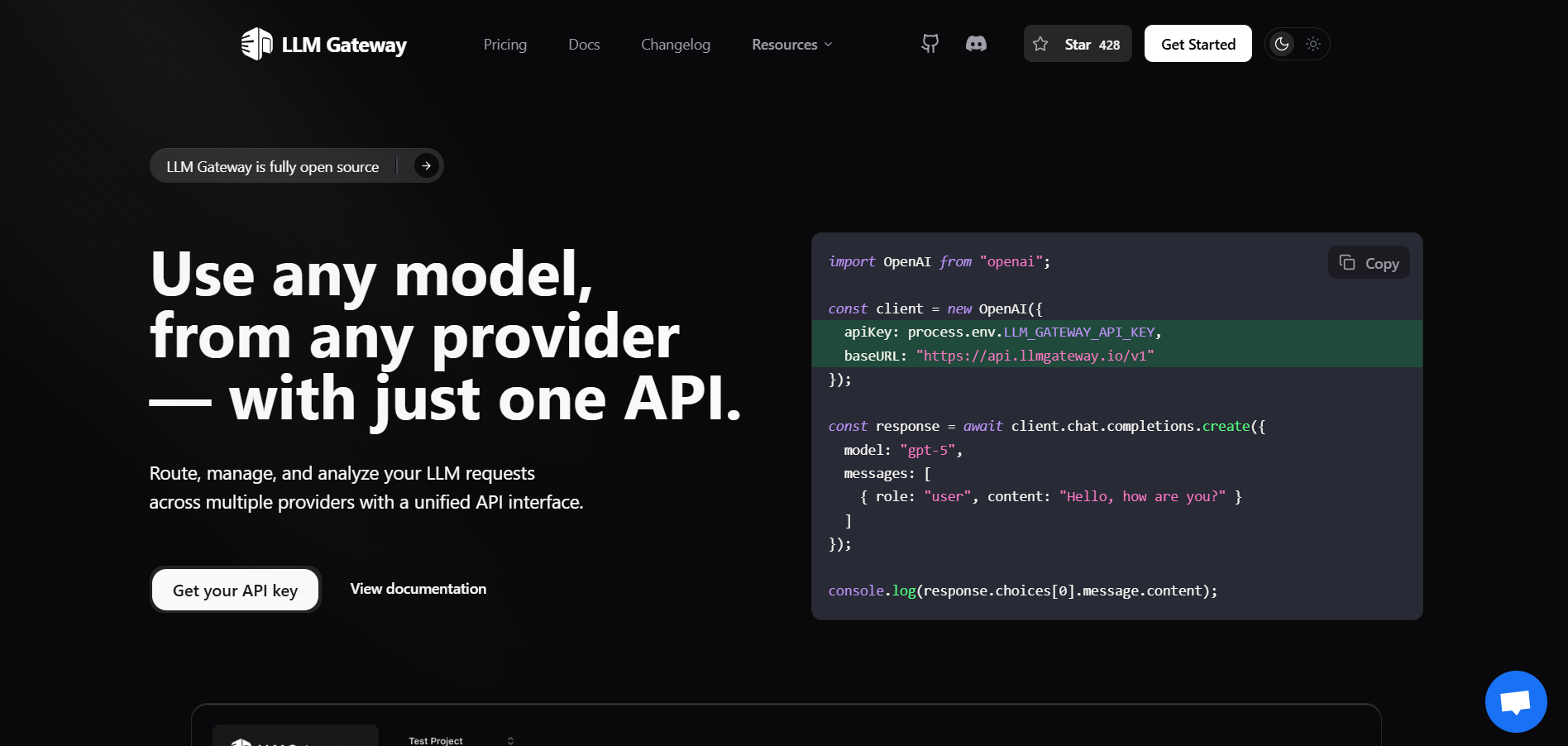

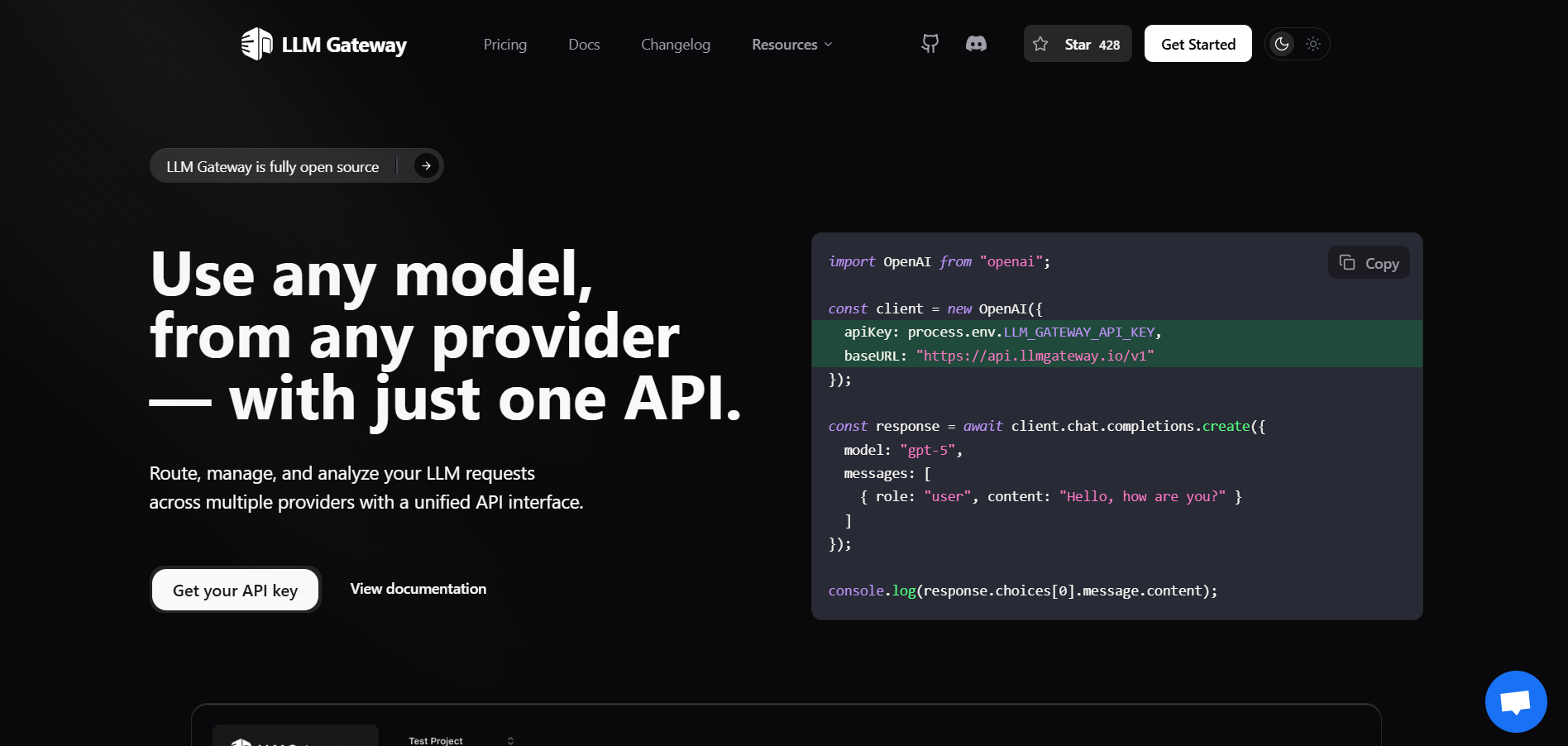

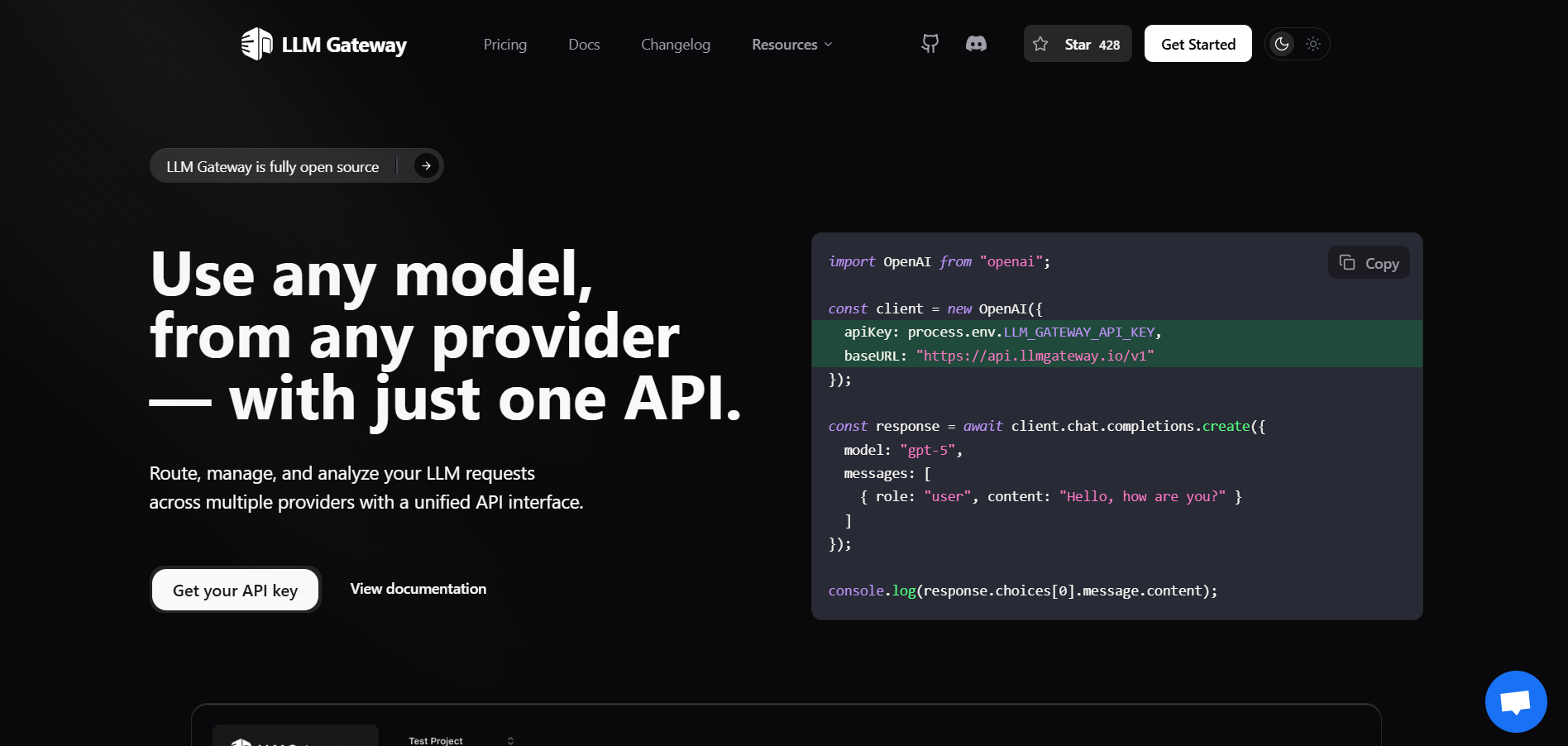

LLM Gateway

LLM Gateway is a unified API gateway designed to simplify working with large language models (LLMs) from multiple providers by offering a single, OpenAI-compatible endpoint. Whether using OpenAI, Anthropic, Google Vertex AI, or others, developers can route, monitor, and manage requests—all without altering existing code. Available as an open-source self-hosted option (MIT-licensed) or hosted service, it combines powerful features for analytics, cost optimization, and performance management—all under one roof.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

LM Studio

LM Studio is a local large language model (LLM) platform that lets users download and run AI language models such as Llama, MPT, and Gemma directly on their own computers. It supports Mac, Windows, and Linux. LM Studio focuses on privacy and control: models run locally rather than through cloud-based services, so data stays on the user's device. It offers an easy-to-install interface with step-by-step setup guidance, and once a model is downloaded it can be used without an internet connection, giving developers, researchers, and AI enthusiasts access to advanced AI capabilities.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai