Whether you're building voice-enabled apps, smart assistants, meeting transcription tools, or captioning systems, GPT-4o-mini-transcribe offers responsive, multilingual transcription that balances cost, performance, and ease of integration.

- Mobile App Developers: Build lightweight voice input features without draining memory or compute.

- Startups & MVP Builders: Add fast transcription to prototypes or beta apps with minimal API costs.

- Customer Support Teams: Capture and analyze voice conversations from calls or voice chats.

- Education & Accessibility Teams: Enable quick, real-time captioning in e-learning platforms.

- IoT & Edge Device Makers: Power voice-to-text on smart speakers, appliances, or AR/VR headsets.

- Internal Tools Teams: Auto-transcribe meetings or create searchable voice notes.

🛠️ How to Use GPT-4o-mini-transcribe?

- Step 1: Use the OpenAI /v1/audio/transcriptions Endpoint: Upload audio files (e.g., MP3, MP4, WAV) directly to the OpenAI API.

- Step 2: Choose the gpt-4o-mini-transcribe Model: Set the model parameter to gpt-4o-mini-transcribe in your API call for fast, lightweight speech-to-text processing.

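- Step 3: Provide Audio Input & Optional Settings: Add optional parameters such as language, response_format, or a custom prompt. For this model the API reference lists json (and plain text) output; srt and vtt are documented for whisper-1, so check the current reference before relying on subtitle formats.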

- Step 4: Receive Instant Transcription: The model outputs clean, readable text in your desired format—ideal for both real-time use and batch jobs.

- Step 5: Integrate with Your App or Workflow: Use it to populate chat logs, search indexes, or on-screen captions.
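The five steps above might look like this in Python. This is a minimal sketch, assuming the official `openai` package is installed and `OPENAI_API_KEY` is set in the environment. The helper names `build_transcription_request` and `transcribe`, and the `SUPPORTED_FORMATS` set, are illustrative assumptions rather than part of the API.

```python
from pathlib import Path
from typing import Any, Dict, Optional

MODEL = "gpt-4o-mini-transcribe"
# Assumption: the response formats this model accepts. Adjust this set
# if the current API reference lists others.
SUPPORTED_FORMATS = {"json", "text"}


def build_transcription_request(
    response_format: str = "text",
    language: Optional[str] = None,
    prompt: Optional[str] = None,
) -> Dict[str, Any]:
    """Assemble keyword arguments for the transcription call (Steps 2 and 3)."""
    if response_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported response_format: {response_format!r}")
    params: Dict[str, Any] = {"model": MODEL, "response_format": response_format}
    if language:
        params["language"] = language  # ISO-639-1 code such as "en"
    if prompt:
        params["prompt"] = prompt  # hints for domain-specific vocabulary
    return params


def transcribe(audio_path: str, **options: Any) -> str:
    """Upload one audio file and return the transcript text (Steps 1 and 4)."""
    # Imported lazily so the helper above works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    params = build_transcription_request(**options)
    with Path(audio_path).open("rb") as audio_file:
        result = client.audio.transcriptions.create(file=audio_file, **params)
    # With response_format="text" the SDK returns a plain string;
    # with "json" it returns an object exposing a .text attribute.
    return result if isinstance(result, str) else result.text
```

A call such as `transcribe("meeting.mp3", language="en", prompt="Kubernetes, kubectl")` (the file name is hypothetical) would return the transcript as a string, which can then be written to chat logs, a search index, or on-screen captions as in Step 5.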

Key Features

- Compact Performance: Lightweight and fast, perfect for mobile or low-latency environments.

- Multilingual by Default: Works well with multiple languages, dialects, and accents.

- Fast Turnaround: Processes short audio clips in seconds with minimal lag.

- Custom Prompt Support: Guide the transcription with bias toward domain-specific terms.

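- Output Formats: Returns plain text or JSON. Subtitle formats (.srt, .vtt) are documented for whisper-1, so verify support in the current API reference before relying on them with this model.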

- Budget-Friendly: Lower per-token pricing than the larger transcription model keeps costs down for high-volume use cases.

Pros

- Blazing Fast: Ideal for low-latency, real-time transcription tasks.

- Mobile-Ready: Runs well in constrained environments or lightweight workflows.

- Multilingual: Supports transcription in a wide range of global languages.

- Efficient & Cost-Effective: Lower per-token pricing means lower API bills.

- Great for MVPs & Demos: Perfect for projects that need speech input fast.

Cons

- Slightly Lower Accuracy: Not as precise as larger models in noisy or technical audio.

- No Speaker Recognition: Doesn’t distinguish between different speakers.

- Limited Formatting Features: Lacks detailed punctuation or advanced structuring.

- Not Ideal for Long Audio: Best for short interactions or real-time voice snippets.

- No Summarization Built-In: You’ll need a separate model to summarize the transcription.
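One common way to work around the long-audio limitation is to split a recording into short, slightly overlapping windows and transcribe each one separately. The sketch below only plans the window boundaries. The function name `plan_chunks`, the 60-second window, and the 2-second overlap are illustrative assumptions, not values recommended by OpenAI, and the actual audio slicing would be done by a separate tool.

```python
from typing import List, Tuple


def plan_chunks(
    total_seconds: float,
    chunk_seconds: float = 60.0,
    overlap_seconds: float = 2.0,
) -> List[Tuple[float, float]]:
    """Return (start, end) windows in seconds that cover the whole recording.

    Consecutive windows overlap by `overlap_seconds` so that a word cut at
    one boundary is fully contained in the next window.
    """
    if chunk_seconds <= overlap_seconds:
        raise ValueError("chunk_seconds must be larger than overlap_seconds")
    total = float(total_seconds)
    if total <= 0:
        return []

    step = chunk_seconds - overlap_seconds
    chunks: List[Tuple[float, float]] = []
    start = 0.0
    while start < total:
        end = min(start + chunk_seconds, total)
        chunks.append((start, end))
        if end >= total:
            break  # the last window reached the end of the recording
        start += step
    return chunks


if __name__ == "__main__":
    for start, end in plan_chunks(150):
        print(f"{start:6.1f}s -> {end:6.1f}s")
```

Each window can then be cut from the source file and sent as its own request. Because of the overlap, the joined transcripts may repeat a few words at the boundaries, so some de-duplication is usually needed when stitching them together.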

Pricing (USD per 1M tokens)

- Text Input: $1.25
- Audio Input: $3
- Text Output: $5
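The rates listed above can be turned into a rough bill estimate. This is a sketch that assumes the figures are USD per 1M tokens and that you already know your token counts, for instance from the usage data returned with a response. The names `PRICE_PER_MILLION` and `estimate_cost` are hypothetical.

```python
# Assumed prices in USD per 1M tokens, copied from the figures listed above.
PRICE_PER_MILLION = {
    "text_input": 1.25,
    "audio_input": 3.00,
    "text_output": 5.00,
}


def estimate_cost(
    audio_input_tokens: int = 0,
    text_input_tokens: int = 0,
    text_output_tokens: int = 0,
) -> float:
    """Return the estimated cost in USD for the given token counts."""
    usage = {
        "audio_input": audio_input_tokens,
        "text_input": text_input_tokens,
        "text_output": text_output_tokens,
    }
    return sum(
        tokens / 1_000_000 * PRICE_PER_MILLION[kind]
        for kind, tokens in usage.items()
    )


if __name__ == "__main__":
    # 1M audio input tokens plus 200k text output tokens.
    cost = estimate_cost(audio_input_tokens=1_000_000, text_output_tokens=200_000)
    print(f"Estimated cost: ${cost:.2f}")
```

Keeping the rates in a single dictionary makes it easy to update the estimate when the published pricing changes.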

Similar AI Tools

OpenAI Operator

OpenAI Operator is a cloud-native orchestration layer designed to help businesses deploy and manage AI models at scale. It optimizes performance, cost, and efficiency by dynamically selecting and running AI models based on workload demands. Operator enables seamless AI model deployment, monitoring, and scaling for enterprises, ensuring that AI-powered applications run efficiently and cost-effectively.

OpenAI ChatGPT

ChatGPT is an advanced AI chatbot developed by OpenAI that can generate human-like text, answer questions, assist with creative writing, and engage in natural conversations. Powered by OpenAI’s GPT models, it is widely used for customer support, content creation, tutoring, and even casual chat. ChatGPT is available as a web app, API, and mobile app, making it accessible for personal and business use.

OpenAI Realtime AP..

OpenAI’s Real-Time API lets developers build apps that respond to user inputs within milliseconds. It is described as reducing the response latency of OpenAI’s GPT-4o model to as low as 100 milliseconds, enabling AI-powered experiences that feel more human, responsive, and conversational in real time. It suits live voice assistants, responsive chatbots, and interactive multiplayer tools powered by AI.

OpenAI Whisper

OpenAI Whisper is a powerful automatic speech recognition (ASR) system designed to transcribe and translate spoken language with high accuracy. It supports multiple languages and can handle a variety of audio formats, making it an essential tool for transcription services, accessibility solutions, and real-time voice applications. Whisper is trained on a vast dataset of multilingual audio, ensuring robustness even in noisy environments.

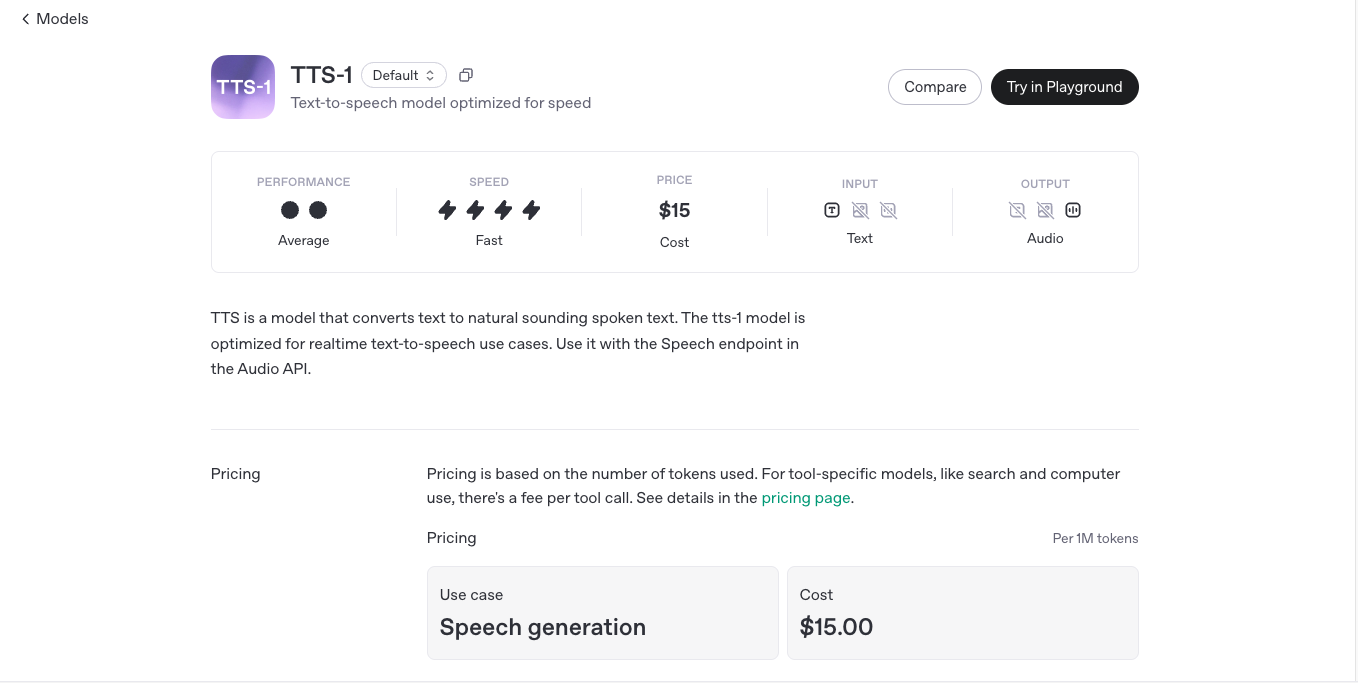

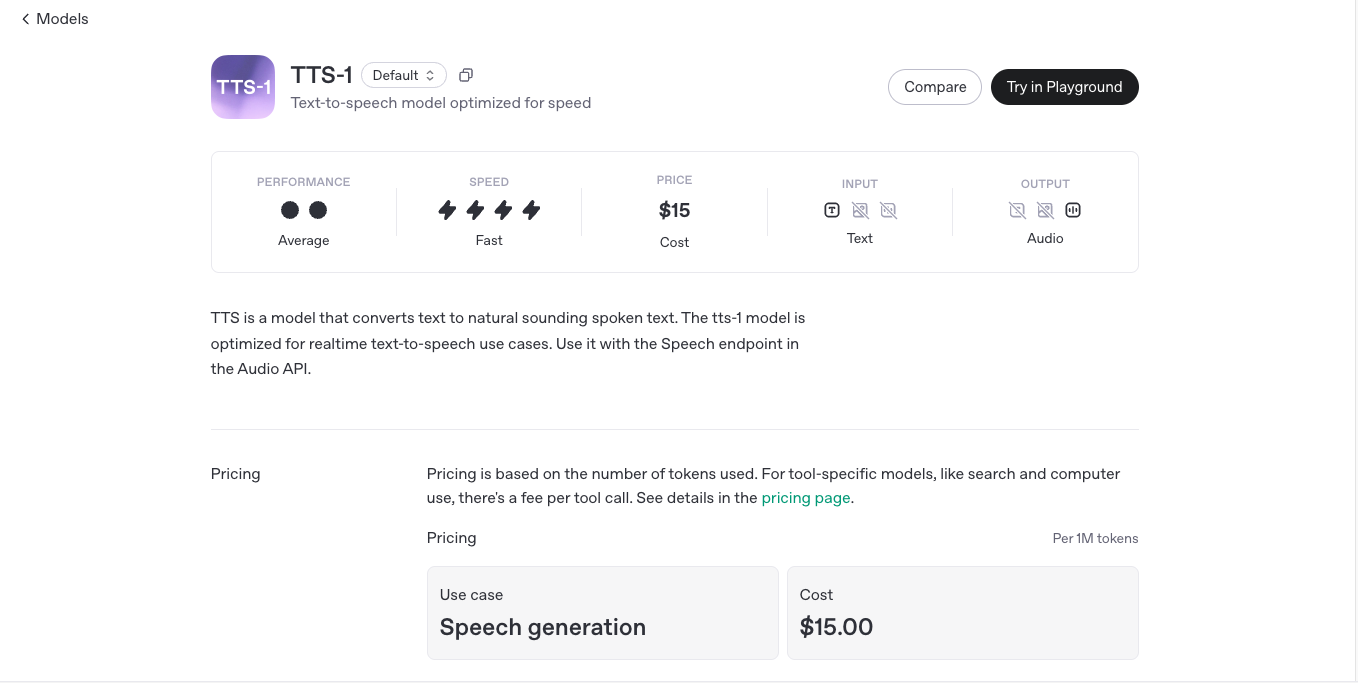

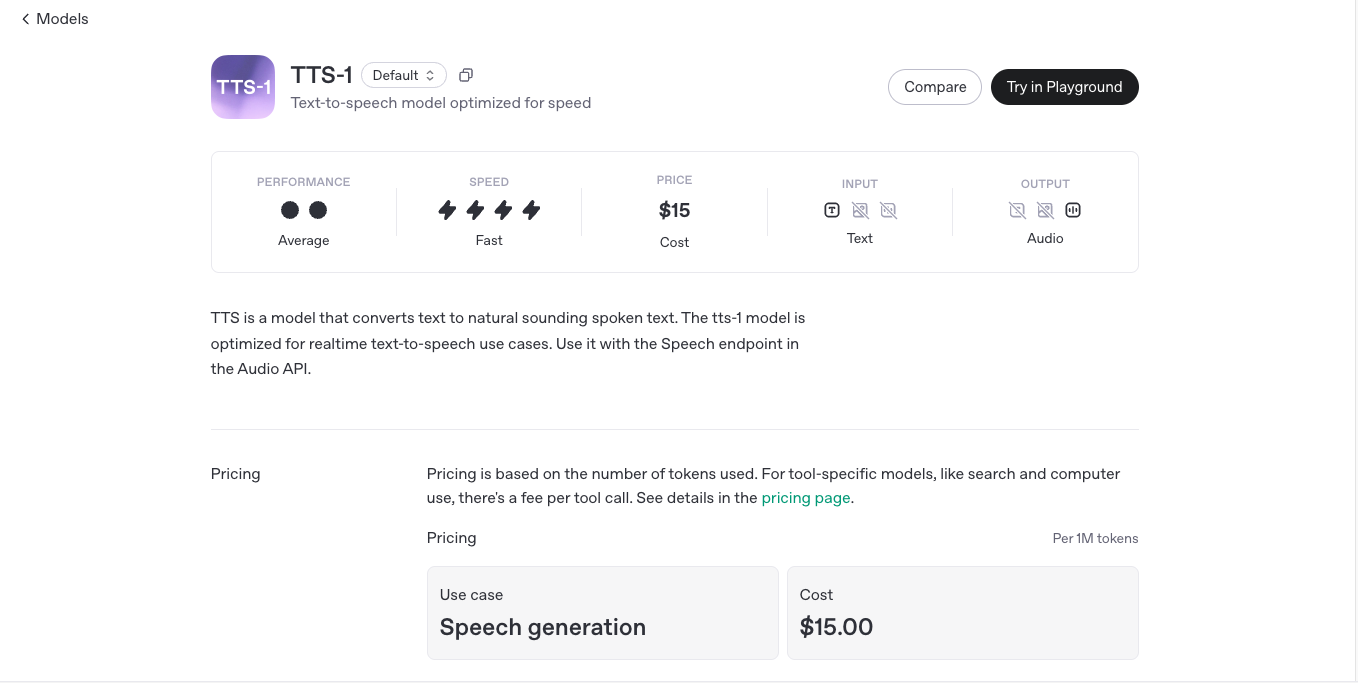

OpenAI TTS1

OpenAI's TTS-1 (Text-to-Speech) is a cutting-edge generative voice model that converts written text into natural-sounding speech with astonishing clarity, pacing, and emotional nuance. TTS-1 is designed to power real-time voice applications—like assistants, narrators, or conversational agents—with near-human vocal quality and minimal latency. Available through OpenAI’s API, this model makes it easy for developers to give their applications a voice that actually sounds human—not robotic. With multiple voices, languages, and low-latency streaming, TTS-1 redefines the synthetic voice experience.

OpenAI TTS1-HD

TTS-1-HD is OpenAI’s high-definition, low-latency streaming voice model designed to bring human-like speech to real-time applications. Building on the capabilities of the original TTS-1 model, TTS-1-HD enables developers to generate speech as the words are being produced—perfect for voice assistants, interactive bots, or live narration tools. It delivers smoother, faster, and more conversational speech experiences, making it an ideal choice for developers building next-gen voice-driven products.

OpenAI GPT Image 1

GPT-Image-1 is OpenAI's natively multimodal image generation model. It accepts text and image inputs and produces images, so developers and businesses can generate and edit visuals from natural-language prompts. Available via the API, it suits design tools, content workflows, and other image-driven applications.

OpenAI GPT 4o Sear..

GPT-4o Search Preview is a powerful experimental feature of OpenAI’s GPT-4o model, designed to act as a high-performance retrieval system. Rather than just generating answers from training data, it allows the model to search through large datasets, documents, or knowledge bases to surface relevant results with context-aware accuracy. Think of it as your AI assistant with built-in research superpowers—faster, smarter, and surprisingly precise. This preview gives developers a taste of what’s coming next: an intelligent search engine built directly into the GPT-4o ecosystem.

OpenAI GPT 4o mini..

GPT-4o-mini Search Preview is OpenAI’s lightweight semantic search feature powered by the GPT-4o-mini model. Designed for real-time applications and low-latency environments, it brings retrieval-augmented intelligence to any product or tool that needs blazing-fast, accurate information lookup. While compact in size, it offers the power of contextual understanding, enabling smarter, more relevant search results with fewer resources. It’s ideal for startups, embedded systems, or anyone who needs search that just works—fast, efficient, and tuned for integration.

I love Transcripti..

I ♡ Transcriptions is an AI-powered service that converts audio and video files into accurate text transcripts. Using OpenAI's Whisper transcription model, combined with their own optimizations, the platform provides a simple, accessible, and affordable solution for anyone needing to transcribe spoken content.

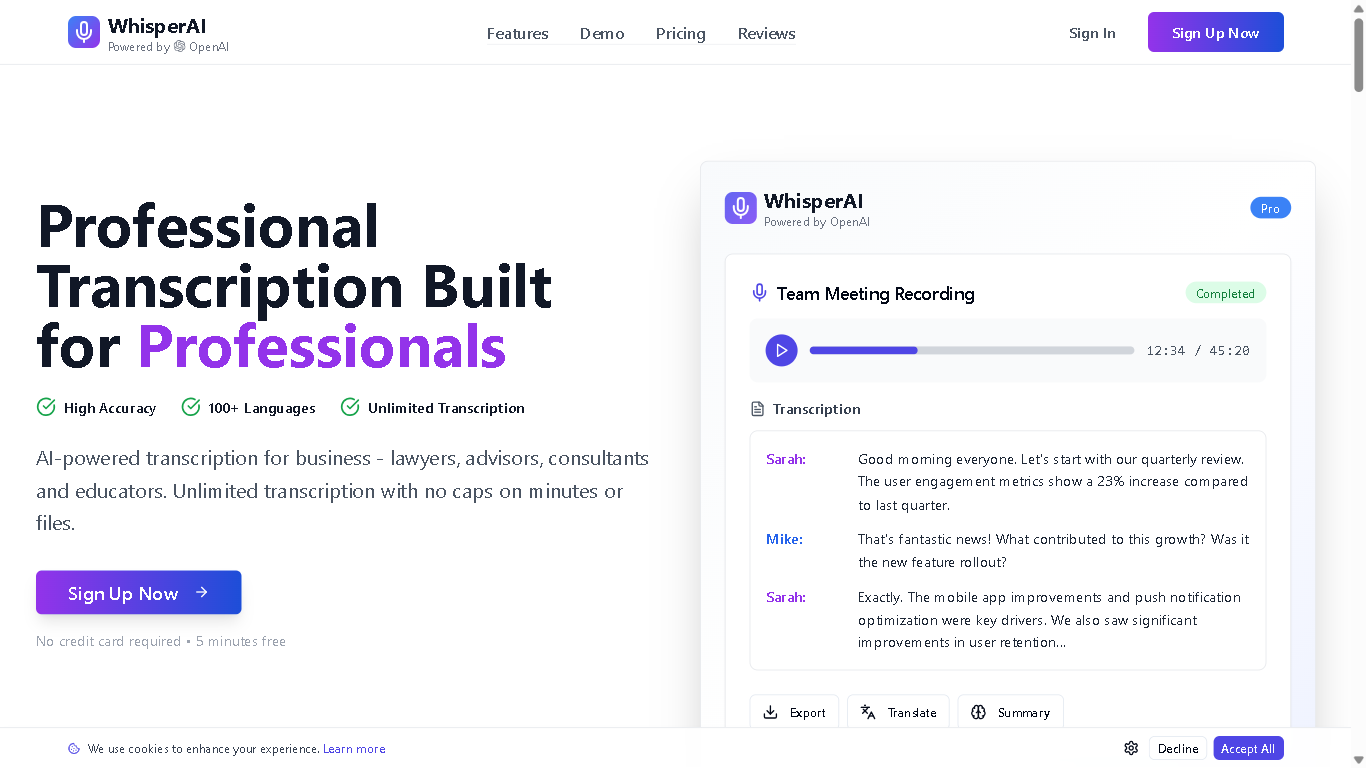

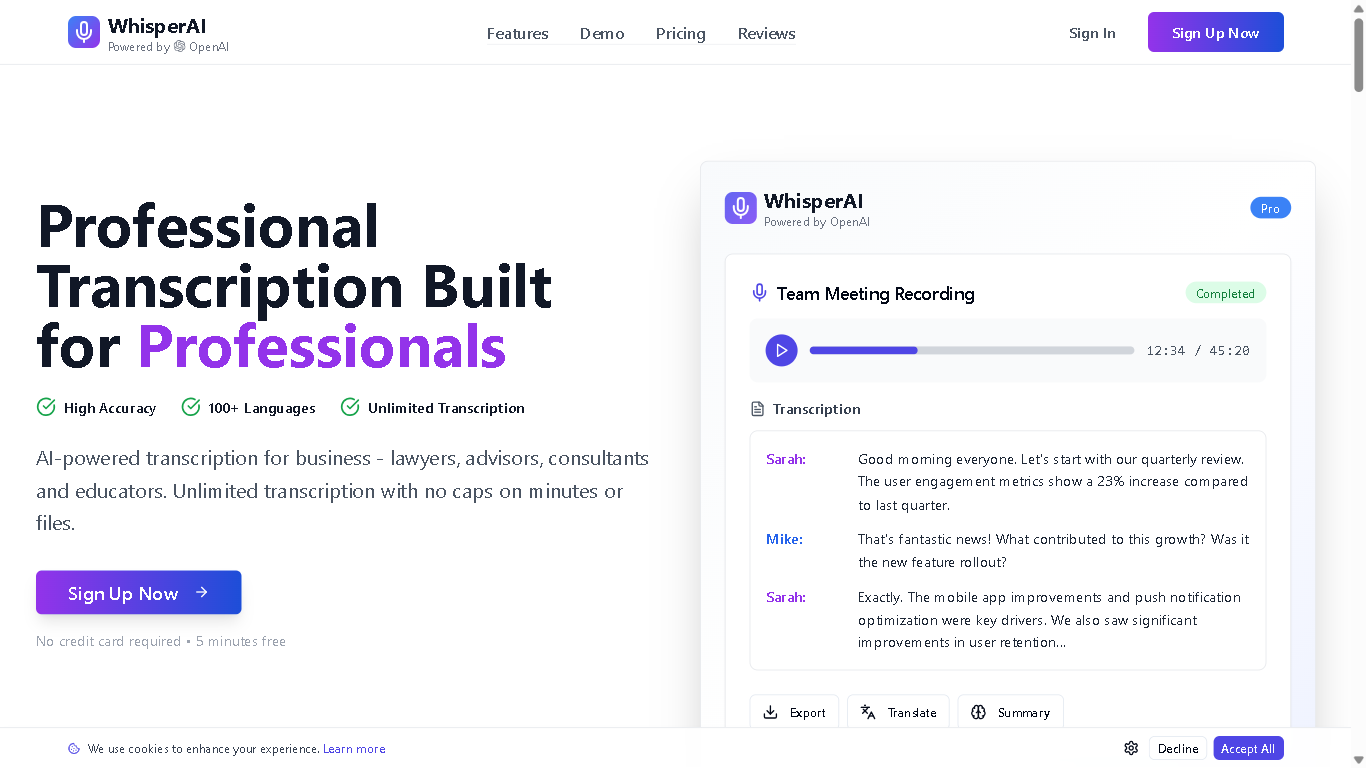

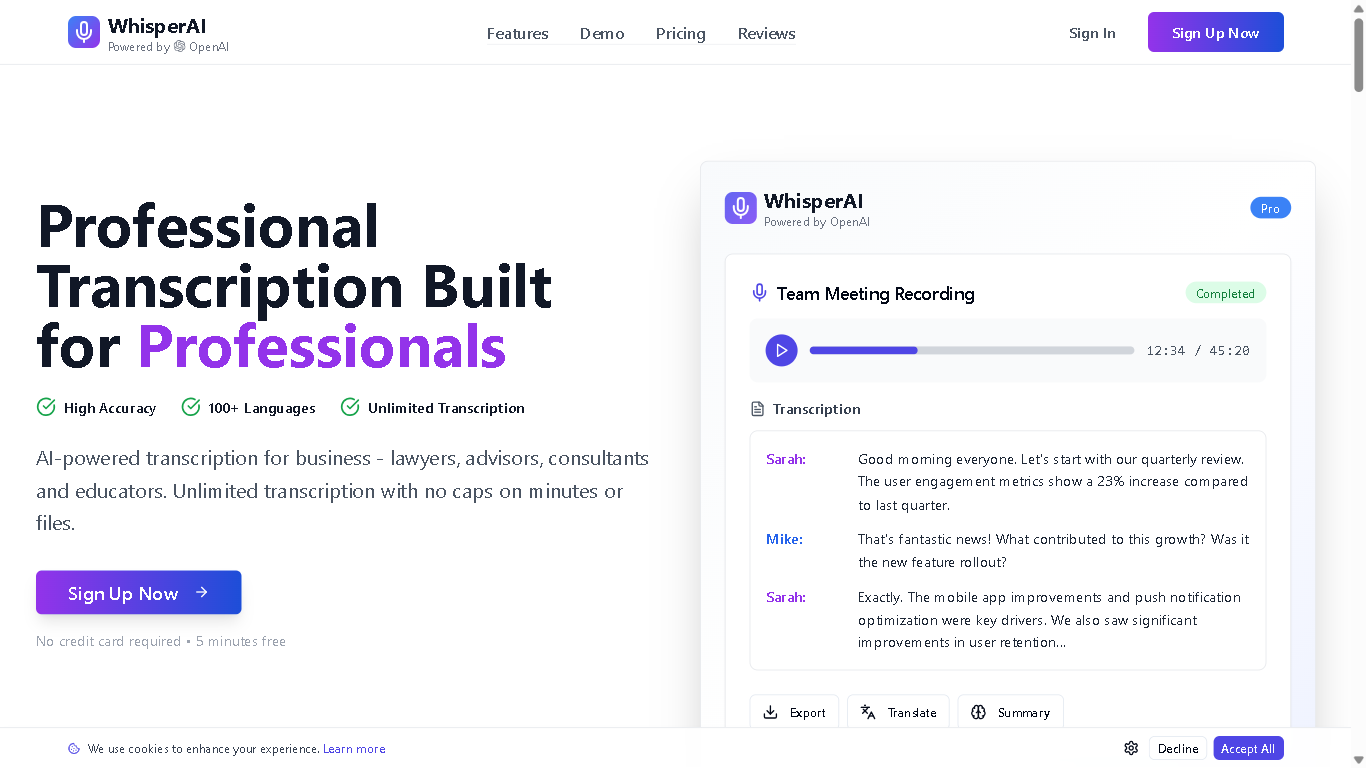

Whispr AI by OpenA..

Whisprai.ai is an AI-powered transcription and summarization tool designed to help businesses and individuals quickly and accurately transcribe audio and video files, and generate concise summaries of their content. It offers features for improving workflow efficiency and enhancing productivity through AI-driven automation.

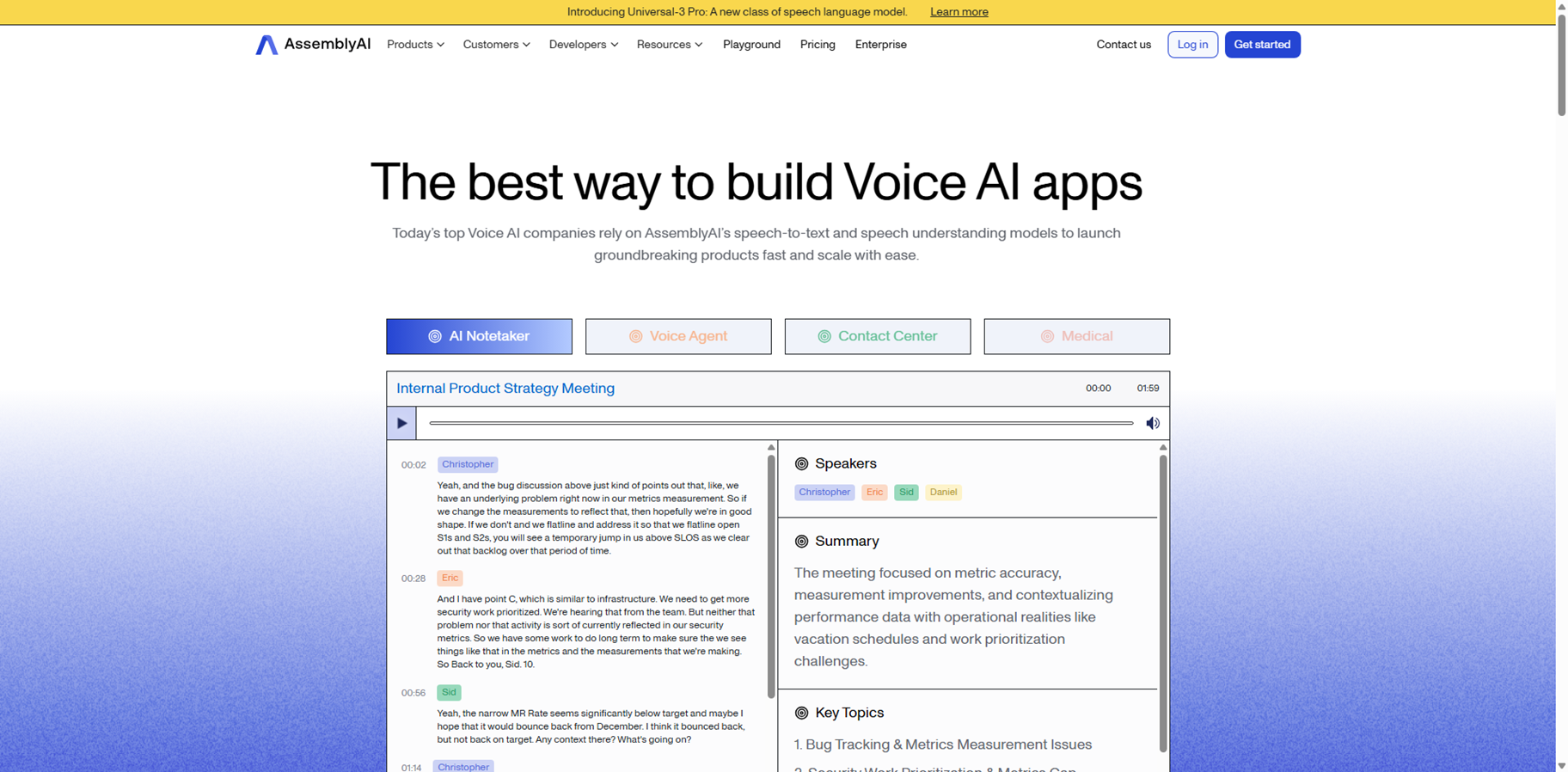

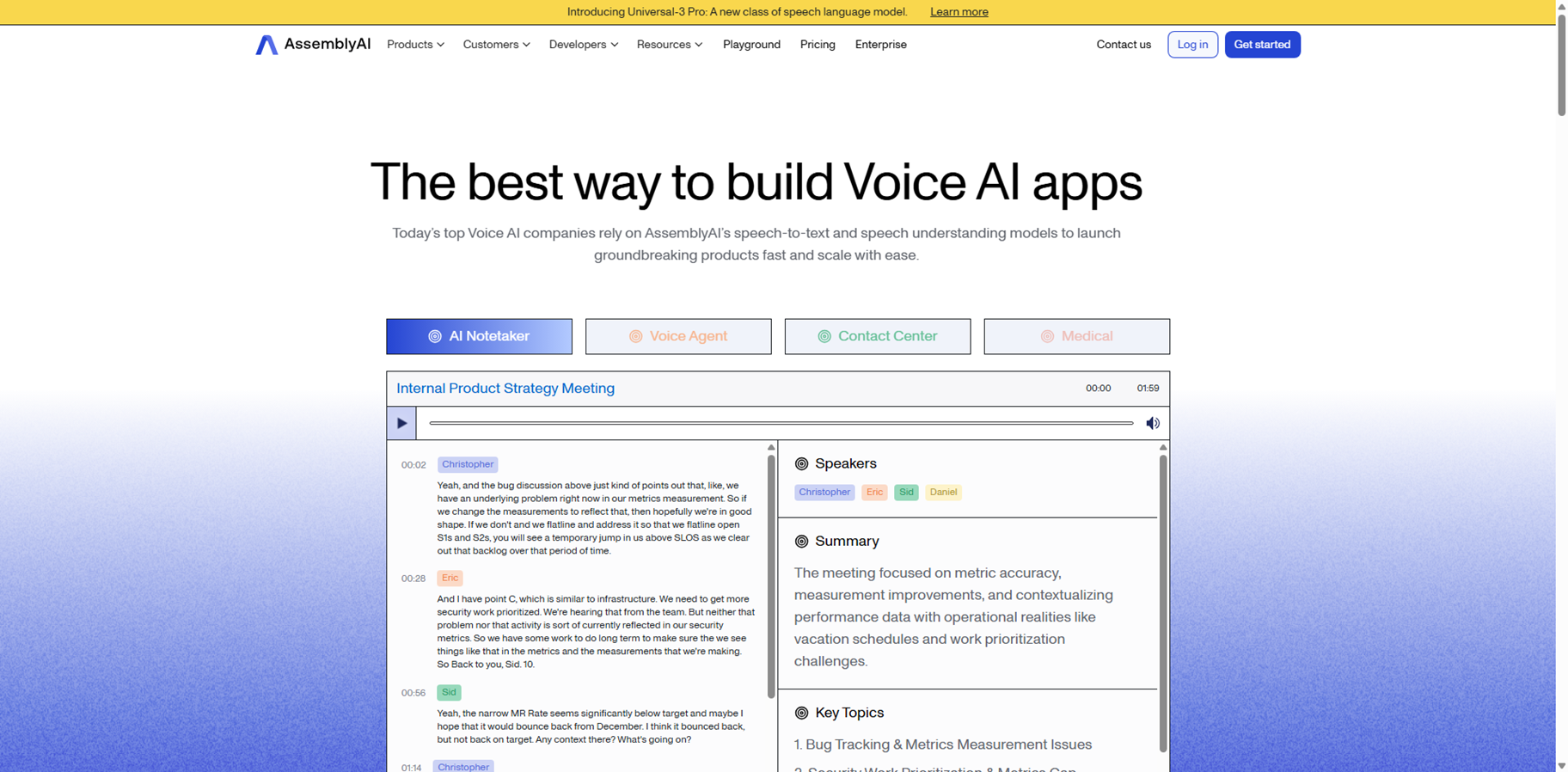

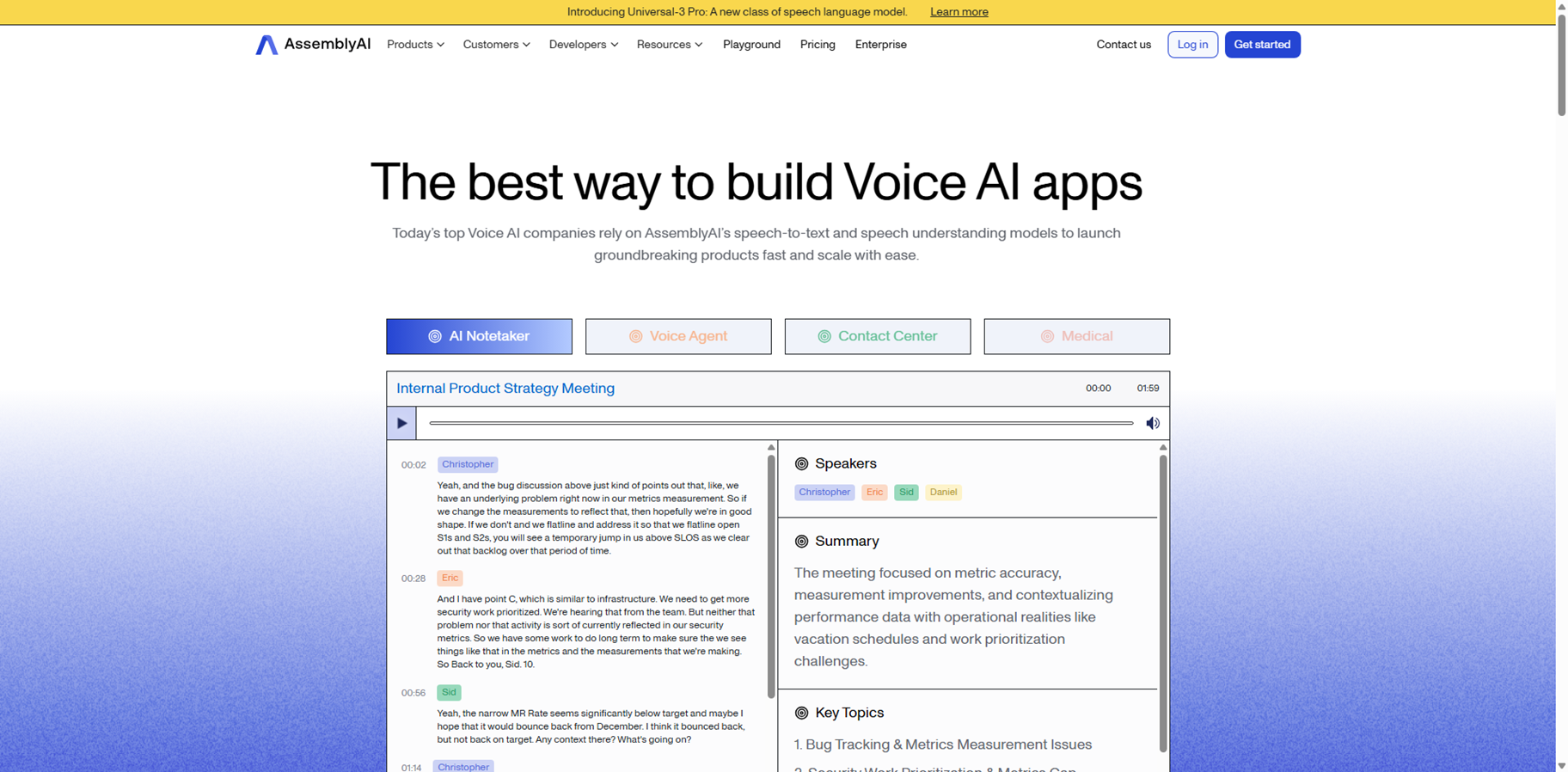

AssemblyAI

AssemblyAI is a developer-first Voice AI platform offering state-of-the-art AI models to transcribe and deeply understand speech so you can build powerful voice-driven apps. Its APIs go far beyond basic transcription with features like advanced speaker diarization, automatic formatting of text and alphanumerics, and multilingual support with automatic language detection to keep outputs clean and usable. With industry-leading accuracy, the lowest Word Error Rate, and up to 30% fewer hallucinations than other providers, it’s designed to be a rock-solid foundation for any product that depends on voice. Developers can start quickly, then seamlessly scale to millions of users, backed by infrastructure that already processes over 40 terabytes of audio and hundreds of millions of API calls each month.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai