- Biologists: Those who need to perform omics analysis but lack coding skills.

- Researchers in omics fields: Such as genomics, proteomics, and metabolomics.

- Scientists: Seeking to simplify complex bioinformatics tasks.

- Laboratories & Academia: Requiring natural language interaction for data analysis.

- Anyone working with omics data: Those who prefer a coding-free approach to analysis.

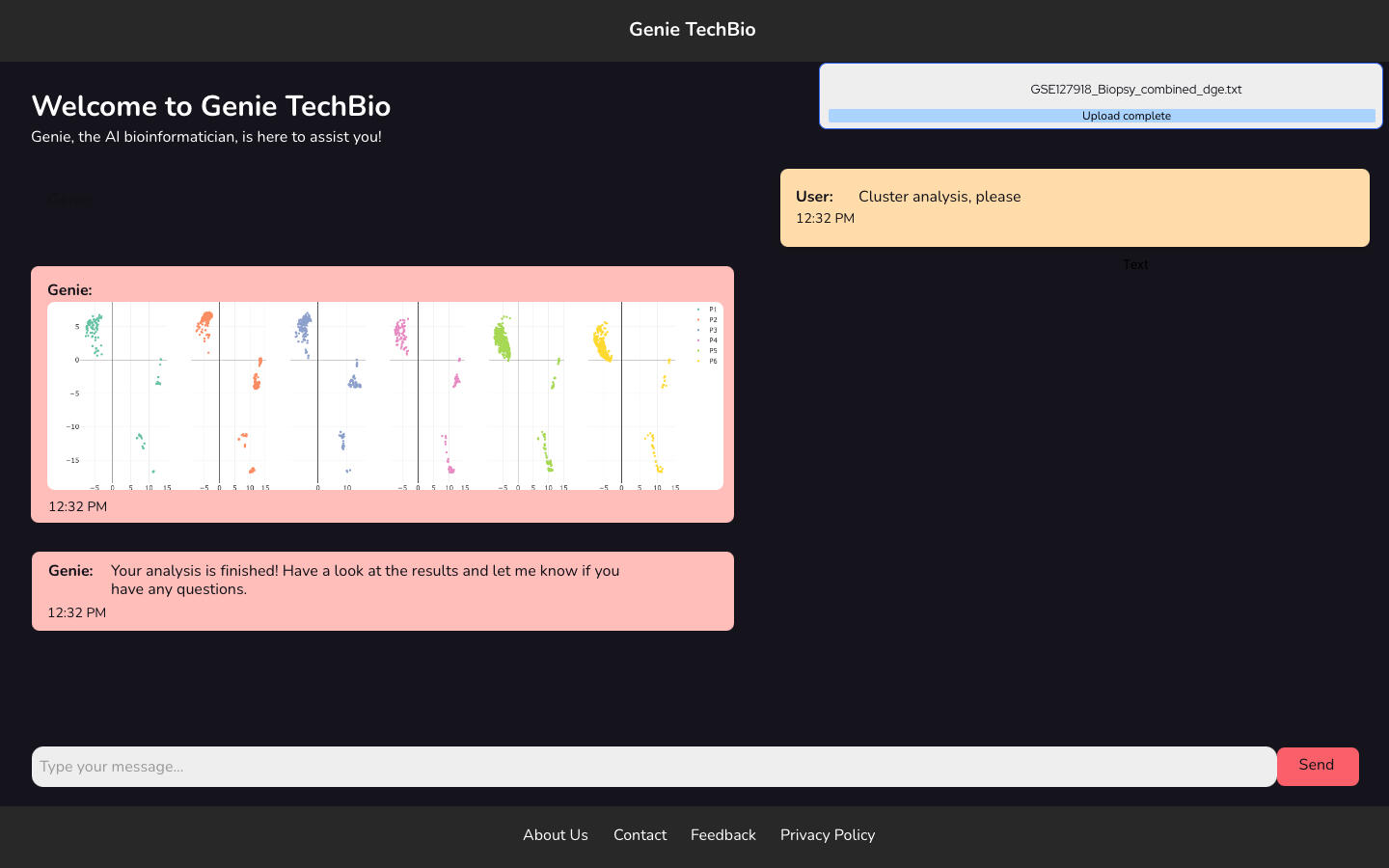

How to Use Genie TechBio

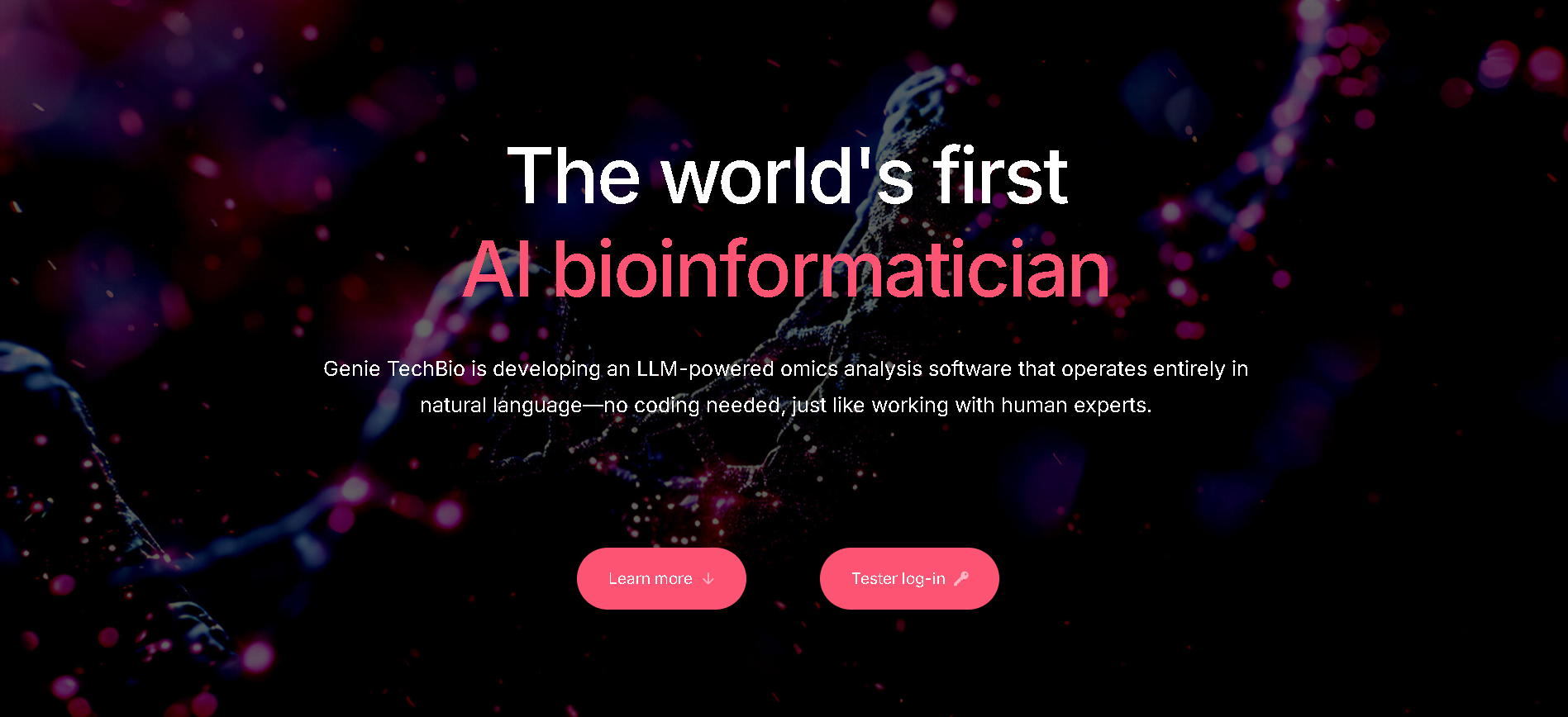

- Learn More: Users can click "Learn more" for additional information.

- Tester Log-in: A "Tester log-in" option appears to provide access to the software.

- Interact via Natural Language: (Inferred) Users interact with the LLM-powered software in natural language, much as they would converse with a human expert, to run omics analyses.

- Perform Omics Analysis: (Inferred) The AI would perform complex omics analysis based on user queries without requiring coding.

Key Features

- World's First AI Bioinformatician: Positions itself as a pioneering tool in the field.

- LLM-Powered Omics Analysis: Uses large language models (LLMs) to analyze complex biological data.

- Natural Language Interface: Operates entirely in natural language, eliminating the need for coding.

- Expert-like Interaction: Designed to allow users to work with it "just like working with human experts".

- Simplifies Complex Biology: Aims to make omics analysis accessible to those without extensive bioinformatics coding skills.

Pros

- Bills itself as "the world's first AI bioinformatician," signaling an innovative approach.

- Uses LLMs for omics analysis, simplifying the interpretation of complex biological data.

- Features a natural language interface, removing the need for coding.

- Aims to provide an experience "just like working with human experts".

- Offers a "Tester log-in," suggesting a way to try the product.

Cons

- The accuracy and reliability of AI-driven bioinformatics analysis need to be thoroughly validated.

- Appears to be in development (implied by the "Tester log-in") and not yet broadly available.

Pricing: Paid (custom)

Similar AI Tools

LangChain AI

LangChain AI Local Deep Researcher is an autonomous, fully local web research assistant designed to conduct in-depth research on user-provided topics. It leverages local Large Language Models (LLMs) hosted by Ollama or LM Studio to iteratively generate search queries, summarize findings from web sources, and refine its understanding by identifying and addressing knowledge gaps. The final output is a comprehensive markdown report with citations to all sources.

Meta Llama 3.3

Llama 3.3 is Meta’s instruction-tuned, text-only large language model released on December 6, 2024, available in a 70B-parameter size. It matches the performance of much larger models using significantly fewer parameters, is multilingual across eight key languages, and supports a massive 128,000-token context window—ideal for handling long-form documents, codebases, and detailed reasoning tasks.

Mistral Saba

Mistral Saba is a 24 billion‑parameter regional language model launched by Mistral AI on February 17, 2025. Designed for native fluency in Arabic and South Asian languages (like Tamil, Malayalam, and Urdu), it delivers culturally-aware responses on single‑GPU systems—faster and more precise than much larger general models.

Groq APP Gen

Groq AppGen is an innovative, web-based tool that uses AI to generate and modify web applications in real-time. Powered by Groq's LLM API and the Llama 3.3 70B model, it allows users to create full-stack applications and components using simple, natural language queries. The platform's primary purpose is to dramatically accelerate the development process by generating code in milliseconds, providing an open-source solution for both developers and "no-code" users.

LM Arena

LMArena is a platform where users help evaluate AI through collective feedback. Users chat with various large language models (LLMs) and vote on which responses are better, helping to shape and improve AI capabilities. The platform fosters a global community and publishes a leaderboard that ranks models based on these votes.

Google Deepmind Ge..

Genie 3 is DeepMind’s cutting-edge world model designed to advance AI’s ability to understand, simulate, and reason about complex real-world environments. Building on years of research in reinforcement learning and model-based AI, Genie 3 integrates sophisticated prediction, imagination, and planning capabilities to generate highly accurate and dynamic representations of the world. This enables smarter decision-making, improved transfer learning, and powerful generalization across diverse tasks, marking a new frontier in AI’s capacity to model and interact with its surroundings.

inception

Inception Labs is an AI research company that develops Mercury, which it bills as the world's first commercial diffusion-based large language models. Unlike traditional autoregressive LLMs, which generate tokens one at a time, Mercury models use a diffusion architecture that produces text through parallel refinement passes. Inception reports inference speeds of over 1,000 tokens per second while maintaining quality competitive with frontier models. The platform offers Mercury for general-purpose tasks and Mercury Coder for development workflows, both supporting streaming, tool use, structured output, and 128K-token context windows. The models are designed as drop-in replacements for traditional LLMs through OpenAI-compatible APIs and are available through major cloud providers, including Amazon Bedrock and Azure AI Foundry, as well as various AI platforms for enterprise deployment.

Abacus.AI

ChatLLM Teams by Abacus.AI is an all‑in‑one AI assistant that unifies access to top LLMs, image and video generators, and agentic tools in a single workspace. It includes DeepAgent for complex, multi‑step tasks, code execution with an editor, chat with documents and files, web search, text-to-speech (TTS), and slide and document generation. Users can build custom chatbots, set up AI workflows, generate images and videos from multiple models, and organize work into projects across desktop and mobile apps. The platform offers OpenAI‑style usability but adds operator features for running tasks on a computer, plus DeepAgent Desktop and AppLLM for building and hosting small apps.

Soket AI

Soket AI is an Indian deep-tech startup building sovereign, multilingual foundational AI models and real-time voice/speech APIs designed for Indic languages and global scale. By focusing on language diversity, cultural context and ethical AI, Soket AI aims to develop models that recognise and respond across many languages, while delivering enterprise-grade capabilities for sectors such as defence, healthcare, education and governance.

ChatBetter

ChatBetter is an AI platform designed to unify access to all major large language models (LLMs) within a single chat interface. Built for productivity and accuracy, ChatBetter leverages automatic model selection to route every query to the most capable AI—eliminating guesswork about which model to use. Users can directly compare responses from OpenAI, Anthropic, Google, Meta, DeepSeek, Perplexity, Mistral, xAI, and Cohere models side by side, or merge answers for comprehensive insights. The system is crafted for teams and individuals alike, enabling complex research, planning, and writing tasks to be accomplished efficiently in one place.

Ask Any Model

AskAnyModel is a unified AI interface that allows users to interact with multiple leading AI models — such as GPT, Claude, Gemini, and Mistral — from a single platform. It eliminates the need for multiple subscriptions and interfaces by bringing top AI models into one streamlined environment. Users can compare responses, analyze outputs, and select the best AI model for specific tasks like content creation, coding, data analysis, or research. AskAnyModel empowers individuals and teams to harness AI diversity efficiently, offering advanced tools for prompt testing, model benchmarking, and workflow integration.

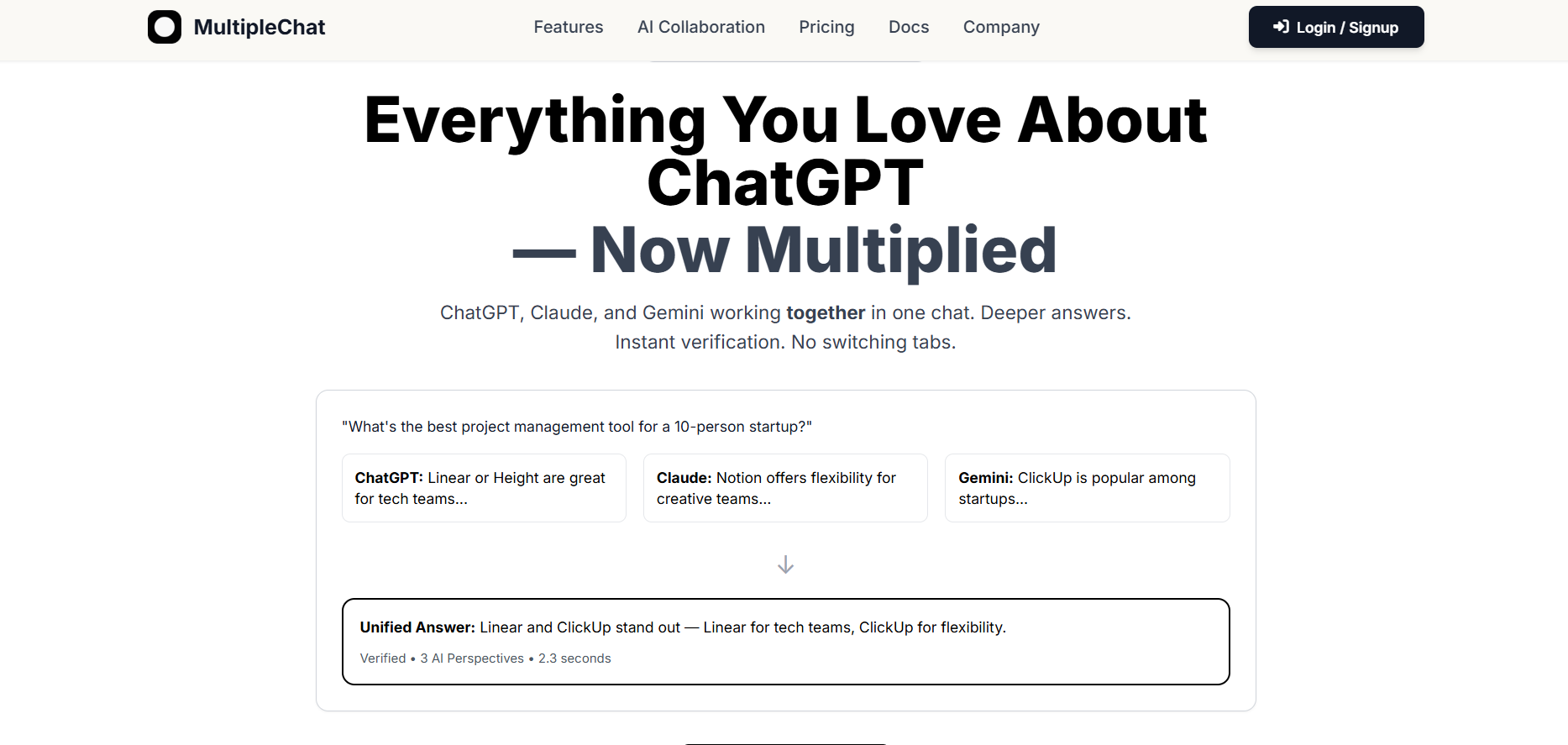

Multiple Chat

Multiple is a multi-model AI chat platform that allows users to interact with several leading AI models within a single interface. By combining responses from different AI systems, it enables deeper exploration, comparison, and verification of answers without switching tabs. The platform is designed for users who rely heavily on conversational AI and want improved accuracy, broader perspectives, and more confident outputs.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai