- Enterprise Developers & API Users: Build high-throughput applications like chatbots, moderation, automated support, and RAG pipelines.

- Data & Compliance Teams: Perform fast multimodal document analysis, classification, routing, and summarization at scale.

- AI & Agent Builders: Use thinking-enabled reasoning and tool invocation for AI assistant workflows.

- Localization & Voice Platforms: Deliver rapid text-to-speech or audio-interactive services.

- SaaS & High-Volume Clients: Leverage low-latency processing for critical, large-scale tasks via APIs like Vertex AI.

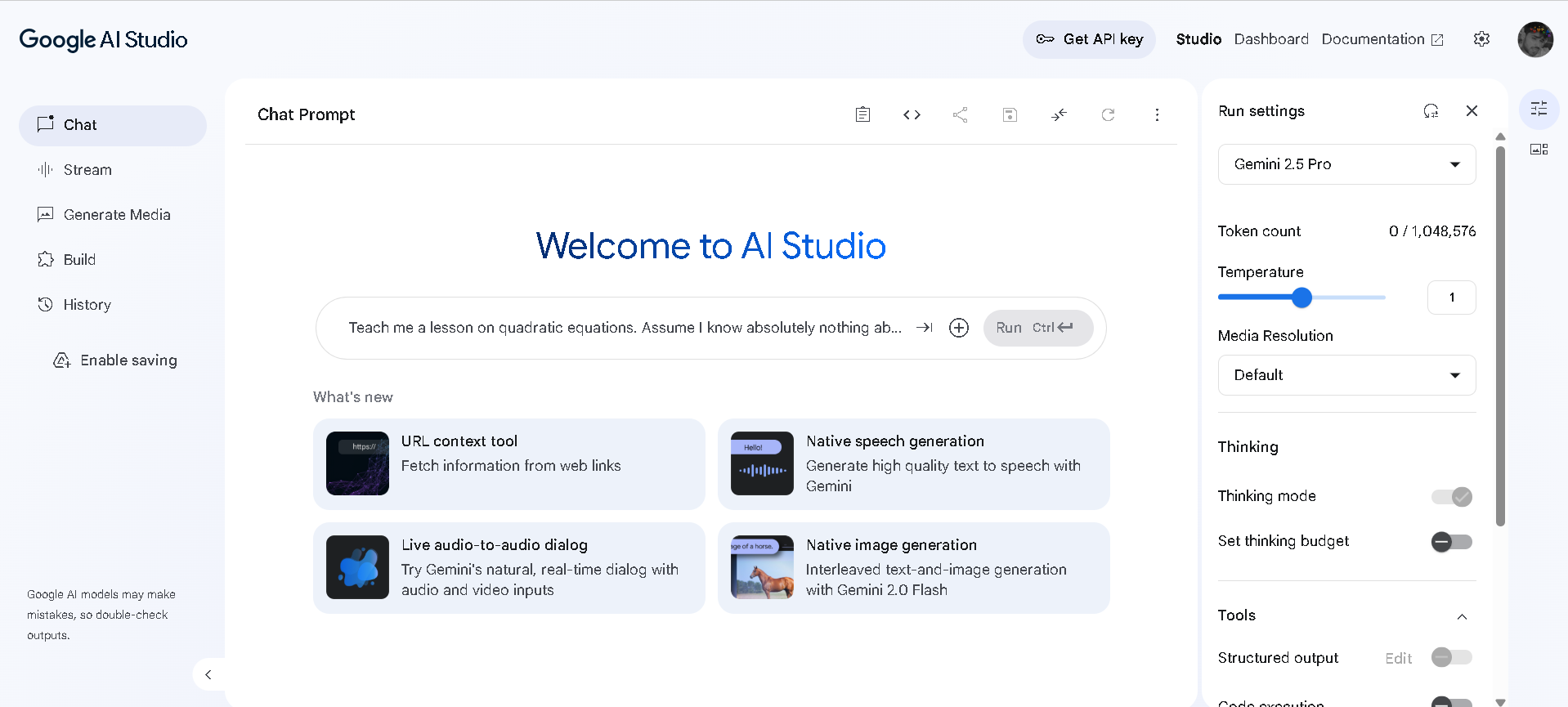

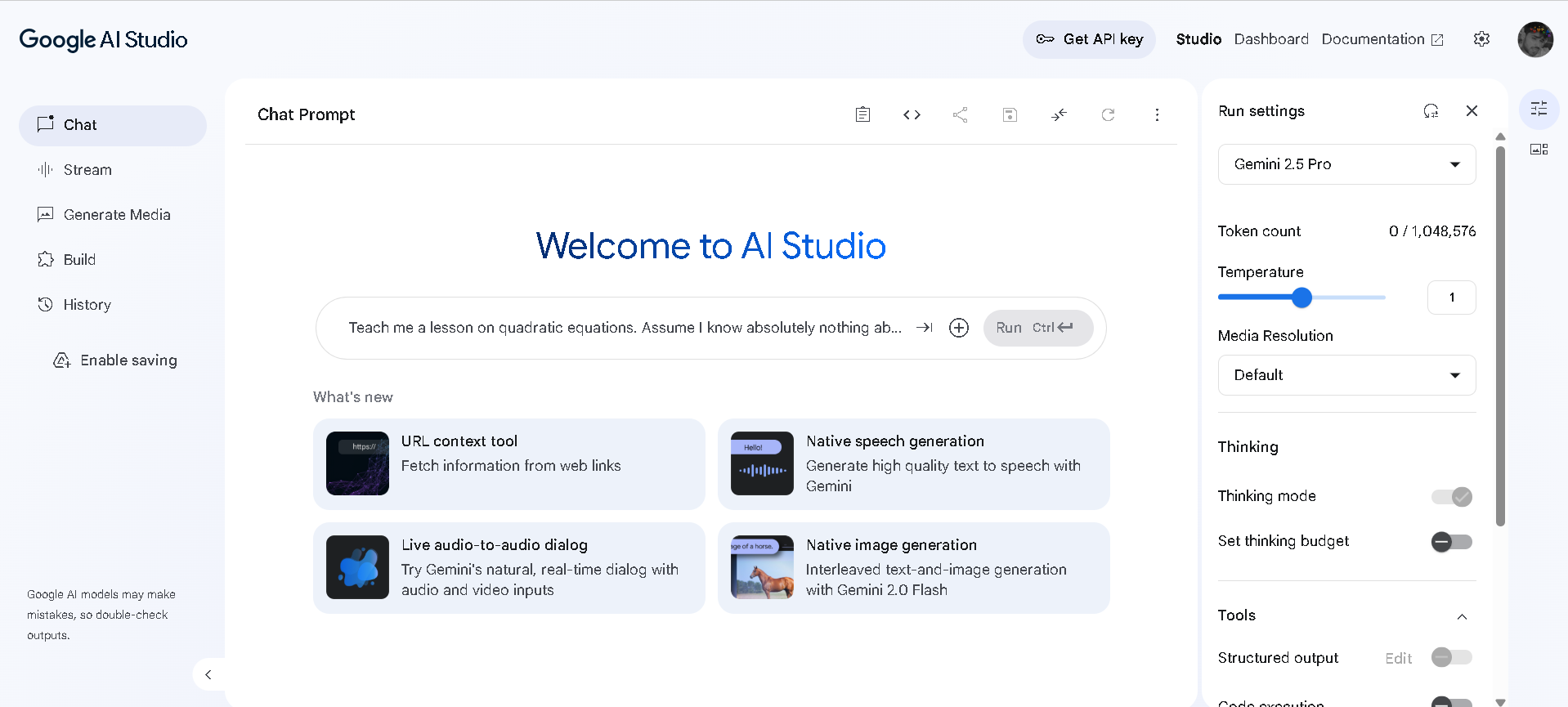

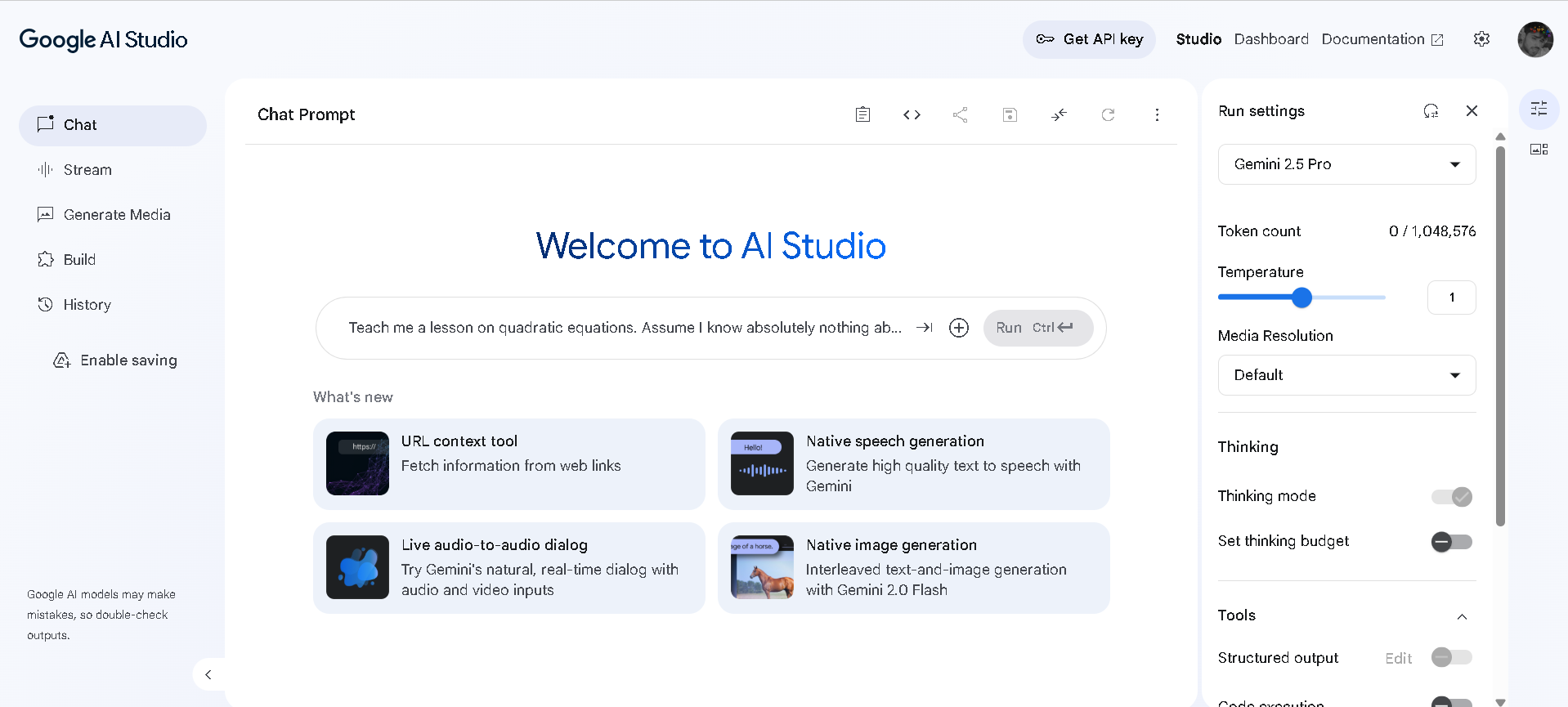

How to Use Gemini 2.5 Flash?

- Access the Model: Available through Google’s Gemini API (including Google AI Studio) and Vertex AI.

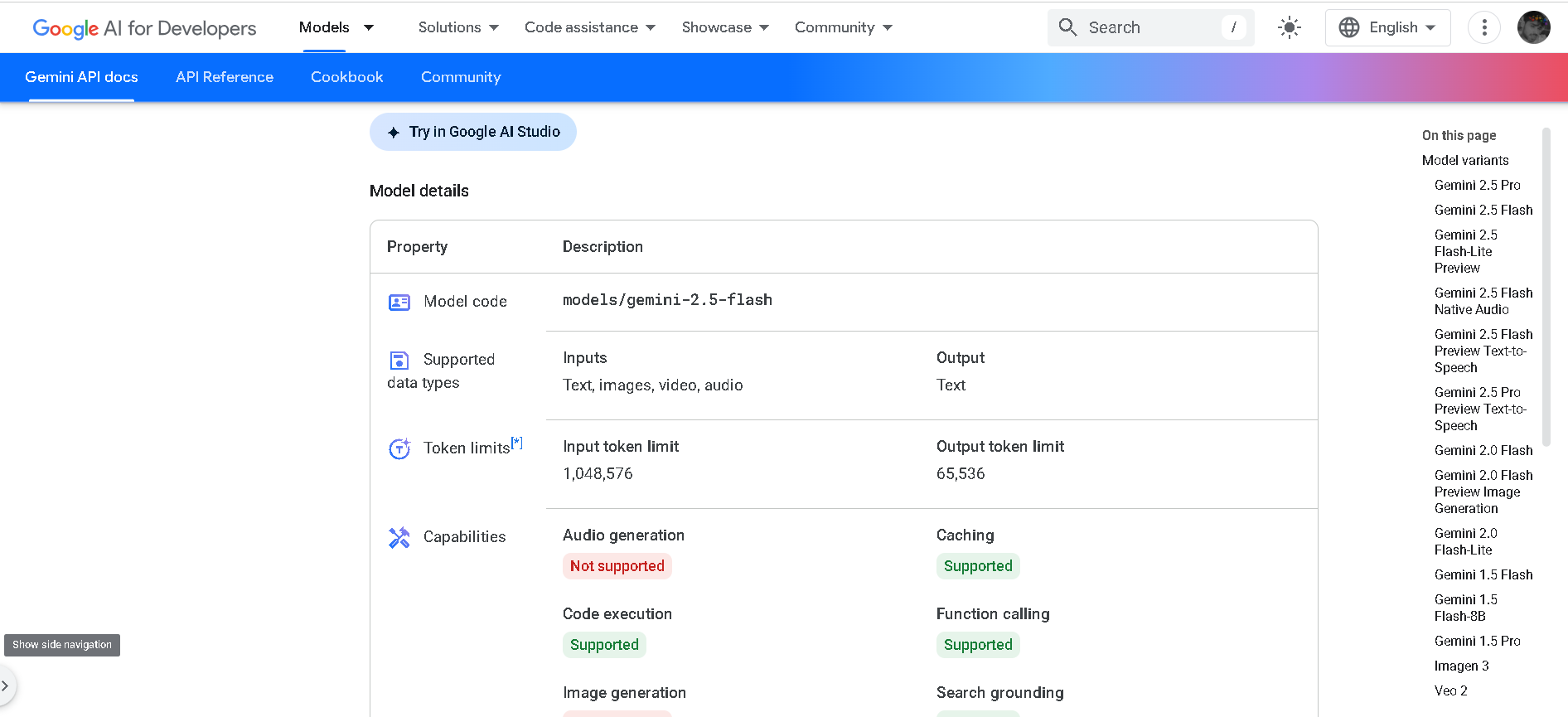

- Send Mixed Inputs: Input text, images, audio, or video to receive swift text-based responses.

- Manage Thinking Depth: Use “thinking budgets” to balance response quality, cost, and latency.

- Scale & Integrate: Deploy for mission-critical workflows like summarization, classification, and routing at volume.

- Monitor & Optimize: Track token usage, latency, and reasoning performance to tune cost-efficiency through caching, batching, or Flash-Lite.
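The steps above can be sketched as a request body that sets a thinking budget per call. This is a minimal illustration, not an official client: the endpoint URL and the `generationConfig.thinkingConfig.thinkingBudget` field path are assumptions based on the public Gemini REST API and should be checked against the current reference, and `build_request` is a hypothetical helper. No network call is made.

```python
import json

# Assumed model id and endpoint; verify against the current Gemini API reference.
MODEL = "gemini-2.5-flash"
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/"
    f"models/{MODEL}:generateContent"
)


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a JSON-serializable request body with a thinking budget.

    thinking_budget is the number of tokens the model may spend on
    reasoning; 0 is used here to mean "answer without thinking".
    """
    if thinking_budget < 0:
        raise ValueError("thinking_budget must be >= 0 in this sketch")
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            # Assumed field path for the thinking budget.
            "thinkingConfig": {"thinkingBudget": thinking_budget}
        },
    }


# Low-latency classification: no thinking budget.
fast = build_request("Classify this ticket: 'My invoice is wrong.'", 0)
# Harder planning task: allow some reasoning tokens.
deep = build_request("Plan a three-step migration for our billing service.", 1024)

print(ENDPOINT)
print(json.dumps(fast, indent=2))
```

Keeping the budget as an explicit per-request parameter makes it easy to send cheap, fast calls for routing or classification and reserve deeper reasoning for the requests that need it.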

- Best-in-Class Speed: Handles high-throughput workloads with a time to first token of about 0.37 seconds.

- Hybrid Reasoning: Automatically thinks before answering; developers can set reasoning budgets to optimize performance.

- Large Context Window: Supports up to 1 million tokens—suitable for massive documents or long workflows.

- Multimodal Inputs: Accepts text, images, audio, and video—fully integrated for real-time understanding.

- Strong Performance Metrics: A reported MMLU score of about 0.809, an output speed of 282 (listed as "ops"), and a cost of about $0.26 per million tokens.

- Ultra-low latency—ideal for real-time systems

- Flexible, controllable reasoning with “thinking budgets”

- Scalable across multimodal enterprise use cases

- Large context for complex workflows

- Efficient price-performance compared to peers

- Developer access required—no out-of-the-box UI

- Cost can rise with frequent or deep thinking

- Multimodal complexity may need tuning for optimal latency

Free: $0.00

Paid: Custom

- Input price (per 1M tokens): $0.30 for text, image, or video; $1.00 for audio

- Context caching (per 1M tokens): $0.075 for text, image, or video; $0.25 for audio

- Grounding with Google Search: 1,500 RPD (free, limit shared with Flash-Lite RPD), then $35 / 1,000 requests
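As a rough worked example of the prices listed above, the sketch below estimates input and grounding costs. It assumes the dollar figures are per 1 million tokens and that the grounding allowance resets daily; output-token pricing is not listed here, so it is left out. The function names are hypothetical.

```python
PER_MILLION = 1_000_000

# Prices copied from the list above; assumed to be USD per 1M input tokens.
INPUT_PRICE = {"text_image_video": 0.30, "audio": 1.00}
CACHE_PRICE = {"text_image_video": 0.075, "audio": 0.25}

# Grounding with Google Search: 1,500 free requests per day, then $35 per 1,000.
FREE_GROUNDED_REQUESTS_PER_DAY = 1_500
GROUNDING_PRICE_PER_1000 = 35.00


def input_cost(tokens: int, kind: str = "text_image_video", cached: bool = False) -> float:
    """Estimated USD cost of input tokens, at the normal or cached rate."""
    table = CACHE_PRICE if cached else INPUT_PRICE
    return tokens * table[kind] / PER_MILLION


def grounding_cost(requests_today: int) -> float:
    """Estimated USD cost of grounded requests beyond the daily free allowance."""
    billable = max(0, requests_today - FREE_GROUNDED_REQUESTS_PER_DAY)
    return billable * GROUNDING_PRICE_PER_1000 / 1000


fresh = input_cost(2_000_000)                 # 2M text tokens, uncached
reused = input_cost(2_000_000, cached=True)   # same tokens served from cache
search = grounding_cost(2_500)                # 1,000 requests over the free tier

print(f"uncached input: ${fresh:.2f}")
print(f"cached input:   ${reused:.2f}")
print(f"grounding:      ${search:.2f}")
```

Under these assumptions, caching a repeated 2M-token prompt cuts its input cost to a quarter of the uncached price, while grounded search requests beyond the free allowance can quickly outweigh token costs.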

Similar AI Tools

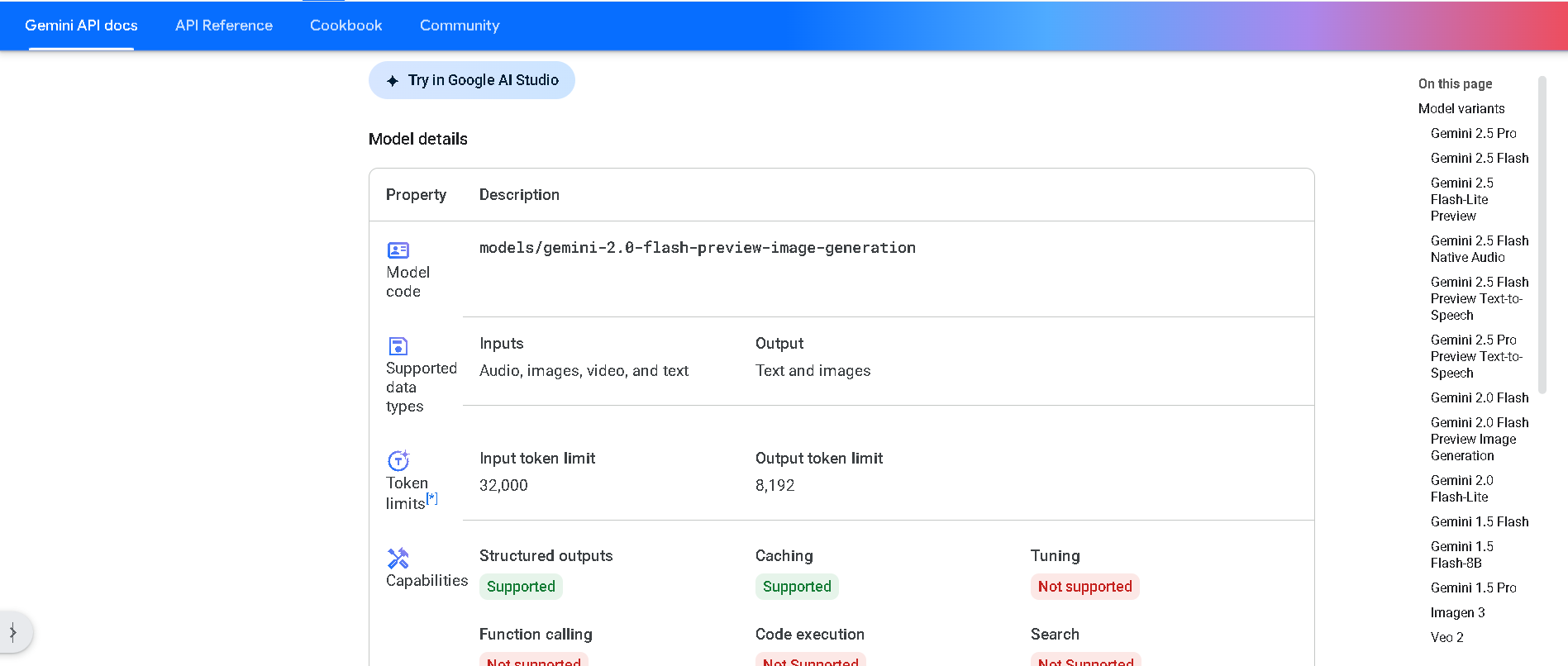

Gemini 2.0 Flash Preview Image Generation is Google’s experimental vision feature built into the Flash model. It enables developers to generate and edit images alongside text in a conversational manner and supports multi-turn, context-aware visual workflows via the Gemini API or Vertex AI.

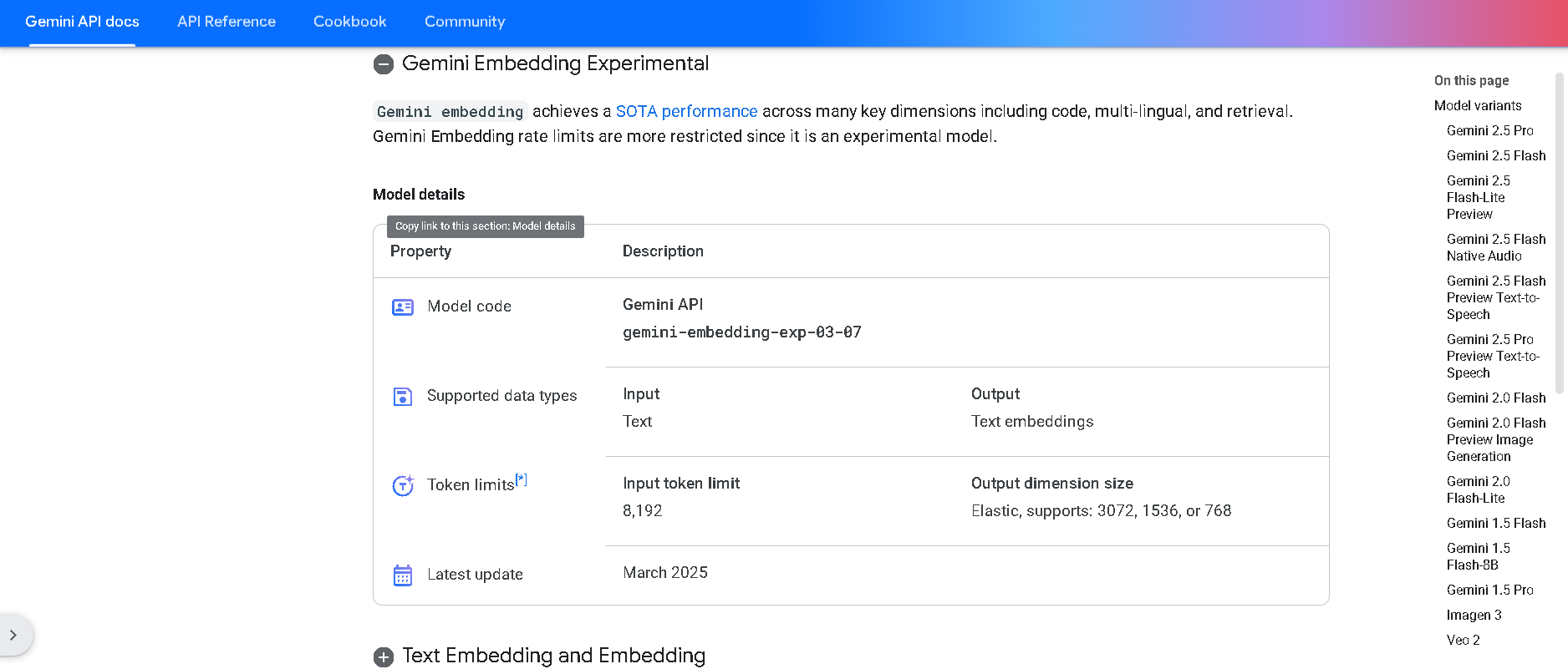

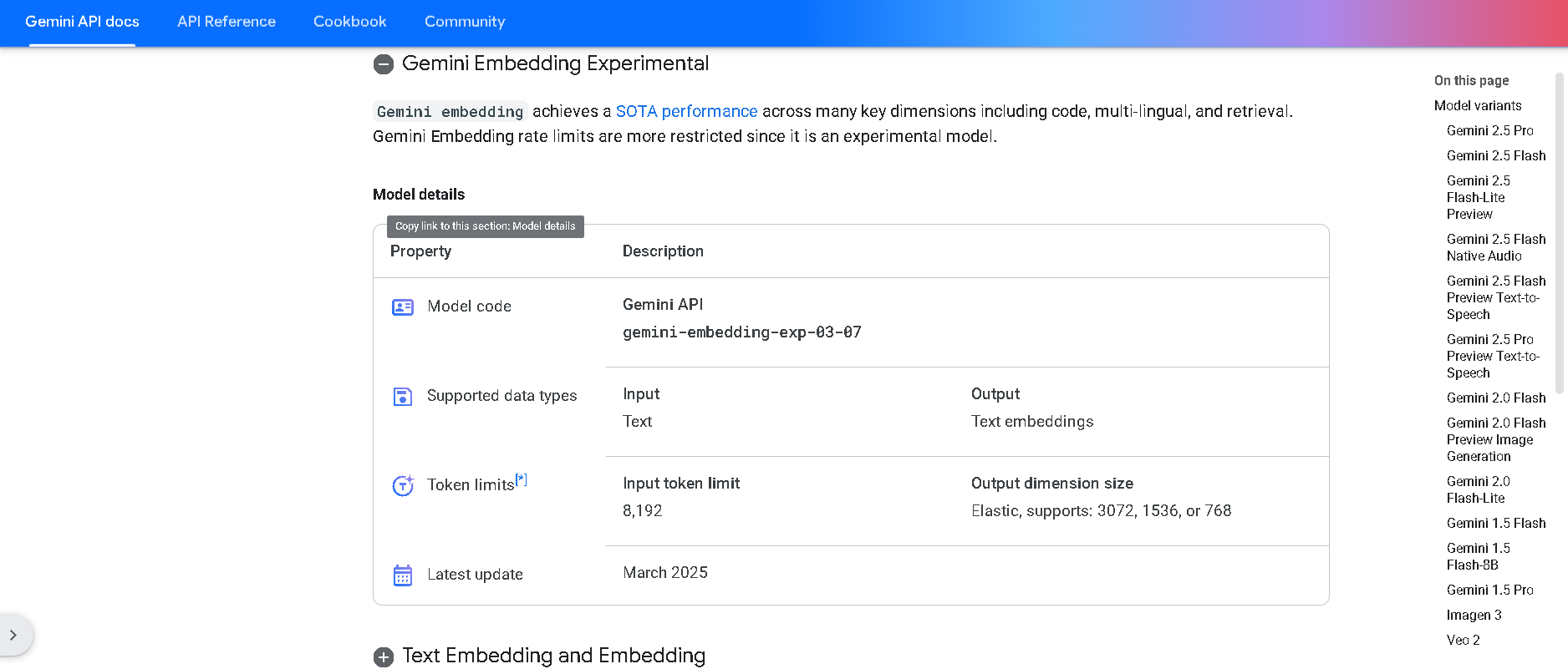

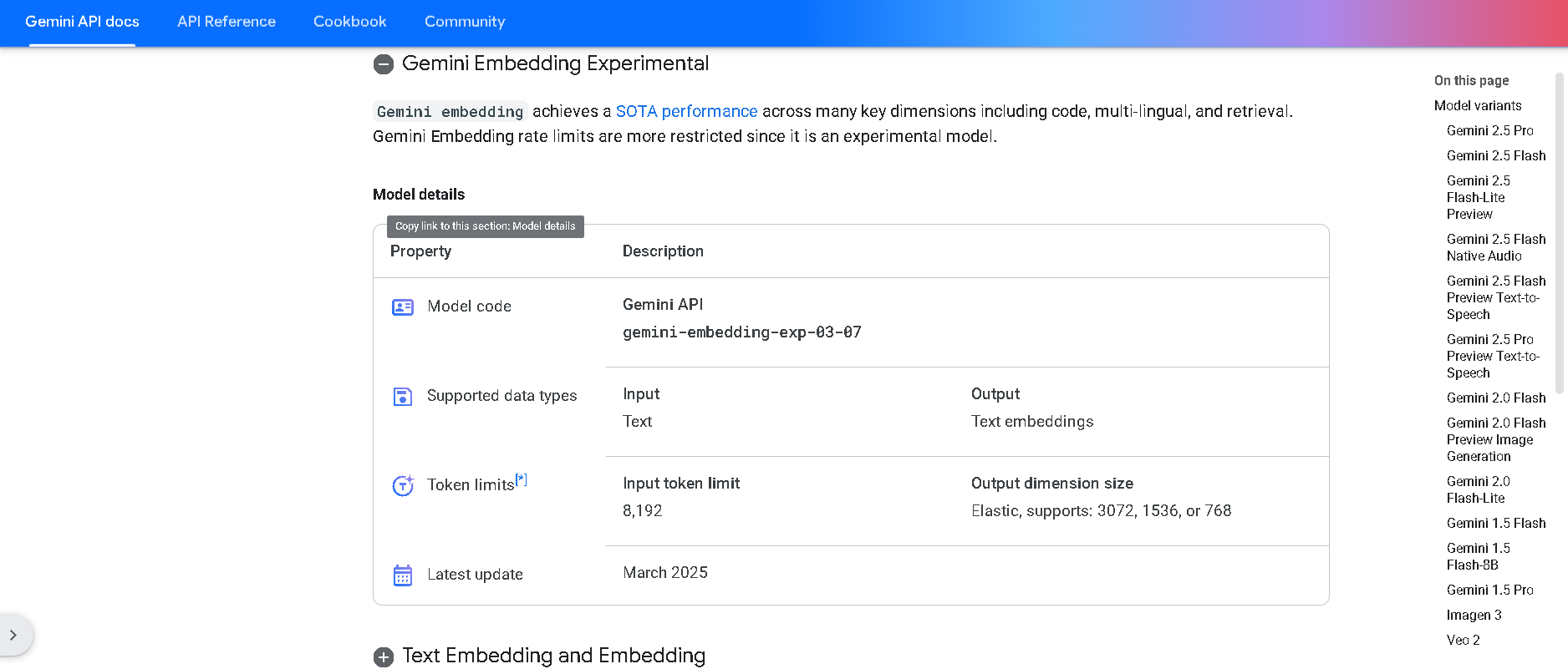

Gemini Embedding

Gemini Embedding is Google DeepMind’s state-of-the-art text embedding model, built on the powerful Gemini family. It transforms text into high-dimensional numerical vectors (up to 3,072 dimensions) with exceptional accuracy and generalization across over 100 languages and multiple modalities, including code. It achieves state-of-the-art results on the Massive Multilingual Text Embedding Benchmark (MMTEB), outperforming prior models across multilingual, English, and code-based tasks.

DeepSeek-V3

DeepSeek V3 is the latest flagship Mixture‑of‑Experts (MoE) open‑source AI model from DeepSeek. It features 671 billion total parameters (with ~37 billion activated per token), supports up to 128K context length, and excels across reasoning, code generation, language, and multimodal tasks. On standard benchmarks, it rivals or exceeds proprietary models—including GPT‑4o and Claude 3.5—as a high-performance, cost-efficient alternative.

Claude 3 Opus

Claude 3 Opus is Anthropic’s flagship Claude 3 model, released March 4, 2024. It offers top-tier performance for deep reasoning, complex code, advanced math, and multimodal understanding—including charts and documents—supported by a 200K‑token context window (extendable to 1 million in select enterprise cases). It consistently outperforms GPT‑4 and Gemini Ultra on benchmark tests like MMLU, HumanEval, HellaSwag, and more.

Grok 3 Latest

Grok 3 is xAI’s newest flagship AI chatbot, released on February 17, 2025, running on the massive Colossus supercluster (~200,000 GPUs). It offers elite-level reasoning, chain-of-thought transparency (“Think” mode), advanced “Big Brain” deeper reasoning, multimodal support (text, images), and integrated real-time DeepSearch—positioning it as a top-tier competitor to GPT‑4o, Gemini, Claude, and DeepSeek V3 on benchmarks.

grok-2-latest

Grok 2 is xAI’s second-generation chatbot model, launched in August 2024 as a substantial upgrade over Grok 1.5. It delivers frontier-level performance in chat, coding, reasoning, vision tasks, and image generation via the FLUX.1 system. On leaderboards, it outscored Claude 3.5 Sonnet and GPT‑4 Turbo, with strong results in GPQA (56%), MMLU (87.5%), MATH (76.1%), HumanEval (88.4%), MathVista, and DocVQA benchmarks.

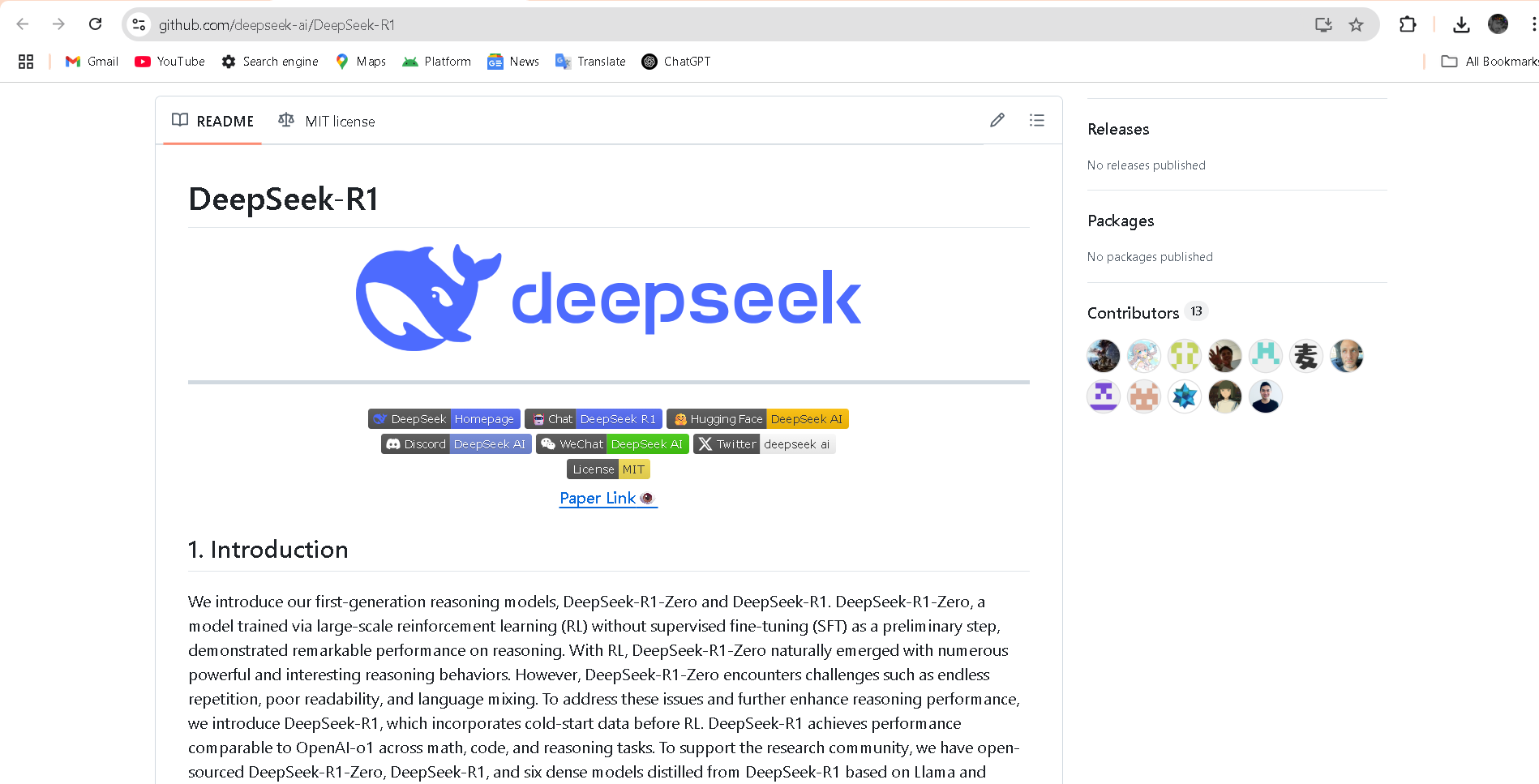

DeepSeek-R1-Zero

DeepSeek R1 Zero is an open-source large language model introduced in January 2025 by DeepSeek AI. It is a reinforcement learning–only version of DeepSeek R1, trained without supervised fine-tuning. With 671B total parameters (37B active) and a 128K-token context window, it demonstrates strong chain-of-thought reasoning, self-verification, and reflection.

DeepSeek-R1-Distil..

DeepSeek R1 Distill Qwen‑32B is a 32-billion-parameter dense reasoning model released in early 2025. Distilled from the flagship DeepSeek R1 using Qwen 2.5‑32B as a base, it delivers state-of-the-art performance among dense LLMs—outperforming OpenAI’s o1‑mini on benchmarks like AIME, MATH‑500, GPQA Diamond, LiveCodeBench, and CodeForces rating.

Qwen Chat

Qwen Chat is Alibaba Cloud’s conversational AI assistant built on the Qwen series (e.g., Qwen‑7B‑Chat, Qwen1.5‑7B‑Chat, Qwen‑VL, Qwen‑Audio, and Qwen2.5‑Omni). It supports text, vision, audio, and video understanding, plus image and document processing, web search integration, and image generation—all through a unified chat interface.

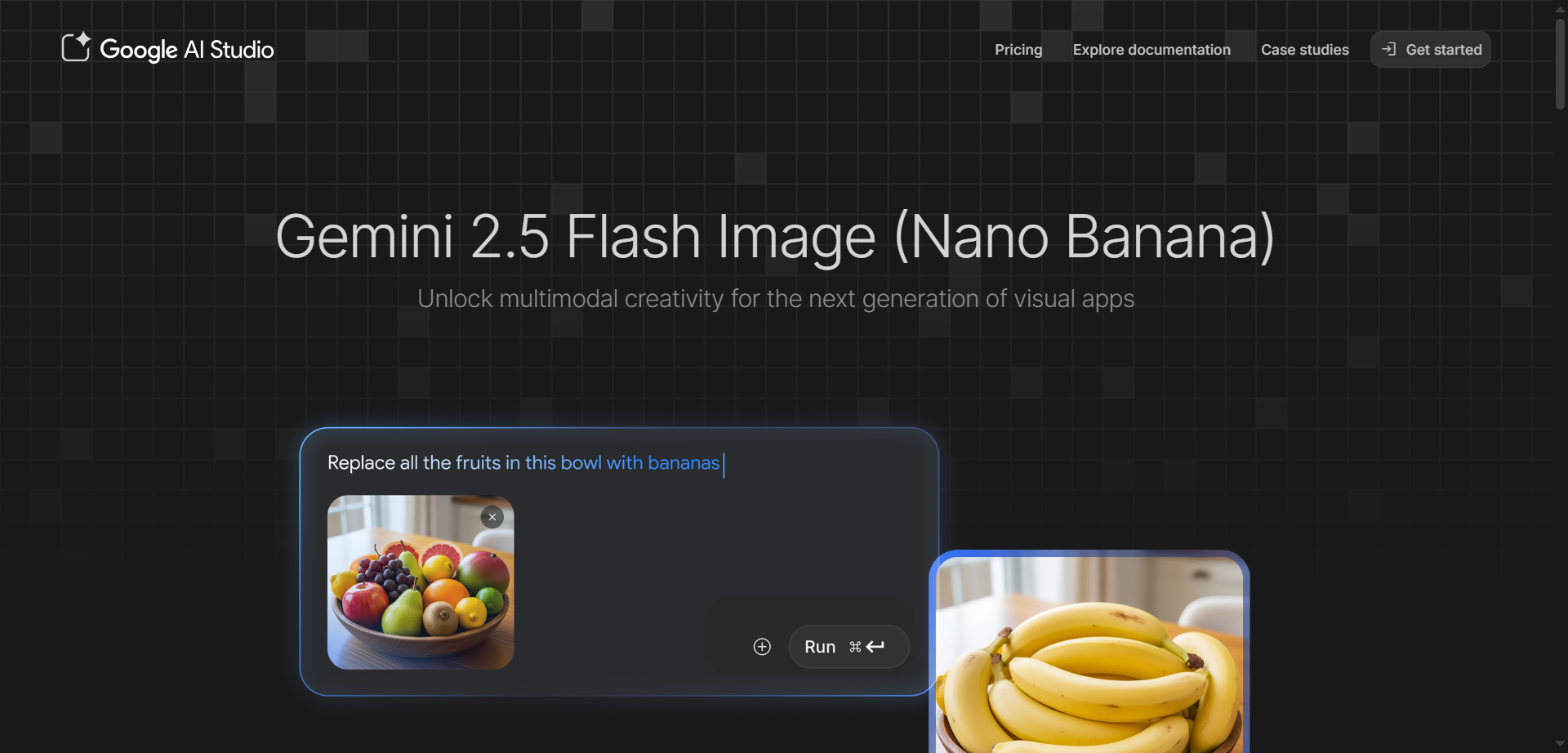

Google AI Studio

Google AI Studio is a web-based development environment that allows users to explore, prototype, and build applications using Google's cutting-edge generative AI models, such as Gemini. It provides a comprehensive set of tools for interacting with AI through chat prompts, generating various media types, and fine-tuning model behaviors for specific use cases.

Nano Banana

Gemini 2.5 Flash Image is Google's state-of-the-art AI image generation and editing model, nicknamed Nano Banana, designed for fast, high-quality creative workflows. It excels at blending multiple images into seamless compositions, maintaining character consistency across scenes, and making precise edits through natural language prompts like blurring backgrounds or changing poses. Accessible via Google AI Studio and Gemini API, it leverages Gemini's world knowledge for realistic transformations, style transfers, and conversational refinements without restarting from scratch. Developers love its low latency, token-based pricing at about $0.039 per image, and SynthID watermarking for easy AI identification. Perfect for product mockups, storytelling, education tools, and professional photo editing.

GlobalGPT

GlobalGPT is an all-in-one AI platform that unifies leading models for writing, coding, research, image generation, and video creation, accessible through a single account and subscription. It brings together top models like GPT-5, GPT-4.1, GPT-4o, GPT-o3, Claude Opus 4.1, Claude Sonnet 4, Gemini 2.5 Pro, DeepSeek V3, Unikorn (MJ-like) V7, Flux, Ideogram, and Google Veo 3 so you can chat, draft content, analyze documents, generate images, and produce cinematic videos without switching tools. Designed for over 2 million users worldwide, GlobalGPT also includes advanced research agents powered by GPT-4o and Perplexity for deep web analysis, PDF summarization, and market insights, all wrapped in a clean interface with flexible credit-based plans.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai