text-embedding-3-large is OpenAI’s most capable text embedding model, suited to applications where accuracy and relevance matter more than cost. It succeeds text-embedding-ada-002 and improves on it in contextual understanding, generalization, and relevance scoring.

- Enterprise Developers: Power intelligent search and recommendation systems with ultra-precise embeddings.

- Data Scientists & ML Engineers: Use in machine learning pipelines, anomaly detection, or document classification.

- Product Teams: Integrate into AI features that require deep understanding of user input and content.

- AI Researchers: Apply in state-of-the-art NLP research, especially in tasks requiring nuanced understanding.

- Semantic Search Builders: Great for applications like legal search, academic paper indexing, or support ticket classification.

- E-commerce Teams: Build smarter product discovery and personalized experiences using semantic embeddings.

🛠️ How to Use text-embedding-3-large?

- Step 1: Choose the Model: Select text-embedding-3-large when making requests to the OpenAI v1/embeddings API endpoint.

- Step 2: Input the Text: Provide raw text—sentences, paragraphs, documents—up to 8192 tokens in a single request.

- Step 3: Generate Embeddings: Receive a 3,072-dimensional vector by default that represents the input’s semantic meaning. The API’s dimensions parameter can request a shorter vector.

- Step 4: Use the Embeddings: Perform similarity matching, vector search, clustering, or feed into downstream ML models.

- Step 5: Scale with Vector Databases: Store and manage embeddings using vector databases or libraries such as Pinecone, Weaviate, FAISS, or Qdrant for fast nearest-neighbor retrieval.
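The steps above can be sketched in Python using only the standard library. This is a minimal illustration, not an official client: the helper names (`build_request`, `parse_embeddings`, `embed`, `rank`) are hypothetical, the API key is assumed to live in an `OPENAI_API_KEY` environment variable, and the in-memory ranking stands in for what a vector database would do at scale.

```python
import json
import math
import os
import urllib.request

EMBEDDINGS_URL = "https://api.openai.com/v1/embeddings"
MODEL = "text-embedding-3-large"


def build_request(texts, api_key):
    """Steps 1-2: build (but do not send) a POST request for the endpoint."""
    payload = {"model": MODEL, "input": list(texts)}
    return urllib.request.Request(
        EMBEDDINGS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def parse_embeddings(response_body):
    """Step 3: return one vector per input, ordered by input index."""
    items = sorted(json.loads(response_body)["data"], key=lambda d: d["index"])
    return [item["embedding"] for item in items]


def embed(texts, api_key=None):
    """Send the request over the network (needs a valid API key)."""
    api_key = api_key or os.environ["OPENAI_API_KEY"]
    with urllib.request.urlopen(build_request(texts, api_key)) as resp:
        return parse_embeddings(resp.read())


def cosine_similarity(a, b):
    """Step 4: cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank(query_vec, doc_vecs):
    """Return document indices ordered from most to least similar."""
    scores = [cosine_similarity(query_vec, v) for v in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


# Example usage (performs a real, billed API call, so it is left commented out):
# vectors = embed(["refund policy", "How do I get my money back?", "Shipping times"])
# print(rank(vectors[0], vectors[1:]))
```

For more than a few thousand vectors, the brute-force `rank` function would normally be replaced by an index in one of the vector stores named in Step 5.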

- Top-Tier Accuracy: Delivers OpenAI’s best semantic understanding for complex queries and large-scale systems.

- Long Context Support: Accepts up to 8192 tokens, ideal for embedding large bodies of text.

- Fine-Grained Semantics: Perfect for use cases that depend on subtle differences in language and meaning.

- Advanced Architecture: Built with OpenAI’s latest embedding techniques for speed, accuracy, and efficiency.

- Versatile Application: Suitable across domains like healthcare, legal, SaaS, retail, education, and more.

- Future-Proofed: Designed to scale with upcoming improvements in AI-driven semantic understanding.

- Highest Embedding Quality: Exceptional performance on retrieval and relevance tasks.

- Deep Semantic Understanding: Outperforms older models in benchmarks and real-world use.

- Handles Long Texts Gracefully: The 8192-token input limit lets many full documents be embedded in a single request; longer documents still need to be split into chunks.

- Ideal for Complex Search Tasks: Especially when shallow embeddings fall short.

- Strong Generalization: Works well across domains—technical, conversational, creative, or formal.
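Documents that exceed the 8192-token input limit have to be split before embedding. The sketch below shows one simple way to do that with fixed-size windows and optional overlap. It assumes the text has already been converted to a list of token IDs by a tokenizer (not shown here), and `chunk_tokens` is a hypothetical helper name, not part of any OpenAI library.

```python
def chunk_tokens(tokens, max_tokens=8192, overlap=0):
    """Split a token sequence into windows of at most max_tokens tokens.

    Consecutive windows share `overlap` tokens so that text cut at a
    boundary still appears with some surrounding context in the next chunk.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")

    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens])
        # Stop once a window has reached the end of the sequence.
        if start + max_tokens >= len(tokens):
            break
    return chunks


# A 20,000-token document becomes three chunks that each fit the limit.
document_tokens = list(range(20_000))  # stand-in for real token IDs
chunks = chunk_tokens(document_tokens)
print([len(c) for c in chunks])
```

Each chunk is then embedded separately. Whether to overlap chunks, and by how much, is a retrieval-quality trade-off that depends on the corpus.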

- More Expensive than “Small” Version: Best suited when accuracy is worth the cost.

- Higher Latency: Larger vector size and model complexity may result in slower response times.

- Overkill for Simple Tasks: Might not be necessary for lightweight or single-sentence matching.

- No Custom Fine-Tuning: Model is fixed and not currently customizable.

- Larger Vector Size: The default 3,072-dimensional output increases storage and compute costs for large datasets unless a smaller dimensions value is requested.
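To make the storage cost concrete: assuming each value is stored as a 4-byte float32, one 3,072-dimensional vector takes 12,288 bytes, so one million vectors need roughly 12.3 GB before any index overhead. The sketch below works through that arithmetic and shows one way to shorten an existing vector by truncating and re-normalizing it. The function names are hypothetical, and the float32 assumption should be checked against the actual vector store.

```python
import math

BYTES_PER_FLOAT32 = 4  # assumption: vectors are stored as float32


def storage_bytes(num_vectors, dimensions=3072, bytes_per_value=BYTES_PER_FLOAT32):
    """Raw vector storage in bytes, excluding index and metadata overhead."""
    return num_vectors * dimensions * bytes_per_value


def shorten(vector, dimensions):
    """Keep the first `dimensions` values and rescale to unit length."""
    head = list(vector[:dimensions])
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        return head
    return [x / norm for x in head]


full = storage_bytes(1_000_000)         # default 3,072 dimensions
small = storage_bytes(1_000_000, 256)   # a much shorter vector
print(f"{full / 1e9:.2f} GB vs {small / 1e9:.2f} GB")
```

Shorter vectors save storage and speed up search at some cost in retrieval quality, so the right size is best chosen by measuring on the target dataset.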

Pricing: $0.13 per 1 million tokens
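At the listed rate, a rough cost estimate only needs the total token count. The sketch below assumes the $0.13 per 1 million tokens price shown on this page still applies; `estimate_cost` is a hypothetical helper, and the corpus size in the example is made up for illustration.

```python
PRICE_PER_MILLION_TOKENS = 0.13  # USD, as listed on this page


def estimate_cost(total_tokens, price_per_million=PRICE_PER_MILLION_TOKENS):
    """Return the estimated embedding cost in USD for a token count."""
    return total_tokens * price_per_million / 1_000_000


# Illustrative corpus: 50,000 documents averaging 800 tokens each.
corpus_tokens = 50_000 * 800
print(f"${estimate_cost(corpus_tokens):.2f}")
```

Re-embedding after a model or chunking change incurs the same cost again, which is worth budgeting for in large collections.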

Similar AI Tools

OpenAI GPT-4o

GPT-4o is OpenAI’s multimodal flagship model, offering fast, capable, and cost-efficient natural language processing. It can handle text, vision, and audio in real time, and was the first OpenAI model to process multimodal inputs natively. It is significantly faster and cheaper than GPT-4 Turbo while improving accuracy, reasoning, and multilingual support.

OpenAI DALL·E 3

OpenAI DALL·E 3 is an advanced AI image generation model that creates highly detailed and realistic images from text prompts. It builds upon previous versions by offering better composition, improved understanding of complex prompts, and seamless integration with ChatGPT. DALL·E 3 is designed for artists, designers, marketers, and content creators who want high-quality AI-generated visuals.

OpenAI o3

o3 is a reasoning model in OpenAI’s o-series and the successor to o1. It is built to spend more time reasoning before it answers, which suits complex tasks in coding, math, science, and multi-step analysis, alongside general language understanding, content generation, and multilingual communication. It is used in commercial and developer-facing tools where answer quality on hard problems matters more than response speed.

OpenAI o1-pro

o1-pro is a version of OpenAI’s o1 reasoning model that uses more compute to produce more reliable answers on hard problems. It trades higher latency and cost for accuracy, which makes it better suited to demanding work in areas such as math, science, and coding than to everyday, latency-sensitive tasks.

OpenAI - GPT 4.1

GPT-4.1 is a multimodal large language model from OpenAI, designed for capable and efficient performance across a broad range of tasks. It builds on GPT-4 and GPT-4 Turbo, offering stronger reasoning, greater factual accuracy, and smoother integration with tools such as code interpreters, retrieval systems, and image understanding. It supports a context window of up to 1 million tokens, function calling, and robust tool use, which makes it well suited to long, multi-step assistant workloads.

OpenAI GPT 4o Sear..

GPT-4o Search Preview is a preview variant of OpenAI’s GPT-4o model specialized for web search. Rather than answering only from training data, it runs searches and grounds its responses in the retrieved results. As a preview, it gives developers an early look at search built directly into the GPT-4o ecosystem.

OpenAI Omni Modera..

omni-moderation-latest is OpenAI’s most advanced content moderation model, designed to detect and flag harmful, unsafe, or policy-violating content across modalities and languages. Built on the GPT-4o architecture, it uses multimodal understanding and multilingual capabilities to moderate text and image inputs. It is particularly effective at identifying nuanced and culturally specific toxic content, including implicit insults, sarcasm, and aggression that general-purpose systems might overlook.

OpenAI GPT 4 Turbo

GPT-4 Turbo is OpenAI’s enhanced version of GPT-4, engineered to deliver faster performance, extended context handling, and more cost-effective usage. Released in November 2023, GPT-4 Turbo boasts a 128,000-token context window, allowing it to process and generate longer and more complex content. It supports multimodal inputs, including text and images, making it versatile for various applications.

OpenAI GPT 4 Turbo

GPT-4 Turbo is OpenAI’s enhanced version of GPT-4, engineered to deliver faster performance, extended context handling, and more cost-effective usage. Released in November 2023, GPT-4 Turbo boasts a 128,000-token context window, allowing it to process and generate longer and more complex content. It supports multimodal inputs, including text and images, making it versatile for various applications.

OpenAI GPT 4 Turbo

GPT-4 Turbo is OpenAI’s enhanced version of GPT-4, engineered to deliver faster performance, extended context handling, and more cost-effective usage. Released in November 2023, GPT-4 Turbo boasts a 128,000-token context window, allowing it to process and generate longer and more complex content. It supports multimodal inputs, including text and images, making it versatile for various applications.

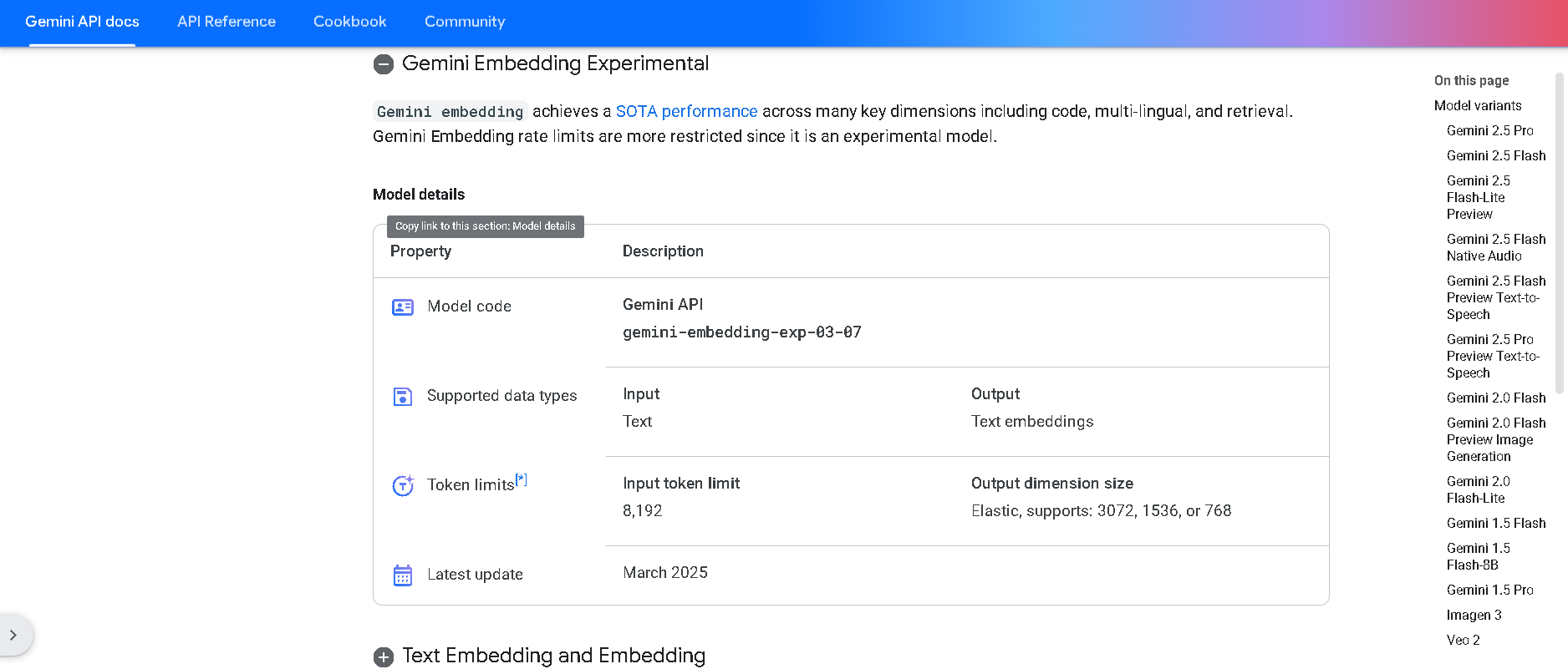

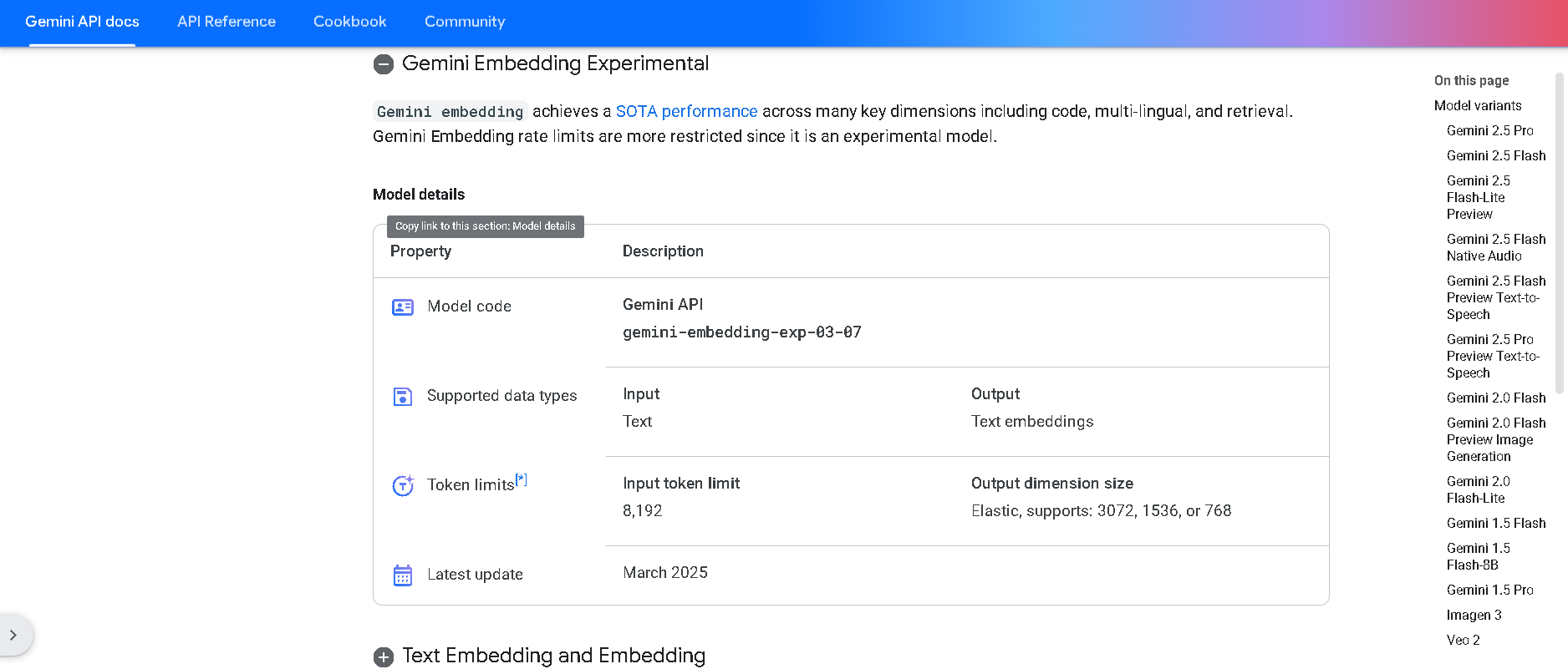

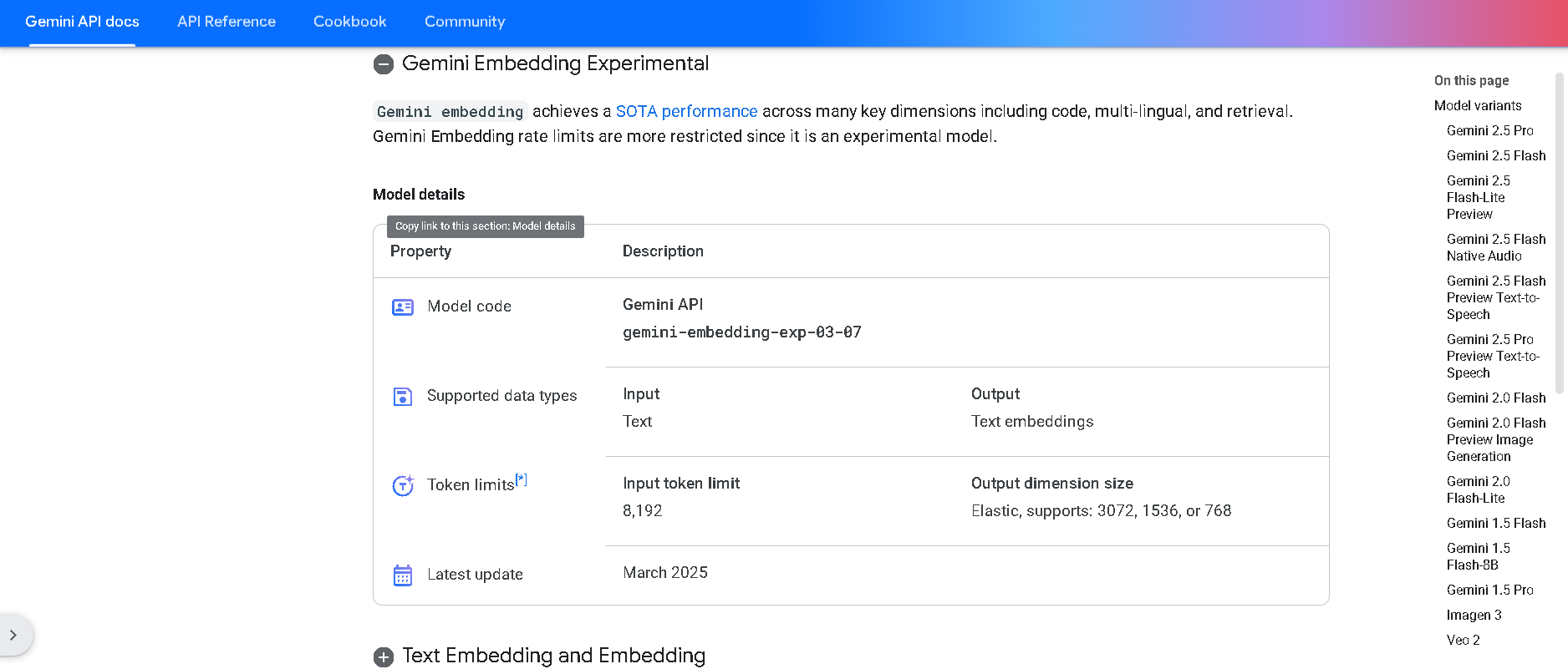

Gemini Embedding

Gemini Embedding is Google DeepMind’s state-of-the-art text embedding model, built on the Gemini family. It transforms text into high-dimensional numerical vectors (up to 3,072 dimensions) and generalizes across more than 100 languages and multiple modalities, including code. It achieves state-of-the-art results on the Massive Multilingual Text Embedding Benchmark (MMTEB), outperforming prior models across multilingual, English, and code-based tasks.

Mistral Embed

Mistral Embed is Mistral AI’s high-performance text embedding model designed for semantic retrieval, clustering, classification, and retrieval-augmented generation (RAG). With support for up to 8,192 tokens and producing 1,024-dimensional vectors, it delivers state-of-the-art semantic similarity and organization capabilities.

Mistral Embed

Mistral Embed is Mistral AI’s high-performance text embedding model designed for semantic retrieval, clustering, classification, and retrieval-augmented generation (RAG). With support for up to 8,192 tokens and producing 1,024-dimensional vectors, it delivers state-of-the-art semantic similarity and organization capabilities.

Mistral Embed

Mistral Embed is Mistral AI’s high-performance text embedding model designed for semantic retrieval, clustering, classification, and retrieval-augmented generation (RAG). With support for up to 8,192 tokens and producing 1,024-dimensional vectors, it delivers state-of-the-art semantic similarity and organization capabilities.

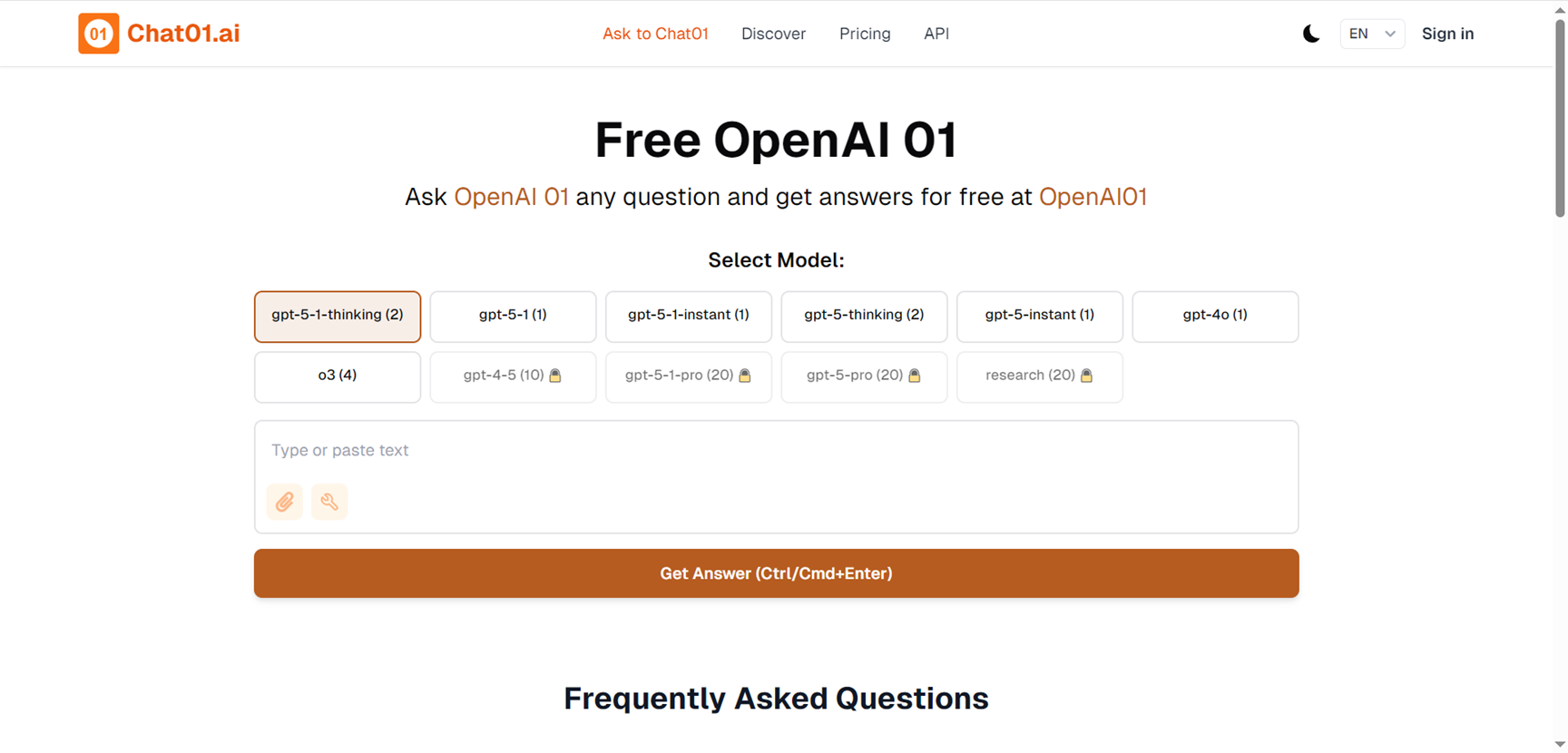

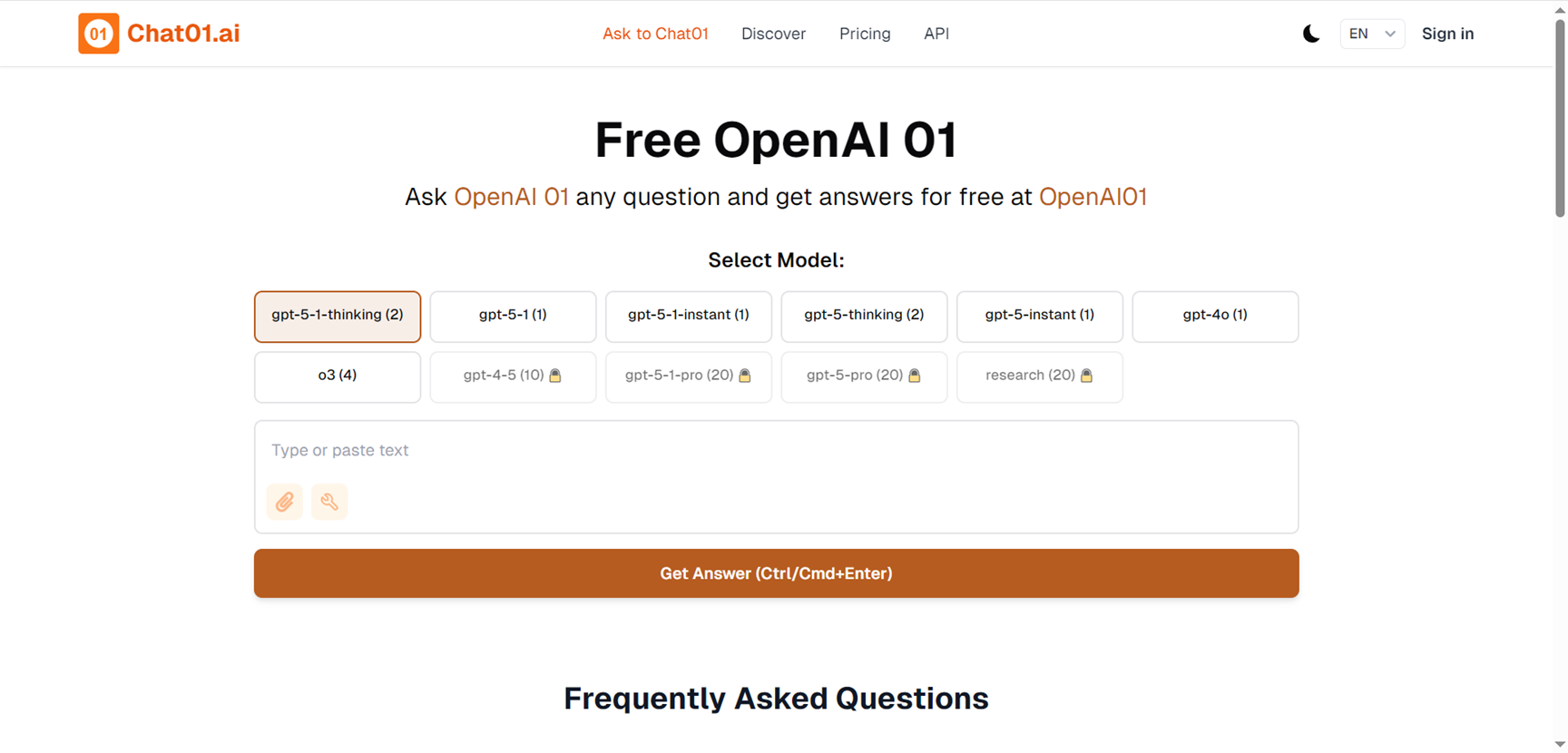

Chat01.ai

OpenAI01.net is a third-party, browser-based chat platform that lets you use OpenAI’s o1 family of advanced reasoning models for free, without needing your own API key or paid account. Branded as Chat01.ai in some places, it focuses on giving users generous access to o1-preview and o1-mini through a simple chat interface so they can tackle complex math, coding, science, and problem-solving tasks. The site often features public question-and-answer threads, allowing you to study other users’ prompts and responses to improve your own prompting skills. It acts as an accessible front-end to powerful OpenAI models, but is not officially operated by OpenAI.

Chat01.ai

OpenAI01.net is a third-party, browser-based chat platform that lets you use OpenAI’s o1 family of advanced reasoning models for free, without needing your own API key or paid account. Branded as Chat01.ai in some places, it focuses on giving users generous access to o1-preview and o1-mini through a simple chat interface so they can tackle complex math, coding, science, and problem-solving tasks. The site often features public question-and-answer threads, allowing you to study other users’ prompts and responses to improve your own prompting skills. It acts as an accessible front-end to powerful OpenAI models, but is not officially operated by OpenAI.

Chat01.ai

OpenAI01.net is a third-party, browser-based chat platform that lets you use OpenAI’s o1 family of advanced reasoning models for free, without needing your own API key or paid account. Branded as Chat01.ai in some places, it focuses on giving users generous access to o1-preview and o1-mini through a simple chat interface so they can tackle complex math, coding, science, and problem-solving tasks. The site often features public question-and-answer threads, allowing you to study other users’ prompts and responses to improve your own prompting skills. It acts as an accessible front-end to powerful OpenAI models, but is not officially operated by OpenAI.

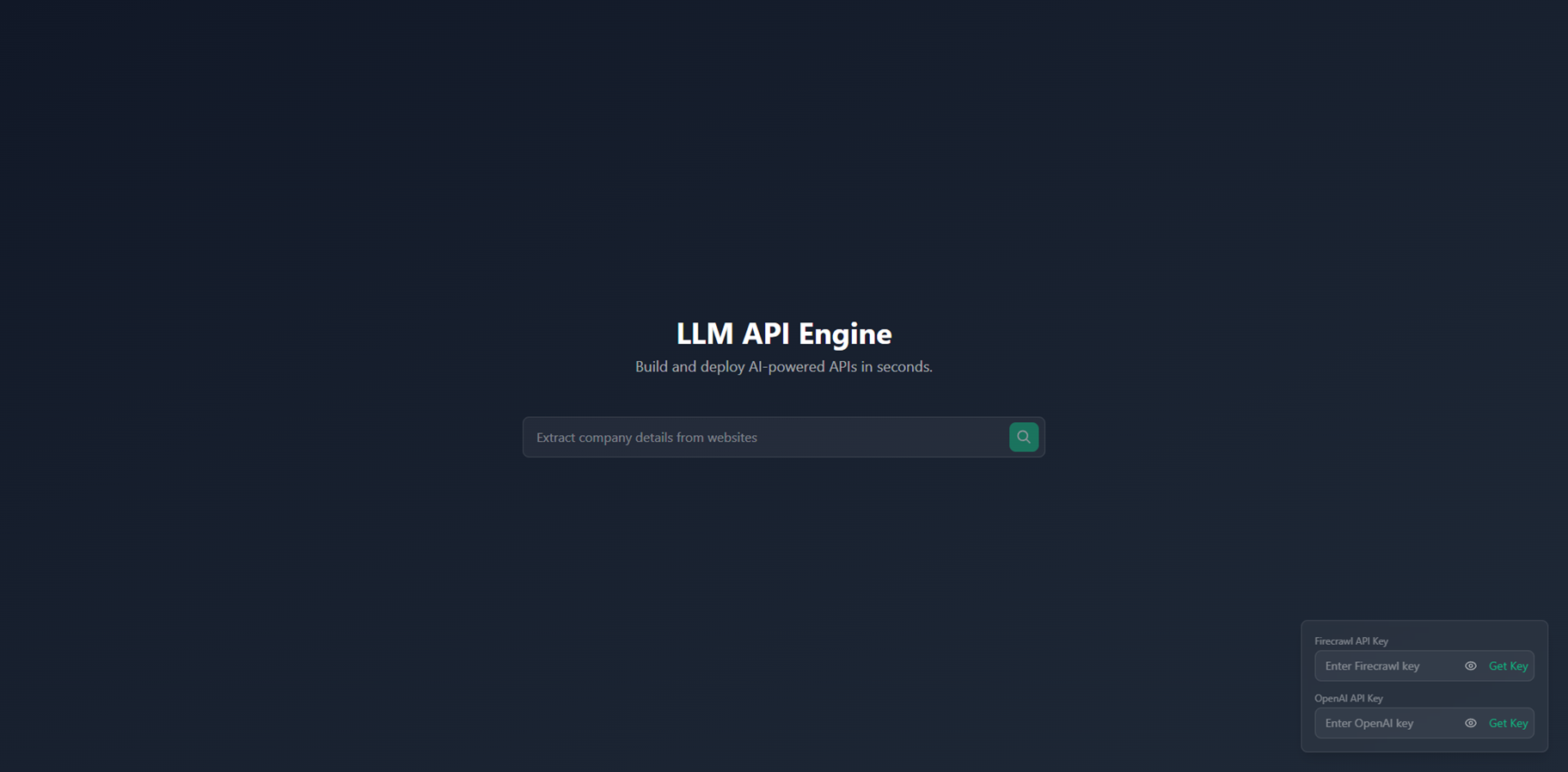

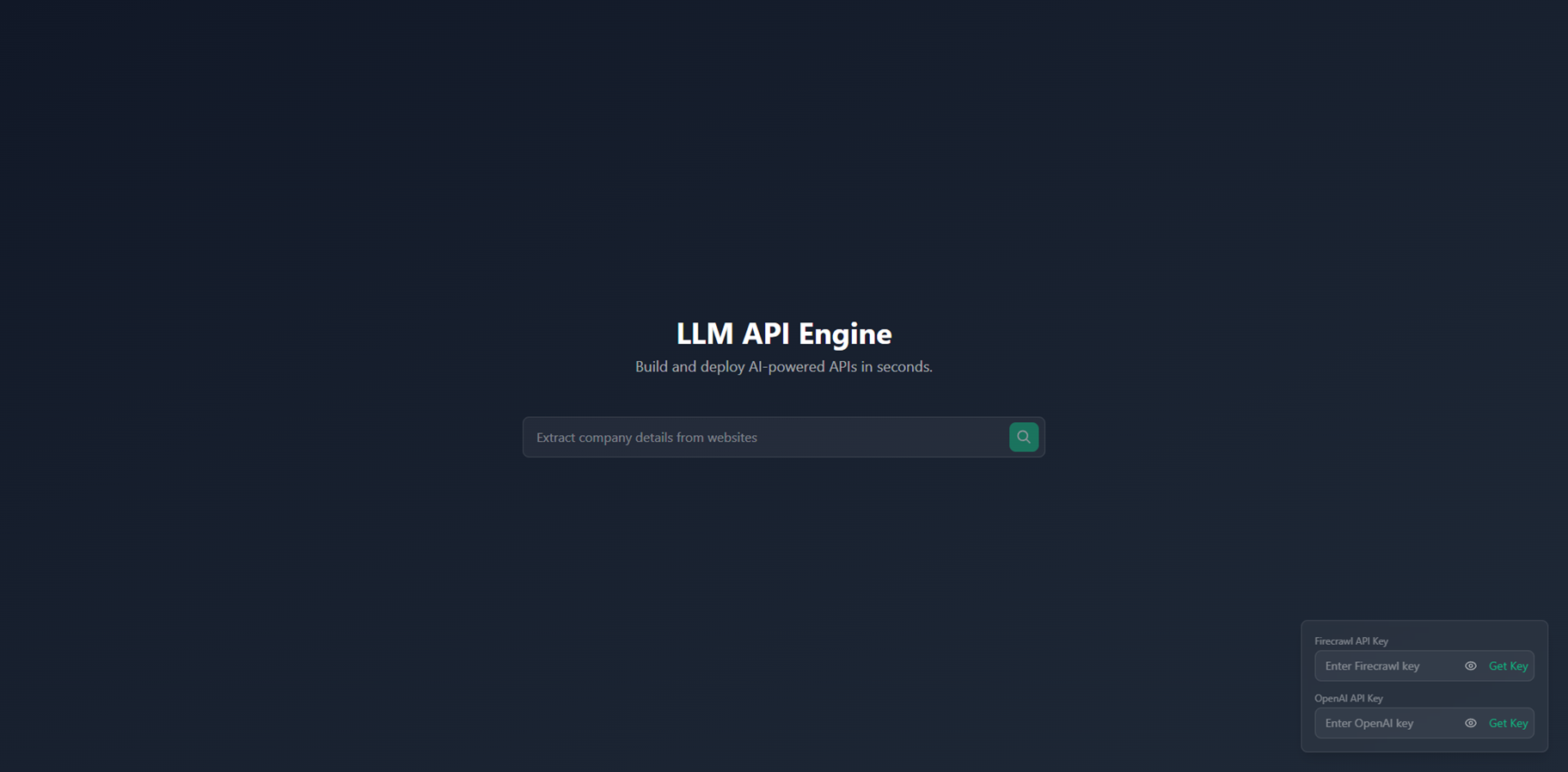

Text to API

Text to API is an LLM-powered API engine that lets you build and deploy AI-driven APIs in seconds using natural language instead of boilerplate backend code. You describe the data or functionality you want, and the engine handles schema creation, integration with providers like Firecrawl and OpenAI, and endpoint deployment behind the scenes. It’s designed to turn messy web data into clean, structured APIs, so developers can focus on product logic rather than wiring infrastructure. With a workflow built around dynamic schema generation, intelligent web scraping, and real-time updates, it’s ideal for quickly prototyping or shipping production-ready AI features.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai