- Enterprises & Corporations: Unlock insights from image data combined with language for business intelligence.

- Developers & AI Teams: Build next-generation AI applications with multimodal input understanding.

- Data Analysts & Researchers: Enhance analysis with integrated text and image comprehension tools.

- Healthcare & Manufacturing: Apply visual AI for diagnostic, quality control, and operational uses.

- Marketing & Creative Teams: Automate image tagging, categorization, and content creation workflows.

How to Use Command A Vision?

- Access via Cohere Platform: Deploy the model using Cohere’s secure cloud services.

- Integrate with Existing Systems: Connect through APIs to enhance business processes with vision AI.

- Optimize for Enterprise Deployments: Customize configurations to balance performance and cost.

- Leverage Developer Resources: Use Cohere’s documentation and support for smooth implementation.

- Multimodal Fusion: Combines advanced image recognition with powerful language understanding.

- Low Compute Footprint: Efficient design suitable for large-scale enterprise deployments.

- Enterprise-Ready Security: Equipped to handle sensitive business and industry-specific data.

- Versatile Application Scope: Supports a wide range of industries from healthcare to marketing.

- Strong Developer Ecosystem: Comprehensive tools and resources for rapid deployment and customization.

- High accuracy on complex image understanding tasks.

- Efficient operation reduces cloud computing costs.

- Excellent integration of visual and language data for richer insights.

- Wide applicability for various enterprise verticals and workflows.

- Specialized multimodal features may require training for business teams.

- Availability may be limited initially to enterprise customers.

- Some custom use cases might need additional fine-tuning.

- Ecosystem and public benchmarks are still expanding.

Custom

Pricing information is not directly provided.

Proud of the love you're getting? Show off your AI Toolbook reviews—then invite more fans to share the love and build your credibility.

Add an AI Toolbook badge to your site—an easy way to drive followers, showcase updates, and collect reviews. It's like a mini 24/7 billboard for your AI.

Reviews

Rating Distribution

Average score

Popular Mention

FAQs

Similar AI Tools

OpenAI GPT-4o

GPT-4o is OpenAI’s latest and most advanced AI model, offering faster, more powerful, and cost-efficient natural language processing. It can handle text, vision, and audio in real time, making it the first OpenAI model to process multimodal inputs natively. It’s significantly faster and cheaper than GPT-4 Turbo while improving accuracy, reasoning, and multilingual support.

OpenAI GPT-4o

GPT-4o is OpenAI’s latest and most advanced AI model, offering faster, more powerful, and cost-efficient natural language processing. It can handle text, vision, and audio in real time, making it the first OpenAI model to process multimodal inputs natively. It’s significantly faster and cheaper than GPT-4 Turbo while improving accuracy, reasoning, and multilingual support.

OpenAI GPT-4o

GPT-4o is OpenAI’s latest and most advanced AI model, offering faster, more powerful, and cost-efficient natural language processing. It can handle text, vision, and audio in real time, making it the first OpenAI model to process multimodal inputs natively. It’s significantly faster and cheaper than GPT-4 Turbo while improving accuracy, reasoning, and multilingual support.

OpenAI - GPT 4.1

GPT-4.1 is OpenAI’s newest multimodal large language model, designed to deliver highly capable, efficient, and intelligent performance across a broad range of tasks. It builds on the foundation of GPT-4 and GPT-4 Turbo, offering enhanced reasoning, greater factual accuracy, and smoother integration with tools like code interpreters, retrieval systems, and image understanding. With native support for a 128K token context window, function calling, and robust tool usage, GPT-4.1 brings AI closer to behaving like a reliable, adaptive assistant—ready to work, build, and collaborate across tasks with speed and precision.

OpenAI - GPT 4.1

GPT-4.1 is OpenAI’s newest multimodal large language model, designed to deliver highly capable, efficient, and intelligent performance across a broad range of tasks. It builds on the foundation of GPT-4 and GPT-4 Turbo, offering enhanced reasoning, greater factual accuracy, and smoother integration with tools like code interpreters, retrieval systems, and image understanding. With native support for a 128K token context window, function calling, and robust tool usage, GPT-4.1 brings AI closer to behaving like a reliable, adaptive assistant—ready to work, build, and collaborate across tasks with speed and precision.

OpenAI - GPT 4.1

GPT-4.1 is OpenAI’s newest multimodal large language model, designed to deliver highly capable, efficient, and intelligent performance across a broad range of tasks. It builds on the foundation of GPT-4 and GPT-4 Turbo, offering enhanced reasoning, greater factual accuracy, and smoother integration with tools like code interpreters, retrieval systems, and image understanding. With native support for a 128K token context window, function calling, and robust tool usage, GPT-4.1 brings AI closer to behaving like a reliable, adaptive assistant—ready to work, build, and collaborate across tasks with speed and precision.

GPT-4o Realtime Preview is OpenAI’s latest and most advanced multimodal AI model—designed for lightning-fast, real-time interaction across text, vision, and audio. The "o" stands for "omni," reflecting its groundbreaking ability to understand and generate across multiple input and output types. With human-like responsiveness, low latency, and top-tier intelligence, GPT-4o Realtime Preview offers a glimpse into the future of natural AI interfaces. Whether you're building voice assistants, dynamic UIs, or smart multi-input applications, GPT-4o is the new gold standard in real-time AI performance.

OpenAI GPT 4o Real..

GPT-4o Realtime Preview is OpenAI’s latest and most advanced multimodal AI model—designed for lightning-fast, real-time interaction across text, vision, and audio. The "o" stands for "omni," reflecting its groundbreaking ability to understand and generate across multiple input and output types. With human-like responsiveness, low latency, and top-tier intelligence, GPT-4o Realtime Preview offers a glimpse into the future of natural AI interfaces. Whether you're building voice assistants, dynamic UIs, or smart multi-input applications, GPT-4o is the new gold standard in real-time AI performance.

OpenAI GPT 4o Real..

GPT-4o Realtime Preview is OpenAI’s latest and most advanced multimodal AI model—designed for lightning-fast, real-time interaction across text, vision, and audio. The "o" stands for "omni," reflecting its groundbreaking ability to understand and generate across multiple input and output types. With human-like responsiveness, low latency, and top-tier intelligence, GPT-4o Realtime Preview offers a glimpse into the future of natural AI interfaces. Whether you're building voice assistants, dynamic UIs, or smart multi-input applications, GPT-4o is the new gold standard in real-time AI performance.

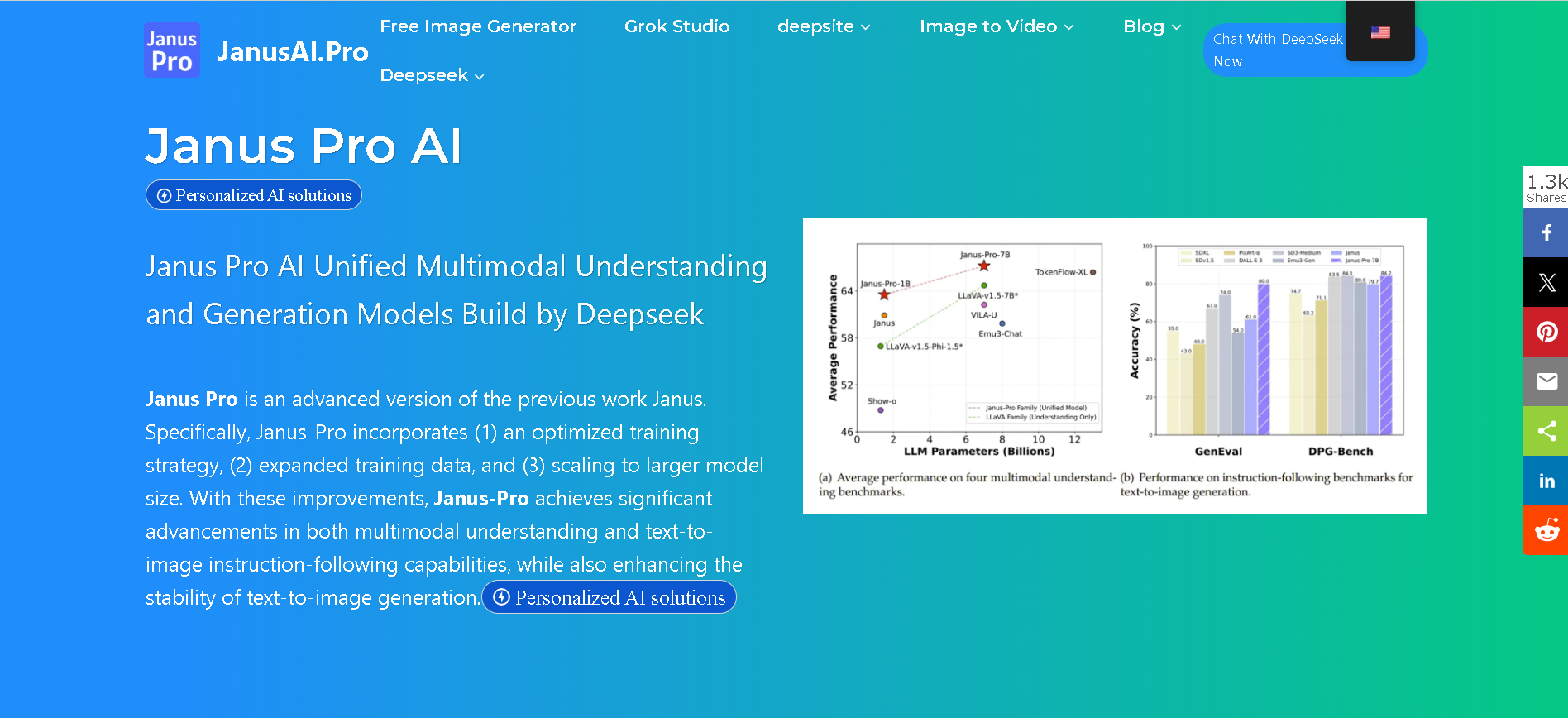

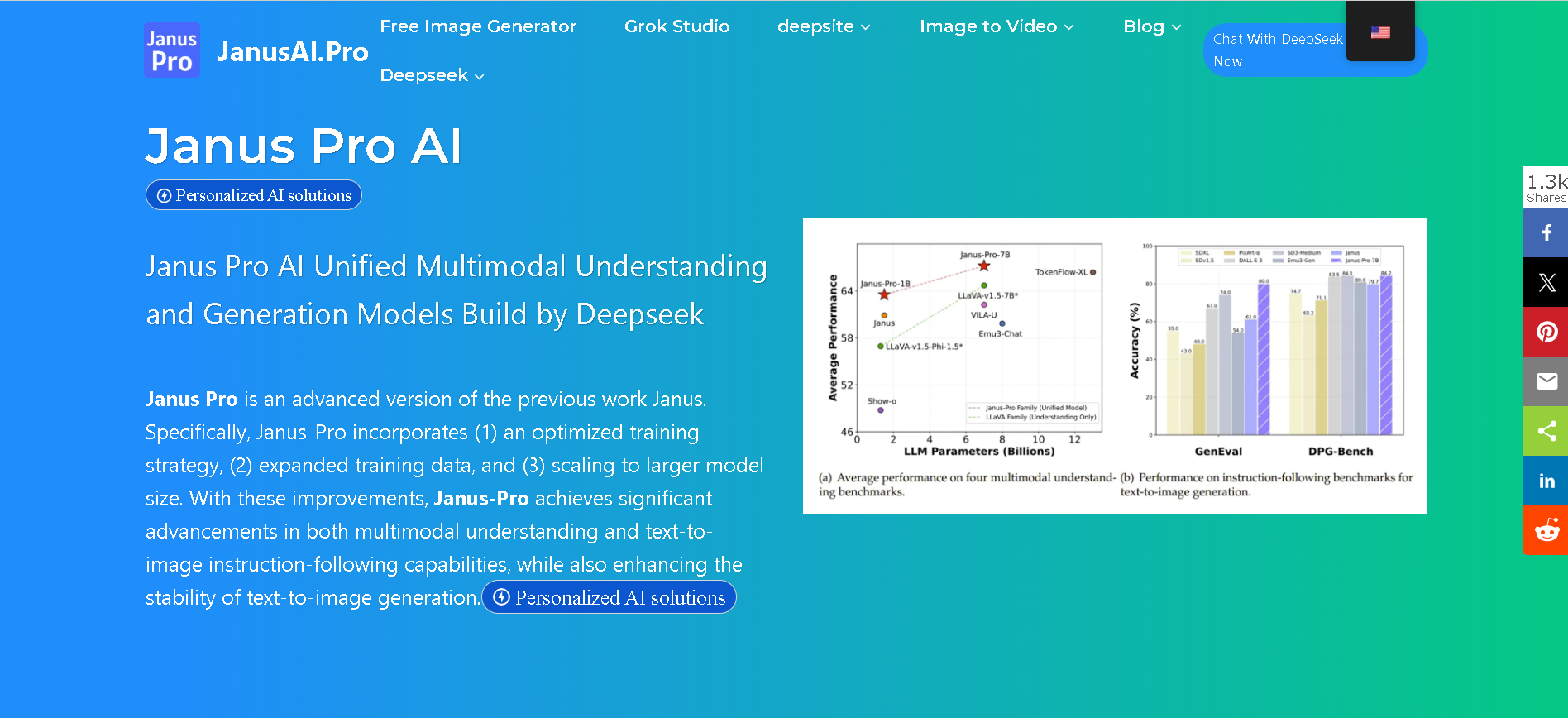

Janus-Pro-7B

anus Pro 7B is DeepSeek’s flagship open-source multimodal AI model, unifying vision understanding and text-to-image generation within a single transformer architecture. Built on DeepSeek‑LLM‑7B, it uses a decoupled visual encoding approach paired with SigLIP‑L and VQ tokenizer, delivering superior visual fidelity, prompt alignment, and stability across tasks—benchmarked ahead of OpenAI’s DALL‑E 3 and Stable Diffusion variants.

Janus-Pro-7B

anus Pro 7B is DeepSeek’s flagship open-source multimodal AI model, unifying vision understanding and text-to-image generation within a single transformer architecture. Built on DeepSeek‑LLM‑7B, it uses a decoupled visual encoding approach paired with SigLIP‑L and VQ tokenizer, delivering superior visual fidelity, prompt alignment, and stability across tasks—benchmarked ahead of OpenAI’s DALL‑E 3 and Stable Diffusion variants.

Janus-Pro-7B

anus Pro 7B is DeepSeek’s flagship open-source multimodal AI model, unifying vision understanding and text-to-image generation within a single transformer architecture. Built on DeepSeek‑LLM‑7B, it uses a decoupled visual encoding approach paired with SigLIP‑L and VQ tokenizer, delivering superior visual fidelity, prompt alignment, and stability across tasks—benchmarked ahead of OpenAI’s DALL‑E 3 and Stable Diffusion variants.

DeepSeek VL

DeepSeek VL is DeepSeek’s open-source vision-language model designed for real-world multimodal understanding. It employs a hybrid vision encoder (SigLIP‑L + SAM), processes high-resolution images (up to 1024×1024), and supports both base and chat variants across two sizes: 1.3B and 7B parameters. It excels on tasks like OCR, diagram reasoning, webpage parsing, and visual Q&A—while preserving strong language ability.

DeepSeek VL

DeepSeek VL is DeepSeek’s open-source vision-language model designed for real-world multimodal understanding. It employs a hybrid vision encoder (SigLIP‑L + SAM), processes high-resolution images (up to 1024×1024), and supports both base and chat variants across two sizes: 1.3B and 7B parameters. It excels on tasks like OCR, diagram reasoning, webpage parsing, and visual Q&A—while preserving strong language ability.

DeepSeek VL

DeepSeek VL is DeepSeek’s open-source vision-language model designed for real-world multimodal understanding. It employs a hybrid vision encoder (SigLIP‑L + SAM), processes high-resolution images (up to 1024×1024), and supports both base and chat variants across two sizes: 1.3B and 7B parameters. It excels on tasks like OCR, diagram reasoning, webpage parsing, and visual Q&A—while preserving strong language ability.

grok-2-vision

Grok 2 Vision (also known as Grok‑2‑Vision‑1212 or grok‑2‑vision‑latest) is xAI’s multimodal variant of Grok 2, designed specifically for advanced image understanding and generation. Launched in December 2024, it supports joint text+image inputs up to 32,768 tokens, excelling in visual math reasoning (MathVista), document question answering (DocVQA), object recognition, and style analysis—while also offering photorealistic image creation via the FLUX.1 model.

grok-2-vision

Grok 2 Vision (also known as Grok‑2‑Vision‑1212 or grok‑2‑vision‑latest) is xAI’s multimodal variant of Grok 2, designed specifically for advanced image understanding and generation. Launched in December 2024, it supports joint text+image inputs up to 32,768 tokens, excelling in visual math reasoning (MathVista), document question answering (DocVQA), object recognition, and style analysis—while also offering photorealistic image creation via the FLUX.1 model.

grok-2-vision

Grok 2 Vision (also known as Grok‑2‑Vision‑1212 or grok‑2‑vision‑latest) is xAI’s multimodal variant of Grok 2, designed specifically for advanced image understanding and generation. Launched in December 2024, it supports joint text+image inputs up to 32,768 tokens, excelling in visual math reasoning (MathVista), document question answering (DocVQA), object recognition, and style analysis—while also offering photorealistic image creation via the FLUX.1 model.

grok-2-vision-1212

Grok 2 Vision – 1212 is a December 2024 release of xAI’s multimodal large language model, fine-tuned specifically for image understanding and generation. It supports combined text and image inputs (up to 32,768 tokens) and excels in document question answering, visual math reasoning, object recognition, and photorealistic image generation powered by FLUX.1. It also supports API deployment for developers and enterprises.

grok-2-vision-1212

Grok 2 Vision – 1212 is a December 2024 release of xAI’s multimodal large language model, fine-tuned specifically for image understanding and generation. It supports combined text and image inputs (up to 32,768 tokens) and excels in document question answering, visual math reasoning, object recognition, and photorealistic image generation powered by FLUX.1. It also supports API deployment for developers and enterprises.

grok-2-vision-1212

Grok 2 Vision – 1212 is a December 2024 release of xAI’s multimodal large language model, fine-tuned specifically for image understanding and generation. It supports combined text and image inputs (up to 32,768 tokens) and excels in document question answering, visual math reasoning, object recognition, and photorealistic image generation powered by FLUX.1. It also supports API deployment for developers and enterprises.

Meta Llama 3.2 Vis..

Llama 3.2 Vision is Meta’s first open-source multimodal Llama model series, released on September 25, 2024. Available in 11 B and 90 B parameter sizes, it merges advanced image understanding with a massive 128 K‑token text context. Optimized for vision reasoning, captioning, document QA, and visual math tasks, it outperforms many closed-source multimodal models.

Meta Llama 3.2 Vis..

Llama 3.2 Vision is Meta’s first open-source multimodal Llama model series, released on September 25, 2024. Available in 11 B and 90 B parameter sizes, it merges advanced image understanding with a massive 128 K‑token text context. Optimized for vision reasoning, captioning, document QA, and visual math tasks, it outperforms many closed-source multimodal models.

Meta Llama 3.2 Vis..

Llama 3.2 Vision is Meta’s first open-source multimodal Llama model series, released on September 25, 2024. Available in 11 B and 90 B parameter sizes, it merges advanced image understanding with a massive 128 K‑token text context. Optimized for vision reasoning, captioning, document QA, and visual math tasks, it outperforms many closed-source multimodal models.

Mistral Small 3.1

Mistral Small 3.1 is the March 17, 2025 update to Mistral AI's open-source 24B-parameter small model. It offers instruction-following, multimodal vision understanding, and an expanded 128K-token context window, delivering performance on par with or better than GPT‑4o Mini, Gemma 3, and Claude 3.5 Haiku—all while maintaining fast inference speeds (~150 tokens/sec) and running on devices like an RTX 4090 or a 32 GB Mac.

Mistral Small 3.1

Mistral Small 3.1 is the March 17, 2025 update to Mistral AI's open-source 24B-parameter small model. It offers instruction-following, multimodal vision understanding, and an expanded 128K-token context window, delivering performance on par with or better than GPT‑4o Mini, Gemma 3, and Claude 3.5 Haiku—all while maintaining fast inference speeds (~150 tokens/sec) and running on devices like an RTX 4090 or a 32 GB Mac.

Mistral Small 3.1

Mistral Small 3.1 is the March 17, 2025 update to Mistral AI's open-source 24B-parameter small model. It offers instruction-following, multimodal vision understanding, and an expanded 128K-token context window, delivering performance on par with or better than GPT‑4o Mini, Gemma 3, and Claude 3.5 Haiku—all while maintaining fast inference speeds (~150 tokens/sec) and running on devices like an RTX 4090 or a 32 GB Mac.

Mistral Pixtral La..

Pixtral Large is Mistral AI’s latest multimodal powerhouse, launched November 18, 2024. Built atop the 123B‑parameter Mistral Large 2, it features a 124B‑parameter multimodal decoder paired with a 1B‑parameter vision encoder, and supports a massive 128K‑token context window—enabling it to process up to 30 high-resolution images or ~300-page documents.

Mistral Pixtral La..

Pixtral Large is Mistral AI’s latest multimodal powerhouse, launched November 18, 2024. Built atop the 123B‑parameter Mistral Large 2, it features a 124B‑parameter multimodal decoder paired with a 1B‑parameter vision encoder, and supports a massive 128K‑token context window—enabling it to process up to 30 high-resolution images or ~300-page documents.

Mistral Pixtral La..

Pixtral Large is Mistral AI’s latest multimodal powerhouse, launched November 18, 2024. Built atop the 123B‑parameter Mistral Large 2, it features a 124B‑parameter multimodal decoder paired with a 1B‑parameter vision encoder, and supports a massive 128K‑token context window—enabling it to process up to 30 high-resolution images or ~300-page documents.

Grok 4

Grok 4 is the latest and most intelligent AI model developed by xAI, designed for expert-level reasoning and real-time knowledge integration. It combines large-scale reinforcement learning with native tool use, including code interpretation, web browsing, and advanced search capabilities, to provide highly accurate and up-to-date responses. Grok 4 excels across diverse domains such as math, coding, science, and complex reasoning, supporting multimodal inputs like text and vision. With its massive 256,000-token context window and advanced toolset, Grok 4 is built to push the boundaries of AI intelligence and practical utility for both developers and enterprises.

Grok 4

Grok 4 is the latest and most intelligent AI model developed by xAI, designed for expert-level reasoning and real-time knowledge integration. It combines large-scale reinforcement learning with native tool use, including code interpretation, web browsing, and advanced search capabilities, to provide highly accurate and up-to-date responses. Grok 4 excels across diverse domains such as math, coding, science, and complex reasoning, supporting multimodal inputs like text and vision. With its massive 256,000-token context window and advanced toolset, Grok 4 is built to push the boundaries of AI intelligence and practical utility for both developers and enterprises.

Grok 4

Grok 4 is the latest and most intelligent AI model developed by xAI, designed for expert-level reasoning and real-time knowledge integration. It combines large-scale reinforcement learning with native tool use, including code interpretation, web browsing, and advanced search capabilities, to provide highly accurate and up-to-date responses. Grok 4 excels across diverse domains such as math, coding, science, and complex reasoning, supporting multimodal inputs like text and vision. With its massive 256,000-token context window and advanced toolset, Grok 4 is built to push the boundaries of AI intelligence and practical utility for both developers and enterprises.

Cohere - Command R..

Command R+ is Cohere’s latest state-of-the-art language model built for enterprise, optimized specifically for retrieval-augmented generation (RAG) workloads at scale. Available first on Microsoft Azure, Command R+ handles complex business data, integrates with secure infrastructure, and powers advanced AI workflows with fast, accurate responses. Designed for reliability, customization, and seamless deployment, it offers enterprises the ability to leverage cutting-edge generative and retrieval technologies across regulated industries.

Cohere - Command R..

Command R+ is Cohere’s latest state-of-the-art language model built for enterprise, optimized specifically for retrieval-augmented generation (RAG) workloads at scale. Available first on Microsoft Azure, Command R+ handles complex business data, integrates with secure infrastructure, and powers advanced AI workflows with fast, accurate responses. Designed for reliability, customization, and seamless deployment, it offers enterprises the ability to leverage cutting-edge generative and retrieval technologies across regulated industries.

Cohere - Command R..

Command R+ is Cohere’s latest state-of-the-art language model built for enterprise, optimized specifically for retrieval-augmented generation (RAG) workloads at scale. Available first on Microsoft Azure, Command R+ handles complex business data, integrates with secure infrastructure, and powers advanced AI workflows with fast, accurate responses. Designed for reliability, customization, and seamless deployment, it offers enterprises the ability to leverage cutting-edge generative and retrieval technologies across regulated industries.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai