- Multinational Enterprises: Gain multilingual AI support across Korean, English, Japanese, and more.

- Developers & Data Scientists: Build complex reasoning and tool-using applications with high accuracy.

- Financial & Legal Professionals: Leverage domain-specific NLP expertise for specialized document understanding.

- Healthcare & Insurance Sectors: Automate document analysis, summarization, and data interpretation.

- AI Integrators & Researchers: Explore efficient, cutting-edge AI with strong problem-solving capabilities.

How to Use Solar Pro 2?

- Access via Cloud or On-Premises: Deploy through cloud marketplaces or enterprise on-prem solutions.

- Engage Reasoning Mode: Enable advanced multi-step reasoning for complex problem solving.

- Utilize Tool Use Features: Integrate with external tools to automate workflows and data interactions.

- Apply Across Domains: Use the model for multilingual processing, summarization, Q&A, and coding tasks.
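The steps above mention REST API access and a switchable reasoning mode. The sketch below shows how a chat request might be assembled and sent using only the Python standard library. It is a minimal sketch under stated assumptions, not Upstage's documented client: the endpoint URL `https://api.upstage.ai/v1/chat/completions`, the model id `solar-pro2`, the OpenAI-compatible request shape, and the `reasoning_effort` field are all assumptions to check against the official API reference before use.

```python
import json
import urllib.request
from typing import Optional

# ASSUMPTIONS (not confirmed by this page): an OpenAI-compatible chat
# completions endpoint at API_URL, the model id "solar-pro2", and a
# "reasoning_effort" field that switches on multi-step reasoning mode.
API_URL = "https://api.upstage.ai/v1/chat/completions"
MODEL_ID = "solar-pro2"


def build_chat_request(prompt: str, reasoning: bool = False,
                       tools: Optional[list] = None) -> dict:
    """Build the JSON body for a single chat turn; nothing is sent here."""
    body: dict = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    if reasoning:
        # Assumed switch for reasoning mode.
        body["reasoning_effort"] = "high"
    if tools:
        # OpenAI-style function definitions, assumed to be accepted as-is.
        body["tools"] = tools
    return body


def send_chat_request(body: dict, api_key: str) -> dict:
    """POST the body to API_URL and return the decoded JSON response."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect or log. A typical call would be `send_chat_request(build_chat_request("Summarize this clause...", reasoning=True), api_key)`, with the API key read from an environment variable rather than hard-coded.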

Key Features

- Best-in-Class Korean Performance: Leads multiple Korean NLP benchmarks, even against larger models.

- Advanced Multi-Step Reasoning: Excels in math, logic, and code with strong benchmark results.

- Superior Tool Use: Autonomously calls external tools to deliver actionable business outcomes.

- Parameter Efficiency: Outperforms many larger models, demonstrating smarter, leaner AI design.

- Cross-Domain Expertise: Specialized handling of legal, financial, and medical language and concepts.
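The "Superior Tool Use" point depends on a loop that runs in the calling application: the model asks for a tool, the application executes it, and the result is sent back to the model. The sketch below shows that dispatch step. It assumes Solar Pro 2 returns OpenAI-style `tool_calls` in its assistant message (an assumption; this page does not document the response format), and the `count_words` tool and `TOOLS` registry are hypothetical, for illustration only.

```python
import json
from typing import Callable


# Hypothetical local tool; the name and behavior are illustrative only.
def count_words(text: str) -> dict:
    return {"count": len(text.split())}


# Registry mapping tool names (as declared to the model) to Python callables.
TOOLS: dict[str, Callable[..., dict]] = {"count_words": count_words}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each tool call in an OpenAI-style assistant message and
    return "tool" messages to append to the conversation history."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        # In this message format, arguments arrive as a JSON-encoded string.
        args = json.loads(call["function"].get("arguments") or "{}")
        fn = TOOLS.get(name)
        # Unknown tools produce an error payload instead of raising.
        output = fn(**args) if fn else {"error": f"unknown tool: {name}"}
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results
```

After dispatching, the application would append the assistant message and the returned tool messages to the conversation and send another request so the model can use the results. Returning an error payload for unknown tools lets the model recover rather than crashing the loop.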

Pros

- Powerful multilingual abilities, led by strong Korean-language performance.

- Strong reasoning and complex problem-solving efficiency.

- Integrated, autonomous tool use for enhanced workflow automation.

- Compact model size with enterprise-level performance and flexibility.

Cons

- Smaller parameter count may limit raw generative creativity compared to larger models.

- Enterprise adoption may require expertise for custom integrations.

- Focused primarily on Korean and key Asian languages; other languages receive less emphasis.

- Ongoing updates are needed to expand industry-specific applications and tool integrations.

Developers

Custom

- Pay-as-you-go

- Public models (see detailed pricing)

- REST API

- Public cloud

- Help center

Enterprise

Custom

- All public models

- Custom LLM and RAG

- Custom key information extraction

- Custom document classification

- REST API

- Custom integrations

- Serverless (API)

- Private cloud

- On-premises

- Upstage Fine-tuning Studio

- Upstage Labeling Studio

- Personal information masking

- Usage and resource monitoring

- SSO

- 24/7 priority support

- 99% uptime SLA

- Dedicated success manager

Similar AI Tools

OpenAI Dall-E 2

DALL·E 2 is an AI model developed by OpenAI that generates images from text descriptions (prompts). It improves on its predecessor, DALL·E 1, by producing higher-resolution, more realistic, and more creative images from user input. The model can also edit regions of existing images (inpainting), extend images beyond their original borders (outpainting), and create artistic interpretations of text descriptions. ❗ Note: OpenAI has phased out DALL·E 2 in favor of DALL·E 3, which offers more advanced image generation.

Grok 3

Grok 3 is the latest flagship chatbot from Elon Musk’s xAI, which the company describes as "the world’s smartest AI." It was trained on a 200,000‑GPU supercomputer using, according to xAI, ten times the computing power of Grok 2. It offers two reasoning modes, Think and Big Brain, plus DeepSearch, a contextual research tool that searches the web and X. Grok 3 excels in math, science, coding, and truth-seeking tasks while keeping a fast, lively conversational style.

Grok 2

Grok 2 is xAI’s second-generation chatbot. It extends Grok’s capabilities with real-time web access, multimodal features (text, vision, and image generation via FLUX.1), and improved reasoning performance. It’s available to X Premium and Premium+ users and through xAI’s enterprise API.

Grok 2

Grok 2 is xAI’s second-generation chatbot that extends Grok’s capabilities to include real-time web access, multimodal output (text, vision, image generation via FLUX.1), and improved reasoning performance. It’s available to X Premium and Premium+ users and through xAI’s enterprise API.

Grok 2

Grok 2 is xAI’s second-generation chatbot that extends Grok’s capabilities to include real-time web access, multimodal output (text, vision, image generation via FLUX.1), and improved reasoning performance. It’s available to X Premium and Premium+ users and through xAI’s enterprise API.

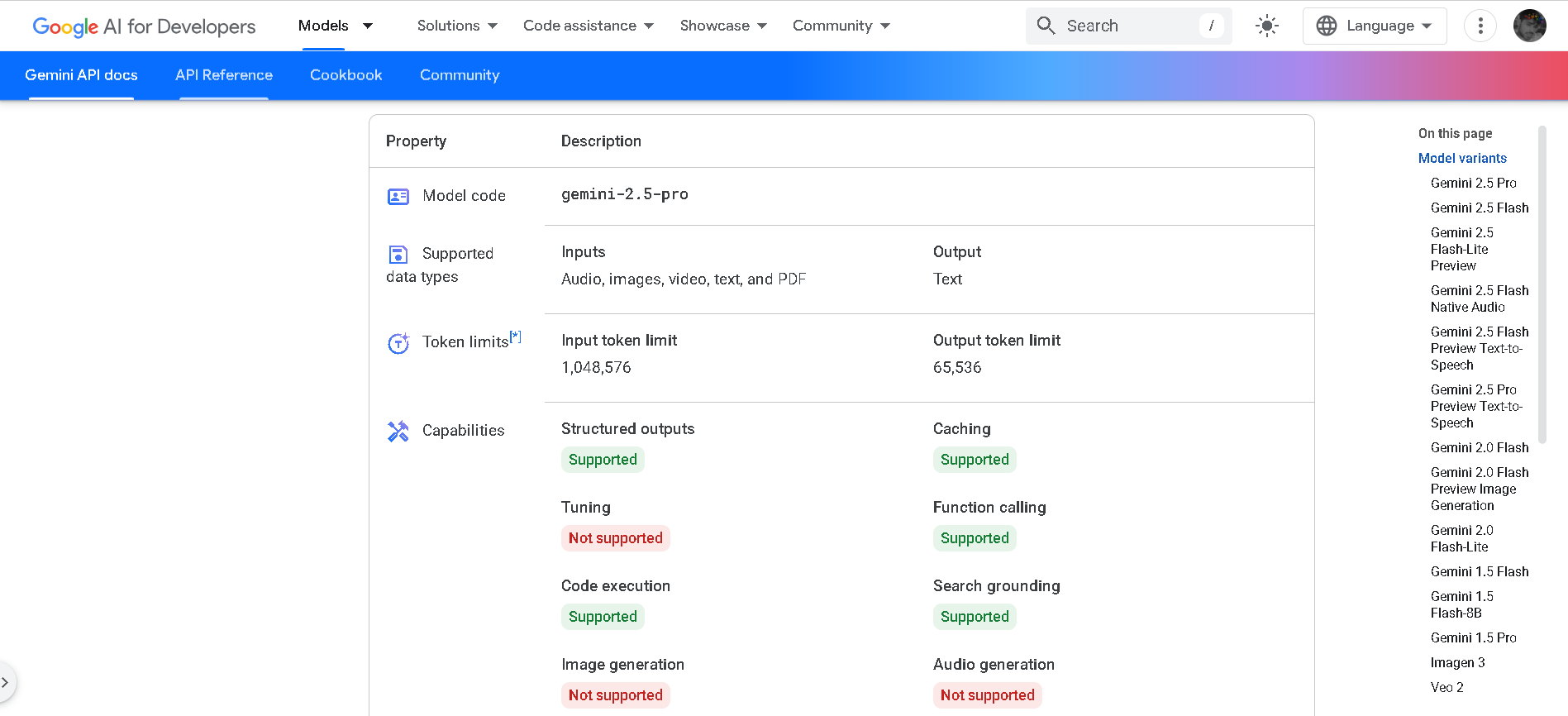

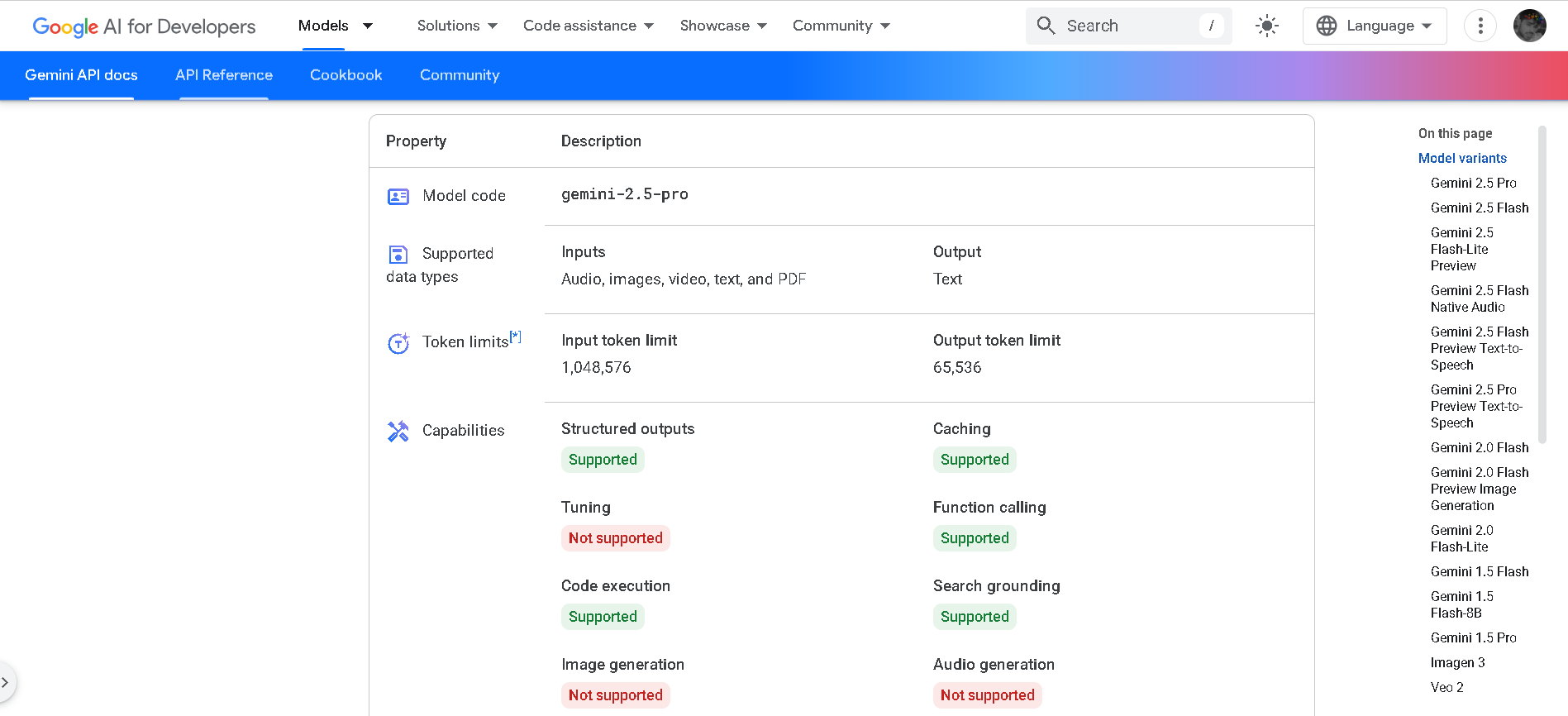

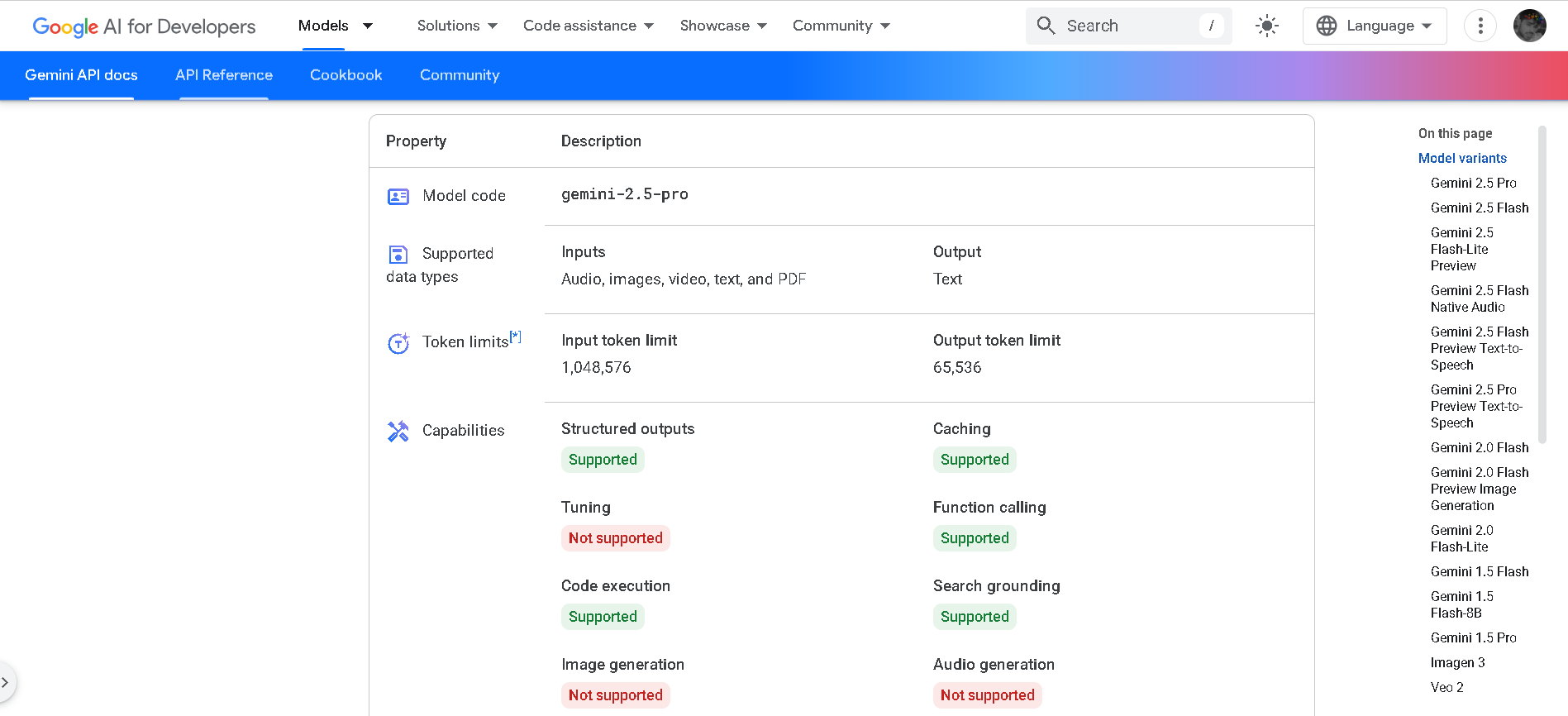

Gemini 2.5 Pro

Gemini 2.5 Pro is Google DeepMind’s advanced hybrid-reasoning AI model, designed to think through a problem before responding. It accepts multimodal inputs (text, images, audio, video, and code), offers fast inference, ships with a 1 million-token context window (with 2 million tokens announced), and achieves top-tier results on math, science, and coding benchmarks.

grok-2-latest

Grok 2 is xAI’s second-generation chatbot model, launched in August 2024 as a substantial upgrade over Grok 1.5. It delivers frontier-level performance in chat, coding, reasoning, vision tasks, and image generation via the FLUX.1 system. On leaderboards, it outscored Claude 3.5 Sonnet and GPT‑4 Turbo, with strong results in GPQA (56%), MMLU (87.5%), MATH (76.1%), HumanEval (88.4%), MathVista, and DocVQA benchmarks.

grok-2-vision-late..

Grok 2 Vision is xAI’s advanced vision-enabled variant of Grok 2, launched in December 2024. It accepts joint text and image inputs within a 32K-token context window and combines image understanding, document QA, visual math reasoning (e.g., as measured by MathVista and DocVQA), and photorealistic image generation via FLUX.1 (later complemented by Aurora). It achieves state-of-the-art results on multimodal tasks.

grok-2-vision-1212

Grok 2 Vision – 1212 is a December 2024 release of xAI’s multimodal large language model, fine-tuned specifically for image understanding and generation. It supports combined text and image inputs (up to 32,768 tokens) and excels in document question answering, visual math reasoning, object recognition, and photorealistic image generation powered by FLUX.1. It also supports API deployment for developers and enterprises.

grok-2-image-lates..

Grok 2 Image (a.k.a. Grok 2‑image‑latest) is xAI’s vision-forward extension of its Grok 2 model. Released in December 2024, it merges photorealistic image generation via Aurora with strong image understanding capabilities, supporting object detection, chart analysis, OCR, and visual reasoning tasks. It operates as a unified multimodal pipeline that accepts text and image inputs within a 32K-token context.

grok-2-image-1212

Grok 2 Image 1212 (also known as grok-2-image-1212) is xAI’s December 2024 release of its unified image generation and understanding model. Built on Grok 2, it combines Aurora-powered photorealistic image creation with strong multimodal comprehension (image editing, vision QA, chart interpretation, and document analysis) within a single API and a 32,768-token context.

Meta Llama 3.3

Llama 3.3 is Meta’s instruction-tuned, text-only large language model released on December 6, 2024, available in a 70B-parameter size. It matches the performance of much larger models using significantly fewer parameters, is multilingual across eight key languages, and supports a massive 128,000-token context window—ideal for handling long-form documents, codebases, and detailed reasoning tasks.

Mistral Pixtral La..

Pixtral Large is Mistral AI’s latest multimodal powerhouse, launched November 18, 2024. Built atop Mistral Large 2, it pairs a 123B‑parameter multimodal decoder with a 1B‑parameter vision encoder (124B parameters in total) and supports a 128K‑token context window, enough to process 30 high-resolution images or a document of roughly 300 pages.

Upstage - Informat..

Upstage Information Extract is a powerful, schema-agnostic document data extraction solution that requires zero training or setup. It intelligently extracts structured insights from any document type—PDFs, scanned images, Office files, multi-page documents, and more—understanding both explicit content and implicit contextual meaning such as totals from line items. Designed for enterprise-scale workflows, it offers high accuracy, dynamic schema alignment, and seamless API-first integration with ERP, CRM, cloud storage, and automation platforms, enabling reliable and customizable data extraction without the complexity typical of traditional IDP or generic LLM approaches.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai