- AI Engineers: To automate tedious tasks and focus on core AI development.

- Machine Learning Engineers: To build, test, and deploy AI models more efficiently.

- Data Scientists: To refine prompts and evaluate LLM performance effectively.

- CTOs and Tech Leads: To ensure the robustness, reliability, and scalability of AI initiatives.

- AI Development Teams: For collaborative development and maintaining high standards in AI production.

- MLOps Professionals: To integrate automated workflows for deployment, monitoring, and maintenance of AI systems.

- Companies Deploying AI Solutions: To reduce development time, costs, and risks associated with AI failures.

How to Use Teammately.ai?

- Automated Prompt Generation: Input your desired AI task, and Teammately automatically generates optimized prompts based on best practices for various foundation models.

- Self-Refinement & Evaluation: If initial evaluation results are poor, the system refines the AI automatically. It also synthesizes high-quality test cases and uses LLM judges for comprehensive evaluation.

- Automated RAG Building: Provide your documents, and Teammately automates chunking, embedding, indexing, and can even clean "dirty" documents or rewrite chunks for better contextual retrieval.

- AI Observability: Monitor your AI in production using interpretable, multi-dimensional LLM judges that identify problems and provide insights.

- Deployment & Management: Use Teammately to containerize your models, prompts, and retrieval engines, simplifying deployment and management with minimal latency.

- Compare & Failover: Compare multiple AI architectures simultaneously and set up automatic failover to secondary models/prompts in case a primary foundation model fails.
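The failover idea in the last step can be illustrated with a minimal Python sketch. This is not Teammately's implementation or API; `call_with_failover`, `primary_model`, and `secondary_model` are hypothetical names used only to show the general pattern of trying a primary backend and falling back to a secondary one when it fails.

```python
from typing import Callable, List, Sequence


def call_with_failover(prompt: str, backends: Sequence[Callable[[str], str]]) -> str:
    """Try each backend in order and return the first successful response."""
    errors: List[Exception] = []
    for backend in backends:
        try:
            return backend(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(exc)
    raise RuntimeError(f"all {len(backends)} backends failed: {errors}")


# Hypothetical stand-ins for a primary and a secondary model call.
def primary_model(prompt: str) -> str:
    raise TimeoutError("primary model unavailable")


def secondary_model(prompt: str) -> str:
    return f"[secondary] {prompt}"


result = call_with_failover("Summarize this ticket", [primary_model, secondary_model])
print(result)
```

Ordering the backends in a list keeps the policy explicit: the first entry is the primary, and each later entry is used only when every earlier one has raised an error.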

- End-to-End Automation: Automates nearly every stage of AI development, from prompt engineering to deployment and observability, significantly speeding up the process.

- Focus on Production Robustness: Designed specifically to make production-level AI more reliable, reducing common failure points.

- Intelligent RAG Building: Automates complex RAG processes, including cleaning and rewriting document chunks for superior contextual retrieval.

- Interpretable AI Observability: Uses multi-dimensional LLM judges to provide clear, actionable insights into AI performance in production.

- Automatic Failover: Features the ability to automatically switch to secondary models and prompts if a primary foundation model fails, ensuring continuous operation.

- AI-Powered Documentation: Generates comprehensive documentation automatically, fostering better team collaboration.

- Model Architecture Comparison: Allows for simultaneous comparison of multiple AI architectures.
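The chunking step mentioned under RAG building can be sketched as follows. This is a generic fixed-size splitter with overlap, written as an assumption about what such a step might look like; it is not Teammately's method, and `chunk_text`, `chunk_size`, and `overlap` are hypothetical names.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character chunks that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far the window advances each time
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


chunks = chunk_text("abcdefghij", chunk_size=4, overlap=1)
print(chunks)
```

Overlap lets a sentence that straddles a chunk boundary appear in both neighbors, at the cost of storing some text twice. Production pipelines often split on sentence or section boundaries instead of raw character counts.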

- Automates significant portions of the AI development lifecycle.

- Focuses on building robust, production-ready AI, reducing failures.

- Advanced evaluation with high-quality test cases and LLM judges.

- Intelligent RAG building, including cleaning "dirty" documents.

- Automated failover capability enhances system reliability.

- Provides interpretable AI observability for production monitoring.

- Generates AI-powered documentation for better collaboration.

- Allows for simultaneous comparison of different AI architectures.

- Highly specialized tool with a potentially steep learning curve for those new to advanced AI engineering.

- Effectiveness depends heavily on the quality of the underlying foundation models and the input data.

- Pricing information is not readily available on the primary page.

- Integration with existing complex enterprise systems might require significant setup.

Free: $0.00

- Limited access to generating prompts, knowledge, test cases, and judges

- Limited access to test inference

- Limited access to deployed endpoints

Plus: $25.00

- Extended access to generating prompts, knowledge, test cases, and judges

- Extended access to test inference

- Deploy live AI endpoints and retrieval database with secret management for connecting your own API key (additional charges may apply for overage)

- Early access to new features

Business: Coming Soon

- Always-on observability agent (coming soon)

- Data warehouse for knowledge management (coming soon)

- Model fine-tuning & training agent (coming soon)

- Access to collaboration features & organizational controls (coming soon)

- Earlier access to new features

Enterprise: Custom

- Single Sign-On (SSO)

- Service Level Agreement (SLA)

- Dedicated server & region

- Dedicated support

Similar AI Tools

Tavily

Tavily is a specialized search engine meticulously optimized for Large Language Models (LLMs) and AI agents. Its primary goal is to provide real-time, accurate, and unbiased information, significantly enhancing the ability of AI applications to retrieve and process data efficiently. Unlike traditional search APIs, Tavily focuses on delivering highly relevant content snippets and structured data that are specifically tailored for AI workflows like Retrieval-Augmented Generation (RAG), aiming to reduce AI hallucinations and enable better decision-making.

LM Studio

LM Studio is a local AI toolkit that empowers users to discover, download, and run Large Language Models (LLMs) directly on their personal computers. It provides a user-friendly interface to chat with models, set up a local LLM server for applications, and ensures complete data privacy as all processes occur locally on your machine.

i10X

i10X is an all‑in‑one AI workspace that consolidates access to top-tier large language models—such as ChatGPT, Claude, Gemini, Perplexity, Grok, and Flux—alongside over 500 specialized AI agents, all under a single subscription that starts at around $8/month. It’s designed to replace multiple costly subscriptions, offering a no-code platform where users can browse, launch, and manage AI tools for writing, design, marketing, legal tasks, productivity and more all in one streamlined environment. Expert prompt engineers curate and test each agent, ensuring consistent performance across categories. i10X simplifies workflows by combining model flexibility, affordability, and breadth of tools to support creative, professional, and technical tasks efficiently.

WebDev Arena

LMArena is an open, crowdsourced platform for evaluating large language models (LLMs) based on human preferences. Rather than relying purely on automated benchmarks, it presents paired responses from different models to users, who vote for which is better. These votes build live leaderboards, revealing which models perform best in real-use scenarios. Key features include prompt-to-leaderboard comparison, transparent evaluation methods, style control for how responses are formatted, and auditability of feedback data. The platform is particularly valuable for researchers, developers, and AI labs that want to understand how their models compare when judged by real people, not just metrics.

ChatBetter

ChatBetter is an AI platform designed to unify access to all major large language models (LLMs) within a single chat interface. Built for productivity and accuracy, ChatBetter leverages automatic model selection to route every query to the most capable AI—eliminating guesswork about which model to use. Users can directly compare responses from OpenAI, Anthropic, Google, Meta, DeepSeek, Perplexity, Mistral, xAI, and Cohere models side by side, or merge answers for comprehensive insights. The system is crafted for teams and individuals alike, enabling complex research, planning, and writing tasks to be accomplished efficiently in one place.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Ask Any Model

AskAnyModel is a unified AI interface that allows users to interact with multiple leading AI models — such as GPT, Claude, Gemini, and Mistral — from a single platform. It eliminates the need for multiple subscriptions and interfaces by bringing top AI models into one streamlined environment. Users can compare responses, analyze outputs, and select the best AI model for specific tasks like content creation, coding, data analysis, or research. AskAnyModel empowers individuals and teams to harness AI diversity efficiently, offering advanced tools for prompt testing, model benchmarking, and workflow integration.

LLM Chat

LLMChat is a privacy-focused, open-source AI chatbot platform designed for advanced research, agentic workflows, and seamless interaction with multiple large language models (LLMs). It offers users a minimalistic and intuitive interface enabling deep exploration of complex topics with modes like Deep Research and Pro Search, which incorporates real-time web integration for current data. The platform emphasizes user privacy by storing all chat history locally in the browser, ensuring conversations never leave the device. LLMChat supports many popular LLM providers such as OpenAI, Anthropic, Google, and more, allowing users to customize AI assistants with personalized instructions and knowledge bases for a wide variety of applications ranging from research to content generation and coding assistance.

Awan LLM

Awan LLM is a cost-effective, unlimited token large language model inference API platform designed for power users and developers. Unlike traditional API providers that charge per token, Awan LLM offers a monthly subscription model that enables users to send and receive unlimited tokens up to the model's context limit. It supports unrestricted use of LLM models without censorship or constraints. The platform is built on privately owned data centers and GPUs, allowing it to offer efficient and scalable AI services. Awan LLM supports numerous use cases including AI assistants, AI agents, roleplaying, data processing, code completion, and building AI-powered applications without worrying about token limits or costs.

potpie.ai

Potpie.ai is an innovative AI agent platform that enables developers to create intelligent agents for their codebase in just minutes, transforming software development through automated understanding and interaction. Designed specifically for codebase analysis, it allows users to deploy AI agents that comprehend entire repositories, perform tasks like code reviews, bug detection, refactoring suggestions, and feature implementation autonomously. The platform emphasizes speed and simplicity, requiring minimal setup to integrate with existing projects on GitHub or local environments. Key capabilities include natural language queries for code exploration, multi-agent collaboration for complex workflows, and seamless integration with popular IDEs like VS Code. By leveraging advanced LLMs fine-tuned for code, Potpie.ai boosts productivity for solo developers and teams alike, making AI-assisted coding accessible without deep expertise.

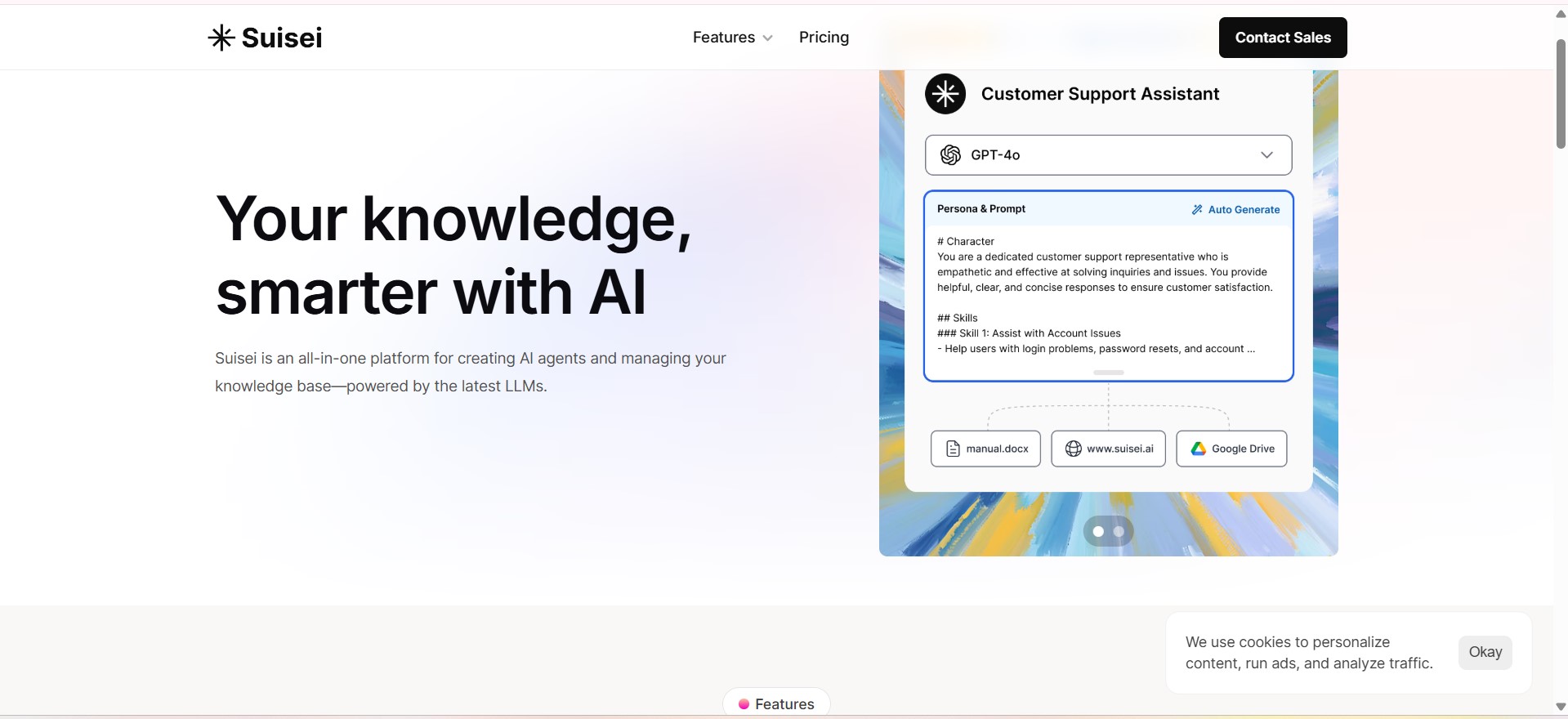

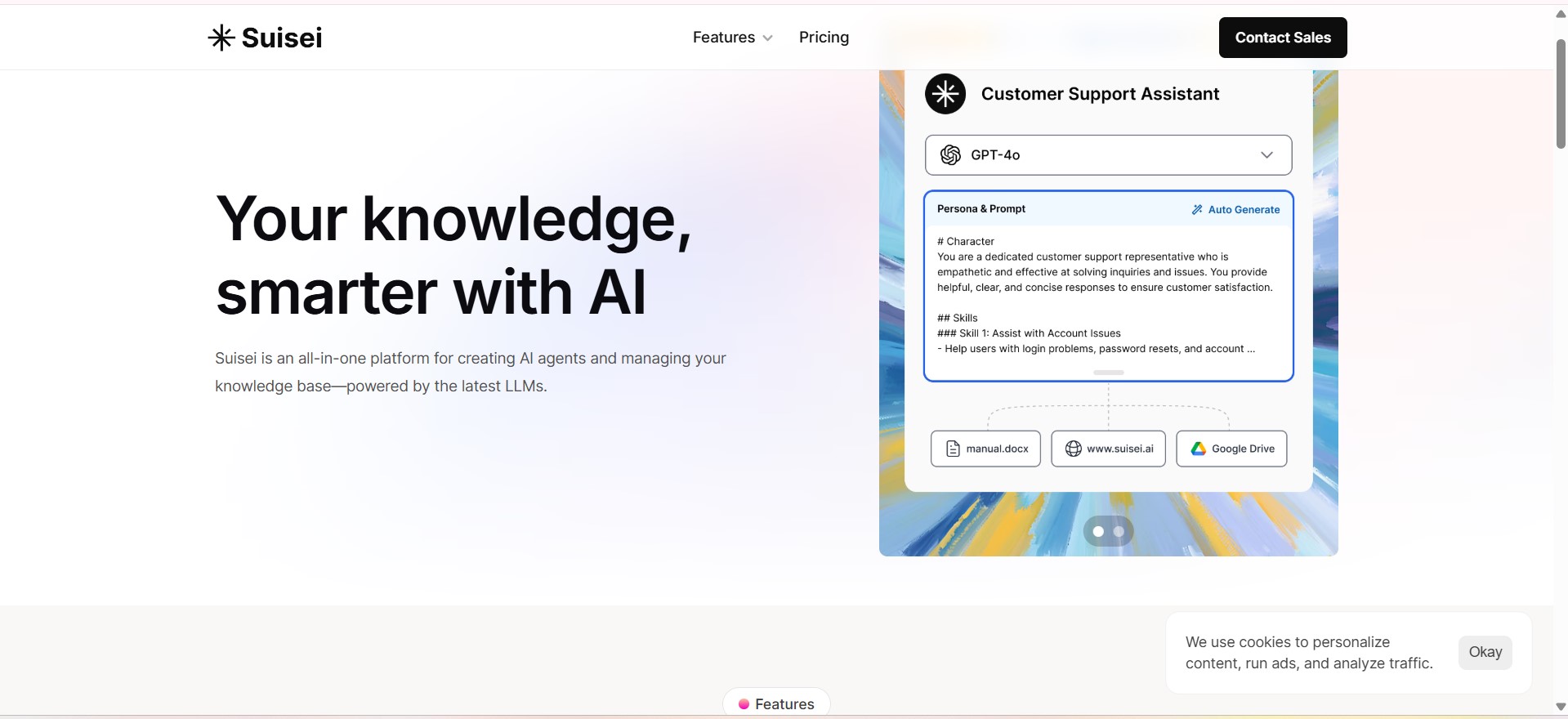

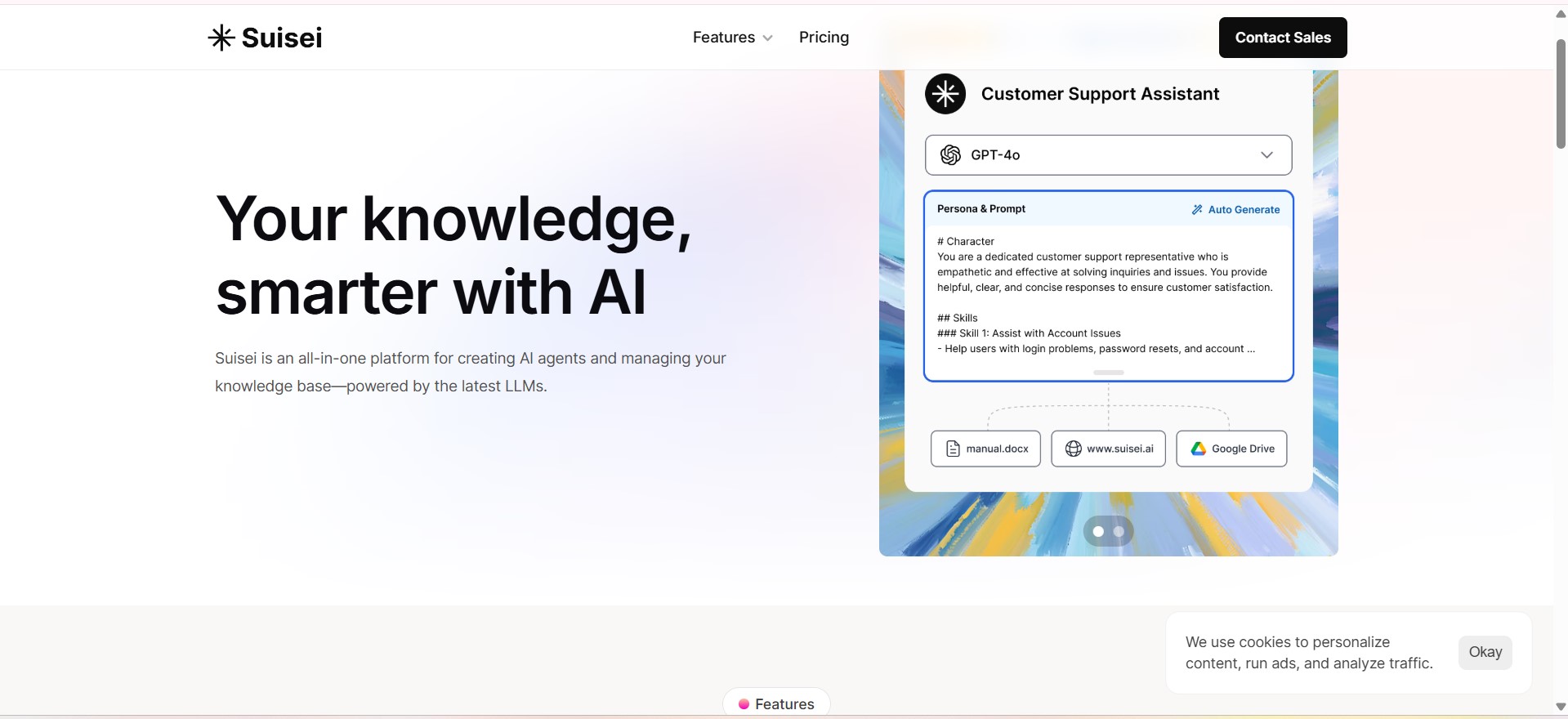

Suisei AI

Suisei AI is a modern AI platform designed to help individuals and businesses build, manage, and deploy intelligent AI agents tailored to their needs. At its core, Suisei positions itself as an all-in-one workspace for AI agents and knowledge management, powered by the latest large language models (LLMs) such as GPT, Claude, and Google’s Gemini. The platform’s primary focus is on AI agents: automated assistants that can perform a wide variety of tasks. Users can create custom AI agents without writing code, using intuitive templates or starting from scratch.

Convolut

Convolut is a smart platform called ConAir that acts as a central hub for managing, organizing, and streaming context snippets to Large Language Models and AI agents. It lets users store knowledge bases, retrieve info quickly, and export data to supercharge AI interactions with relevant context. Perfect for developers and teams, it handles everything from basic storage to advanced organization, making AI responses more accurate and nuanced by feeding in the right details every time. Teams love how it streamlines workflows, cuts down on manual data hunting, and boosts AI performance across projects.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai