- Researchers & AI Labs: Ideal for developing next-gen models, complex reasoning, and cutting-edge experiments.

- Enterprises: Intended as backend infrastructure to train or fine-tune distilled models (like Maverick) at scale.

- Developers & Engineers: Access via cloud or platform partnerships once released, especially for high-stakes AI workflows.

- Academia: Enables study of ultra-large model behavior, multimodal fusion, and teacher-student distillation.

- Cloud Providers: Will power backend systems and provide inference/dataset services for other models.

How to Use Llama 4 Behemoth?

- Still in Training: The model is still undergoing safety, alignment, and performance refinement and is not publicly available.

- Distillation Role: Used internally to train and improve smaller Llama 4 models like Maverick and Scout.

- Awaiting Release: Public access may come through select cloud platforms or research collaborations once performance and reliability are verified.

- Upcoming Features: Expected to offer multimodal, long-context (1–2 million tokens or more), and top-tier reasoning capabilities.

- Massive Scale: 2 trillion parameters—one of the largest MoE-based language models ever built.

- STEM Benchmark Leader: Meta's reported internal results are 95.0 on MATH-500, 82.2 on MMLU Pro, 73.7 on GPQA Diamond, and 85.8 on Multilingual MMLU, which it says surpass several existing flagship models on STEM benchmarks.

- Teacher Backbone: Powers the development and fine-tuning of smaller, deployable Llama 4 models.

- Potentially Unmatched Context: Expected to support 1–2 million-token or larger context windows.

- Cautious Rollout: Meta is delaying public release to ensure strong alignment, performance consistency, and readiness for enterprise standards.

- Record-breaking scale and internal performance on STEM/multilingual benchmarks

- Serves as a powerful teacher model to enhance smaller Llama models

- Expected to support ultra-long context and multimodality

- Will be available via select cloud/research platforms when ready

- Still unreleased—delayed to fall 2025 or later

- Operationally expensive and infrastructure-heavy, limiting early access

- Internal performance gaps and scale complexities have raised concerns

Similar AI Tools

OpenAI GPT 4 Turbo

GPT-4 Turbo is OpenAI’s enhanced version of GPT-4, engineered to deliver faster performance, extended context handling, and more cost-effective usage. Released in November 2023, GPT-4 Turbo boasts a 128,000-token context window, allowing it to process and generate longer and more complex content. It supports multimodal inputs, including text and images, making it versatile for various applications.

Poe AI

Poe.com is a comprehensive AI chatbot aggregation platform developed by Quora, providing users with unified access to a wide range of conversational AI models from various leading providers, including OpenAI, Anthropic, Google, and Meta. It simplifies the process of discovering and interacting with different AI chatbots and also empowers users to create and monetize their own custom AI bots.

Claude Sonnet 4

Claude Sonnet 4 is Anthropic’s hybrid-reasoning AI model that combines fast, near-instant responses with visible, step-by-step thinking in a single model. It delivers frontier-level performance in coding, reasoning, vision, and tool usage, along with a 200K-token context window and cost-effective pricing.

Perplexity AI

Perplexity AI is a powerful AI‑powered answer engine and search assistant launched in December 2022. It combines real‑time web search with large language models (like GPT‑4.1, Claude 4, Sonar), delivering direct answers with in‑text citations and multi‑turn conversational context.

Chat 01 AI

Chat01.ai is a platform that offers free and unlimited chat with OpenAI o1, a series of AI models designed for complex reasoning and problem-solving in areas such as science, coding, and math. The models take a "think more before responding" approach: they try different strategies and recognize their own mistakes.

Grok 4

Grok 4 is the latest and most intelligent AI model developed by xAI, designed for expert-level reasoning and real-time knowledge integration. It combines large-scale reinforcement learning with native tool use, including code interpretation, web browsing, and advanced search capabilities, to provide highly accurate and up-to-date responses. Grok 4 excels across diverse domains such as math, coding, science, and complex reasoning, supporting multimodal inputs like text and vision. With its massive 256,000-token context window and advanced toolset, Grok 4 is built to push the boundaries of AI intelligence and practical utility for both developers and enterprises.

NVidia Llama Nemot..

Llama Nemotron Ultra is NVIDIA’s open-source reasoning AI model engineered for deep problem solving, advanced coding, and scientific analysis across business, enterprise, and research applications. It leads open models in intelligence and reasoning benchmarks, excelling at scientific, mathematical, and programming challenges. Building on Meta Llama 3.1, it is trained for complex, human-aligned chat, agentic workflows, and retrieval-augmented generation. Llama Nemotron Ultra is designed to be efficient, cost-effective, and highly adaptable, available via Hugging Face and as an NVIDIA NIM inference microservice for scalable deployment.

NVidia Llama Nemot..

Llama Nemotron Ultra is NVIDIA’s open-source reasoning AI model engineered for deep problem solving, advanced coding, and scientific analysis across business, enterprise, and research applications. It leads open models in intelligence and reasoning benchmarks, excelling at scientific, mathematical, and programming challenges. Building on Meta Llama 3.1, it is trained for complex, human-aligned chat, agentic workflows, and retrieval-augmented generation. Llama Nemotron Ultra is designed to be efficient, cost-effective, and highly adaptable, available via Hugging Face and as an NVIDIA NIM inference microservice for scalable deployment.

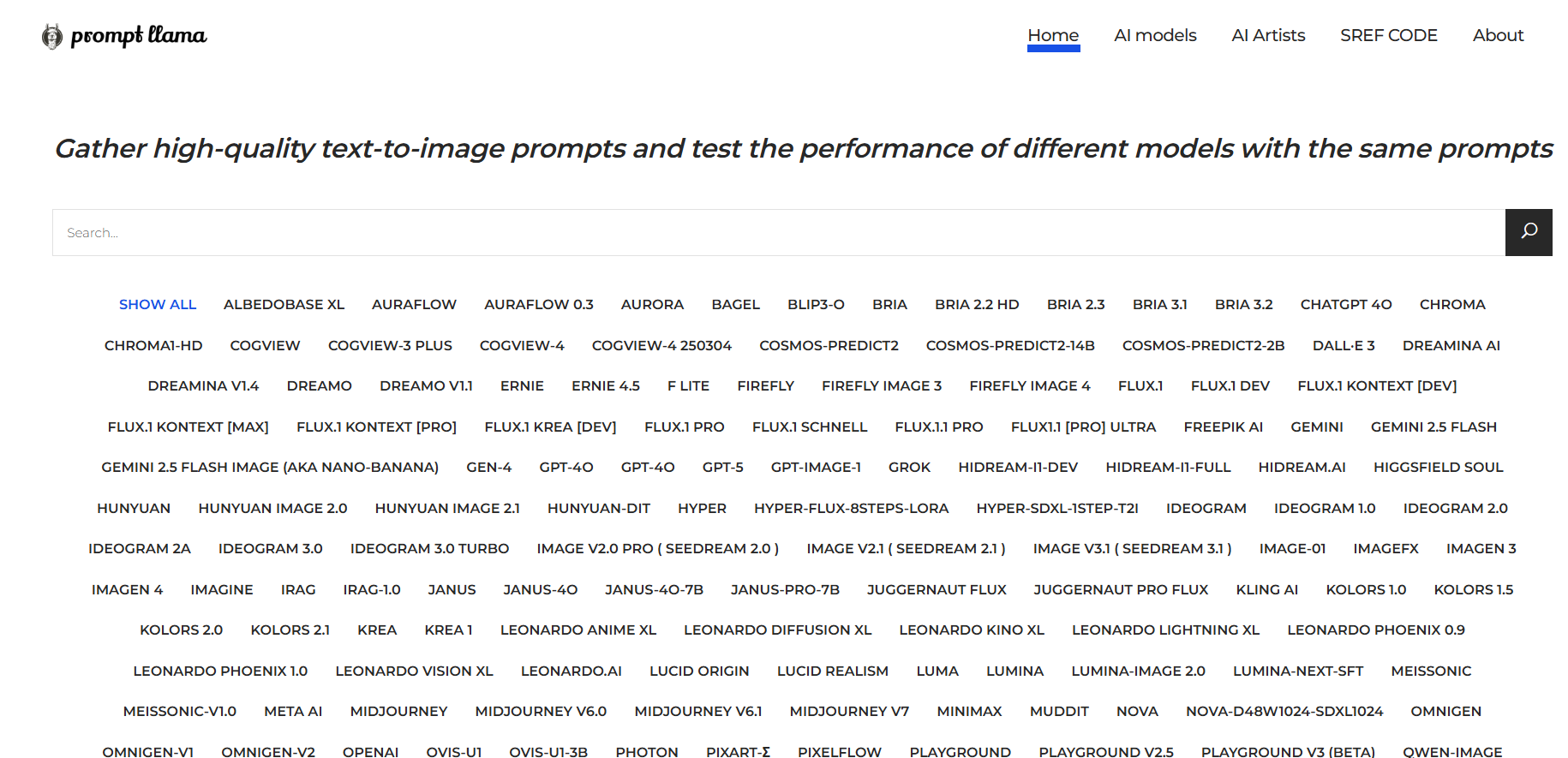

Prompt Llama

Prompt Llama is a tool for creatives and AI enthusiasts that lets you gather high-quality text-to-image prompts and test how different generative AI models respond to the same prompts. It’s made for comparing model outputs side by side, so you can see strengths and weaknesses, styles, fidelity, and prompt adherence across models without doing the prompt-engineering yourself every time.

Unsloth AI

Unsloth.AI is an open-source platform designed to accelerate and simplify the fine-tuning of large language models (LLMs). By leveraging manual mathematical derivations, custom GPU kernels, and efficient optimization techniques, Unsloth achieves up to 30x faster training speeds compared to traditional methods, without compromising model accuracy. It supports a wide range of popular models, including Llama, Mistral, Gemma, and BERT, and works seamlessly on various GPUs, from consumer-grade Tesla T4 to high-end H100, as well as AMD and Intel GPUs. Unsloth empowers developers, researchers, and AI enthusiasts to fine-tune models efficiently, even with limited computational resources, democratizing access to advanced AI model customization. With a focus on performance, scalability, and flexibility, Unsloth.AI is suitable for both academic research and commercial applications, helping users deploy specialized AI solutions faster and more effectively.

LM Studio

LM Studio is a local large language model (LLM) platform that lets users download and run AI language models such as Llama, MPT, and Gemma directly on their own computers. It supports Mac, Windows, and Linux. LM Studio focuses on privacy and control: models run locally without relying on cloud-based services, so data stays on the user’s device. It offers an easy-to-install interface with step-by-step setup guidance, and once a model is downloaded, developers, researchers, and AI enthusiasts can use it without an internet connection.

GlobalGPT

GlobalGPT is an all-in-one AI platform that unifies leading models for writing, coding, research, image generation, and video creation—accessible through a single account and subscription. It brings together top models like GPT-5, GPT-4.1, GPT-4o, GPT-o3, Claude Opus 4.1, Claude Sonnet 4, Gemini 2.5 Pro, DeepSeek V3, Unikorn (MJ-like) V7, Flux, Ideogram, and Google Veo 3 so you can chat, draft content, analyze documents, generate images, and produce cinematic videos without switching tools. Designed for over 2 million users worldwide, GlobalGPT also includes advanced research agents powered by GPT-4o and perplexity for deep web analysis, PDF summarization, and market insights, all wrapped in a clean interface with flexible credit-based plans.

polychat

polychat is a multi-LLM chat platform that lets you interact with models from providers such as OpenAI, Anthropic, Perplexity, Google, DeepSeek, and Meta (Llama) in one interface, with free trials and no rate limits. Switch between models seamlessly to get the best response to each query, whether for coding, writing, or research, with plans starting at $5/month. It's designed for power users who want flexibility without juggling multiple apps or quickly hitting usage caps.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai