- Data Analysts & Scientists: Query multimodal data and build AI apps using simple SQL functions.

- Developers & Engineers: Access LLMs and APIs for gen AI without infrastructure setup.

- Business Intelligence Teams: Create trusted enterprise agents for insights and actions.

- IT & Security Professionals: Govern AI workloads with unified security and compliance.

- Enterprise Leaders: Scale analytics across structured and unstructured data securely.

How to Use Snowflake Cortex AI

- Connect Your Data: Unify structured and unstructured data within Snowflake's platform.

- Query with SQL: Use functions for multimodal analysis, embeddings, and agent orchestration.

- Build AI Apps: Leverage APIs and serverless LLMs like Claude or Llama for inference.

- Govern & Scale: Apply policies, monitor usage, and ensure secure processing at scale.

Key Features

- SQL-Native AI: Build gen AI apps directly in SQL, without separate application code or data movement.

- Multimodal Processing: Analyze text, images, and audio alongside structured data.

- Data Agents: Orchestrate across data sources to deliver high-quality, trusted insights automatically.

- Secure Perimeter: All AI runs within Snowflake's governance boundary, with built-in guardrails.

- Serverless LLMs: Access top models such as Mistral Large 2 without managing infrastructure.
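Embedding-based search, one of the SQL functions listed under "Query with SQL", is what lets agents pull relevant context out of unstructured data. As a rough illustration, this Python sketch ranks documents by cosine similarity, the measure Snowflake exposes in SQL as `VECTOR_COSINE_SIMILARITY`. The three-dimensional vectors are invented toy values; real embeddings (for example from `SNOWFLAKE.CORTEX.EMBED_TEXT_768`) have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero vector has no direction")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: list[float],
                       docs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (doc_name, score) pairs, most similar first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy 3-dimensional "embeddings"; the values are invented for illustration.
docs = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.2],
    "warranty terms": [0.5, 0.5, 0.0],
}
query = [1.0, 0.0, 0.0]  # pretend embedding of "How do I get my money back?"
for name, score in rank_by_similarity(query, docs):
    print(f"{name}: {score:.3f}")
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common default for comparing text embeddings.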

Pros

- Processes multimodal data efficiently on one platform.

- SQL simplicity speeds up AI app development.

- Strong governance keeps enterprise data secure during AI use.

- Scales serverlessly, with no setup required for LLMs and agents.

Cons

- Requires a commitment to the Snowflake platform for full access.

- Limited to the supported LLMs, without custom model uploads.

- Advanced agent orchestration features have a learning curve.

- Costs scale with data volume and AI inference usage.

Pricing

Standard

$2.00 per credit

All core platform functionality with fully managed elastic compute

Security with automatic encryption of all data

Snowpark

Data sharing

Optimized storage with compression and Time Travel

+ more

Enterprise

$3.00 per credit

All Standard Edition features, plus:

Ability to use multi-cluster compute

Granular governance and privacy controls

Extended Time Travel windows

+ more

Business Critical

$4.00 per credit

All Enterprise Edition features, plus:

Tri-Secret Secure

Access to private connectivity

Failover and failback for backup and disaster recovery

+ more

Virtual Private Snowflake

Contact Sales
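The edition prices above can be turned into a rough compute estimate. This Python sketch assumes the listed prices are USD per credit (actual rates vary by cloud provider and region), that standard warehouses consume 1 credit per hour at X-Small and double with each size step, and that compute is billed per second with a 60-second minimum each time a warehouse resumes. It covers warehouse compute only; Cortex AI function usage is metered separately, based on tokens processed.

```python
# Credits per hour for standard warehouses; each size step doubles consumption.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16,
                    "2XL": 32, "3XL": 64, "4XL": 128}

# Edition list prices from the pricing above, assumed to be USD per credit.
PRICE_PER_CREDIT = {"Standard": 2.00, "Enterprise": 3.00, "Business Critical": 4.00}

# Per-second billing with a 60-second minimum each time a warehouse resumes.
MINIMUM_BILLED_SECONDS = 60


def estimate_warehouse_cost(size: str, run_seconds: float, edition: str) -> float:
    """Estimate the USD cost of one continuous warehouse run after a resume."""
    if run_seconds < 0:
        raise ValueError("run_seconds must be non-negative")
    billed_seconds = max(run_seconds, MINIMUM_BILLED_SECONDS)
    credits = CREDITS_PER_HOUR[size] * billed_seconds / 3600
    return credits * PRICE_PER_CREDIT[edition]


# A Medium warehouse running for 30 minutes on the Enterprise edition.
cost = estimate_warehouse_cost("M", 30 * 60, "Enterprise")
print(f"${cost:.2f}")  # prints "$6.00"
```

In this example the Medium warehouse consumes 4 credits per hour for half an hour, so 2 credits at $3.00 each. A 10-second query on an X-Small Standard warehouse is still billed for the 60-second minimum.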

Similar AI Tools

Inweave

Inweave is an AI tool designed to help startups and scaleups automate their workflows. It allows users to create, deploy, and manage tailored AI assistants for a variety of tasks and business processes. By offering flexible model selection and robust API support, Inweave enables businesses to seamlessly integrate AI into their existing applications, boosting productivity and efficiency.

Okibi AI

Okibi is a no-code web platform that lets users build and deploy AI agents simply by describing what they need in plain language. Instead of writing boilerplate code, managing integrations, or orchestrating multiple services manually, you use natural language prompts to define the agent's behavior. Okibi handles the architecture behind the scenes, including browser automation, conditional logic, human-in-the-loop interaction, tool calling, and initial evaluations. The platform offers a visual template gallery for getting started quickly and a chat interface for refining or iterating on an agent's design. It is built for speed and accessibility, so non-technical users, teams with limited engineering bandwidth, and product builders can create internal automations for prospect qualification, invoice generation, meeting prep, content workflows, and similar agentic tasks without hiring developers.

Mirai

TryMirai is an on-device AI infrastructure platform that lets developers integrate high-performance AI models directly into their apps with minimal latency, full data privacy, and no inference costs. Its optimized model library spans sizes such as 0.3B, 0.5B, 1B, 3B, and 7B parameters, so teams can match a model to their business goals while balancing efficiency and adaptability. It offers a smart routing engine to balance performance, privacy, and cost, plus SDKs for Apple platforms (with Android support upcoming) to simplify integration. Users can deploy AI capabilities such as summarization, classification, general chat, and custom use cases without cloud offloading, which reduces dependence on network connectivity and protects user data.

SiliconFlow

SiliconFlow is an AI infrastructure platform built for developers and enterprises who want to deploy, run, and fine-tune large language models (LLMs) and multimodal models efficiently. It offers a unified stack for inference, model hosting, and acceleration, so you don't have to manage the infrastructure yourself. The platform supports many open-source and commercial models, with high throughput, low latency, autoscaling, and flexible deployment options (serverless, reserved GPUs, private cloud). It also emphasizes cost-effectiveness, data security, and feature-rich tooling such as OpenAI-compatible APIs, fine-tuning, monitoring, and scalability.

Kiro AI

Kiro.dev is an AI-powered code generation tool designed to accelerate software development. It uses machine learning models to help developers write code faster, more efficiently, and with fewer errors. Its features include code completion, code generation from natural language prompts, and code explanation, making it useful for developers of all skill levels.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

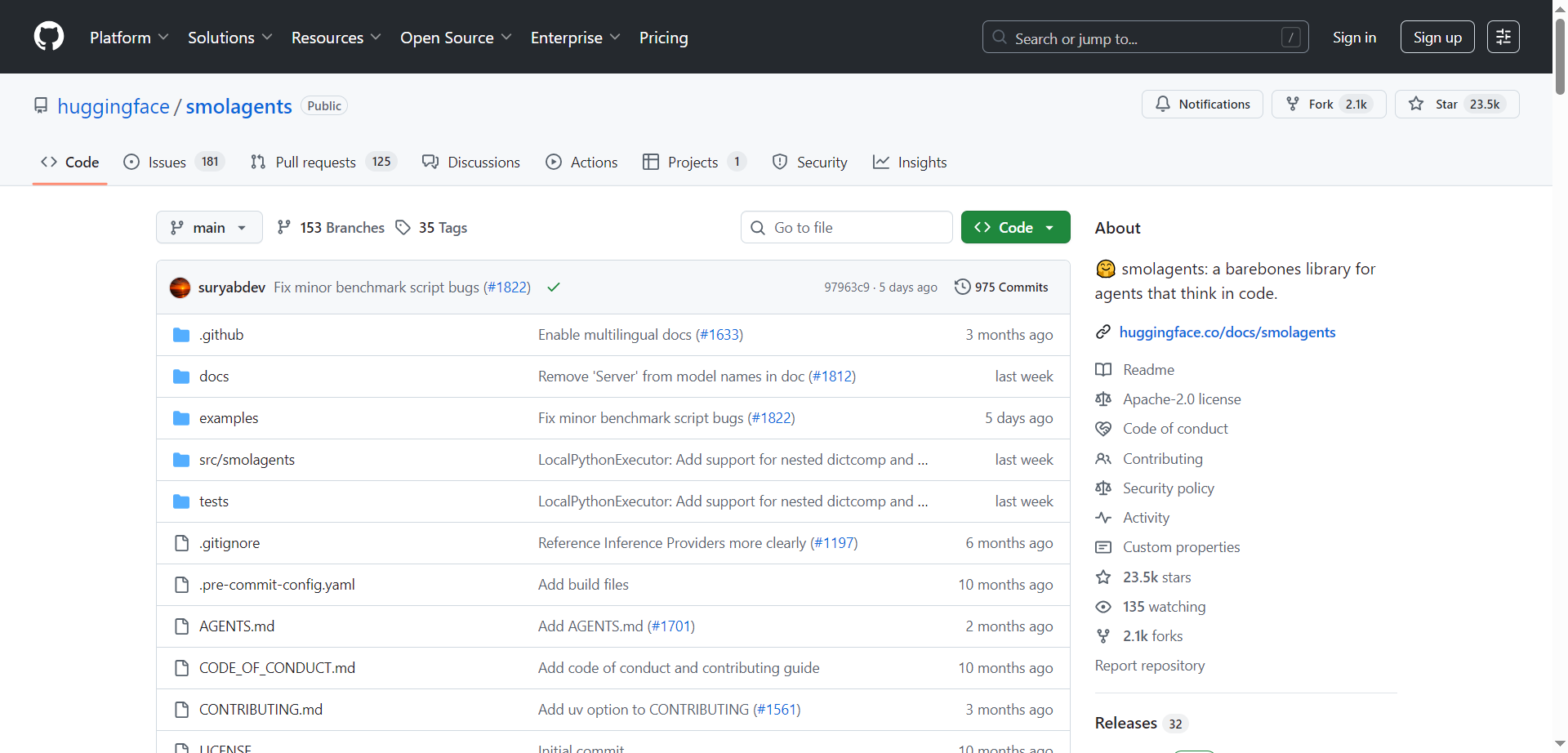

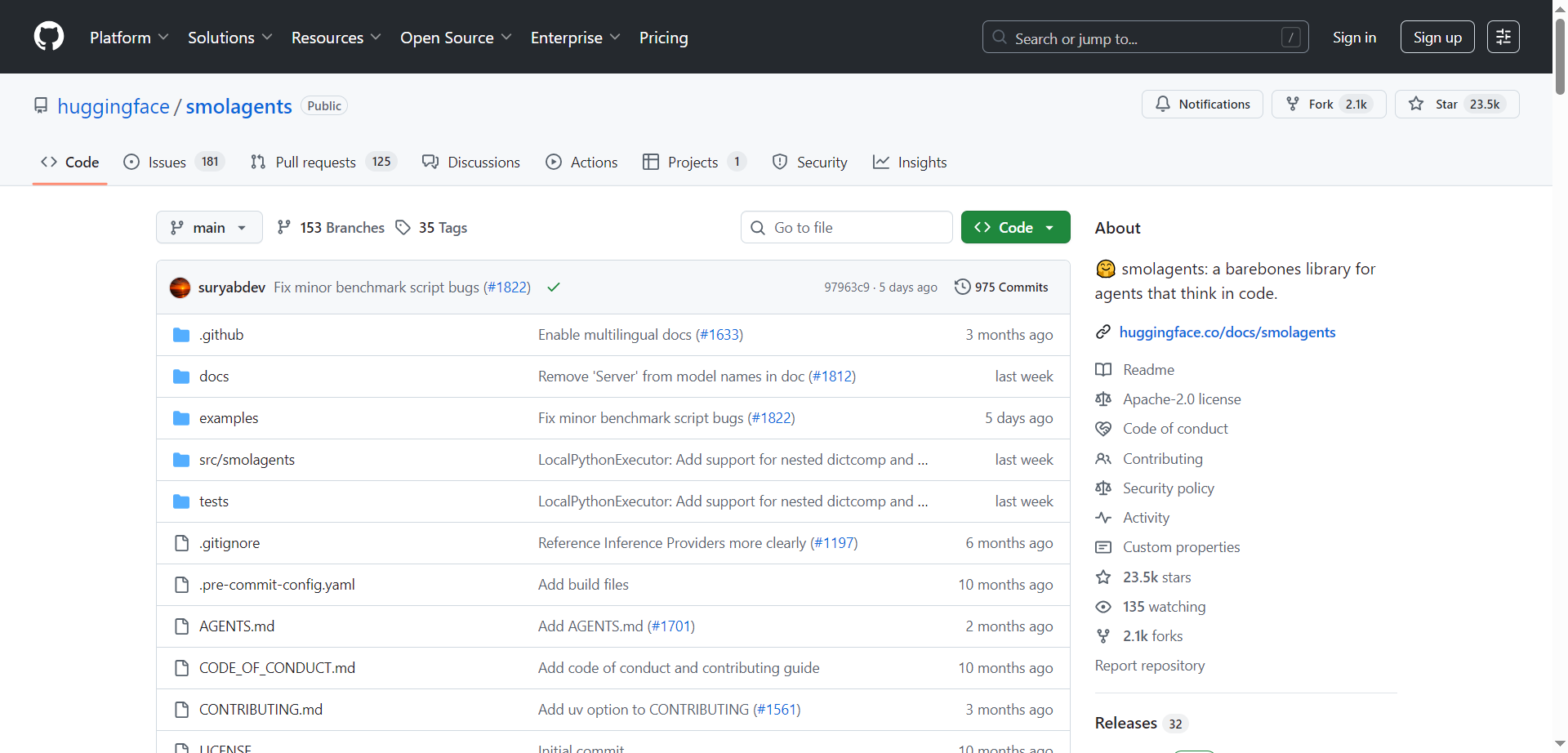

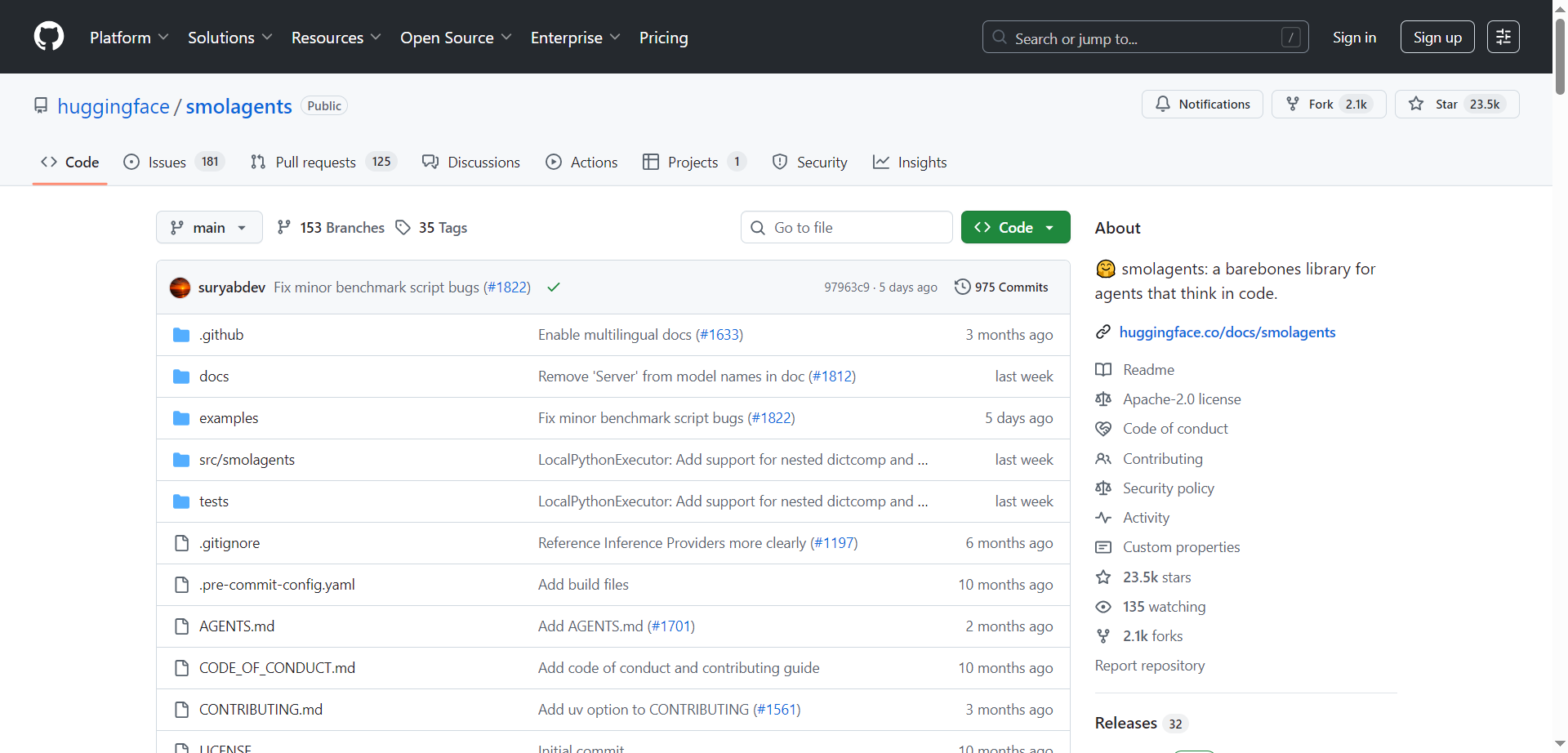

Smolagents

Smolagents is a minimalist yet powerful Python library for building intelligent agents that think and act through code. Its agents write their actions as Python code snippets, which keeps the agent's logic clear and easy to customize. The framework is model-agnostic, supporting large language models (LLMs) ranging from local Transformers models to hosted APIs such as OpenAI's. With sandboxed execution for security, tool integrations, and support for multiple input types including text, vision, and audio, the library aims to make agent development accessible and efficient for a wide range of AI applications.

Langchain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

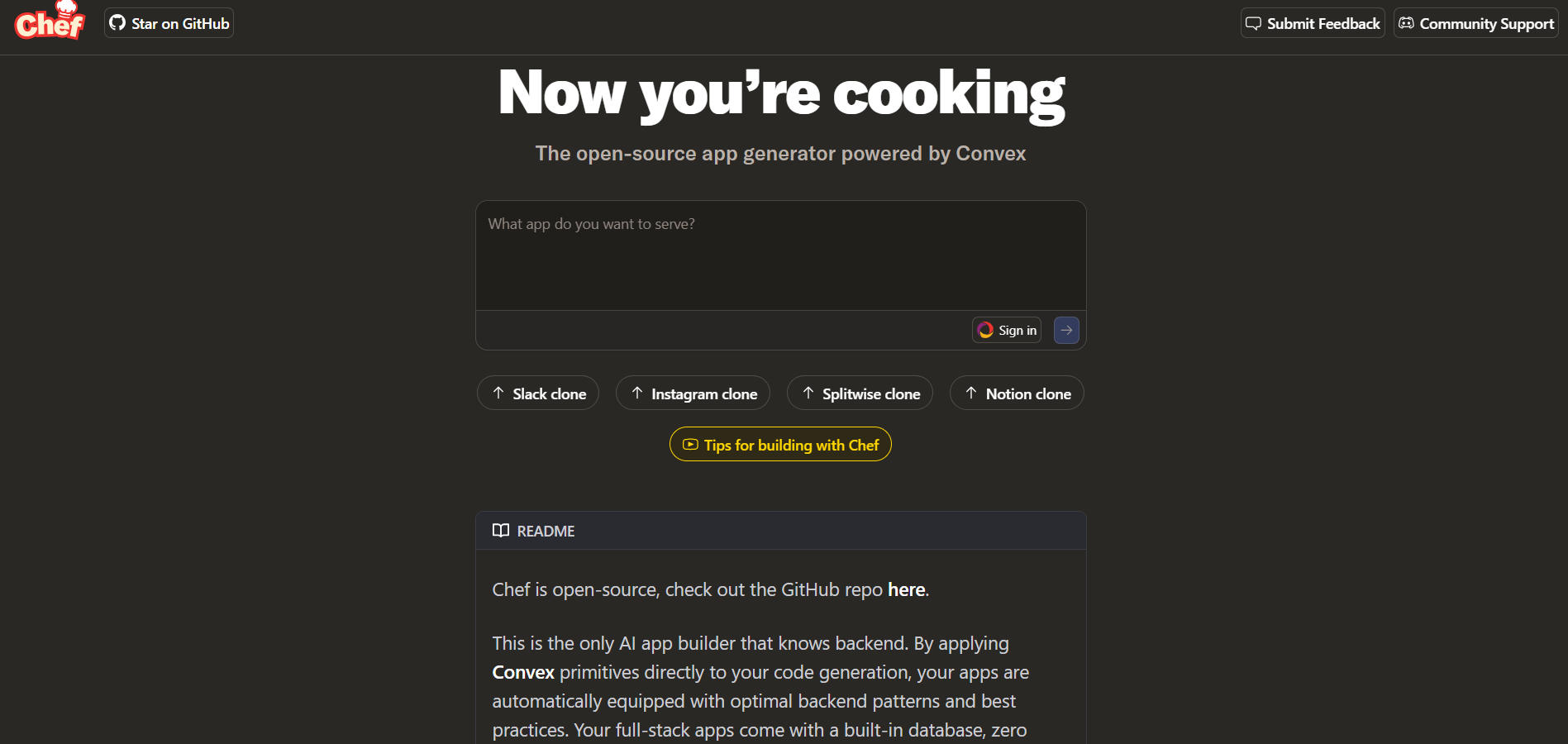

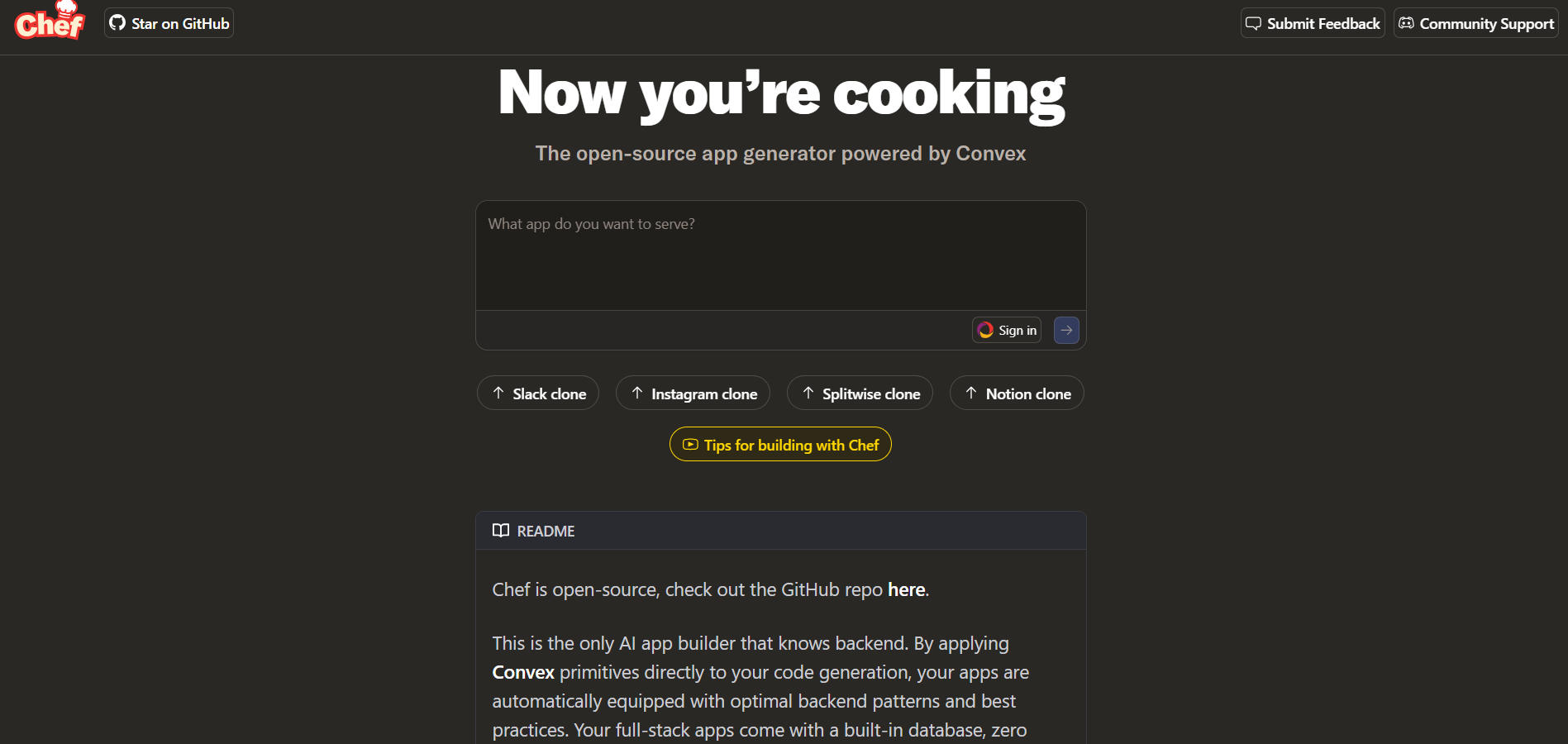

Chef

Chef by Convex is an AI-powered development assistant built to help developers create, test, and deploy web applications faster using natural language prompts. Integrated with Convex’s backend-as-a-service platform, Chef automates code generation, database setup, and API creation, enabling developers to move from concept to functional prototype within minutes. The tool understands developer intent, allowing users to describe what they want and instantly receive production-ready code snippets. With collaborative features and integrated testing environments, Chef by Convex simplifies modern app development for both individuals and teams, combining AI intelligence with backend scalability.

LLM as-a-service

LLM.co LLM-as-a-Service (LLMaaS) is a secure, enterprise-grade AI platform that provides private and fully managed large language model deployments tailored to an organization’s specific industry, workflows, and data. Unlike public LLM APIs, each client receives a dedicated, single-tenant model hosted in private clouds or virtual private clouds (VPCs), ensuring complete data privacy and compliance. The platform offers model fine-tuning on proprietary internal documents, semantic search, multi-document Q&A, custom AI agents, contract review, and offline AI capabilities for regulated industries. It removes infrastructure burdens by handling deployment, scaling, and monitoring, while enabling businesses to customize models for domain-specific language, regulatory compliance, and unique operational needs.

Clay

Clay is a powerful go-to-market (GTM) data and AI platform that centralizes and enriches sales and marketing data from over 150 providers, enabling teams to act with precision and speed. It offers enriched leads, intent signals, and AI-led research to identify new prospects, score accounts, and personalize outreach efforts. Clay’s flexible workflows allow users to automate complex GTM processes without engineering, streamline data formatting, and update tools like CRMs and email sequencers in real time. Designed for scale, Clay integrates seamlessly with existing systems to empower GTM teams to be faster, more strategic, and highly creative.

Lumio AI

Lumio AI is a multi-model artificial intelligence platform that brings access to multiple top AI models like ChatGPT, Claude, Gemini, Grok, DeepSeek, and more into one unified workspace, enabling users to interact with the best AI for each task through a single interface. Why settle for one perspective when you can have many? With the Multi-LLM system, researchers, developers, and business professionals gain access to multiple AI models delivering sharper insights, faster solutions, and smarter decisions, all in one powerful platform.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai