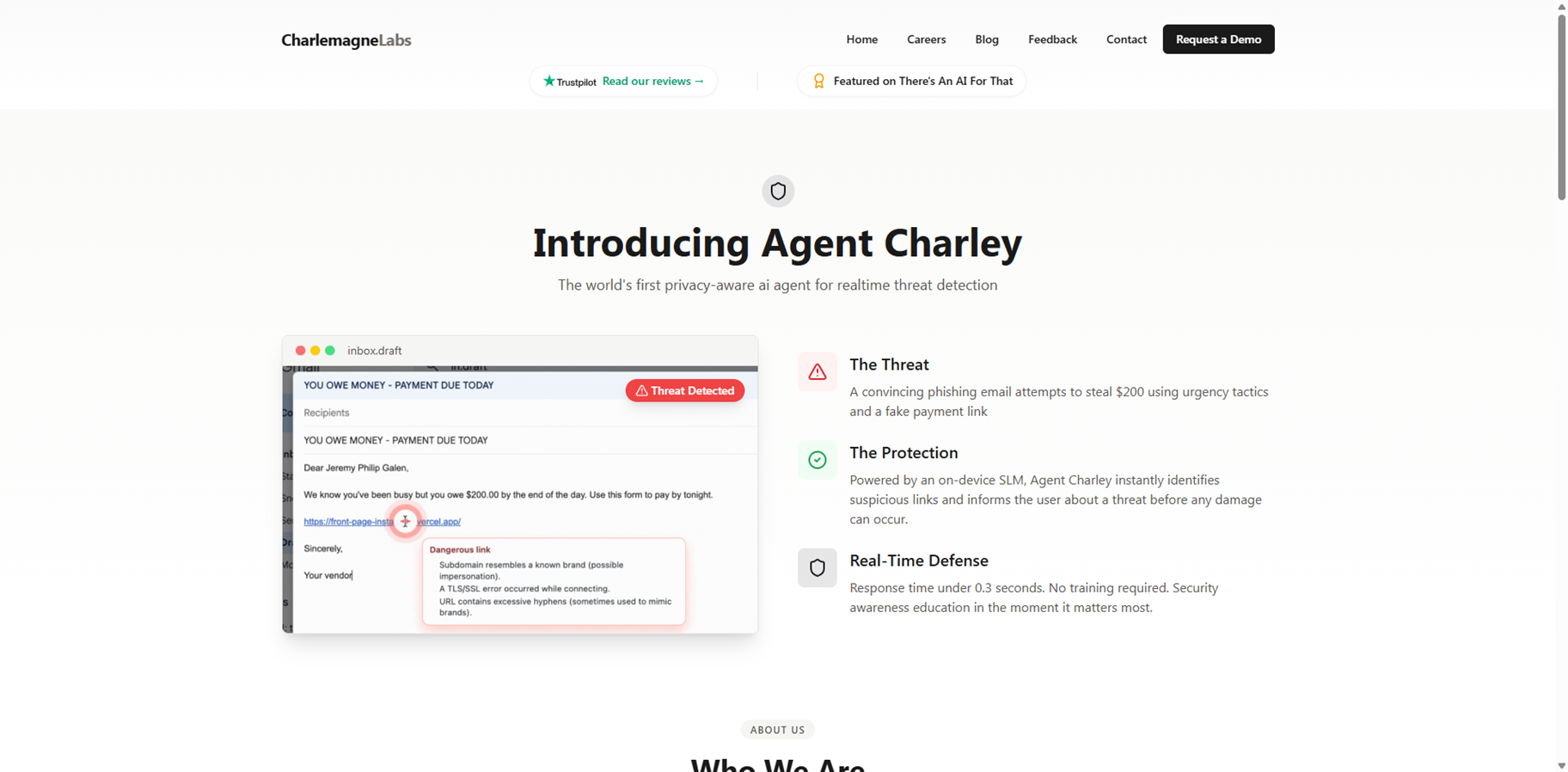

- Individuals & Families: Spot phishing emails quickly to protect personal accounts.

- Cybersecurity Pros: Add real-time phishing detection to threat-hunting workflows.

- SMBs & Enterprises: Scale protection with analytics, without the risk of sending data to the cloud.

- Remote Workers: Guard personal devices against social engineering attacks.

- Tech-Savvy Users: Run AI locally for fast, private threat defense anywhere.

How to Use Charlemagne Labs

- Download the Beta: Get Agent Charley from the Charlemagne Labs website and install it on your device.

- Scan Messages: Agent Charley automatically detects phishing in emails and chats in real time.

- Review Alerts: Check flagged messages; no setup or training is needed.

- Scale for Teams: Upgrade your plan to add dashboards and integrations.

Key Features

- On-Device Only: No cloud processing, for full privacy, with 0.3-second detection.

- Compact SLM Power: A 270M-parameter small language model (SLM) analyzes threats locally without requiring high-end hardware.

- No Setup Magic: Works out of the box, with no training required for users or admins.

- Human-Layer Focus: Targets the social engineering blind spots that endpoint detection and response (EDR) tools leave open.

- Flexible Plans: Options range from a personal starter plan to enterprise plans with analytics.

Pros

- Very fast, with full privacy.

- No data leaks or cloud exposure, since messages stay on the device.

- Easy to deploy, and it catches real threats.

- Scales well for teams.

Cons

- Still in beta; some features are evolving.

- Optimal speed depends on the device's hardware.

- Focused on phishing; not a full security suite.

- Currently limited to specific attack types.

Pricing: Custom

Pricing information is not published directly.

Similar AI Tools

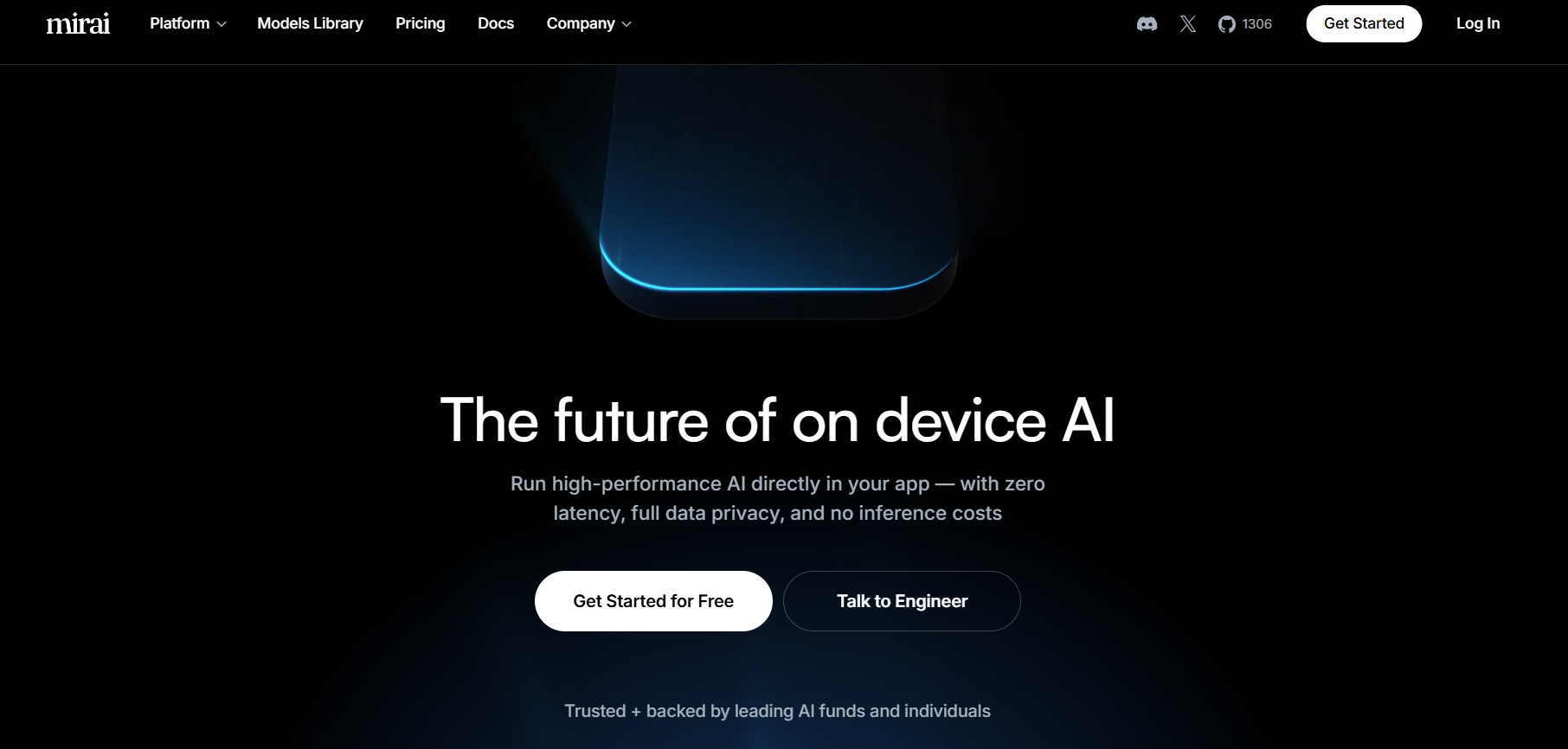

Mirai

TryMirai is an on-device AI infrastructure platform that lets developers integrate high-performance AI models directly into their apps with minimal latency, full data privacy, and no inference costs. Its library of optimized models comes in several sizes (0.3B, 0.5B, 1B, 3B, and 7B parameters) to match different business goals. A smart routing engine balances performance, privacy, and cost, and SDKs for Apple platforms (with Android support upcoming) simplify integration. Developers can deploy capabilities such as summarization, classification, general chat, and custom use cases without offloading to the cloud, which reduces dependence on network connectivity and keeps user data on the device.

Aisera

Aisera is an AI-driven platform designed to transform enterprise service experiences through the integration of generative AI and advanced automation. It leverages Large Language Models (LLMs) and domain-specific AI capabilities to deliver proactive, personalized, and predictive solutions across various business functions such as IT, customer service, HR, and more.

Inception

Inception Labs is an AI research company that develops Mercury, which it describes as the first commercial diffusion-based large language models. Unlike traditional autoregressive LLMs, which generate tokens one at a time, Mercury models generate text through parallel refinement passes using a diffusion architecture. Inception reports inference speeds of over 1,000 tokens per second at frontier-level quality. The platform offers Mercury for general-purpose tasks and Mercury Coder for development workflows; both support streaming, tool use, structured output, and 128K context windows. The models serve as drop-in replacements for traditional LLMs through OpenAI-compatible APIs and are available through major cloud providers, including AWS Bedrock and Azure Foundry, as well as other AI platforms for enterprise deployment.

Cresta

Cresta is an enterprise-grade generative AI platform built for contact centres, designed to combine human and AI agents in one unified workspace. It helps organisations analyse every customer conversation, extract insights, guide agents in real time, automate workflows, and improve outcomes such as first-call resolution, conversion, and agent performance. Platform features include custom models trained on an organisation’s own data, low-latency real-time transcription and inference, and no-code tools that let business users deploy and refine AI models, with a strong focus on combining quality assurance, coaching, automation, and conversational intelligence. Recent funding has boosted the company’s growth, and major enterprises have adopted the product to optimise service, sales, and retention operations.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Chef

Chef by Convex is an AI-powered development assistant built to help developers create, test, and deploy web applications faster using natural language prompts. Integrated with Convex’s backend-as-a-service platform, Chef automates code generation, database setup, and API creation, enabling developers to move from concept to functional prototype within minutes. The tool understands developer intent, allowing users to describe what they want and instantly receive production-ready code snippets. With collaborative features and integrated testing environments, Chef by Convex simplifies modern app development for both individuals and teams, combining AI intelligence with backend scalability.

LM Studio

LM Studio is a local large language model (LLM) platform that lets users download and run AI language models such as Llama, MPT, and Gemma directly on their own computers. It supports macOS, Windows, and Linux. LM Studio focuses on privacy and control: models run locally rather than through cloud-based services, so data stays on the user’s device. An easy-to-install interface with step-by-step setup guidance makes these capabilities accessible to developers, researchers, and AI enthusiasts, and once a model is downloaded, it runs without an internet connection.

LLM-as-a-Service

LLM.co LLM-as-a-Service (LLMaaS) is a secure, enterprise-grade AI platform that provides private and fully managed large language model deployments tailored to an organization’s specific industry, workflows, and data. Unlike public LLM APIs, each client receives a dedicated, single-tenant model hosted in private clouds or virtual private clouds (VPCs), ensuring complete data privacy and compliance. The platform offers model fine-tuning on proprietary internal documents, semantic search, multi-document Q&A, custom AI agents, contract review, and offline AI capabilities for regulated industries. It removes infrastructure burdens by handling deployment, scaling, and monitoring, while enabling businesses to customize models for domain-specific language, regulatory compliance, and unique operational needs.

potpie.ai

Potpie.ai is an innovative AI agent platform that enables developers to create intelligent agents for their codebase in just minutes, transforming software development through automated understanding and interaction. Designed specifically for codebase analysis, it allows users to deploy AI agents that comprehend entire repositories, perform tasks like code reviews, bug detection, refactoring suggestions, and feature implementation autonomously. The platform emphasizes speed and simplicity, requiring minimal setup to integrate with existing projects on GitHub or local environments. Key capabilities include natural language queries for code exploration, multi-agent collaboration for complex workflows, and seamless integration with popular IDEs like VS Code. By leveraging advanced LLMs fine-tuned for code, Potpie.ai boosts productivity for solo developers and teams alike, making AI-assisted coding accessible without deep expertise.

Glean

Glean is a work AI platform that gives every employee a personalized AI Assistant and Agents to search, understand, create, summarize, and automate tasks across 100+ enterprise apps, including Slack, Google Drive, GitHub, Jira, Salesforce, and Microsoft tools. It indexes folders, documents, emails, tickets, conversations, and code without storing the data, and it delivers answers grounded in company knowledge while enforcing real-time permissions through Glean Protect. Glean positions the platform as a boost to engineering velocity, support confidence, sales acceleration, and cross-team productivity, and claims it saves up to 110 hours per user annually. Features include natural language search, AI agents for automation at scale, extensibility via APIs, SDKs, and plugins, and enterprise-grade security; Gartner has recognized Glean as a leader in AI knowledge management.

Glean

Glean is a work AI platform that provides every employee with a personalized AI Assistant and powerful Agents to search, understand, create, summarize, and automate tasks across 100+ enterprise apps like Slack, Google Drive, GitHub, Jira, Salesforce, and Microsoft tools. It indexes folders, documents, emails, tickets, conversations, and code without storing data, delivering instant answers grounded in company knowledge while respecting real-time permissions through Glean Protect. The centralized platform enables engineering velocity, support confidence, sales acceleration, and cross-team productivity, saving up to 110 hours per user annually. Features include natural language search, AI agents for scale automation, extensibility via APIs/SDK/plugins, and enterprise-grade security with Gartner recognition as a leader in AI knowledge management.

Glean

Glean is a work AI platform that provides every employee with a personalized AI Assistant and powerful Agents to search, understand, create, summarize, and automate tasks across 100+ enterprise apps like Slack, Google Drive, GitHub, Jira, Salesforce, and Microsoft tools. It indexes folders, documents, emails, tickets, conversations, and code without storing data, delivering instant answers grounded in company knowledge while respecting real-time permissions through Glean Protect. The centralized platform enables engineering velocity, support confidence, sales acceleration, and cross-team productivity, saving up to 110 hours per user annually. Features include natural language search, AI agents for scale automation, extensibility via APIs/SDK/plugins, and enterprise-grade security with Gartner recognition as a leader in AI knowledge management.

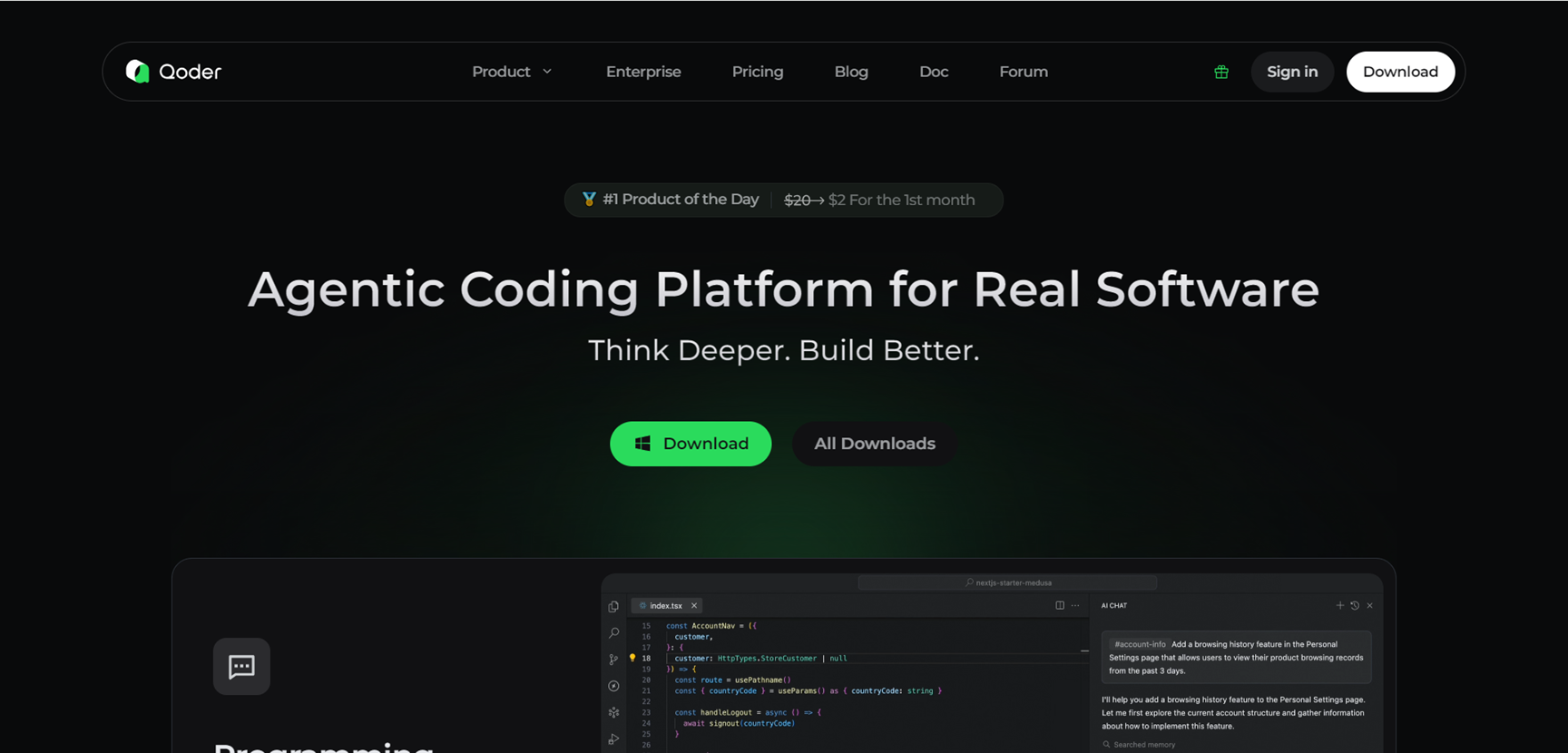

Qoder

Qoder is an agentic coding platform that powers real software development with AI agents handling complex tasks like code generation, testing, refactoring, and debugging across 200+ languages. It features NES intelligent suggestions, Quest mode for autonomous multi-file work, inline chats, codebase wikis, persistent memory learning, and MCP tool integration for external services. Downloadable for Windows, Mac, and Linux, it combines multi-model AI (Claude, GPT, Gemini) with deep context engineering for precise, iterative coding support.

Qoder

Qoder is an agentic coding platform that powers real software development with AI agents handling complex tasks like code generation, testing, refactoring, and debugging across 200+ languages. It features NES intelligent suggestions, Quest mode for autonomous multi-file work, inline chats, codebase wikis, persistent memory learning, and MCP tool integration for external services. Downloadable for Windows, Mac, and Linux, it combines multi-model AI (Claude, GPT, Gemini) with deep context engineering for precise, iterative coding support.

Qoder

Qoder is an agentic coding platform that powers real software development with AI agents handling complex tasks like code generation, testing, refactoring, and debugging across 200+ languages. It features NES intelligent suggestions, Quest mode for autonomous multi-file work, inline chats, codebase wikis, persistent memory learning, and MCP tool integration for external services. Downloadable for Windows, Mac, and Linux, it combines multi-model AI (Claude, GPT, Gemini) with deep context engineering for precise, iterative coding support.

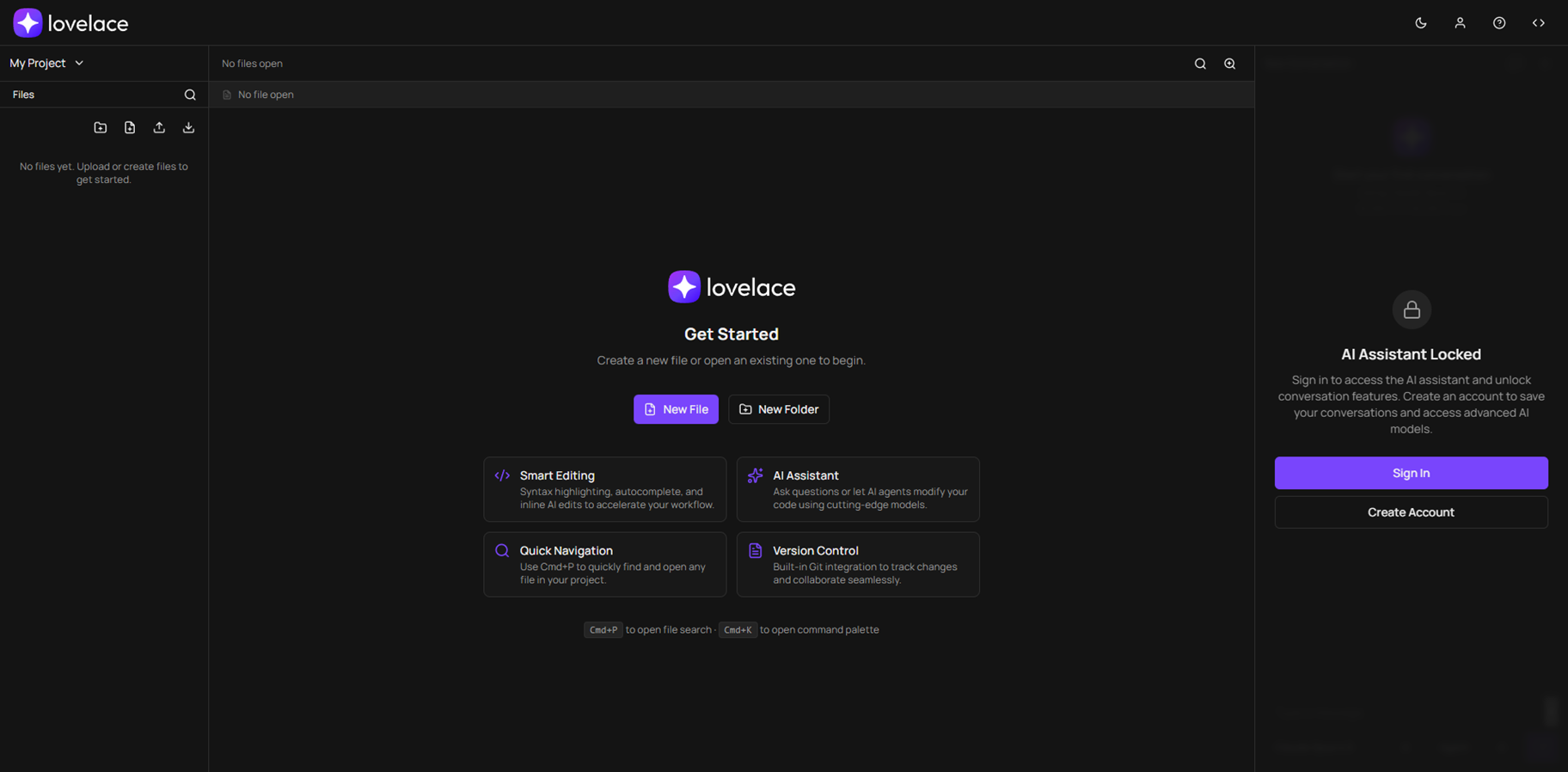

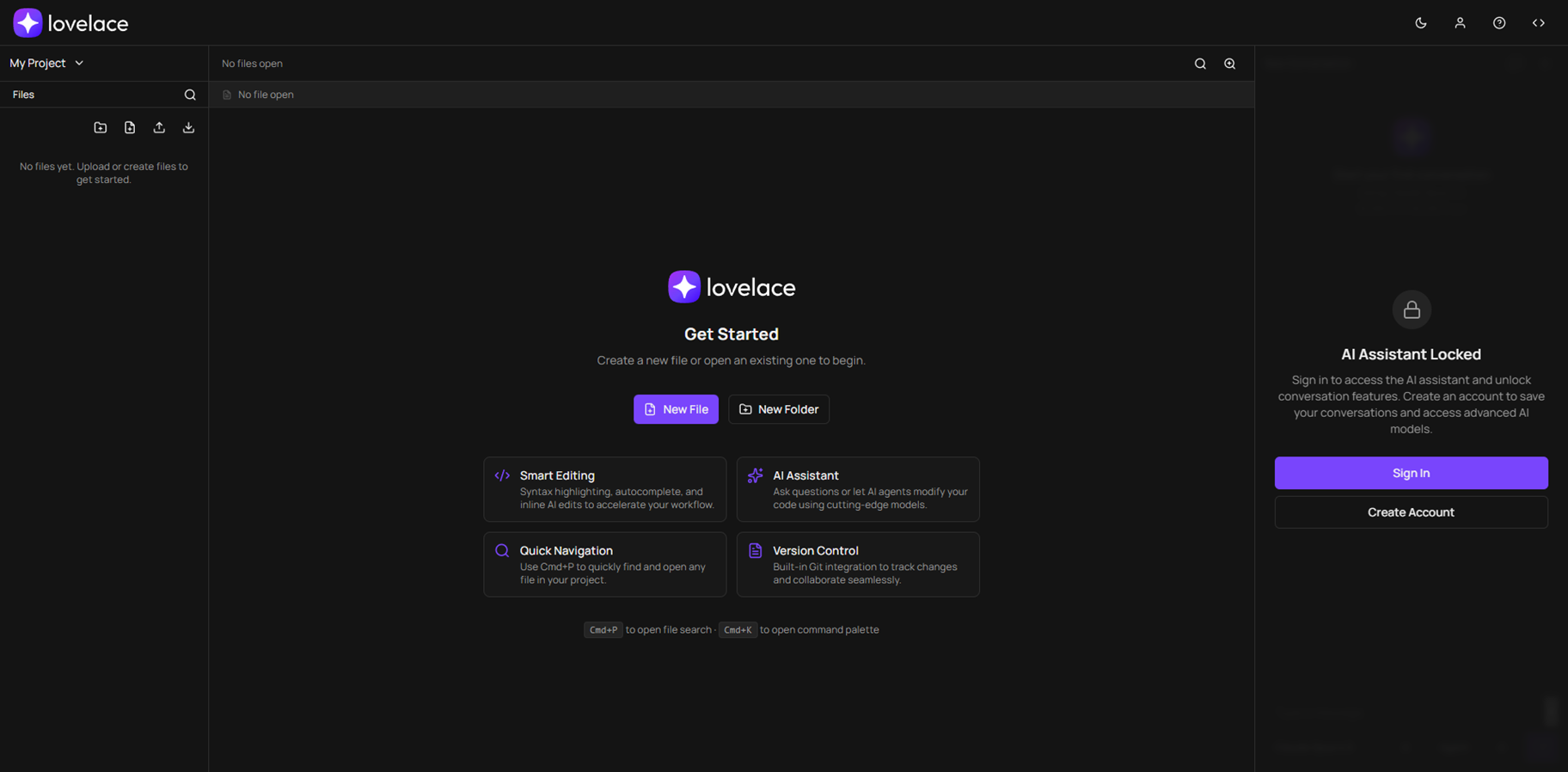

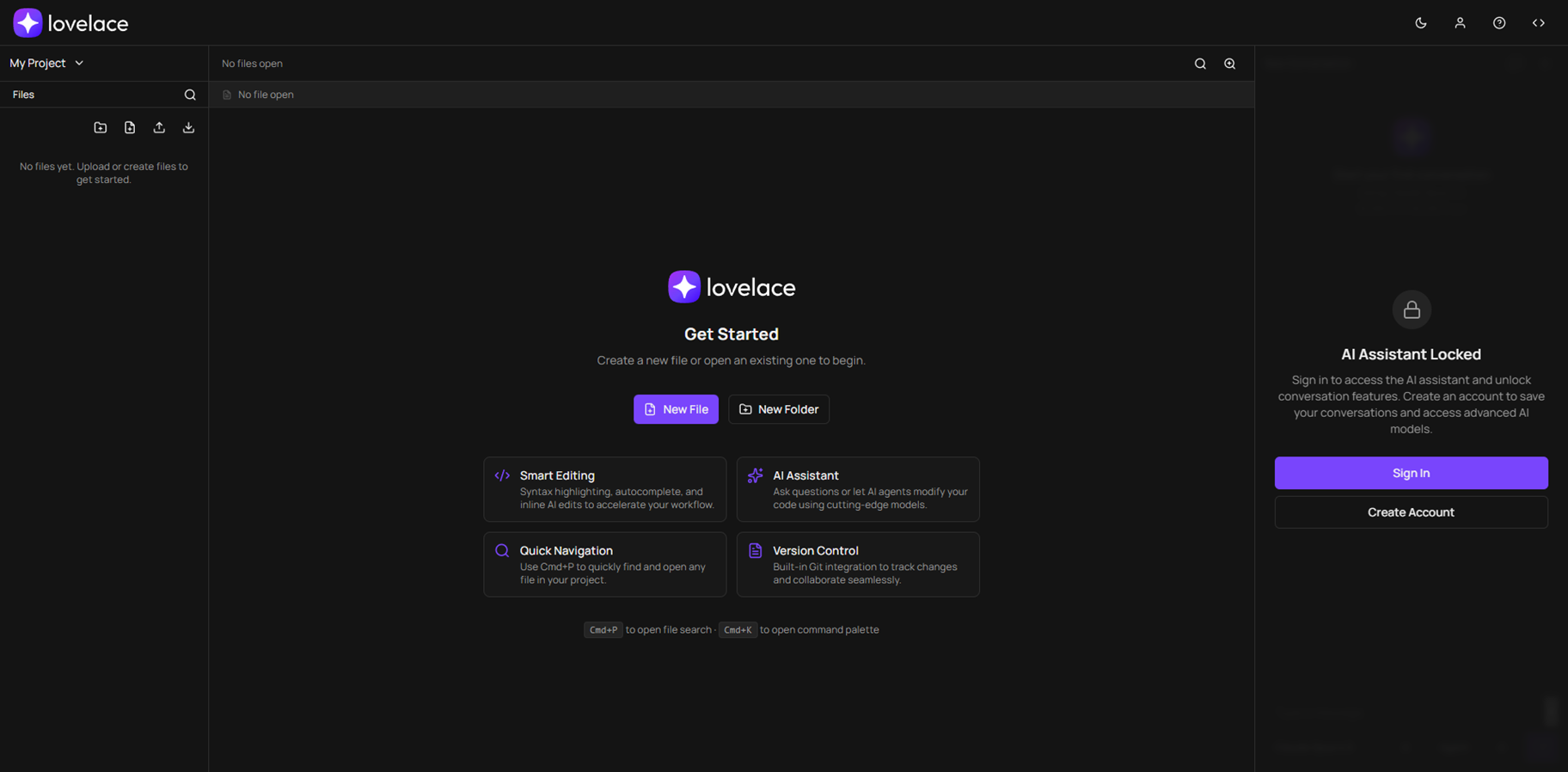

Lovelace

Lovelace is an AI-native integrated development environment (IDE) for enterprise engineering teams that claims to boost coding speed up to 4x with intelligent agents, context-aware autocomplete, and inline editing. Named after Ada Lovelace, it acts as a smart teammate that handles multi-step tasks, such as rewriting logic or updating dependencies across a codebase, from simple prompts. It is secure by design: it aligns with ISO 27001, SOC 2, GDPR, and data sovereignty laws, does not train on your IP, and monitors code and dependencies for risks. It integrates with Jira and monday.com, supports team collaboration on prompts and reviews, and offers region-specific data storage. Lovelace is currently available through Adaca's AI Pods, with a public release planned for Q3 2025.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai