- Individuals: People who want a convenient way to access and compare leading AI chatbot models.

- Users with existing API keys: People who want to consolidate their AI interactions and avoid a recurring monthly fee for each model.

- Professionals & Researchers: Those who need to analyze, summarize, or ask questions about the content of files using AI.

- Anyone needing versatile AI chat capabilities: For tasks ranging from text generation to data analysis.

- Content Creators: Those who generate or refine content using different AI models.

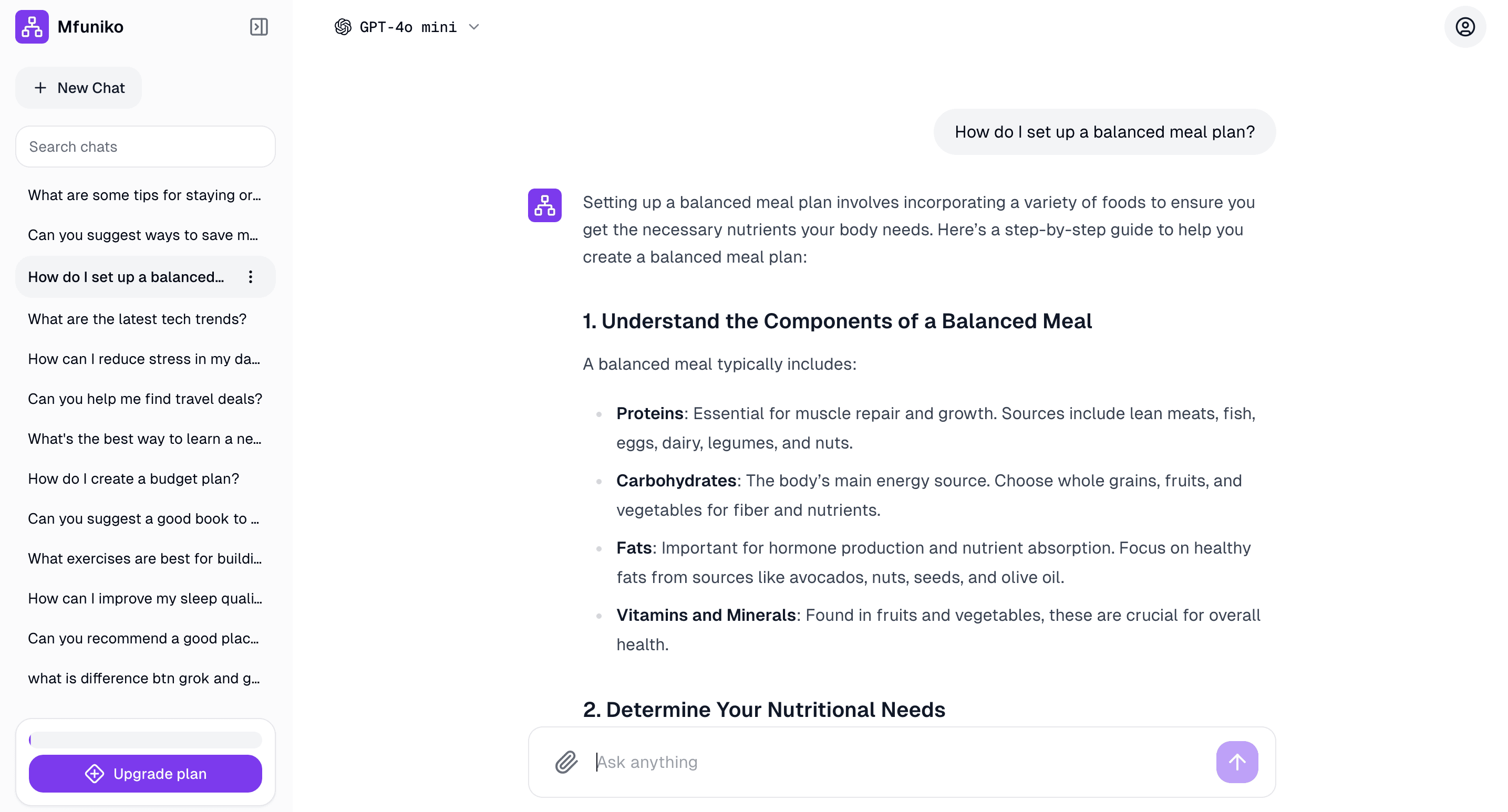

How to Use Mfuniko.com?

- Get Started: Users can begin by trying the platform for free, or by selecting one of the paid plans: Starter, Standard, or Pro.

- Connect API Keys: Based on the platform's description (this step is inferred rather than documented), users bring and connect their own API keys for the various AI models, so model usage is paid per use.

- Interact with AI Models: Choose and interact with top AI chatbots like ChatGPT, DeepSeek, Gemini, Claude, and Grok from a single interface.

- Organize & Share Chats: Utilize features to organize and share chat conversations across different devices.

- Chat with Files: Upload files to the platform to enable AI analysis, summarization, or to ask questions directly about the file's content.

- Explore FAQs: While specific step-by-step guidance isn't provided, the platform's FAQ section might offer more details on how to get started and its functionalities.

Key Features

- Centralized Access to Multiple Top AI Models: Provides a single interface to interact with leading AI chatbots like ChatGPT, DeepSeek, Gemini, Claude, and Grok, eliminating the need to switch between different platforms.

- Pay-Only-For-What-You-Use Model: Unlike subscription-based services for individual AI models, Mfuniko allows users to use their own API keys, meaning they only pay for the actual tokens consumed, potentially saving on monthly fees.

- Chat with Files Capability: Lets users upload files and interact with them using AI for tasks such as analysis, summarization, or answering specific questions about the content.

- Cross-Device Chat Organization & Sharing: Enables users to organize their chats and share them seamlessly across multiple devices, enhancing productivity and collaboration.

- Tiered Pricing Plans: Provides different paid plans (Starter, Standard, Pro) to cater to varying levels of usage and needs.
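To make the pay-only-for-what-you-use idea concrete, the sketch below estimates a month of API spend from token counts. The function name, the token volumes, and the per-million-token rates are all hypothetical and chosen only for illustration; they are not Mfuniko's or any provider's actual prices, so substitute the rates published by your own API provider.

```python
def token_cost(input_tokens, output_tokens,
               input_rate_per_million, output_rate_per_million):
    """Return the dollar cost of a token volume at per-million-token rates."""
    return (input_tokens * input_rate_per_million
            + output_tokens * output_rate_per_million) / 1_000_000


# Hypothetical usage and rates, for illustration only (not real prices).
monthly_input_tokens = 2_000_000
monthly_output_tokens = 500_000
input_rate = 3.00    # assumed dollars per million input tokens
output_rate = 15.00  # assumed dollars per million output tokens

cost = token_cost(monthly_input_tokens, monthly_output_tokens,
                  input_rate, output_rate)
print(f"Estimated API spend: ${cost:.2f}")  # prints "Estimated API spend: $13.50"
```

Whether this works out cheaper than separate subscriptions depends on actual usage, the provider's real rates, and the cost of the Mfuniko plan itself.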

Pros

- Provides centralized access to multiple top AI chatbots (ChatGPT, DeepSeek, Gemini, Claude, Grok).

- Features a cost-effective pay-as-you-go model using personal API keys, avoiding recurring monthly fees.

- Includes the valuable "chat with files" feature for analysis, summarization, and querying documents.

- Allows users to organize and share their chat conversations across different devices.

- Offers a free trial option to get started and explore the platform's capabilities.

- Provides tiered pricing plans to suit various user needs and budgets.

Cons

- The website does not provide explicit step-by-step instructions on how to get started, or say whether programming skills are needed to connect API keys.

- Requires users to have their own API keys for the individual AI models, which could be a hurdle for some.

- The specific differences and advanced functionalities between the "Standard" and "Pro" plans are vague.

- No mention of an iOS app or desktop application, implying web-only access.

- Details about the free trial's limitations or duration are not specified.

Pricing

| Plan | Price | Listed features |
| --- | --- | --- |
| Starter | $29.99 | Search, save, and share chats |
| Standard | $49.99 | Chat with documents; text to speech |
| Pro | $69.99 | Chat with images (vision) |

Similar AI Tools

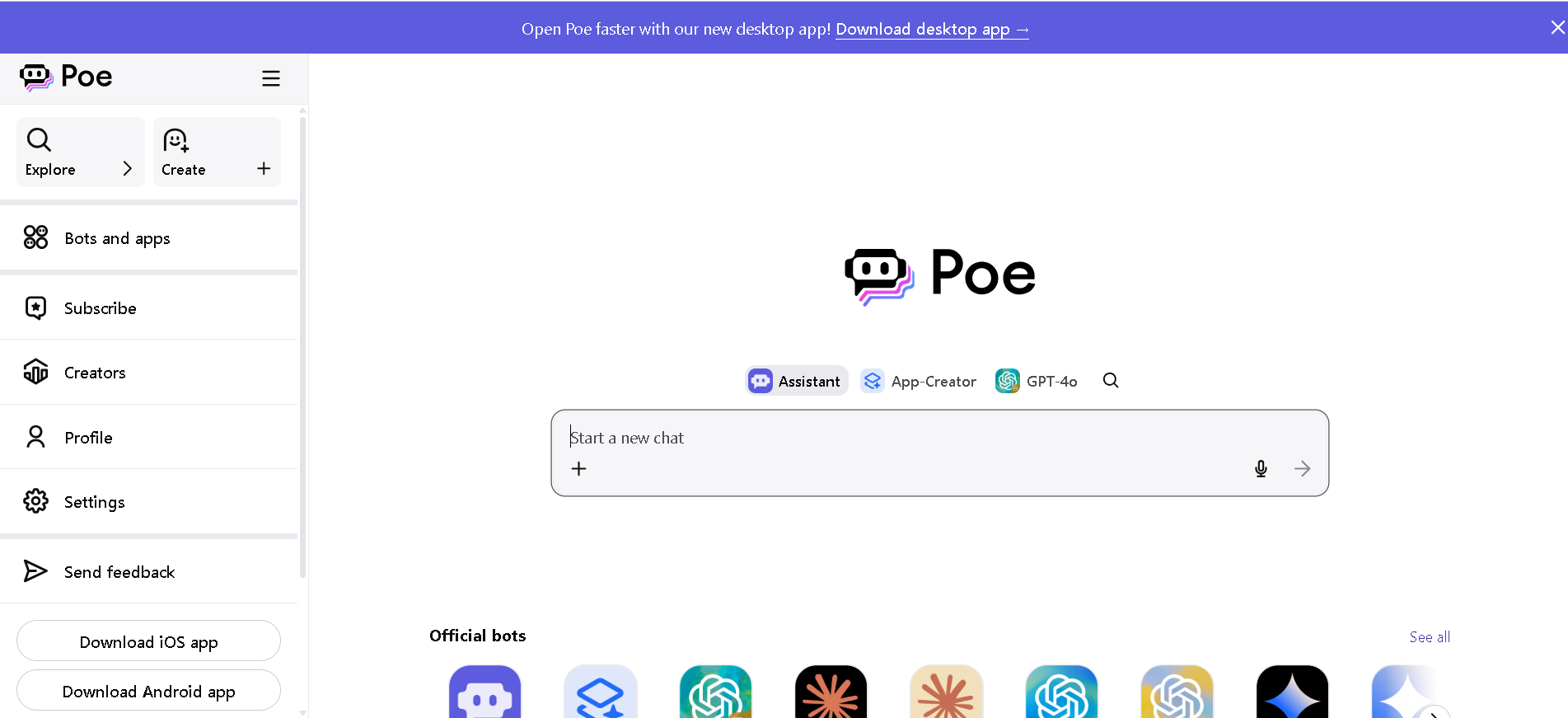

Poe AI

Poe.com is a comprehensive AI chatbot aggregation platform developed by Quora, providing users with unified access to a wide range of conversational AI models from various leading providers, including OpenAI, Anthropic, Google, and Meta. It simplifies the process of discovering and interacting with different AI chatbots and also empowers users to create and monetize their own custom AI bots.

LM Studio

LM Studio is a local AI toolkit that empowers users to discover, download, and run Large Language Models (LLMs) directly on their personal computers. It provides a user-friendly interface to chat with models, set up a local LLM server for applications, and ensures complete data privacy as all processes occur locally on your machine.

LM Arena

LMArena is a platform designed to allow users to contribute to the development of AI through collective feedback. Users interact with and provide feedback on various Large Language Models (LLMs) by voting on their responses, thereby helping to shape and improve AI capabilities. The platform fosters a global community and features a leaderboard to showcase user contributions.

Genloop AI

Genloop is a platform that empowers enterprises to build, deploy, and manage custom, private large language models (LLMs) tailored to their business data and requirements — all with minimal development effort. It turns enterprise data into intelligent, conversational insights, allowing users to ask business questions in natural language and receive actionable analysis instantly. The platform enables organizations to confidently manage their data-driven decision-making by offering advanced fine-tuning, automation, and deployment tools. Businesses can transform their existing datasets into private AI assistants that deliver accurate insights, while maintaining complete security and compliance. Genloop’s focus is on bridging the gap between AI and enterprise data operations, providing a scalable, trustworthy, and adaptive solution for teams that want to leverage AI without extensive coding or infrastructure complexity.

LangChain

LangChain is a powerful open-source framework designed to help developers build context-aware applications that leverage large language models (LLMs). It allows users to connect language models to various data sources, APIs, and memory components, enabling intelligent, multi-step reasoning and decision-making processes. LangChain supports both Python and JavaScript, providing modular building blocks for developers to create chatbots, AI assistants, retrieval-augmented generation (RAG) systems, and agent-based tools. The framework is widely adopted across industries for its flexibility in connecting structured and unstructured data with LLMs.

Ask Any Model

AskAnyModel is a unified AI interface that allows users to interact with multiple leading AI models — such as GPT, Claude, Gemini, and Mistral — from a single platform. It eliminates the need for multiple subscriptions and interfaces by bringing top AI models into one streamlined environment. Users can compare responses, analyze outputs, and select the best AI model for specific tasks like content creation, coding, data analysis, or research. AskAnyModel empowers individuals and teams to harness AI diversity efficiently, offering advanced tools for prompt testing, model benchmarking, and workflow integration.

Mobisoft Infotech

MI Team AI is a robust multi-LLM platform designed for enterprises seeking secure, scalable, and cost-effective AI access. It consolidates multiple AI models such as ChatGPT, Claude, Gemini, and various open-source large language models into a single platform, enabling users to switch seamlessly without juggling different tools. The platform supports deployment on private cloud or on-premises infrastructure to ensure complete data privacy and compliance. MI Team AI provides a unified workspace with role-based access controls, single sign-on (SSO), and comprehensive chat logs for transparency and auditability. It offers fixed licensing fees allowing unlimited team access under the company’s brand, making it ideal for organizations needing full control over AI usage.

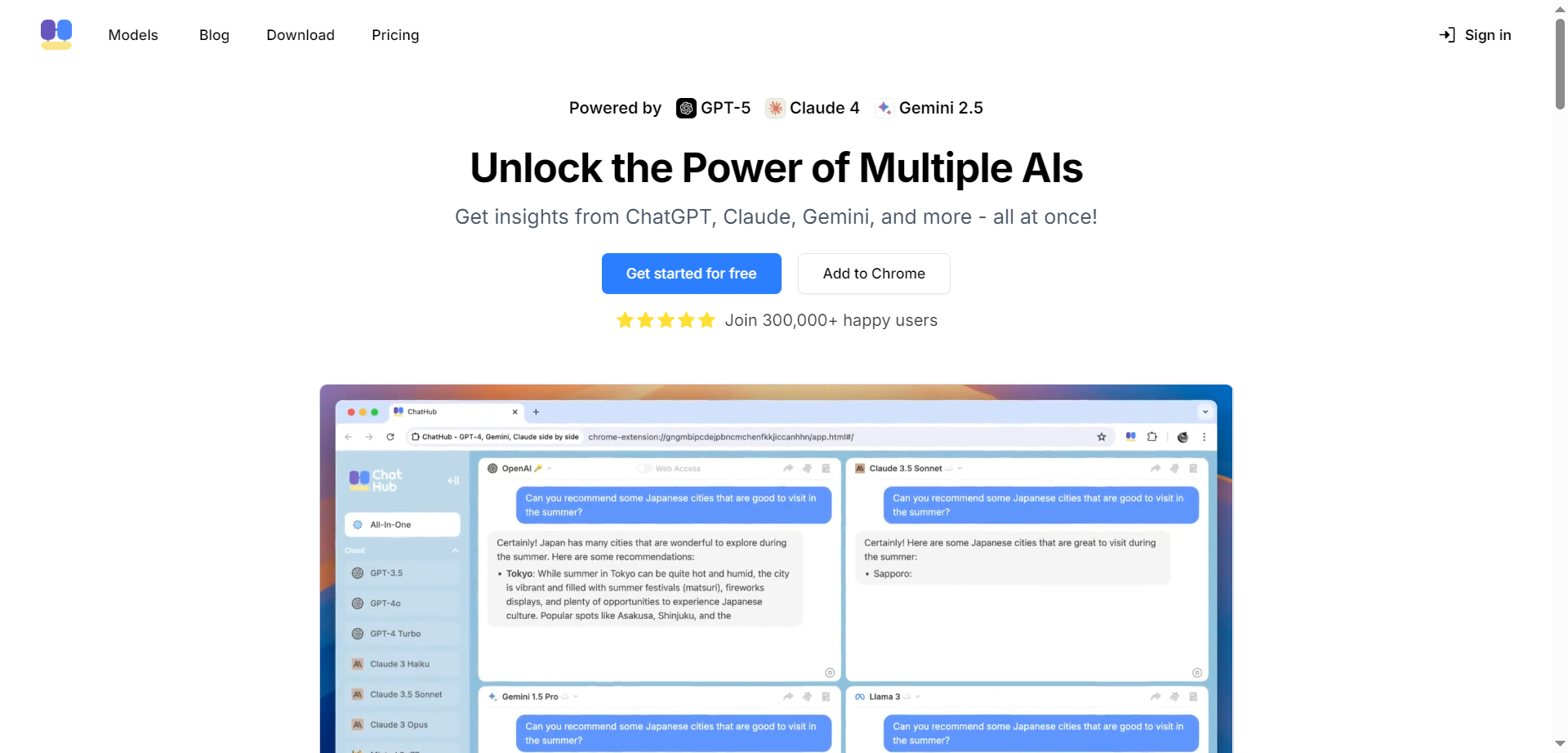

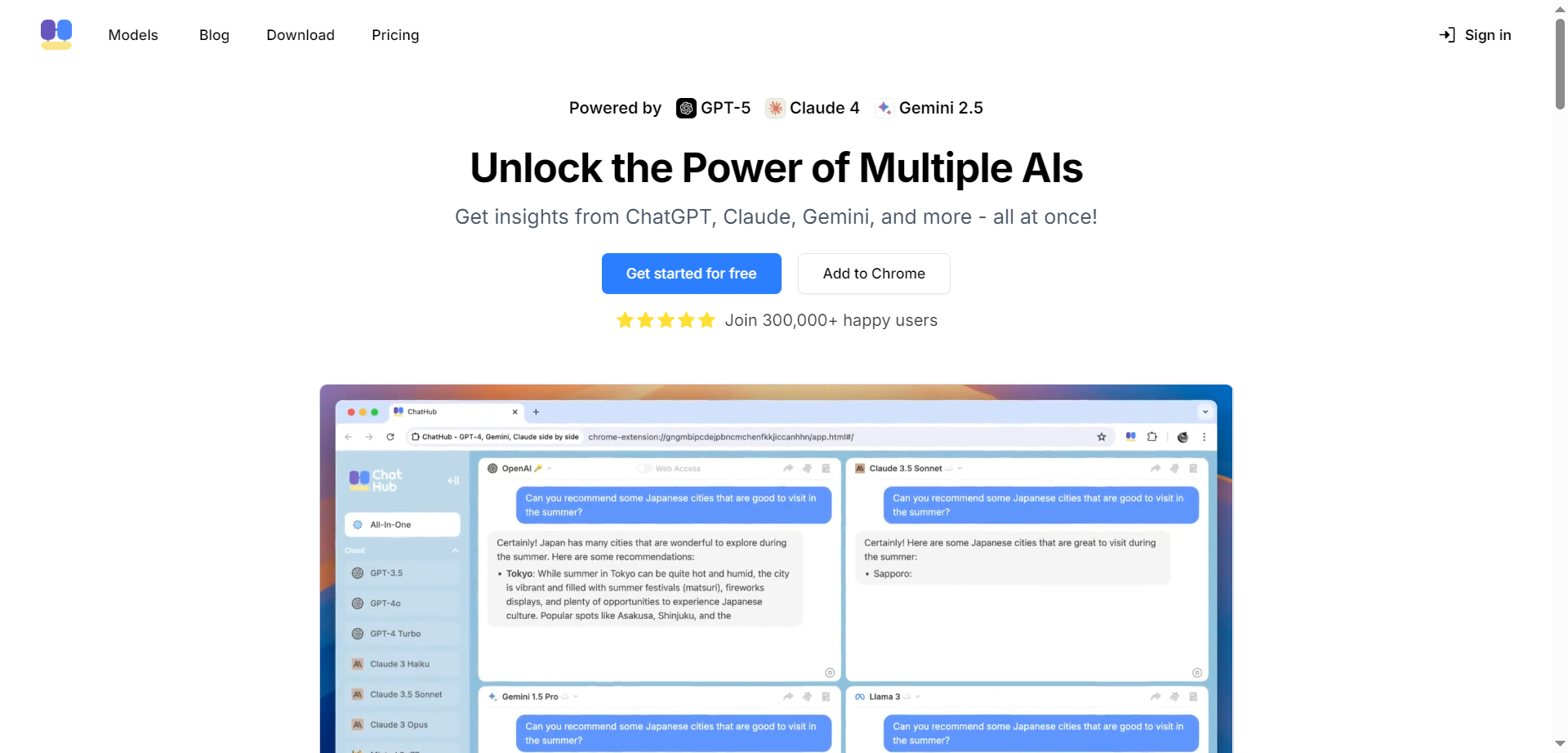

ChatHub

ChatHub is a platform that allows users to simultaneously interact with multiple AI chatbots, providing diverse AI-generated responses for enhanced insights and confidence. It supports top AI models like GPT-5, Claude 4, Gemini 2.5, Llama 3.3, and over 20 others, all accessible under one subscription. Users can input one question and receive multiple perspectives from these models, helping to cross-verify information and minimize hallucinations. ChatHub also includes AI-powered image generation using models such as DALL-E 3 and Stable Diffusion, file upload and analysis for documents and images, and features like AI-powered web search, code preview, prompt libraries, and productivity tools.

LM Studio

LM Studio is a local large language model (LLM) platform that enables users to download and run AI language models such as Llama, MPT, and Gemma directly on their own computers. It supports Mac, Windows, and Linux. LM Studio focuses on privacy and control by allowing users to work with AI models locally without relying on cloud-based services, so data stays on the user’s device. It offers an easy-to-install interface with step-by-step guidance for setup, giving developers, researchers, and AI enthusiasts access to advanced AI capabilities without requiring an internet connection.

LLM as-a-service

LLM.co LLM-as-a-Service (LLMaaS) is a secure, enterprise-grade AI platform that provides private and fully managed large language model deployments tailored to an organization’s specific industry, workflows, and data. Unlike public LLM APIs, each client receives a dedicated, single-tenant model hosted in private clouds or virtual private clouds (VPCs), ensuring complete data privacy and compliance. The platform offers model fine-tuning on proprietary internal documents, semantic search, multi-document Q&A, custom AI agents, contract review, and offline AI capabilities for regulated industries. It removes infrastructure burdens by handling deployment, scaling, and monitoring, while enabling businesses to customize models for domain-specific language, regulatory compliance, and unique operational needs.

ChatGot IO

ChatGot.io is an AI-powered conversational platform that brings together multiple advanced language models in a single interface. It allows users to chat, generate content, analyze documents, write code, and create personalized AI assistants for productivity, learning, and creative tasks. By enabling users to switch between or combine different AI models, ChatGot.io delivers richer and more flexible responses than traditional single-model chatbots. Designed for individuals and teams, the platform simplifies access to AI-powered tools for writing, research, summarization, and everyday problem-solving.

Secure Chat

ChtSafe is a privacy-first secure AI chat platform that unifies access to multiple advanced AI models while protecting every conversation with end-to-end encryption. It allows users to interact with ChatGPT, Claude, Gemini, Groq, Perplexity, DeepSeek, and other models through a single secure interface without vendor lock-in or data tracking, ensuring total privacy and control over AI interactions.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai