- Engineering & QA Teams: Teams that need reliable UI and functional test coverage across apps.

- Product Developers & Front-End Engineers: Those who want to test user flows quickly without writing detailed selector logic.

- Startups & SaaS Companies: Companies that deploy often and need fast feedback on PRs and UI changes.

- Mobile/Desktop App Developers: Developers who need test automation across platforms.

- Technical Leads & DevOps Teams: Those concerned with continuous integration, reliability, and reducing regression bugs.

How to Use It:

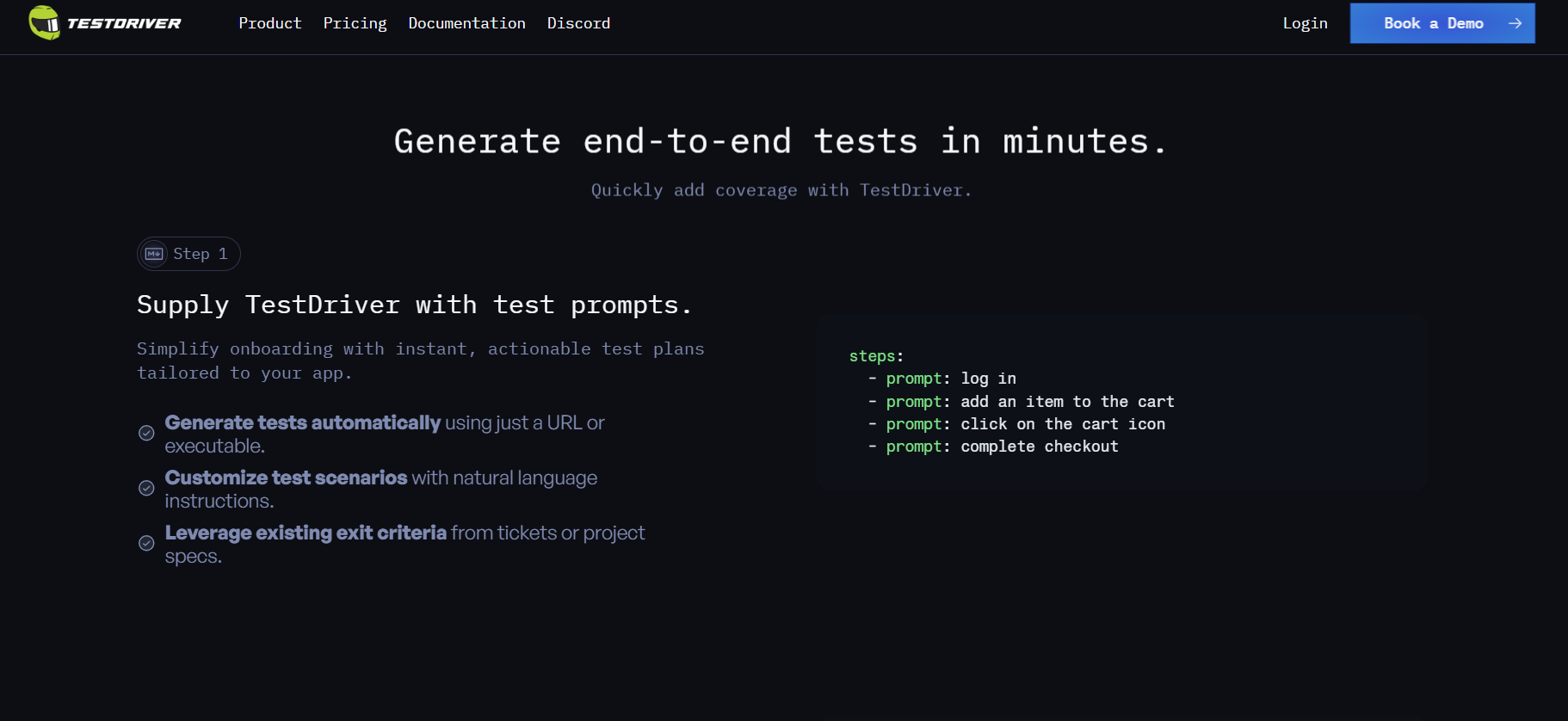

- Provide App & Prompts: Give TestDriver.ai a URL or app executable, along with natural-language instructions describing the flows to test.

- Auto-Generate Tests: The system creates tests automatically using vision-based selectors instead of fragile selector logic.

- Integrate with CI/CD: Run tests automatically on pull requests, on deploys, or on a schedule using the provided integrations.

- Monitor Test Runs: View screen replays, logs, and dashboards to see failures, flaky tests, and trends.

- Maintain & Update: As your UI changes, TestDriver.ai adjusts tests so they stay in sync with app redesigns or minor changes.
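As a rough illustration of the first three steps, a test request can be thought of as a target, a list of plain-English steps, and a set of events that trigger a run. The sketch below is not TestDriver.ai's API or file format; `TestPlan`, `should_run`, the example URL, and the event names are all hypothetical and exist only to make the workflow concrete.

```python
from dataclasses import dataclass, field


# Hypothetical model of a test request; NOT TestDriver.ai's actual format.
@dataclass
class TestPlan:
    target: str        # URL or path to an app executable
    steps: list[str]   # natural-language instructions for the flow
    triggers: set[str] = field(default_factory=lambda: {"pull_request"})


def should_run(plan: TestPlan, event: str) -> bool:
    """Return True when a CI event matches one of the plan's triggers."""
    return event in plan.triggers


plan = TestPlan(
    target="https://example.com/login",  # placeholder URL
    steps=[
        "Open the login page",
        "Sign in with the demo account",
        "Check that the dashboard is visible",
    ],
    triggers={"pull_request", "deploy", "schedule"},
)

print(should_run(plan, "deploy"))  # True
print(should_run(plan, "push"))    # False
```

Keeping the steps as plain strings mirrors the idea that the flow is described in natural language rather than encoded as selectors.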

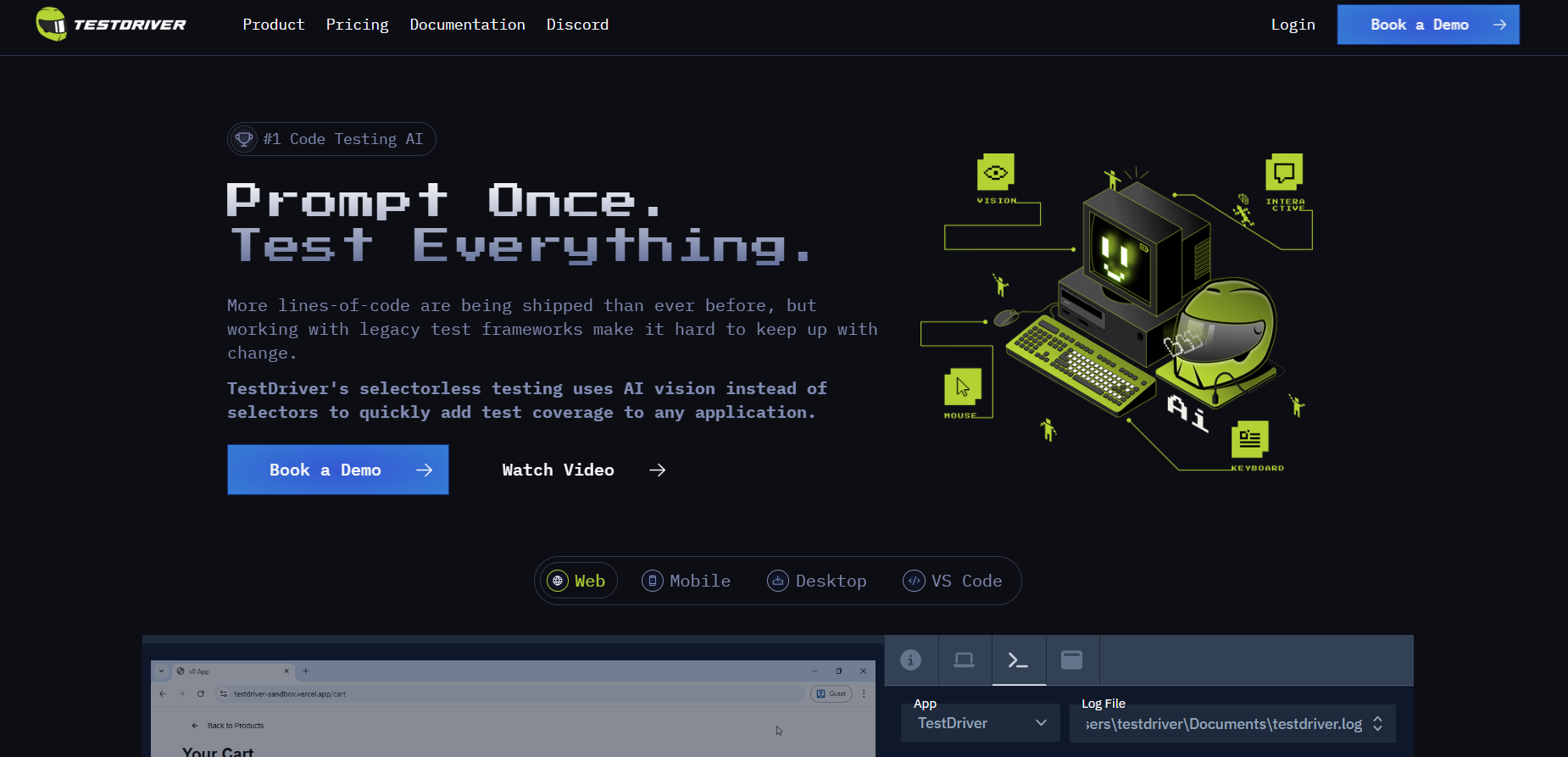

- Selectorless Testing: Unlike traditional UI tests, which rely on brittle selectors, TestDriver.ai uses vision-based AI, so tests break less often when the UI structure changes.

- Natural Language Prompts: You describe testing steps in plain English rather than writing code.

- CI/CD Integration: You can plug TestDriver.ai into your pull request flow so tests run automatically.

- Multi-Platform Support: Web apps, desktop apps, and hybrid environments are supported.

- Test Maintenance Automation: Tests automatically adapt to UI changes to reduce maintenance overhead.

- Speeds up test suite creation, reducing the time it takes to catch bugs.

- Makes UI testing more robust to interface changes.

- Provides useful visibility through screen replays and logs when tests fail.

- Helps QA efforts scale without expanding teams.

- Natural language prompts lower the technical barrier for writing tests.

- Vision-based testing can sometimes misinterpret visual cues depending on app design/styling.

- For complex UIs or custom components, prompts may need refinement or manual adjustment.

- Cost may increase with parallel runs, desktop app support, or high test volume.

- UI flakiness or environment issues might still cause false negatives/positives.

- Setup might require configuration to properly integrate with existing CI/CD workflows.
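The claim in the lists above that selector-based tests are brittle can be made concrete with a toy example. The sketch below is only an analogy: it uses a tiny dictionary-based UI tree and matches on visible text as a stand-in for appearance-based lookup, whereas a vision-based tool works from what is rendered on screen. The helpers `node`, `find_by_path`, and `find_by_text` are hypothetical and do not reflect how TestDriver.ai is implemented.

```python
# Toy UI tree: each node has a tag, optional visible text, and children.
def node(tag, text="", children=None):
    return {"tag": tag, "text": text, "children": children or []}


def find_by_path(root, path):
    """Selector-style lookup: follow child indexes down from the root."""
    current = root
    for index in path:
        if index >= len(current["children"]):
            return None
        current = current["children"][index]
    return current


def find_by_text(root, text):
    """Appearance-style lookup: depth-first search for visible text."""
    if root["text"] == text:
        return root
    for child in root["children"]:
        match = find_by_text(child, text)
        if match is not None:
            return match
    return None


old_ui = node("form", children=[node("input"), node("button", "Sign in")])
# A redesign wraps the button in a new container, changing the structure.
new_ui = node(
    "form",
    children=[node("input"), node("div", children=[node("button", "Sign in")])],
)

path_to_button = [1]  # structural selector recorded against the old UI
print(find_by_path(old_ui, path_to_button)["tag"])  # button
print(find_by_path(new_ui, path_to_button)["tag"])  # div (wrong element)
print(find_by_text(new_ui, "Sign in")["tag"])       # button
```

The structural path silently lands on the wrong element after the redesign, while the lookup based on what the user sees still finds the button. The same toy also hints at the limitation noted above: if two elements look alike, an appearance-based lookup can pick the wrong one.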

- Pro: $20.00. Everything you need to deploy your first tests.

- Team: $250.00 ($250 per parallel test). Everything you need to scale agentic user testing.

- Enterprise: Custom pricing. Get full control and customization with on-prem deployments via AWS.

FAQs

A tool to automate UI and functional testing using AI vision and natural language to reduce reliance on brittle selectors.

Not as much; you use natural language prompts to define tests instead of writing detailed selector-based scripts.

Web, desktop apps, and hybrid environments.

Yes; it integrates with pull requests, deploys, or scheduled test runs.

Screen replays, logs, dashboards, trends in failures, and visibility into test flakiness.

Similar AI Tools

Mixus

Mixus AI is an innovative platform that empowers users to build custom AI agents in plain English, in seconds. These agents automate and execute workflows—like researching, drafting emails, and task scheduling—with built-in human-in-the-loop oversight to ensure accuracy. They seamlessly connect to tools like Gmail, Salesforce, Jira, and Notion, enabling trustworthy automation. The platform blends AI efficiency with human oversight to guard against mistakes, particularly in enterprise-critical systems.

iftrue

Iftrue is a Slack-native assistant for engineering managers that provides context-aware guidance, real-time tracking of team progress, and analytics based on global standards like DORA and SPACE. It helps leaders spot blockers, plan smarter, monitor developer wellbeing, and drive better outcomes without leaving Slack.

CoTester by TestGr..

TestGrid CoTester is an AI-driven software testing assistant embedded within the TestGrid platform, built to automate test generation, execution, bug detection, and workflow management. Pretrained on core software testing principles and frameworks, CoTester integrates with your stack to draft manual and automated test cases, run them across real browsers and devices, detect performance and functional issues, and even assign bugs and tasks to team members. Over time, it learns from your inputs to better align with your project’s architecture, tech stack, and team conventions.

Traycer

Traycer AI is an advanced coding assistant focused on planning, executing, and reviewing code changes in large projects. Rather than immediately generating code, it begins each task by creating detailed, structured plans that break down high-level intent into manageable actions. From there, it allows users to iterate on these plans, then hand them off to AI agents like Claude Code, Cursor, or others to implement the changes. Traycer also includes functionality to verify AI-generated changes against the existing codebase to catch errors early. It integrates with development environments (VSCode, Cursor, Windsurf) and supports features like “Ticket Assist,” which turns GitHub issues into executable plans directly in your IDE.

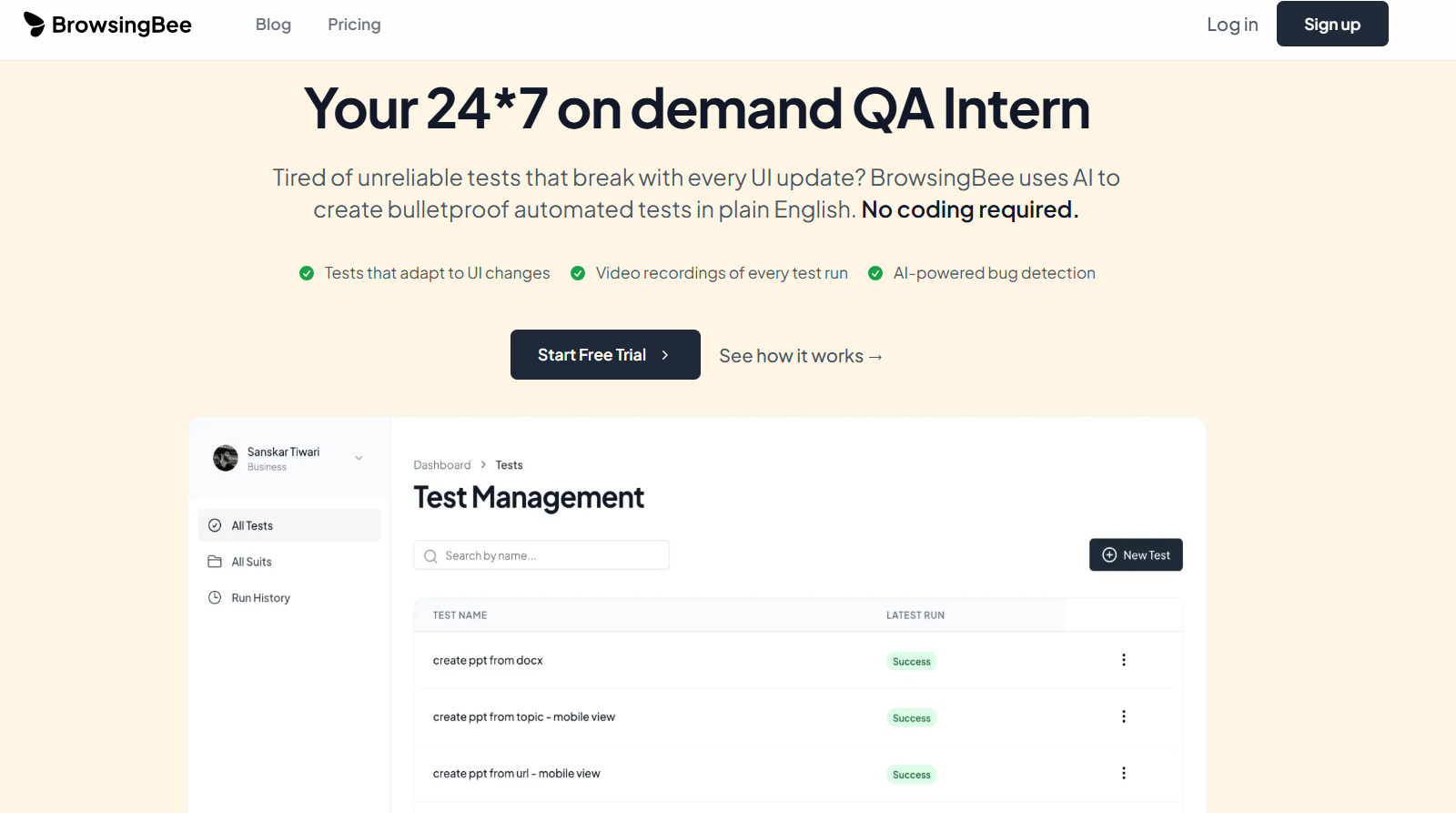

BrowsingBee

BrowsingBee is an AI-powered browser testing platform that allows users to write tests in plain English instead of code, making automated QA accessible to more people. The platform promises that tests created through natural language will be resilient to UI changes, adapting automatically via “self-healing” logic. It also records video playback of test runs, offers cross-browser support, regression testing, and alerting features. The goal is to reduce the overhead of maintaining brittle test suites and speed up test creation and debugging workflows.

BeSimple AI

Besimple AI specializes in building expert datasets to unblock AI production. From ground truth evaluation data to comprehensive safety data, the platform enables teams to confidently ship AI products. By providing high-quality, expert-curated datasets, Besimple ensures that AI models are trained, tested, and deployed with accuracy, reliability, and safety in mind. It is designed for AI developers, researchers, and enterprises who want to streamline data annotation, evaluation, and safety processes, accelerating AI production while maintaining high standards of quality.

Developer Toolkit

DeveloperToolkit.ai is an advanced AI-assisted development platform designed to help developers build production-grade, scalable, and maintainable software. It leverages powerful models like Claude Code and Cursor to generate production-ready code that’s secure, tested, and optimized for real-world deployment. Unlike tools that stop at quick prototypes, DeveloperToolkit.ai focuses on long-term code quality, maintainability, and best practices. Whether writing API endpoints, components, or full-fledged systems, it accelerates the entire development process while ensuring cleaner architectures and stable results fit for teams that ship with confidence.

Auto QA

AutoQA is an AI-powered automated testing platform designed to help software teams build reliable applications faster. It enables teams to create, run, and manage test plans with intelligent automation, reducing manual effort and improving test coverage. The solution supports automated test execution, reporting, and integration into CI/CD pipelines, making it especially useful for agile teams seeking higher quality and faster releases.

Coderabbit AI

CodeRabbit AI is an intelligent code review assistant designed to automate software review processes, identify bugs, and improve code quality using machine learning. It integrates directly with GitHub and other version control systems to provide real-time analysis, review comments, and improvement suggestions. By mimicking human reviewer logic, CodeRabbit helps development teams maintain code standards while reducing time spent on manual reviews. Its AI models are trained on best coding practices, ensuring that every commit is efficient, secure, and optimized for performance.

Braintrust

Braintrust is an AI observability platform designed to help teams build high-quality AI products by enabling systematic testing, evaluation, and monitoring of AI features. It provides tools to run evaluations with real data, score AI responses, and monitor live model performance to detect quality drops or incorrect outputs. Braintrust facilitates collaboration among engineers and product managers with intuitive workflows, side-by-side comparison of model results, and automated as well as human scoring. The platform supports scalable infrastructure, automated alerts for quality and safety, and provides detailed analytics to optimize AI development and maintain production quality.

H2Loop

H2LooP AI is an enterprise-focused AI platform designed specifically for system software teams working in industries like automotive, electronics, IoT, telecom, avionics, and semiconductors. It integrates seamlessly with existing development toolsets without disrupting workflows. The platform offers fully on-premise deployment and is trained on a company’s proprietary system code, logs, and specifications, ensuring complete data privacy and security. H2LooP AI facilitates co-building, fast prototyping, and research-backed innovation tailored to complex system software development environments.

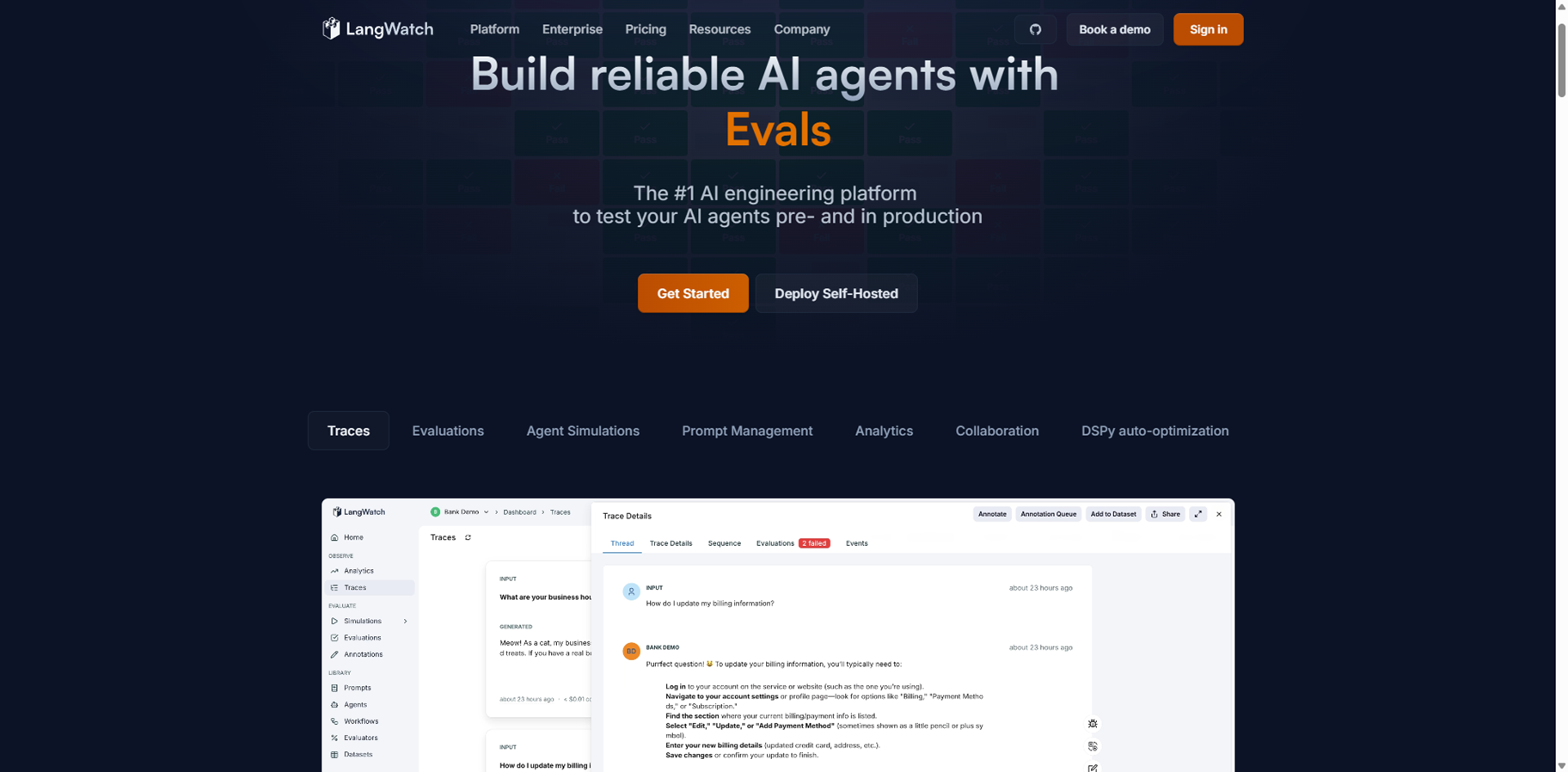

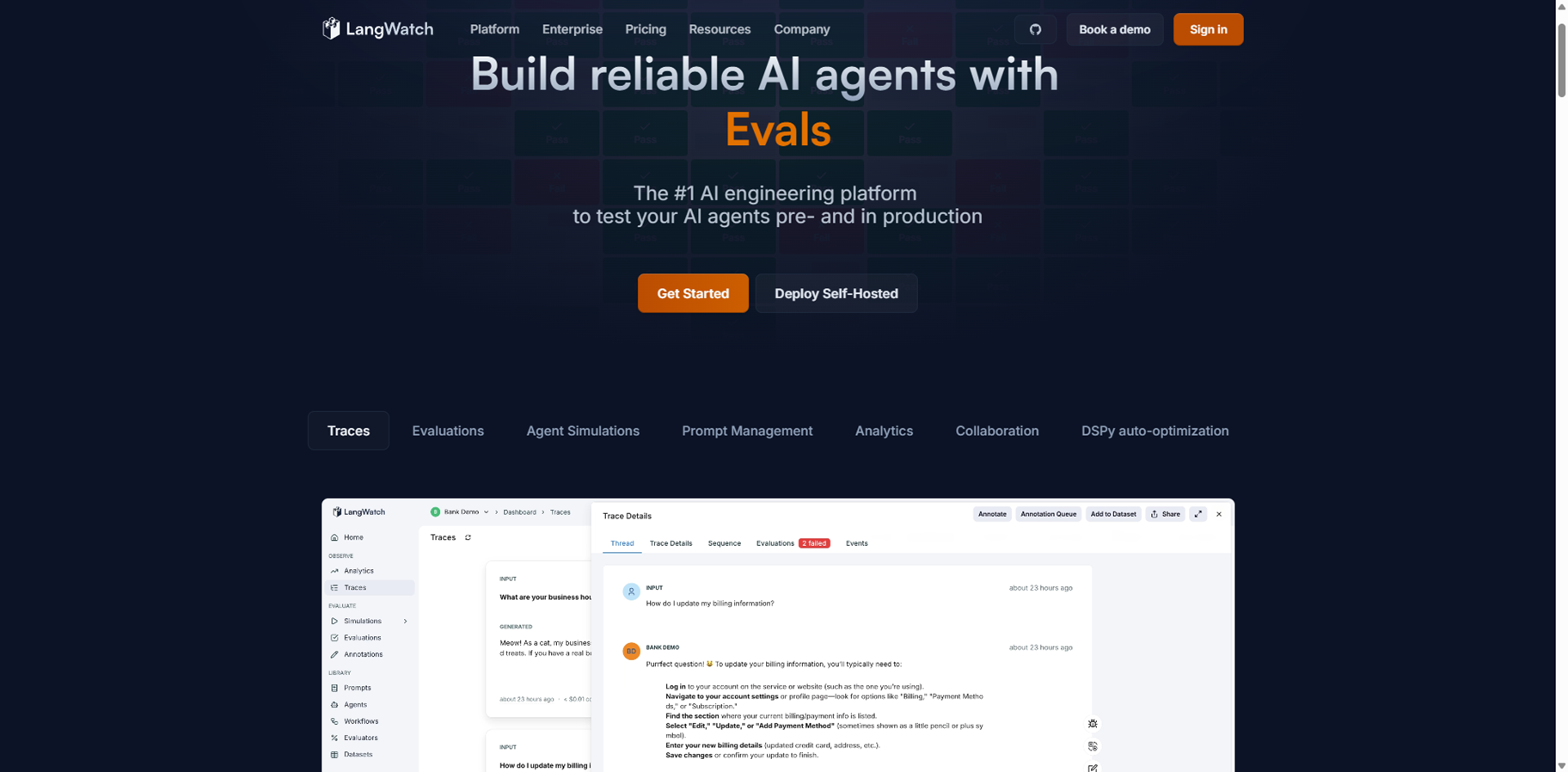

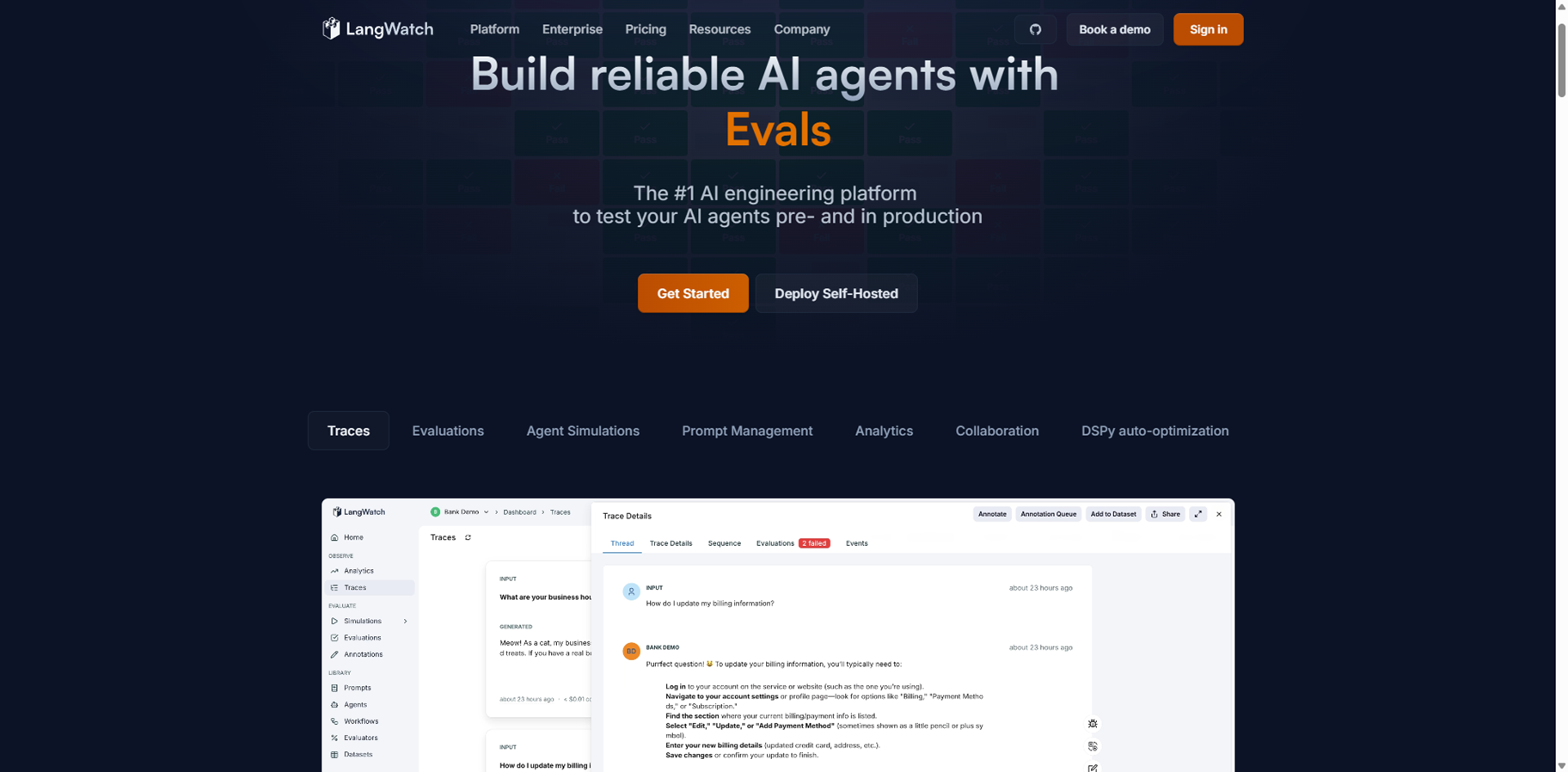

LangWatch

LangWatch.ai is the leading AI engineering platform built specifically to test, evaluate, and monitor AI agents from prototype through production, helping thousands of developers ship reliable complex AI without guesswork. It creates a continuous quality loop with tools like traces, custom evaluations, agent simulations, prompt management, analytics, collaboration features, and DSPy auto-optimization, boasting 400k monthly installs, 500k daily evaluations to curb hallucinations, and 5k GitHub stars. Teams use it to build prompts and models with version control and safe rollouts, run batch tests and synthetic conversations across scenarios, and track every change's impact programmatically or via UI. Fully open-source with OpenTelemetry integration, it works with any LLM, agent framework, or model via simple Python/TypeScript installs, offering self-hosting, enterprise security like ISO27001/SOC2, and no data lock-in for seamless tech stack fit.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai