- Machine Learning Engineers: Deploy and manage models easily, focusing on building rather than infrastructure.

- Data Scientists: Quickly experiment with and integrate different models into their workflows.

- Software Developers: Integrate powerful AI functionalities into their applications with ease.

- Researchers: Access and utilize cutting-edge models for research and development.

- Businesses: Leverage AI capabilities without needing extensive in-house ML expertise.

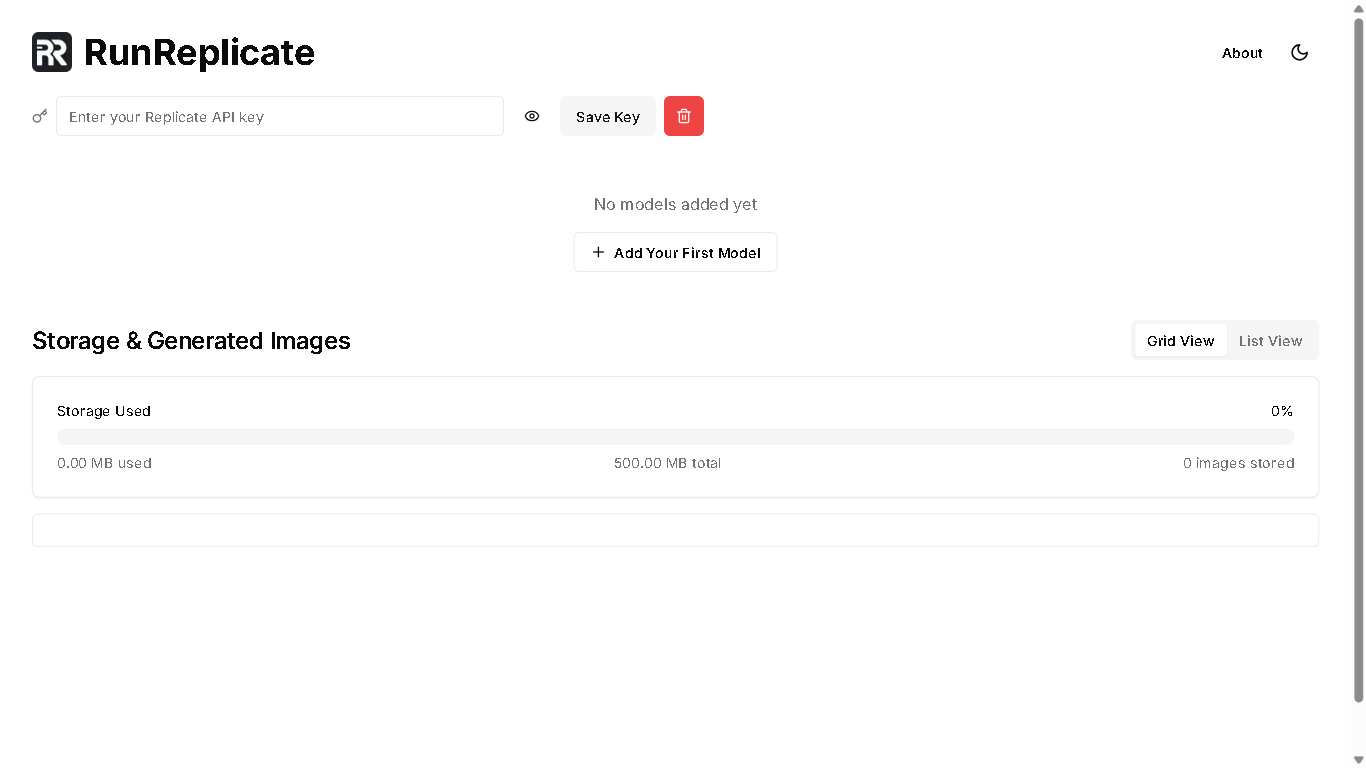

How to use it?

- Sign Up & Access the Platform: Create an account on the Replicate website to access the platform and its resources.

- Browse & Select Models: Explore the model library and choose the pre-trained models that best suit your needs.

- Run Your Model: Use the Replicate API or interface to run your chosen model, providing the necessary input data.

- Manage & Monitor: Track your model's performance and manage its resources through the platform's dashboard.

- Integrate & Deploy: Integrate the model's output into your application or workflow seamlessly.
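The "Run Your Model" and "Manage & Monitor" steps can be sketched against Replicate's HTTP API. This minimal, stdlib-only Python sketch makes several assumptions, all taken from Replicate's public API documentation at the time of writing: a `POST https://api.replicate.com/v1/predictions` endpoint, a Bearer token, a JSON body with `version` and `input`, and the status values `starting`, `processing`, `succeeded`, `failed`, and `canceled`. Check the current API reference before relying on any of them. The helper names `build_prediction_request` and `wait_for_prediction` are hypothetical. The polling helper takes a `fetch` callable so it can be exercised without a network connection.

```python
import json
import time
import urllib.request
from typing import Callable

# Assumed endpoint, based on Replicate's public HTTP API docs; verify before use.
API_BASE = "https://api.replicate.com/v1"
# Assumed terminal prediction states; any other status means "still running".
TERMINAL_STATUSES = {"succeeded", "failed", "canceled"}


def build_prediction_request(token: str, version: str, model_input: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates a prediction (hypothetical helper)."""
    body = json.dumps({"version": version, "input": model_input}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/predictions",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def wait_for_prediction(fetch: Callable[[], dict], interval: float = 1.0, max_polls: int = 60) -> dict:
    """Call `fetch` until the prediction reaches a terminal status (hypothetical helper)."""
    for _ in range(max_polls):
        prediction = fetch()
        if prediction.get("status") in TERMINAL_STATUSES:
            return prediction
        time.sleep(interval)  # back off between status checks
    raise TimeoutError("prediction did not finish within max_polls attempts")
```

In real use, `fetch` would send an authenticated `GET` to the prediction's status URL and decode the JSON body. The creation response is documented to include this URL under `urls.get`. Replicate also publishes an official Python client (`replicate.run(...)`) that wraps these steps. The raw-HTTP version above only makes the request and polling shape visible.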

Key Features

- Extensive Model Library: Access a diverse range of pre-trained models across many fields.

- Easy API & Interface: A simple, intuitive API and web interface for integration and day-to-day use.

- Scalability & Reliability: Run models at scale with Replicate's robust infrastructure.

- Version Control & Collaboration: Manage model versions and collaborate effectively with others.

- Secure & Private: Benefit from a secure and private environment for your models and data.

- Open Source Friendly: Supports and encourages the use of open-source models.
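Version control on Replicate is typically expressed through model references of the form `owner/name` or `owner/name:version`. Pinning the version identifier makes repeated runs target the same model build. Below is a minimal Python sketch of splitting such a reference into its parts. It assumes only that reference shape; `ModelRef` and `parse_model_ref` are hypothetical names, and the platform resolves an unpinned reference according to its own rules, which this sketch does not model.

```python
from typing import NamedTuple, Optional


class ModelRef(NamedTuple):
    """Parts of an 'owner/name[:version]' reference (hypothetical type)."""
    owner: str
    name: str
    version: Optional[str]  # None means no version was pinned


def parse_model_ref(ref: str) -> ModelRef:
    """Split an 'owner/name[:version]' string, rejecting malformed input."""
    path, sep, version = ref.partition(":")
    owner, slash, name = path.partition("/")
    if not owner or not slash or not name or "/" in name:
        raise ValueError(f"expected 'owner/name[:version]', got {ref!r}")
    if sep and not version:
        raise ValueError(f"empty version in {ref!r}")
    return ModelRef(owner, name, version if sep else None)
```

For example, `parse_model_ref("acme/image-model:abc123")` yields owner `acme`, name `image-model`, and version `abc123`. Dropping the `:abc123` suffix leaves `version` as `None`.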

Pros

- Ease of Use: A simple API and interface make it accessible to a wide range of users.

- Extensive Model Selection: Offers a diverse catalog of pre-trained models.

- Scalability and Reliability: Provides robust infrastructure for running models at scale.

- Focus on Developer Experience: Prioritizes a smooth and efficient user experience.

Cons

- Pricing Model: The cost structure may be a barrier for some users.

- Limited Customization: Customization options for some aspects of the underlying models may be restricted.

- Dependence on Replicate's Infrastructure: Users rely on Replicate's platform and its availability.

Pricing

Paid (custom pricing)



Similar AI Tools

H2O.ai

H2O.ai is an advanced AI and machine learning platform that enables organizations to build, deploy, and scale AI models with ease. With a focus on automated machine learning (AutoML), explainable AI, and responsible AI practices, H2O.ai empowers data scientists, analysts, and businesses to extract insights, make predictions, and drive value from data at enterprise scale.

Radal AI

Radal AI is a no-code platform designed to simplify the training and deployment of small language models (SLMs) without requiring engineering or MLOps expertise. Through a visual interface, you can drag and drop your data, interact with an AI copilot, and train models with a single click. Trained models can be exported in quantized form for edge or local deployment, or pushed to Hugging Face for sharing and versioning. Radal enables rapid iteration on custom models, making AI accessible to startups, researchers, and teams building domain-specific intelligence.

Mirai

TryMirai is an on-device AI infrastructure platform that lets developers run high-performance AI models directly inside their apps, with minimal latency, full data privacy, and no inference costs. The platform includes a library of optimized models in several sizes (for example 0.3B, 0.5B, 1B, 3B, and 7B parameters) to suit different business goals and balance efficiency with adaptability. A smart routing engine balances performance, privacy, and cost, and SDKs for Apple platforms (with Android support announced as upcoming) simplify integration. Capabilities such as summarization, classification, general chat, and custom use cases run without cloud offloading. This reduces dependence on network connectivity and protects user data.

SiliconFlow

SiliconFlow is an AI infrastructure platform for developers and enterprises who want to deploy, run, and fine-tune large language models (LLMs) and multimodal models efficiently. It offers a unified stack for inference, model hosting, and acceleration, so you don’t have to manage the infrastructure yourself. The platform supports many open-source and commercial models. It offers high throughput, low latency, autoscaling, and flexible deployment options (serverless, reserved GPUs, private cloud). It also emphasizes cost-effectiveness and data security, and provides tooling such as OpenAI-compatible APIs, fine-tuning, monitoring, and scaling.
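An "OpenAI-compatible" API generally means the service accepts requests in the same shape as OpenAI's Chat Completions endpoint. In practice, switching providers then mostly comes down to changing the base URL, API key, and model identifier. Below is a minimal stdlib-only Python sketch of building such a request for an arbitrary compatible endpoint. It assumes the common `POST {base_url}/chat/completions` path with a Bearer token. The base URL and model name in the usage example are placeholders, not SiliconFlow's actual values; take those from the provider's documentation. `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request (hypothetical helper)."""
    payload = {
        "model": model,  # provider-specific model identifier
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )


# Usage with placeholder values; send it with urllib.request.urlopen(req) against a real endpoint.
req = build_chat_request("https://example.invalid/v1/", "YOUR_API_KEY", "some-model", "Hello")
```

Only the URL, key, and model change between compatible providers, so the same client code can target several of them. Provider-specific extensions to the payload are outside what this sketch covers.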

Vertesia HQ

Vertesia is an enterprise generative AI platform built to help organizations design, deploy, and operate AI applications and agents at scale using a low-code approach. Its unified system offers multi-model support, trust/security controls, and components like Agentic RAG, autonomous agent builders, and document processing tools, all packaged in a way that lets teams move from prototype to production rapidly.

Developer Toolkit

DeveloperToolkit.ai is an AI-assisted development platform designed to help developers build production-grade, scalable, and maintainable software. It builds on AI coding tools such as Claude Code and Cursor to generate production-ready code that is secure, tested, and optimized for real-world deployment. Unlike tools that stop at quick prototypes, DeveloperToolkit.ai focuses on long-term code quality, maintainability, and best practices. Whether you are writing API endpoints, components, or full systems, it aims to accelerate the whole development process while producing cleaner architectures and stable results for teams that ship with confidence.

Refold AI

Refold AI is an AI-native integration platform that automates enterprise software integrations by deploying AI agents to handle complex workflows and legacy systems such as SAP, Oracle Fusion, and Workday Finance. These agents build and maintain integrations autonomously. They navigate custom logic, cope with brittle APIs, manage authentication, and adapt in real time to changing systems without manual intervention. Refold AI says it cuts integration deployment times by up to 70%, which frees product and engineering teams to focus on innovation rather than routine integration work. The platform supports integration lifecycle automation, full audit logging, CI/CD pipeline integration, version control, and error handling. It also provides a white-labeled marketplace for user-centric integration management.

Mobisoft Infotech

MI Team AI is a robust multi-LLM platform designed for enterprises seeking secure, scalable, and cost-effective AI access. It consolidates multiple AI models such as ChatGPT, Claude, Gemini, and various open-source large language models into a single platform, enabling users to switch seamlessly without juggling different tools. The platform supports deployment on private cloud or on-premises infrastructure to ensure complete data privacy and compliance. MI Team AI provides a unified workspace with role-based access controls, single sign-on (SSO), and comprehensive chat logs for transparency and auditability. It offers fixed licensing fees allowing unlimited team access under the company’s brand, making it ideal for organizations needing full control over AI usage.

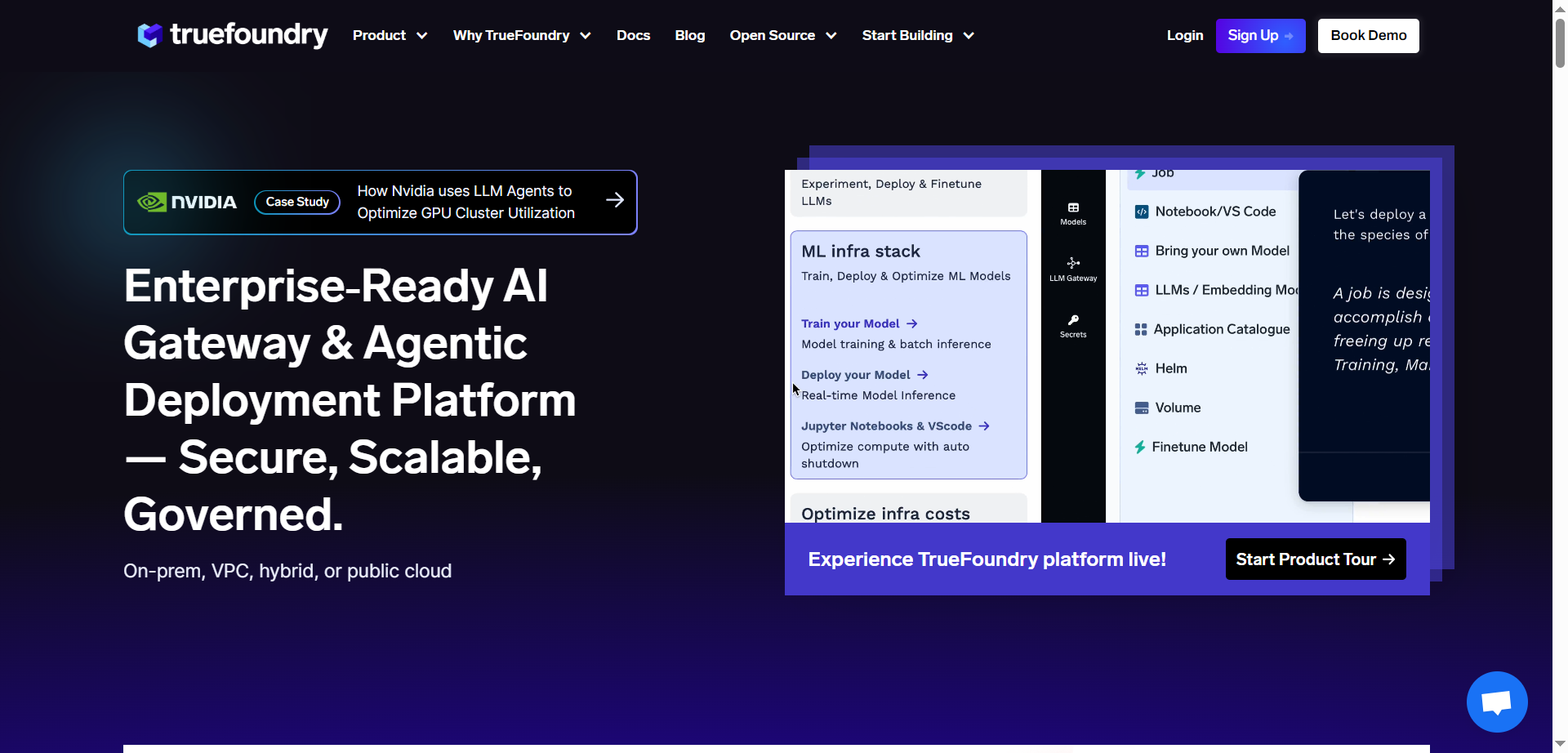

Truefoundry

TrueFoundry is an enterprise-ready AI gateway and agentic AI deployment platform for securely governing, deploying, scaling, and tracing advanced AI workflows and models. It can host any large language model (LLM), embedding model, or custom AI model, optimized for speed and scale. Hosting options include on-premises, virtual private clouds (VPCs), and hybrid or public cloud environments. TrueFoundry provides AI orchestration features such as a tool and API registry, prompt lifecycle management, and role-based access controls to support compliance, security, and governance at scale. It also lets organizations automate multi-step reasoning and manage AI agents and workflows. Infrastructure resources such as GPU utilization can be monitored through observability tools and real-time policy enforcement.

Prompts AI

Prompts.ai is an enterprise-grade AI platform designed to streamline, optimize, and govern generative AI workflows and prompt engineering across organizations. It centralizes access to over 35 large language models (LLMs) and AI tools, allowing teams to automate repetitive workflows, reduce costs, and boost productivity by up to 10 times. The platform emphasizes data security and compliance with standards such as SOC 2 Type II, HIPAA, and GDPR. It supports enterprises in building custom AI workflows, ensuring full visibility, auditability, and governance of AI interactions. Additionally, Prompts.ai fosters collaboration by providing a shared library of expert-built prompts and workflows, enabling businesses to scale AI adoption efficiently and securely.

Gud prompt

GudPrompt is an AI-powered prompt engineering and workflow automation platform designed for teams and creators who want to build, organize, and optimize their AI prompts. It allows users to create prompt libraries, version prompts, collaborate with teammates, and run complex multi-step AI workflows. With its prompt templates, testing tools, and multi-model support, GudPrompt helps users generate consistent, high-quality AI outputs across various applications.

Databricks

Databricks is a leading data intelligence platform that unifies data, analytics, and AI to help enterprises build generative AI applications, democratize insights via natural language, and drive down costs through lakehouse architecture. It enables creation, tuning, and deployment of custom AI models with full data privacy, governance across structured and unstructured data, and intelligent processing for batch and real-time ETL. Featuring Agent Bricks for training AI agents on business data, serverless SQL/BI with 12x better price/performance, automated experiment tracking, context-aware search, and open data sharing via Marketplace, Databricks powers complete AI workflows while maintaining lineage, quality, and a single permission model.

Editorial Note

This page was researched and written by the ATB Editorial Team. Our team researches each AI tool by reviewing its official website, testing features, exploring real use cases, and considering user feedback. Every page is fact-checked and regularly updated to ensure the information stays accurate, neutral, and useful for our readers.

If you have any suggestions or questions, email us at hello@aitoolbook.ai